AMD's no angel, but Intel's public use of benchmark data is feloniously misleading

In recent weeks here at ZDNet, I have been matter, and my colleague George Ou has been anti-matter. Or maybe the other way around. Publicly, on ZDNet's blogs, George has taken both me and the New York Times' David Pogue to task over our position on the digital camera megapixel myth. Behind the scenes, the dispute spilled over into a virtual shouting match over email, and it's quite clear that this is one of those situations where George, Pogue, and I need to walk away and agree to disagree. No sooner had that debate settled to a simmer than another one came to a full boil. This one has to do with benchmarks. It was touched off by my video coverage of a recent AMD press conference, where the opening remarks of Henri Richard, the company's senior vice president and CMO, included the following passage:

I'm going to be straight. I think that we've been too quiet and I think that part of that is that we're trained to be very honest, grounded in reality, [and] truthful with our benchmarks. I'm sick and tired of being pushed around by a competitor that doesn't respect the rules of fair and open competition.

Following the press conference, I asked Richard to elaborate on what he meant and he told me (this is in the video):

I think it's very important for us to make sure that information that's disseminated in the marketplace is fair and honest and I think that people should be able to win on the merit of their products without trying to make reality into fiction. Our industry is lacking standard metrics like miles per gallon.

Richard probably meant the opposite when he said "reality into fiction" since "fiction into reality" would make more sense if he's accusing Intel or anybody else of flooding the market with suspicious or fictitious claims.

Benchmarks are inherently a contentious subject for vendors looking to gain an edge over their competitors. At the very least, the problem is three-dimensional. The first dimension is the sheer number of benchmarking tools at a vendor's disposal. The second is the fact that benchmarks typically don't tell the entire story. For example, a particular microprocessor may win a head-to-head performance test against another. But when those processors show up in actual products (i.e., desktops, notebooks, or servers), the other components that play a role in a system's overall performance (buses, hard drives, memory types, etc.) could easily diminish that advantage or, even worse, reverse it.

The third dimension is a complete dearth of rules, best practices, and/or standards for sharing benchmark data. It is perhaps the dimension most subject to manipulation by vendors trying to curry favor with anybody who matters (press, research analysts, Wall Street, etc.). Some of the organizations behind the benchmarking tools, such as the Standard Performance Evaluation Corporation (SPEC), have stringent rules on the fair use of their benchmarks and on how comparative results are reported. But those rules don't reach beyond their jurisdiction. For example, they don't govern a chart that presents results from one of their benchmarks alongside results from another organization's benchmarking tools.

According to my colleagues at News.com, AMD's Richard is well known for his rhetoric when it comes to accusing Intel of unfair competition. But until this recent press conference, that rhetoric was largely driven by AMD's belief that Intel leveraged a monopoly to foreclose competition. AMD filed an antitrust suit against Intel in June 2005. Just a couple of weeks ago, Intel admitted to the court that it had lapsed in preserving documents that might be relevant to the case. Adding Intel's benchmarking practices to his list of anticompetitive grievances, however, is new for Richard, and something must have triggered it.

According to an AMD spokesperson, that something is what AMD considers a serious manipulation of the third dimension on Intel's part. Not surprisingly, Intel officials disagreed when I spoke with them by phone. So did George Ou, who accused AMD of being the pot calling the kettle black.

Where does the truth lie? As usual, it depends on whom you ask. That said, if you ask me, I think Richard was justified in levying the criticism he did against Intel. That doesn't mean Richard and AMD haven't also erred in judgment. But rather than take my word for it (or Richard's, Intel's, or Ou's), here's the data so you can decide.

In terms of what Intel has been publicly showing, I've drawn seven slides in total from two slide presentations. Each of them appears in an image gallery we've prepared for you. The first presentation, a Server Platforms Group Update, was given to press and analysts on February 21st by Kirk Skaugen, general manager of Intel's Server Platforms Group (a PDF of his slides is downloadable). The second presentation, a Server Update (also downloadable), was given to the press one week later, on February 28th, by Tom Kilroy, general manager of Intel's Digital Enterprise Group. That press conference took place at exactly the same time as the AMD press conference I attended (the one where we caught AMD's Richard on video ripping Intel), just blocks away in San Francisco.

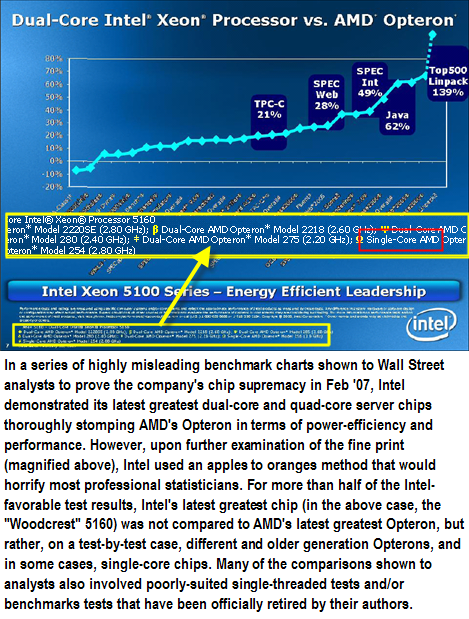

Both presentations contain slides prepared by Intel that would probably horrify a professional statistician. As the former director of PC Week's testing labs (PC Week is now eWeek), I simply can't imagine presenting benchmark data the way Intel presents it in some of these slides. Take a series of charts, one of which is shown here. In them, Intel distills dozens of comparative benchmarks into line and bar graphs under bold text like "Energy Efficient Leadership" and "Breakaway" (as in, Intel is breaking away from AMD). The charts are designed to communicate the supremacy of Intel's multi-core offerings over AMD's.

Yet closer inspection shows that Intel isn't comparing its latest and greatest chips to AMD's latest and greatest chips. That is what it should be doing if it wants to legitimately convince Wall Street, the press, and customers of leadership or breakaway performance. Instead, more than half of the data points that show Intel leading or breaking away show it doing so against older AMD chips. In some cases those are single-core chips; in others, they come from an older generation of Opterons. Some comparisons also rely on retired benchmarks (retired in February 2007) that have been superseded by newer versions (released in August 2006). The newer versions were designed to better measure the performance of today's systems, particularly systems containing technology like Intel's latest and greatest. Specifically, the charts compare Intel to AMD using benchmarks from SPEC.org's six-year-old CPU2000 suite. In August 2006, SPEC.org released the SPEC CPU2006 suite. According to the SPEC.org Web site:

As of summer, 2006, many of the CPU2000 benchmarks are finishing in less than a minute on leading-edge processors/systems. Small changes or fluctuations in system state or measurement conditions can therefore have significant impacts on the percentage of observed run time. SPEC chose to make run times for CPU2006 benchmarks longer to take into account future performance and prevent this from being an issue for the lifetime of the suites....

... As applications grow in complexity and size, CPU2000 becomes less representative of what runs on current systems. For CPU2006, SPEC included some programs with both larger resource requirements and more complex source code...

... CPU2000 has been available for six years and much improvement in hardware and software has occurred during this time. Benchmarks need to evolve to keep pace with improvements....

....Three months after the announcement of CPU2006, SPEC will require all CPU2000 results submitted for publication on SPEC's web site to be accompanied by CPU2006 results. Six months after announcement, SPEC will stop accepting CPU2000 results for publication on its web site.

I've heavily annotated the slides in the image gallery to show that this isn't simply an honest mistake, such as omitting an important footnote that changes the entire meaning of a comparative data point (although I show that happening as well). The slides, particularly the last two, demonstrate a willingness on Intel's part to feloniously spin whatever benchmark data it can find into charts that make the company look as though it has a commanding lead that, in reality, it may not have. Even worse, everyone (including the folks at Intel) knows what I know: the press and analysts sitting in a press conference are highly unlikely to look past the lines and bars on the slides. After all, it's Intel, right? It's a big, responsible, public company, and some authority like the SEC would surely hold it accountable for showing misleading charts to analysts who in turn can influence what happens on Wall Street, right? Wrong.

OK, so the slides are riddled with footnotes. Nothing on them is an outright lie, since Intel does its best, in tiny, tiny print, to disclose the complete truth at the bottom of the slides (even then, I can't make heads or tails of some of the footnotes). Sorry, Mr. Otellini. Not good enough. The slides are deceptive and manipulative. At some point, whoever was preparing them should have thought, "This many footnotes? Perhaps we should redo them." How many disclaimers does it take before you realize you're disclaiming an entire document? Look at the last two slides in the image gallery. The upper right-hand corner of each says that an Intel part is being compared to an Opteron 2XXX part. Yet every single data point listed below those headings carries a footnote that says otherwise. It's nothing more than an absurd perversion of the truth.

So, what does Intel have to say for itself? As shown in one of the slides in the image gallery, the company maintains that, for each benchmark, it simply picked the best publicly available result for an Opteron processor. In other words, if there were no published results for one of the newer members of the Opteron family, Intel simply hunted down the next best thing: a result from an older family. I guess the thinking is that, because no published benchmark of the newer gear exists, whatever AMD part was most recently benchmarked must also be the best AMD has to offer. It's a crock. Just because a published benchmark doesn't exist doesn't mean you get to compare an apple to an eggplant (I was going to say orange, but let's be honest... this is really a fruit-and-vegetable comparison).

Finally, in his blog, my colleague George Ou chastised AMD's Richard for being a hypocrite. In his post, Ou concluded that AMD was also cherry-picking benchmark results, producing similarly manipulative presentations. What Ou found was one benchmark that AMD used for which newer data was available. The newer result was just one month younger than the one AMD used. Ou showed that, had AMD used it, AMD would have looked worse than it did in the presentation it chose to give.

AMD claims that it was still clearing the new data through its legal channels and couldn't yet include it in a public presentation. I'm not buying that excuse either. George is right. So, Messrs. Ruiz and Richard: before you take to the stage to slime your enemies, you had better make sure your own house is in order, and perfectly so. If newer benchmark data is available, then you have no choice but to light a fire under your General Counsel's ass. Make sure that data gets the green light in time to be included in any slide that's shown to the public. It's that important. That said, AMD's crime was a misdemeanor compared to the benchmarking felony committed by Intel. Something has to change.