Cool Codes

Monday of last week was one of those “convergence” days. I’m sure you know the feeling. Besides being my 29th wedding anniversary, it was the first day of the Hot Chips conference at Stanford University. Before my wife and I drove out to Half Moon Bay to celebrate, I was on stage at Mem Aud to give the opening keynote of the conference with a talk entitled Cool Codes for Hot Chips and to announce a new multi-core applications initiative. I’ll come back to the latter item in a moment.

The theme of my keynote was very much related to the question I raised in my last post – have we reached the end of applications or are we at the start of a new wave of innovation? Even though many of your comments had assumed I was in the opposite camp, I firmly believe that we are sitting on a plateau just waiting for the next order-of-magnitude leap in computer (and communication) performance and capability to unleash a new age of application innovation.

To get off this application plateau we have to have access to some radically better hardware. Unfortunately, the hardware won’t happen unless the architects (and their bosses) believe there will be software to take advantage of the new hardware. To resolve this chicken-and-egg question, we need to start building and testing working prototypes of these future applications. That’s what we’ve been doing at Intel for the last three years, and I took the opportunity at Hot Chips to call for a community-wide effort along the same lines.

A collection of future applications, ones that take today’s systems beyond their limits, would serve two purposes. First, it would help stimulate much more thinking about what can be and should be done. More programmers would pick up the challenge and start thinking more expansively about the future. Second, it would give architects and engineers a set of working prototype applications against which to evaluate the efficiency and programmability of their new designs.

Let me share one of the demos that I used at Hot Chips as an example of what’s possible if one has the necessary processing power.

Here’s the basic recipe (click on an image to see the video in action):

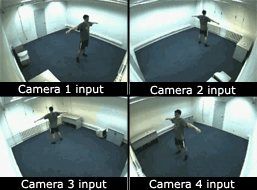

1. Take input from four cameras located in the corners of a room (Fig. 1a)

2. Analyze the video streams to extract the location and motion over time of the individual body parts (torso, arms, legs and head) based on a programmed skeletal model

3. Animate a synthetic human figure with skin using ray-tracing and global illumination within a virtual scene based on the actual kinematics determined in step 2 (Fig. 1b)
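
As a rough illustration only, the data flow of the recipe above might be sketched as follows. This is not the Intel code: the names `Pose`, `estimate_pose`, `motion` and `animate` are hypothetical, each camera is assumed to have already produced its own 3D estimate per body part, and simple averaging stands in for the actual model-based analysis, which the post does not describe. Rendering is left as a placeholder.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]

# Body parts named in the recipe; a real skeletal model would be far richer.
BODY_PARTS = ("torso", "arms", "legs", "head")


@dataclass
class Pose:
    """Estimated 3D location of each body part at one instant."""
    time: float
    parts: Dict[str, Point3]


def estimate_pose(time: float, camera_estimates: List[Dict[str, Point3]]) -> Pose:
    """Step 2, toy version: fuse the per-camera estimates by averaging them."""
    count = len(camera_estimates)
    parts = {}
    for name in BODY_PARTS:
        points = [estimate[name] for estimate in camera_estimates]
        parts[name] = tuple(sum(p[axis] for p in points) / count for axis in range(3))
    return Pose(time, parts)


def motion(previous: Pose, current: Pose) -> Dict[str, Point3]:
    """Step 2, continued: velocity of each body part between two poses."""
    dt = current.time - previous.time
    return {
        name: tuple((c - p) / dt for p, c in zip(previous.parts[name], current.parts[name]))
        for name in BODY_PARTS
    }


def animate(pose: Pose) -> Dict[str, Point3]:
    """Step 3, placeholder: hand the pose to a renderer (ray tracing omitted)."""
    return dict(pose.parts)
```

Calling `estimate_pose` once per frame with the four cameras' estimates, then passing consecutive poses to `motion`, gives the "location and motion over time" that step 3 consumes. The point of the sketch is the shape of the pipeline, in which every stage works per body part and per frame and so can run in parallel, rather than any particular tracking algorithm.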

While live-action movie animations usually sprinkle LEDs over actors wearing dark clothes and then just track the bright lights, the Intel system works without any special markers on the person. You literally walk into the camera-equipped room and it just works.

The applications for this technology are wide open beyond the obvious ones in game play: you might compare your golf swing to that of Tiger Woods or see how you look walking or even dancing in a new outfit without ever putting it on. Given the model has your physical information, you’d know if you need the next size up or if the color isn’t quite right given your skin tone.

This system is appealing to us not because Intel is planning to ship one of these applications, but because it points to a broad new class of algorithms that we refer to as “recognition, mining and synthesis” or RMS.

The recognition stage answers the question “what is it?” – modeling of the body in our prototype system. Mining answers the question “where is it?” – analyzing the video streams to find similar instances of the model. And synthesis answers the question “how is it?” – creating a new instance of the model in some virtual world.

This flow between recognition, mining and synthesis applies beyond the entertainment and visual domains. It works equally well in domains as diverse as medicine, finance, and astrophysics.

Such emerging “killer apps” of the future have a few important attributes in common – they are highly parallel in nature, they are built from a common set of algorithms, and they have, by today’s standards, extreme computational and memory bandwidth requirements, often calling for teraFLOPS of computing power and terabytes per second of memory bandwidth. Unfortunately, the R&D community lacks a suite of these emerging, highly scalable workloads to guide the quantitative design of our future computing systems.

The Intel RMS suite I mentioned earlier is based on a mix of internally-developed codes, such as the body tracking and animation prototype, and partner-developed codes from some of the brightest minds in industry and academia. As researchers outside of Intel learned more about the suite, they started to ask if we could make it publicly available. Since it contains a mix of Intel and non-Intel code, we couldn’t just place it in open source. A conversation last spring about the suite with my good friend Professor Kai Li of Princeton gave rise to the idea of a new publicly available suite, and my Hot Chips keynote gave me the opportunity to engage the technical community in its development.

At the end of the keynote I announced the creation of a publicly available suite of killer codes for future multi-core architecture research. I also announced that Intel would contribute some of our internally-developed codes in body-tracking and real-time ray tracing to launch the effort. I was also pleased to announce that Professor Ron Fedkiw at Stanford will contribute his physics codes, the University of Pittsburgh Medical Center will add their medical image analysis codes, Professor David Patterson at UC Berkeley will provide codes of the “Seven+ Dwarfs of Parallel Computing”, and Professors Li and JP Singh at Princeton will make additional network and I/O intensive contributions including content-based multimedia search, network traffic processing, and databases.

Professors Li and Singh have graciously offered to manage contributions to the suite and host the repository. A workshop is being arranged for early next year to establish some guidelines on contributions. I’ll provide more information here as the date gets closer.

And that brings me back to the question of when we will have the computational capability to break free from today’s rather quaint applications. Sooner than most people think, if we come together to create the future.