Google turns to machine learning to build a better datacentre

Google is employing artificial intelligence techniques to squeeze efficiencies from its global fleet of datacentres.

The US technology giant is using neural networks to learn how to waste less electricity running the facilities that support its online services.

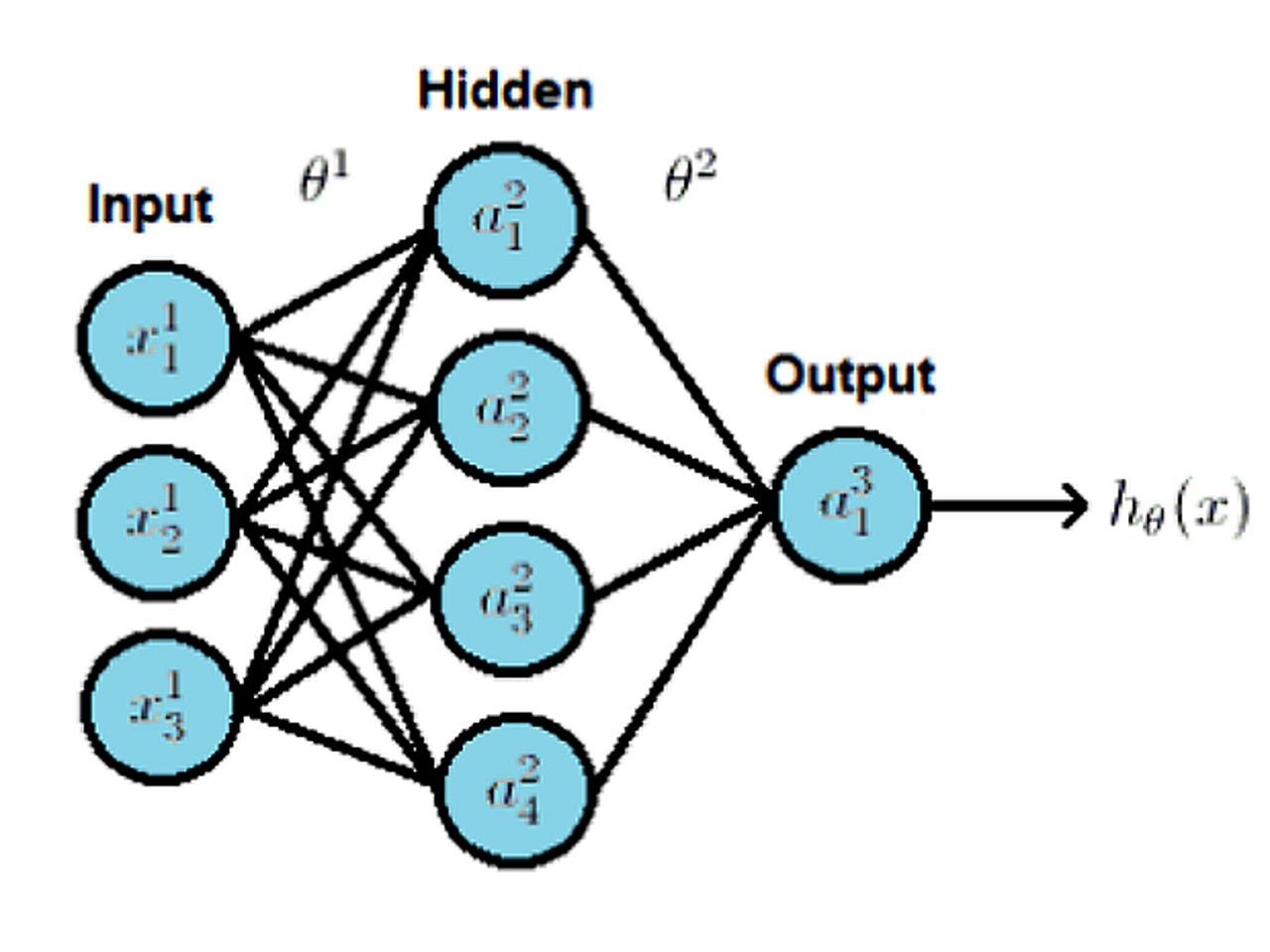

Neural networks, which are more commonly used to recommend products to shoppers based on their past purchases, are a class of machine learning algorithms designed to mimic aspects of the brain, specifically the interactions between neurons.

"The application of machine learning algorithms to existing monitoring data provides an opportunity to significantly improve DC operating efficiency," Google's Jim Gao, a mechanical engineer and data analyst, wrote in a paper online.

"A typical large-scale DC generates millions of data points across thousands of sensors every day, yet this data is rarely used for applications other than monitoring purposes. Advances in processing power and monitoring capabilities create a large opportunity for machine learning to guide best practice and improve DC efficiency."

The idea to use neural networks reportedly came from Google datacentre chief Joe Kava, who took an online class in machine learning offered by Stanford University.

The motivation was the diminishing returns Google was getting from its efforts to improve datacentre efficiency.

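A datacentre's Power Usage Effectiveness (PUE) is the ratio of the total electricity a facility uses to the electricity consumed by its IT equipment. It therefore reflects how much of the facility's electricity ends up powering the servers, rather than the supporting infrastructure that handles cooling and power distribution. A PUE of 1.0 would mean every unit of electricity goes to the IT equipment.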
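
As a rough illustration, the ratio can be computed directly from two metered totals. This is a minimal sketch assuming both figures are in kilowatt-hours over the same period; the function names are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_per_it_kwh(pue_value: float) -> float:
    """Energy spent on cooling, power distribution, etc. per kWh delivered to IT."""
    return pue_value - 1.0


# A facility that draws 1,120 kWh to deliver 1,000 kWh to its servers:
print(round(pue(1120, 1000), 2))            # 1.12
print(round(overhead_per_it_kwh(1.12), 2))  # 0.12
```

In other words, a PUE of 1.12 means roughly 0.12 kWh of overhead for every kWh that reaches the servers.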

In recent years major tech companies like Facebook and Google have been edging closer to the ideal of achieving a PUE of 1.0, using methods such as hot air containment, water-side economisation, and extensive monitoring.

But as they have moved closer to a PUE of 1.0, the rate at which their facilities have become more efficient has slowed. Google's graph of its historical PUE performance shows its annualised fleetwide PUE improving from 1.21 in 2008 to 1.12 in 2013.
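
A back-of-the-envelope calculation shows why further gains are harder to find. For a fixed IT load, total facility energy scales with PUE, so the share of facility energy saved by moving from one PUE to another is 1 - new/old. The sketch below applies this to the fleetwide figures quoted above; the helper name is hypothetical and the calculation assumes the IT load stays constant.

```python
def share_of_facility_energy_saved(old_pue: float, new_pue: float) -> float:
    """Fraction of total facility energy saved for the same IT load."""
    return 1.0 - new_pue / old_pue


# Google's fleetwide improvement from 2008 to 2013:
print(f"{share_of_facility_energy_saved(1.21, 1.12):.1%}")  # 7.4%
# The most that could ever be saved by going from 1.12 to the ideal of 1.0:
print(f"{share_of_facility_energy_saved(1.12, 1.00):.1%}")  # 10.7%
```

Under this simplification, all of the remaining headroom at a PUE of 1.12 is worth only about a tenth of the facility's energy, and each additional 0.01 of PUE is worth less than one percent.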

Google says its work with neural networks is designed to "demonstrate a data driven approach for optimising DC performance in the sub-1.10 PUE era".

Why neural networks?

The volume of data generated by the array of mechanical and electrical equipment inside a large datacentre, and the complex interactions between that equipment, make it difficult to use traditional engineering formulas to predict datacentre efficiency.

Google gives an example of how minor changes within a facility can cascade throughout its connected systems.

"A simple change to the cold aisle temperature setpoint will produce load variations in the cooling infrastructure (chillers, cooling towers, heat exchangers, pumps, etc.), which in turn cause nonlinear changes in equipment efficiency.

"Using standard formulas for predictive modelling often produces large errors because they fail to capture such complex interdependencies.

"Furthermore, the sheer number of possible equipment combinations and their setpoint values makes it difficult to determine where the optimal efficiency lies."

According to Google, neural networks are well suited to building models that predict outcomes from this complex mesh of datacentre systems, because they can find patterns in the data, including interactions between systems, without those myriad interactions having to be defined up front.
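
To make the idea concrete, here is a minimal sketch of neural network regression in plain Python: one hidden layer of tanh units, trained by stochastic gradient descent to predict PUE from two normalised inputs. Everything here is an assumption for illustration, including the synthetic data (with a deliberately nonlinear, interacting effect on PUE), the helper names, the layer size and the learning rate. It is not the architecture described in Google's paper.

```python
import math
import random
import statistics


def make_synthetic_data(n, seed):
    """HYPOTHETICAL sensor logs: two normalised inputs in [-1, 1] with a
    nonlinear, interacting effect on PUE plus a little measurement noise."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        pue = 1.12 + 0.02 * x2 ** 2 - 0.01 * x1 * x2 + rng.gauss(0, 0.001)
        rows.append(([x1, x2], pue))
    return rows


def forward(model, x):
    """One tanh hidden layer, linear output in standardised units."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(model["w1"], model["b1"])]
    out = sum(w * h for w, h in zip(model["w2"], hidden)) + model["b2"]
    return out, hidden


def predict(model, x):
    """Map the network output back to PUE units."""
    out, _ = forward(model, x)
    return model["y_mean"] + model["y_std"] * out


def train_mlp(data, n_hidden=8, lr=0.05, epochs=150, seed=1):
    """Plain stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not reorder the caller's list
    ys = [y for _, y in data]
    n_in = len(data[0][0])
    model = {
        "w1": [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.gauss(0, 0.5) for _ in range(n_hidden)],
        "b2": 0.0,
        "y_mean": statistics.mean(ys),  # targets are standardised for training
        "y_std": statistics.pstdev(ys),
    }
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            target = (y - model["y_mean"]) / model["y_std"]
            out, hidden = forward(model, x)
            err = out - target  # derivative of 0.5 * err**2 with respect to out
            for j in range(n_hidden):
                # Backpropagate through tanh before updating the output weight.
                grad_pre = err * model["w2"][j] * (1 - hidden[j] ** 2)
                model["w2"][j] -= lr * err * hidden[j]
                for i in range(n_in):
                    model["w1"][j][i] -= lr * grad_pre * x[i]
                model["b1"][j] -= lr * grad_pre
            model["b2"] -= lr * err
    return model


def mean_abs_error(model, data):
    return statistics.mean(abs(predict(model, x) - y) for x, y in data)


train, test = make_synthetic_data(300, seed=0), make_synthetic_data(100, seed=42)
model = train_mlp(train)
baseline = statistics.mean(y for _, y in train)  # always predict the average PUE
baseline_mae = statistics.mean(abs(baseline - y) for _, y in test)
print(f"network MAE:  {mean_abs_error(model, test):.4f}")
print(f"baseline MAE: {baseline_mae:.4f}")
```

Nothing in the code tells the network that the second input matters quadratically or that the two inputs interact; it has to discover that from the data, which is the property Google highlights.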

These models can predict datacentre PUE accurately, and can be used to flag problems automatically when a centre deviates too far from the model's forecast, to identify energy-saving opportunities, and to test new configurations that could improve the centre's efficiency.

"This type of simulation allows operators to virtualise the DC for the purpose of identifying optimal plant configurations while reducing the uncertainty surrounding plant changes."
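
The monitoring use mentioned above, flagging a centre that deviates too far from the model's forecast, amounts to comparing measured PUE with predicted PUE. This sketch assumes the predictions already exist; the `flag_deviations` name, the `Reading` type and the 0.01 tolerance are hypothetical choices, not values from Google's paper.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    timestamp: str
    actual_pue: float
    predicted_pue: float


def flag_deviations(readings, tolerance=0.01):
    """Return readings whose measured PUE differs from the model's
    forecast by more than `tolerance` (in PUE units)."""
    return [r for r in readings
            if abs(r.actual_pue - r.predicted_pue) > tolerance]


readings = [
    Reading("00:00", 1.121, 1.118),
    Reading("00:05", 1.140, 1.119),  # invented anomaly for illustration
    Reading("00:10", 1.117, 1.120),
]
for r in flag_deviations(readings):
    print(f"{r.timestamp}: actual {r.actual_pue:.3f} vs predicted {r.predicted_pue:.3f}")
```

A fixed tolerance is the simplest choice; tying the threshold to the model's measured error on held-out data would be a natural refinement.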

Google gave an example of how it is using these predictive models to identify ways it can tweak infrastructure and drive PUE improvements.

"For example, an internal analysis of PUE versus cold aisle temperature (CAT) conducted at a Google DC suggested a theoretical 0.005 reduction in PUE by increasing the cooling tower LWT and chilled water injection pump setpoints by 3F. This simulated PUE reduction was subsequently verified with experimental test results."
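
Once a model is trained, "virtualising" the DC amounts to querying it with hypothetical setpoints instead of changing the plant. The sketch below sweeps a grid of setpoint increases through a model and picks the combination with the lowest predicted PUE. The `predicted_pue` function is a hypothetical stand-in with invented coefficients (a trained network would take its place), and the offset range is an assumption. LWT here is read as the cooling tower's leaving water temperature.

```python
import itertools


def predicted_pue(lwt_offset_f: float, pump_offset_f: float) -> float:
    """HYPOTHETICAL stand-in for a trained model. Inputs are increases (degrees F)
    to the cooling tower LWT setpoint and the chilled water injection pump
    setpoint. The coefficients are invented for illustration."""
    return (1.120
            - 0.0010 * lwt_offset_f + 0.0001 * lwt_offset_f ** 2
            - 0.0008 * pump_offset_f + 0.0001 * pump_offset_f ** 2)


def best_setpoints(model, offsets):
    """Evaluate every combination of offsets in simulation and return the
    one with the lowest predicted PUE, without touching the real plant."""
    return min(itertools.product(offsets, offsets), key=lambda p: model(*p))


offsets = range(0, 7)  # candidate increases of 0 to 6 degrees F (assumed range)
baseline = predicted_pue(0, 0)
best = best_setpoints(predicted_pue, offsets)
print(f"best offsets (LWT, pump): {best}")  # (5, 4)
print(f"predicted PUE change: {predicted_pue(*best) - baseline:+.4f}")
```

An exhaustive grid is fine for two setpoints, but the number of combinations grows multiplicatively with each setpoint added, which is the difficulty Google describes with equipment combinations. As in Google's example, any simulated improvement would still need to be verified with real tests.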

Google reports that the neural network detailed in its paper predicted the PUE at one of its major datacentres with a high degree of accuracy over the course of one month, achieving a mean absolute error of just 0.004 and a standard deviation of 0.005 on the test dataset.
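
Both accuracy figures can be computed from paired actual and predicted PUE values. This sketch assumes the standard deviation refers to the spread of the absolute errors (the paper may define it differently), uses the population form, and runs on invented sample values.

```python
import statistics


def error_summary(actual, predicted):
    """Mean and population standard deviation of absolute prediction errors."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two equal-length, non-empty sequences")
    abs_errors = [abs(a - p) for a, p in zip(actual, predicted)]
    return statistics.mean(abs_errors), statistics.pstdev(abs_errors)


# Invented example values, in PUE units:
actual = [1.12, 1.13, 1.11, 1.14]
predicted = [1.118, 1.126, 1.112, 1.131]
mae, sd = error_summary(actual, predicted)
print(f"MAE = {mae:.4f}, SD = {sd:.4f}")
```

Swapping `statistics.pstdev` for `statistics.stdev` gives the sample (n - 1) version instead.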

"Actual testing on Google DCs indicate that machine learning is an effective method of using existing sensor data to model DC energy efficiency, and can yield significant cost savings," the paper concludes.

"Model applications include DC simulation to evaluate new plant configurations, assessing energy efficiency performance, and identifying optimisation opportunities."