Google's DeepMind and the NHS: A glimpse of what AI means for the future of healthcare

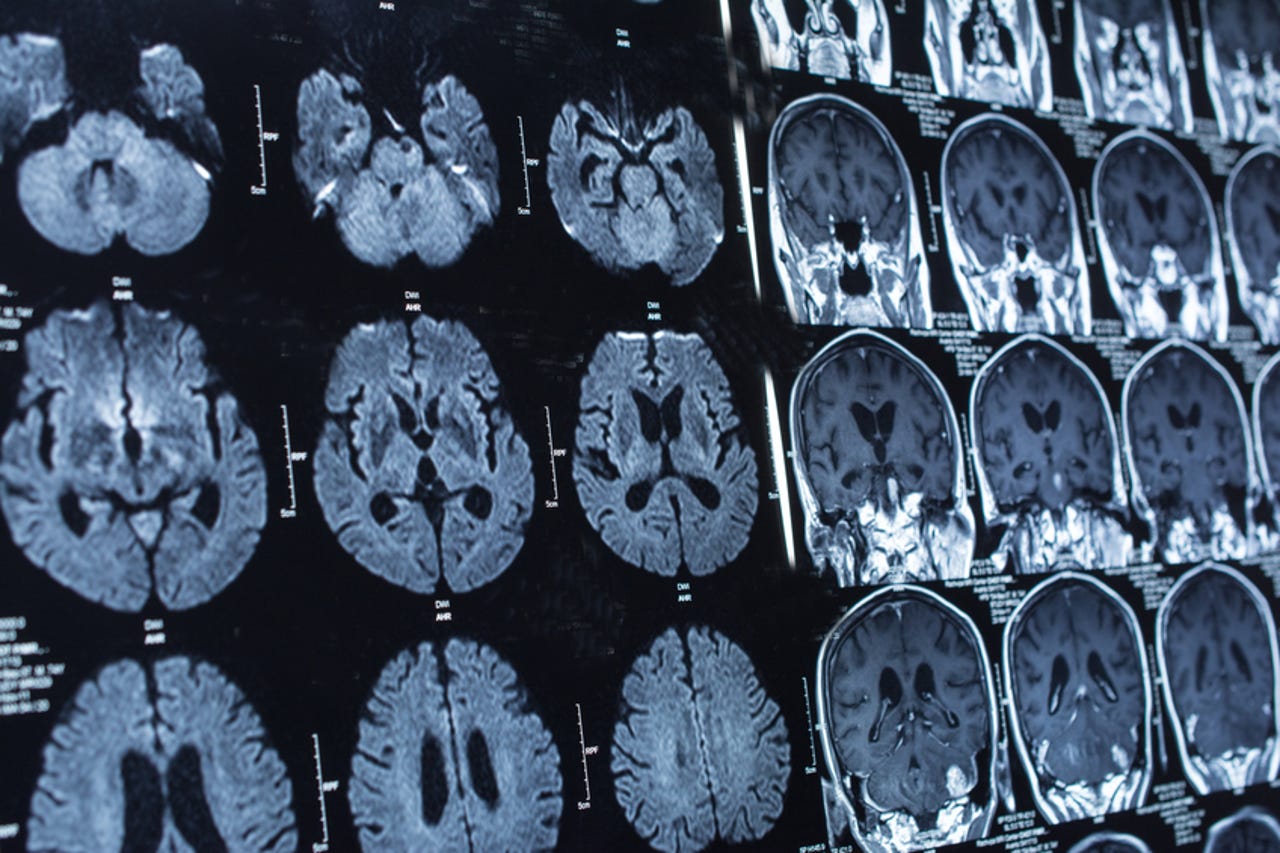

Head and neck scans are one of the areas where Google's DeepMind will be fine-tuning its health skills.

Healthcare has always been seen as rich pickings for artificial intelligence: when IBM first decided to kit out Watson for use in the enterprise, its earliest commercial test was in cancer care.

There are a number of reasons why health and artificial intelligence might seem like a good fit.

One is simply that healthcare organisations around the world, and in the UK in particular, need to save money: any task that can be taken off a clinician's workload and automated by AI potentially represents a cost saving.

What's more, healthcare has loads of data -- test results, scans, consultation notes, details of appointment follow-ups -- most of which is unstructured. For AI companies, that means lots of material that can be used to train up AI systems, and for healthcare providers, it means a lot of data that needs organising and turning into usable information.

For an NHS under pressure to deliver better healthcare at lower cost, the lure of AI will prove hard to resist.

Take the agreements between Google subsidiary DeepMind and the likes of Moorfields Eye Hospital and University College London Hospitals (UCLH) Trust: both pave the way for a future where the routine work of reading scans is done by an algorithm rather than a healthcare professional, leaving clinicians more free time to attend to patients.

The deals lay the foundation for greater use of AI in the NHS by providing data that DeepMind can use to train up its algorithms for healthcare work. In the case of Moorfields, a million eye scans along with associated information about the conditions they represent will be fed into the DeepMind software, teaching it how to recognise eye illnesses from such scans alone in future.

Under the UCLH deal, 700 scans of head and neck cancers will be given to DeepMind to see if its AI can be used in 'segmentation', the lengthy process whereby the areas to be treated or avoided during radiotherapy are delineated using patient scans. Currently, the process takes four hours, a figure that DeepMind and the trust claim could eventually be cut to one hour with the use of AI.

It's not quite clear who will benefit most from these deals: DeepMind or the NHS. Moorfields' scans will allow the Google subsidiary to improve the commercial viability of its systems by improving the accuracy with which they can detect particular eye diseases, potentially making a commercial version of the software a must-buy for the hospital. However, according to a freedom of information (FOI) request filed by ZDNet, no deal has been agreed between the two organisations to roll out the software once it's trained, and DeepMind is only paying Moorfields for the staff time involved in processing the data before handing it on to the AI company.

It's a similar story with UCLH: "The collaboration between UCLH and DeepMind is focused on research with the goal of publishing the results. There are currently no plans for future rollouts... DeepMind is not providing financial compensation to UCLH for access to data. DeepMind will support the costs of UCLH staff time spent on the de-identification and secure transfer of data," UCLH said in response to an FOI filed by ZDNet.

Both trusts have already made clear that they, rather than DeepMind, remain the data controller and that ownership of the scans remains with them. DeepMind for its part will appoint a data guardian to control who has access to the scans, and will destroy the data once the agreement ends.

The need to champion good data hygiene likely comes from an earlier deal DeepMind made with the Royal Free NHS Trust, which came in for a good deal of criticism over its handling of patient data.

After a New Scientist investigation revealed that the details of 1.6 million people were being made available to DeepMind, including data over and above that which related to acute kidney injury, the Information Commissioner's Office began an investigation into the pair's arrangement. The arrangement was also recently criticised in an academic paper, Google DeepMind and healthcare in an age of algorithms, which said "the collaboration has suffered from a lack of clarity and openness", adding: "if DeepMind and Royal Free had endeavored to inform past and present patients of plans for their data, initially and as they evolved, either through email or by letter, much of the subsequent fallout would have been mitigated".

The Royal Free entered its agreement with DeepMind last year, when it announced it would be using an app called Streams to identify people who could be at risk of acute kidney injury. By keeping tabs on patients' blood test results and other data, the DeepMind system can alert clinicians, through the Streams app on a dedicated handheld device, when a patient's condition is deteriorating, helping medical staff take preventative action.

The system was first used with live patient data in January of this year, the Royal Free Trust told ZDNet in response to an FOI. Up to 40 clinicians will be using Streams in the first phase of the rollout, and "the implementation will be phased across all Trust sites starting with the Royal Free Hospital".

A similar agreement with the Imperial Trust looks to be more of a slow burner than the Royal Free's: when the trust announced the Google deal, it said it had signed up for an API that would allow data to be moved between electronic patient record systems and clinical apps, be they DeepMind or other companies'.

The trust did note that the deal would cover the eventual rollout of Streams. In response to an FOI request by ZDNet, Imperial said it will begin piloting the Streams app in either April or May, and that the trial is currently working its way through the trust's governance processes for technical and clinical approval.

As a result, Imperial said it couldn't provide details of where Streams will be piloted and by how many staff, but added it will be used "in a limited environment and in parallel with existing response processes and procedures".

Imperial also appears not to be following the Royal Free's lead on functionality just yet, saying the initial deployment won't use the alerting facility, so no targets have been set for how quickly staff should respond to any alerts sent.

Interestingly, given DeepMind is best known as an AI company, there is no whiff of AI used in Streams, as Imperial's website makes clear, saying: "this partnership does not use artificial intelligence (AI) technology and the agreement between the Trust and DeepMind does not allow the use of artificial intelligence".

Streams is, according to Gartner analyst Anurag Gupta, more akin to standard issue analytics software than AI.

"Streams has nothing to do with AI at this point in time. It basically collects information from a few different systems, it then uses NICE's proprietary algorithm on top of that... and it presents information from multiple systems in an easy to understand format. It's common sense. It's more like business intelligence."
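To illustrate the distinction Gupta draws, a fixed rule that joins data from two separate systems and flags a patient when a blood test value rises past a set multiple of its baseline can be written in a few lines, with no training or learning involved. This is a minimal sketch, not Streams' actual logic: the patient records, the two data sources and the ratio thresholds (1.5, 2 and 3 times baseline creatinine) are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    patient_id: str
    baseline_creatinine: float  # µmol/L, from a hypothetical historical-records system
    latest_creatinine: float    # µmol/L, from a hypothetical lab-results system


# Illustrative thresholds (assumed, not Streams' rules): ratio cutoff -> alert level,
# checked from most to least severe.
THRESHOLDS = [(3.0, 3), (2.0, 2), (1.5, 1)]


def alert_level(patient: Patient) -> Optional[int]:
    """Return an alert level (1-3) if the fixed rule fires, otherwise None."""
    ratio = patient.latest_creatinine / patient.baseline_creatinine
    for cutoff, level in THRESHOLDS:
        if ratio >= cutoff:
            return level
    return None


def merge_records(baselines: dict, latest: dict) -> list:
    """Join records from two separate sources by patient ID, skipping unmatched IDs."""
    return [
        Patient(pid, baselines[pid], latest[pid])
        for pid in latest
        if pid in baselines
    ]


if __name__ == "__main__":
    baselines = {"A": 80.0, "B": 90.0}  # hypothetical values
    latest = {"A": 170.0, "B": 95.0}    # hypothetical values
    for p in merge_records(baselines, latest):
        level = alert_level(p)
        if level is not None:
            print(f"Alert: patient {p.patient_id}, level {level}")
```

Every decision here comes from a hand-written threshold, which is the sense in which such a system resembles business intelligence rather than AI: a machine-learning approach would instead learn its decision boundary from historical data.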

While DeepMind's work with the UK's health service seems to have attracted a great deal of headlines, many of them negative, there's no doubt that we'll be seeing more artificial intelligence in healthcare in general and in the NHS in particular. Anecdotally, the non-AI Streams app has already been saving hours of nurses' time, and the benefits of such systems could be even greater once AI has been properly brought to bear. Used well, with proper data governance, patient buy-in, and competition among AI providers, AI can help the NHS deliver better patient care by freeing up clinicians from some of the more mundane tasks.

"In the short term, our skills and the AI's skills are complementary: things that AI systems can do very well, crunching a lot of information, making sense of a lot of information in a narrow domain, doing things in a repetitive fashion, that work can be done by AI, and [humans' skills] can sit on top of that AI, because we are much better in building the context to that information," Gupta said.

Diagnosis, he added, is "part science and part art". Artificial intelligence can provide the former, but only human intelligence can do both.