Heat doesn't kill hard drives. Here's what does

At last month's Usenix FAST 16 conference, the Best Paper award went to "Environmental Conditions and Disk Reliability in Free-cooled Datacenters." In it, researchers Ioannis Manousakis and Thu D. Nguyen of Rutgers, Sriram Sankar of GoDaddy, and Gregg McKnight and Ricardo Bianchini of Microsoft studied how the higher and more variable temperatures and humidity of free-cooling affect hardware components. They reached three key conclusions:

- Relative humidity, not higher or more variable temperatures, has a dominant impact on disk failures.

- High relative humidity causes disk failures largely due to controller/adapter malfunction.

- Despite the higher failure rates, software to mask failures and enable free-cooling is a huge money-saver.

Background

Datacenters are energy hogs. A web-scale datacenter can use more than 30 megawatts, and collectively datacenters are estimated to use 2 percent of US electricity production.

The chillers for water cooling, and the backup power required to keep them running in a blackout, are costly too. As the use of cloud services has grown, the cost of hyperscale datacenters has led to more experimentation with techniques such as free-cooling (using outside air rather than chillers) and higher operating temperatures.

But to fully optimize these techniques, operators also need to understand their impact on the equipment. If lower energy costs are offset by higher hardware costs and downtime, it isn't a win.
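To make that trade-off concrete, here is a minimal back-of-the-envelope sketch. The function name, its parameters, and every number in the example call are hypothetical placeholders, not figures from the paper; the researchers' own cost model is more detailed.

```python
def net_annual_savings(energy_savings, extra_failures, cost_per_failure, downtime_cost=0.0):
    """Energy savings minus the added hardware and downtime costs (same currency units)."""
    added_hardware_cost = extra_failures * cost_per_failure
    return energy_savings - added_hardware_cost - downtime_cost


# Made-up inputs for illustration only; none of these values come from the paper.
example = net_annual_savings(
    energy_savings=1_000_000.0,  # hypothetical yearly energy savings from free-cooling
    extra_failures=2_000,        # hypothetical additional disk failures per year
    cost_per_failure=150.0,      # hypothetical replacement cost per failed disk
    downtime_cost=50_000.0,      # hypothetical yearly cost of added downtime
)
print(f"Net annual savings: {example:,.0f}")
```

A positive result means free-cooling still comes out ahead after paying for the extra failures; a negative one means the hardware and downtime costs have eaten the energy savings.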

The study

The researchers looked at nine Microsoft datacenters around the world for periods ranging from 1.5 to 4 years, covering over 1 million drives. They gathered environmental data, including temperature and relative humidity, and the variation of each.

Being good scientists, they took the data and built a model to analyze the results. They quantified the trade-offs between energy, environment, reliability, and cost. Finally, they offered some suggestions for datacenter design.

Key findings:

- Disks account for an average of 89 percent of component failures. DIMMs are second at 10 percent. [Disks are the most common component in datacenters.]

- Relative humidity is the major reliability factor - more so than temperature - even when the datacenter is operating within industry standards.

- Disk controller/connectivity failures are greatest during high relative humidity.

- Server designs that place disks at the back of the server are more reliable in high humidity.

- Despite the higher failure rates, software mitigation allows cloud providers to save a lot of money with free-cooling.

- High temperatures are not harmless, but are much less significant than other factors.

That last finding is key to why the cloud clobbers current array products. It helps curb global warming and is good for the bottom line.

Environmental impact

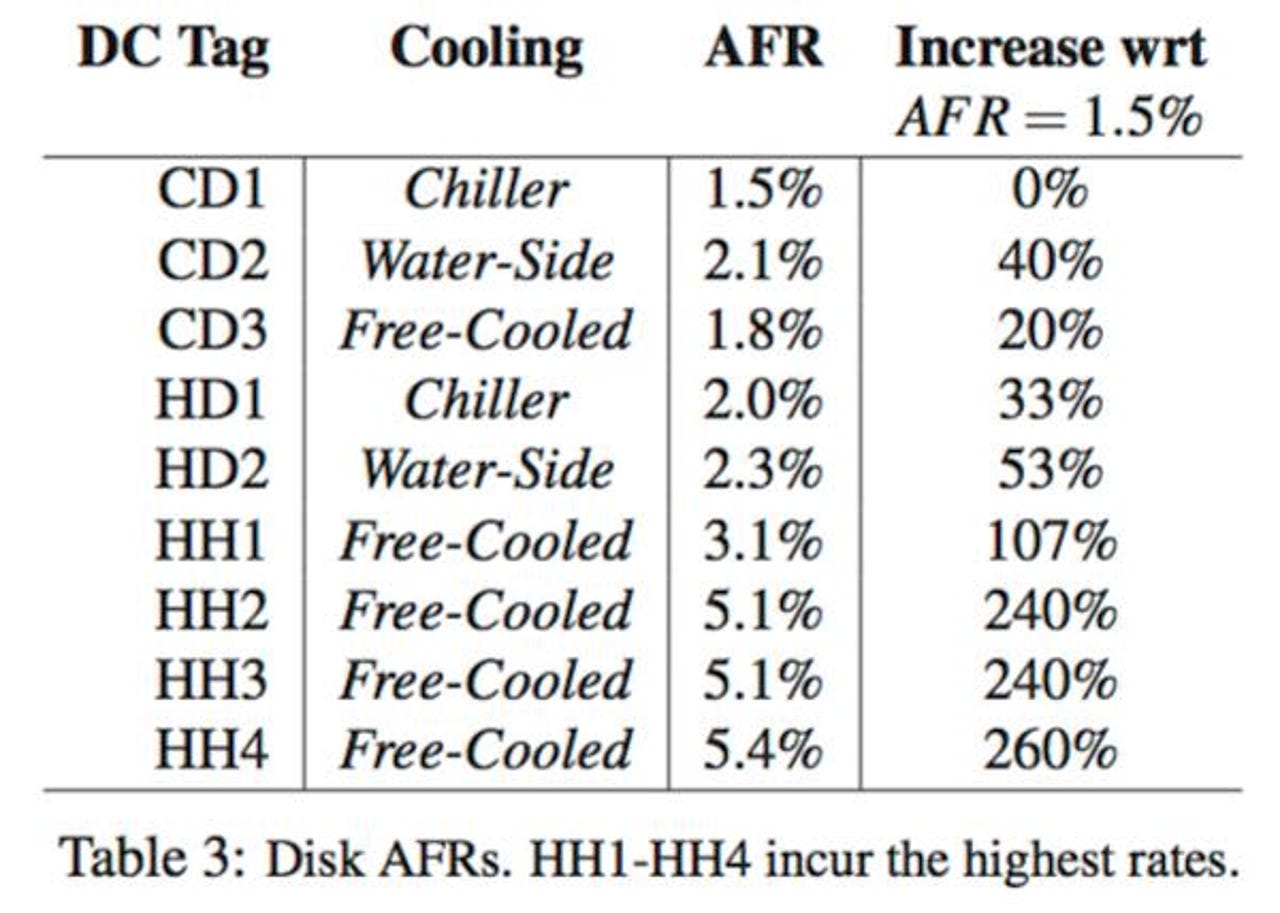

The paper classifies the datacenters as either Cool or Hot and either Dry or Humid. The following table from the paper breaks out the details:

The chart that best underlines the conclusion that it is humidity and not temperature that causes failures is this one:

The Storage Bits take

For far too long, storage vendors controlled the release of data on device reliability. But web-scale datacenters owe nothing to legacy vendors, so they win by sharing their experience with consumers looking for a better deal.

The RAID array model - building costly duplication in storage silos - does not and cannot scale to meet Big Data needs. This paper proves the logic behind the design of the Google File System and other web-scale object stores: lower-reliability components in a software framework that expects failure can be both lower cost and more available than traditional RAID array designs.

My only question about the paper is that the authors seem to think disk drives are sealed against ambient air. As regular readers know, this is not the case. I've pinged the lead author and will update the post if I get a reply.

Update: I did get a reply and my concern was unfounded. Here's what lead author Ioannis Manousakis said in response:

Indeed, we are aware of the breathing holes and I agree that we could have phrased the sentence better. Our data (Figure 2), though, suggests that disks in HH1 (hot - humid environment) experience a much higher number of controller/connectivity failures than dry datacenters do. At the same time, the number of reallocated sectors significantly drops. These observations suggest that the mechanical part is not affected much (or the effect is much less significant than that at the controller). We observed the same effects in other humid datacenters as well.

Thanks for clearing that up! End update.

Comments welcome, as always. OK, so how do we control humidity at home? Air conditioning? Wrong answer!