IBM Research unveils new chip architecture inspired by the human brain

IBM Research has debuted what it bills as a "breakthrough" software ecosystem and programming model inspired by the human brain.

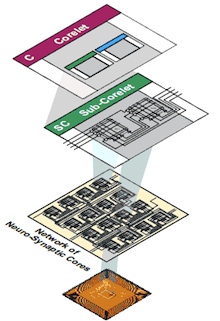

Introduced at the International Joint Conference on Neural Networks in Dallas this week, the work is part of IBM's cognitive computing project, a new silicon chip architecture inspired by the power, function and "compact volume" of the brain.

The announcement follows up on the two prototype cognitive computing chips IBM introduced in 2011. Company representatives asserted that the model unveiled today breaks from the sequential operation underlying the traditional von Neumann architecture, which separates the CPU from memory.

They noted that traditional software and programming languages (e.g., Java or C++) would not work on this type of chip because the architecture, while complementary to existing computing systems, is fundamentally different.

It is instead tailored for a new class of distributed, highly interconnected, asynchronous, parallel, large-scale cognitive computing architectures.

Still, at its core, this project is about tackling one of the biggest items on IBM's current and long-term agenda: big data.

The long-term goal for Big Blue is to develop a chip platform with 10 billion neurons and 100 trillion synapses, all while consuming only about a kilowatt of power and occupying less than two liters of volume.
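Dividing out those targets gives a rough sense of the density and power budget involved. This is back-of-the-envelope arithmetic on the round figures quoted above, not a specification published by IBM:

$$
\frac{10^{14}\ \text{synapses}}{10^{10}\ \text{neurons}} = 10^{4}\ \text{synapses per neuron},
\qquad
\frac{\approx 10^{3}\ \text{W}}{10^{10}\ \text{neurons}} \approx 10^{-7}\ \text{W} = 100\ \text{nW per neuron}
$$

In other words, each simulated neuron would connect to roughly 10,000 others while drawing on the order of 100 nanowatts.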

In a nutshell, the brain served as the inspiration for groundwork that could eventually support applications built on techniques and patterns similar to those of human perception, action, and cognition.

IBM researchers offered the example of human eyes, which they posited can sift through more than a terabyte of data each day. A system mimicking the cortex could lead to low-power eyeglasses that aid visually impaired users.

Thus, by building a platform modeled on the sensory systems where, arguably, most data is generated, researchers suggested that "systems built from these chips could bring the real-time capture and analysis of various types of data closer to the point of collection."

Eventually, researchers hope to integrate this technology into everything from smartphones to cars to collect and analyze sensory data.

Image via IBM Research