In-memory computing: Where fast data meets big data

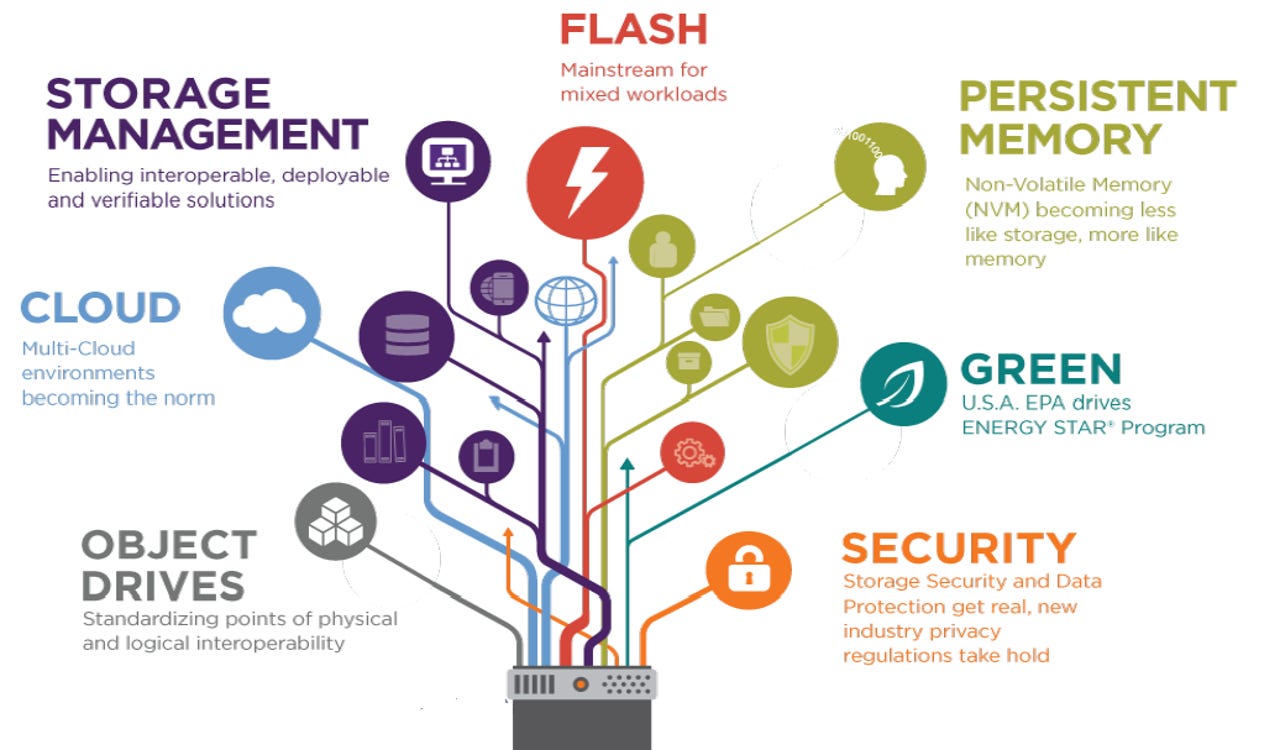

The evolution of memory technology has an impact on storage and compute architectures as well as the software that works on top of them. Image: SNIA

Traditionally, databases and big data software have been built mirroring the realities of hardware: memory is fast, transient and expensive; disk is slow, permanent and cheap. But as hardware is changing, software is following suit, giving rise to a range of solutions focusing on in-memory architectures.

The ability to have everything done in memory is appealing, as it bears the promise of massive speedup in operations. However, there are also challenges related to designing new architectures that make the most of memory availability. There is also a wide range of approaches to in-memory computing (IMC).

Some of these approaches were discussed this June in Amsterdam, at the In Memory Computing Summit EMEA. The event featured sessions from vendors, practitioners and executives and offered an interesting snapshot of this space. As in-memory architectures see wider adoption, we will be covering them more closely, kicking off with the organizer of the IMC Summit: GridGain.

Sliding in

First off, IMC is not new. Caching has long been used to speed up data-related operations, and its use has grown over time. However, as memory technology evolves and the big data mantra spreads, two new twists have been added: memory-first and HTAP.

HTAP stands for hybrid transactional and analytical processing, and was introduced as a term by Gartner. HTAP basically means having a single database backend to support both transactional and analytical workloads, which sounds tempting for a number of reasons. Many IMC solutions emphasize HTAP, seeing it as something through which they can build their case.
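To make the idea concrete, here is a minimal sketch of one in-memory store serving both kinds of workload. It uses Python's built-in SQLite in `:memory:` mode purely as a stand-in; the `payments` table and its columns are made up for illustration, and this is not how GridGain or any HTAP product is implemented.

```python
import sqlite3

# One in-memory database serves both workloads (SQLite is only a stand-in here).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE payments (id INTEGER PRIMARY KEY, account TEXT, amount REAL)"
)

# Transactional side: small writes grouped in a single committed transaction.
with conn:
    conn.execute(
        "INSERT INTO payments (account, amount) VALUES (?, ?)", ("acct-1", 40.0)
    )
    conn.execute(
        "INSERT INTO payments (account, amount) VALUES (?, ?)", ("acct-2", 15.0)
    )
    conn.execute(
        "INSERT INTO payments (account, amount) VALUES (?, ?)", ("acct-1", 10.0)
    )

# Analytical side: an aggregate query over the same data, with no export step.
totals = dict(
    conn.execute("SELECT account, SUM(amount) FROM payments GROUP BY account")
)
print(totals)
```

The point of the sketch is only that the analytical query runs against the same live data the transactions just wrote, rather than against a copy moved to a separate system.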

As for memory-first, IMC turns traditional thinking in the database world on its head. As Abe Kleinfeld, GridGain CEO, puts it, "traditionally in databases memory was a valuable resource, so you tried to use it with caution. In our case, we always go to memory first and avoid touching the disk at all cost. The algorithms we use may be the same -- it's all about cache and hits and misses after all -- but the thinking is different."

The reference to cache is not accidental, as GridGain does act as a cache, albeit in a different way. Kleinfeld says GridGain can "slide in" between applications and databases, acting as a cache layer that speeds up applications by a factor of 5X - 20X. The thinking there is to be as non-disruptive as possible while adding value and getting a foot in the door of the organization.
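The general pattern of a cache layer sitting between an application and its database can be sketched as a read-through cache. The snippet below is a generic illustration under stated assumptions: `SlowDatabase` and `ReadThroughCache` are hypothetical names, the data is invented, and nothing here reflects GridGain's actual API.

```python
class SlowDatabase:
    """Hypothetical stand-in for an existing database; counts its reads."""

    def __init__(self, rows):
        self._rows = dict(rows)
        self.reads = 0

    def get(self, key):
        self.reads += 1
        return self._rows.get(key)


class ReadThroughCache:
    """Answers from memory first and falls back to the backend on a miss."""

    def __init__(self, backend):
        self._backend = backend
        self._memory = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._memory:
            self.hits += 1
            return self._memory[key]
        self.misses += 1
        value = self._backend.get(key)
        if value is not None:
            # Keep the value in memory so the next read skips the database.
            self._memory[key] = value
        return value


db = SlowDatabase({"acct-1": 100, "acct-2": 250})
cache = ReadThroughCache(db)

first = cache.get("acct-1")   # miss: fetched from the database
second = cache.get("acct-1")  # hit: served from memory
print(first, second, db.reads, cache.hits, cache.misses)
```

Because the application only talks to the cache object, the database behind it stays in place, which is the non-disruptive aspect the "slide in" idea relies on.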

"Typically people come to us when they have tried everything they could to squeeze more performance out of their applications and databases and realize they need more," says Kleinfeld. "Still, people have invested in their databases in a number of ways. So asking them to replace their database is a very hard sale. As opposed to other solutions, this is not what we do."

Is GridGain a glorified cache then? Hardly, says Kleinfeld, and this is where the HTAP notion comes in: "we become the system of record, and the database becomes the backup. But once people get going, they realize it's a very expensive backup." That sounds like a smart strategy, but how does it work?

IMC is great in terms of speed, and evolution in memory technology promises we will soon have persistent memory at our fingertips, but until then what happens when your system goes down -- do you lose everything? And how do you actually access your data? This is where SQL and storage come in.

In-memory computing is said to enable HTAP (Hybrid Transaction/Analytical Processing), which brings benefits in terms of unified architecture and quick access to data and insights. Image: GridGain

From caching to an in-memory relational database

GridGain has been around since 2004, but it was not until 4 years ago that it got its SQL. Why? "SQL is tricky," says Kleinfeld. "When we first started, our engineers said it was nearly impossible. But nobody wants to have to use APIs, so we've thrown some of our best talent at it, and now here we are. And we have just added DML and DDL, so we are now essentially a fully in-memory relational database you can use on its own."

Kleinfeld says they realized SQL was key to their "slide in" strategy, so they made sure they had it. As for storage, IMC solutions need to use it as a backup from which to resume after failures. For GridGain, this led to an interesting development. Kleinfeld says many of their clients come from the financial services world, where SLAs are what you live and die by.

When Sberbank, one of Eastern Europe's biggest banks, chose to go with GridGain, one of their SLAs was that they could not afford more than 5 minutes of downtime. GridGain was forced to take its support for restoring from the database to the next level to live up to this requirement. Then GridGain also decided to add full ANSI-99 SQL support and go for the whole shebang.
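The arrangement described above, with memory as the system of record and storage as the backup to restore from, can be sketched with a simple write-through store. This is an illustrative toy under stated assumptions: `BackupStore` and `MemoryFirstStore` are hypothetical names, the "crash" is simulated by clearing a dictionary, and it says nothing about how GridGain actually implements recovery.

```python
class BackupStore:
    """Hypothetical durable store (a database or disk) used only as a backup."""

    def __init__(self):
        self._rows = {}

    def write(self, key, value):
        self._rows[key] = value

    def load_all(self):
        return dict(self._rows)


class MemoryFirstStore:
    """Serves all reads from memory and writes through to the backup."""

    def __init__(self, backup):
        self._backup = backup
        self._memory = {}

    def put(self, key, value):
        self._memory[key] = value
        self._backup.write(key, value)  # write-through keeps the backup current

    def get(self, key):
        return self._memory.get(key)  # reads never touch the backup

    def crash(self):
        self._memory.clear()  # simulate losing volatile memory

    def restore(self):
        self._memory = self._backup.load_all()
        return len(self._memory)


store = MemoryFirstStore(BackupStore())
store.put("acct-1", 100)
store.put("acct-2", 250)

store.crash()
after_crash = store.get("acct-1")   # data is gone from memory
restored = store.restore()          # reload everything from the backup
after_restore = store.get("acct-1")
print(after_crash, restored, after_restore)
```

In a real deployment, how long the restore step takes is what a downtime SLA like the one mentioned above would constrain.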

As its name implies, GridGain functions as a computational grid. This means it can function on top of a pool of nodes, ranging from super-computers to the proverbial laptop, according to Kleinfeld. The idea is that the grid effectively connects all the resources in this pool, making it transparent to end users.

Now, this idea sounds somewhat familiar, doesn't it? That's more or less what Hadoop does too, so is there some overlap between the two? Actually, GridGain has tinkered with the idea of meshing with Hadoop, "proactively, and to some degree, regrettably. Hadoop and speed are diabolically opposed," as Kleinfeld puts it.

GridGain embarked on this project a couple of years ago, as a proof of concept of their "slide in" approach. Their bet was that they could deliver an instantaneous speed-up to Hadoop with zero code change. "We knew people were using Hadoop for lots of different tasks -- to run MapReduce jobs, do analytics, run SQL etc.," says Kleinfeld.

He goes on to say that they pulled it off, managing to boost performance by 2X - 20X, but that it has not been such a great success for them. The reason? Counter-intuitive as it may sound, Kleinfeld says it turns out not many people are looking for it, as they mostly use Hadoop for batch processing and do not care that much about speedup.

Kleinfeld adds they have also done some integration work with Spark, aiming to fill in the gap in storage for Spark, but overall they have found that this is not what people come to GridGain for:

"You cannot ignore Hadoop. We make sure we play there and don't lose sight of it, but our most common use cases are transactional, requiring high availability and full ACID support. We think this is the hard part, we got it covered, and we also do analytical workloads."

The in-memory computing market is growing. Image: GridGain

Platform for the win

GridGain actually has a lot of bases covered. Take streaming for example. "Many of our customers need to ingest data at real time," says Kleinfeld. "So we give them this capability. Sure, they can use Kafka for this purpose too, and we integrate with Kafka as well."

When discussing Kafka and its move towards becoming an enterprise platform, Kleinfeld says that this is exactly what they are after as well:

"We took the platform approach early on and have paid the price for it. You start out building on your core strengths, 1-2 things, but as the technology expands you integrate with 2-3 more things, and end up building a platform. If you don't do it, someone else will and you will be out of business."

The writing is on the wall, the Gartners and Forresters of the world concur, and GridGain is aware of it as per Kleinfeld. If the shift towards IMC lives up to its promise, GridGain may indeed be well positioned to become a contender as an enterprise data platform.

This is why they keep adding features, and when having this discussion, it was impossible not to touch upon the topic of machine learning (ML). Wherever lots of data reside, facilities to use them for ML make sense, even though storing data and using them for ML are two different sets of competencies. GridGain realizes this, and says they are planning to integrate ML features in the next six months, after consulting with their community.

For Kleinfeld, the community is an important part of their success, and one he sees himself as instrumental to. He explains that GridGain was originally conceived as an open source project, but then its investors decided to go closed source, until 2014 when Kleinfeld joined as the CEO.

His take was that it's impossible to succeed in this space against incumbents as a new player unless you go open source. The idea is that organizations would be less hesitant to trust you if they were able to experiment with the software, and would perceive less risk if they saw a community forming around it.

Kleinfeld had his way, GridGain donated its codebase to the Apache Software Foundation, and it seems to be working. Although not all GridGain functionality is released in Apache Ignite, the open source project that grew out of this donation, Kleinfeld says it is absolutely possible to go to production with Ignite, and many people do. But when it comes to enterprise support and features, they turn to GridGain.

Kleinfeld shared some data according to which Ignite has grown from 40,000 downloads in 2014 to over 1 million now. GridGain has grown from a 37-employee business to an over 100-employee business worth $10 million in the same period, and the company expects to reach the $100 million mark in 2019 if that rate of growth continues.

GridGain is building its momentum on IMC, and things seem to be going its way. Its platform ambitions are clear, and with substantial funding, including from some of its biggest clients turned investors, and a strategy that seems to be working, it may well be on its way to achieving this goal.

There are more players in this space, however, each with their own approach, so it's definitely one to keep an eye on.