Nvidia aims to extend its lead in AI

When Nvidia held its first annual GPU Technology Conference almost a decade ago, it was a gaming company is search of new markets for its specialized chips. At the time, high-performance computing was the main target. Then, AlexNet came along and swept the ImageNet challenge in 2012, sparking a boom in deep neural networks trained on GPUs.

special report

Today, Nvidia's data center business generates $2 billion in annual sales leaving larger rivals playing catch up while venture capitalists throw money at AI hardware start-ups vying to build a better mousetrap.

Nvidia no longer needs to make the case for GPU computing. Instead, CEO Jensen Huang's job at the opening keynote of GTC 2018 was to convince the 8,500 developers in attendance that the company can maintain its edge over the competition and bring the benefits of AI to a broader audience.

Read also: Nvidia wants GPUs reserved for those who need it, not those mining ether | Pure Storage and Nvidia introduce AIRI, AI-Ready Infrastructure | Nvidia doubles down on AI | Nvidia redefines autonomous vehicle testing with VR simulation system | Nvidia extends 'cinematic quality' image rendering capability beyond gaming | GPU databases are coming of age

One of the best decisions the company made, Huang said, was a bet that the same processors used to play games would become more general-purpose hardware. Nvidia's CUDA GPU computing platform has since been downloaded more than eight million times and the world's 50 fastest supercomputers now rely on GPU accelerators to provide 370 petaflops of horsepower. "Clearly the adoption of GPU computing is growing, and it is growing at quite a fast rate," he said.

The reason for this is that new architectures, interconnects, algorithms, and systems have enabled GPUs to scale rapidly. Over the past five years, Moore's Law has increased CPU performance by five times, but GPUs have accelerated molecular dynamics simulations by a factor of 25. A traditional HPC cluster with 600 dual-CPU servers consuming 600kW can now be replaced with 30 quad-Tesla V100 servers consuming 48kW. And future exascale systems will allow simulations that take months to run in a single day, though scientists will in turn create larger simulations. "There's a new law going on," Huang said. "The world needs larger computers because there is serious work to be done."

Read also: Spectre mitigations arrive in latest Nvidia GPU drivers

A similar evolution is taking place in deep learning. The AlexNet convolutional neural network (CNN) was eight layers deep and had millions of parameters. Five years later, we have had a "Cambrian explosion" of CNNs, recurrent neural networks (RNNs), generative adversarial networks (GANs), reinforcement learning, and new species such as Capsule Nets. These neural network models have increased in complexity 500 times to hundreds of layers and billions of parameters, creating demand for faster hardware. "The world wants a gigantic GPU," Huang said.

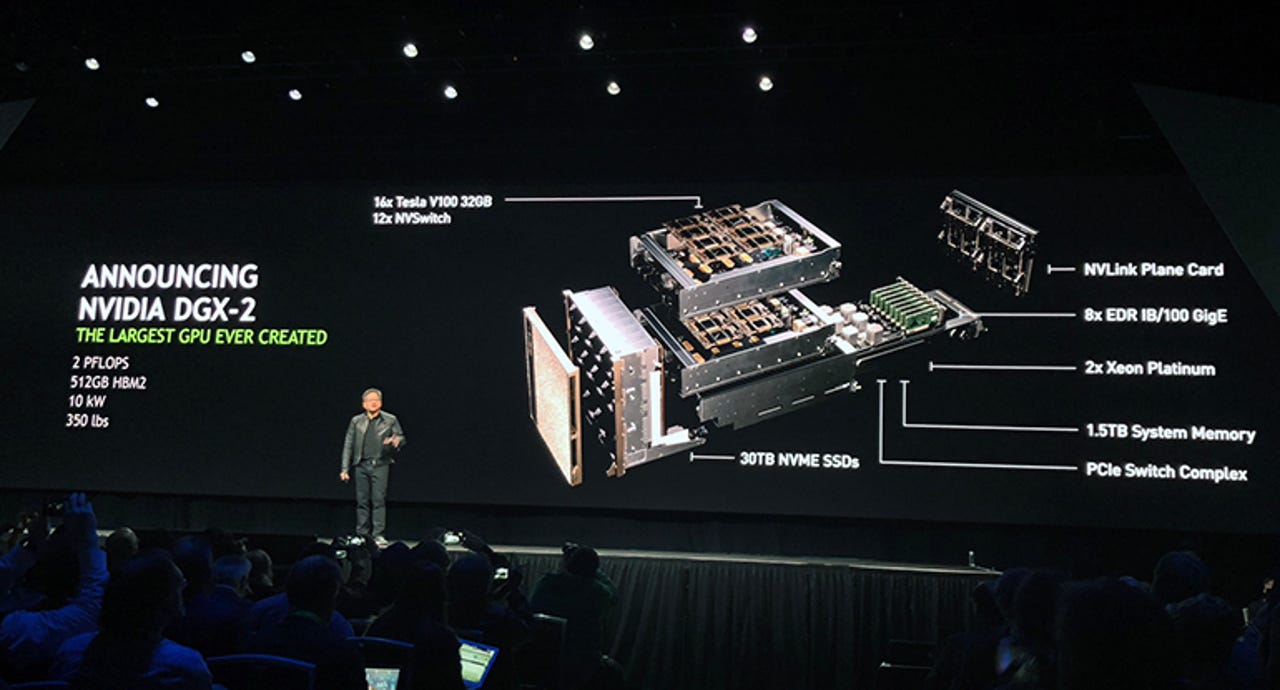

Nvidia's answer to that is the DGX-2, a new server that packs 16 Tesla V100 'equivalents' each with 32GB of stacked High-Bandwidth Memory (HBM2) -- a total of 81,920 CUDA cores and 512GB of HBM2 -- all connected over new, high-speed switch. The server also includes two Intel Xeon Platinum CPUs, 1.5TB of system memory, 30TB of NVMe solid-state storage, and InfiniBand EDR, and 100Gbps Ethernet networking. The system can deliver peak performance of 2 petaflops for deep learning (Tensor Cores) compared with 170 teraflops for the original Pascal-based DGX-1 and 960 teraflops with the Volta upgrade. The AlexNet model that took six days to train on two GeForce GTX 580s would take just 18 minutes to train on the DGX-2, and at the opposite extreme, Facebook's fairseq model for machine translation that requires 15 days to train could be completed in 1.5 days. The DGX-2 will cost $400,000 and will be available starting in the third quarter.

The growing complexity of AI systems and software has led to a push to make it available as a service in the cloud. The Nvidia GPU Cloud is a registry of containers that contain the software needed to run specific workloads on premises on a DGX system or in the cloud. At GTC, Nvidia announced that GPU Cloud now has 30 optimized GPU containers and is available on not only AWS, but also on Google Cloud Platform, AliCloud, and Oracle Cloud.

The company also announced an updated version of its TensorRT runtime for deep learning inference that optimizes and runs trained models on Nvidia GPUs in data centers. TensorRT 4 now supports RNNs, optimization for Kaldi speech recognition framework, and the Open Neural Network Exchange (ONNX) that allows interoperability between frameworks such as Caffe, PyTorch, MXNet, and the Microsoft Cognitive Toolkit/WindowsML. Nvidia said that TensorRT 4 can speed up performance on image and video by up to 190 times; natural-language processing by 50 times; speech recognition by 60 times; speech synthesis by 36 times; and recommendation engines using collaborative filtering by 45 times.

The final, and perhaps most significant, cloud announcement was support for Kubernetes, the open-source software for deploying and managing containerized applications. In an impressive demonstration, Nvidia showed how it could scale an image classification model for flowers from a CPU at four flower types per second to a single GPU at 873 flowers per second to Nvidia's own Saturn V GPU cluster. By using the Kubernetes Load Balancer, it can easily add replicas of containers scaling the system to run at nearly 7,000 flower types per second. It also works across multiple clouds. When it took down some of the Saturn V replicas, the system automatically failed over to AWS GPU instances and continued to run the model at the same high-performance level.

Read also: Baker Hughes GE, Nvidia collaborate on AI for oil and gas industry

While the focus of GTC is GPU computing, Nvidia did make some graphics news as well. The company announced a new workstation GPU that, when combined with the RTX ray tracing technology announced at last week's Game Developers Conference, can enable real-time, cinematic quality rendering.

To demonstrate the capabilities of RTX, Steven Parker, vice president of Professional Graphics, showed storm trooper scenes using Industrial Light & Magic's Star Wars assets and developed with Epic Games' Unreal Engine, rendered in real time. Huang said that with RTX a traditional render farm with 280 dual-core CPU servers burning 168kW can be replaced 14 quad-GPU servers using 24kW "This is a big deal because for the very first time we can bring real-time ray tracing to the market," Huang said. "People can actually use it." Developers access RTX through a ray tracing extension to Microsoft DirectX 12, Nvidia's own OptiX API, and soon the Khronos Group's Vulkan graphics standard.

Manufactured on TSMC's 12nm process, the GV100 is the first Quadro GPU based on the Volta architecture, and it has 5,120 CUDA cores, 32GB of HBM2 stacked memory, and 640 Tensor Cores. Two of these GV100 GPUs can be combined over a new NVLink2 interconnect to create a single GPU with 64GB of HBM2 memory capable of delivering peak performance of nearly 30 teraflops single-precision (FP32). Dell, Fujitsu, HP, and Lenovo will offer workstations with the Quadro GV100 starting in April.

This year, Nvidia did not bring customers on stage, though it is clearly working with hundreds of partners across many industries and Huang spent a lot of time talking about the applications of all of this technology. "AI and autonomous machines will help revolutionize just about every industry," he predicted.

One of the more promising areas is medical imaging where deep learning is already having an impact on diagnosis and treatment of disease, as illustrated by the difference between 15-year-old ultrasound image of a fetus and the 3D/4D images captured by Philips latest EPIQ ultrasound. The problem, Huang said, is that there are millions of medical imaging devices installed around the world, but only 100,000 news ones are purchased each year, so it will take decades to replace this legacy technology. Nvidia's solution is Project Clara, a remote, virtualized data center that takes images from existing devices and performs post-processing in the cloud to produce modern, volumetric images.

Nvidia's most high-profile application, though, is automotive, where it has made a massive, multi-year bet on end-to-end systems for ADAS (advanced driver assistance) and autonomous driving. In a bit of unfortunate timing, during the keynote news broke that Nvidia had suspended its autonomous driving program in the wake of Uber's fatal crash, pushing the chipmaker's shares down sharply. In a press session afterward, Huang explained that Nvidia was taking a cautious approach to self-driving to ensure the safety of its engineers and to get it right. "We are trying to create an autonomous vehicle computing system and infrastructure so that the entire industry can take advantage of the investment we are making," Huang said.

Read also: Nvidia CEO: Give Uber a chance to explain driverless car incident

Read also:AMD and Intel: Frenemies aligned vs. Nvidia

Even this is not enough though. It takes 20 cars driving all year long to cover one million miles, but it will take billions of miles to cover all the possible scenarios required to make self-driving vehicles safe. Nvidia Constellation system runs a virtual reality driving simulator on the same Drive architecture (Xavier and Pegasus) to augment road tests. Huang said 10,000 of these Constellation systems can "test drive" three billion miles per year. Finally, Nvidia announced that its Isaac developer kit and Jetson board for robotics is now available, and showed a demonstration of using its Holodeck VR environment to remotely drive a car located in the parking lot outside the convention center

This year's GTC keynote was also notable for what it did not include. The company did not announce a truly new GPU, and unlike in previous years, it did not even show a roadmap for future consumer or professional GPUs. Instead Nvidia said that it was doubling the memory in the current Volta V100 to 32GB because neural networks are getting larger. Huang also admitted that the popular Titan V remains out of stock but said Nvidia was working to increase the supply.

One likely reason is the lack of a compelling new process. The 10nm process is what the industry refers to as a short-lived node -- some foundries and customers skipped it altogether -- and 7nm isn't ready to go and its benefits without next-generation EUV (extreme ultraviolet) lithography are unclear. Instead, Nvidia has resorted to changes to the architecture, packaging, systems (stacks of high-bandwidth memory, faster interconnects and switches), and software -- a trend that is taking place throughout the industry as Moore's Law slows down. One thing that seems clear, though, is that regardless of where it comes from, the demand for bigger and faster systems remains insatiable for now.

Previous and related coverage

GE Healthcare turns to Nvidia for AI boost in medical imaging

The industrial giant will double down on its existing partnerships to provide better imaging for hospitals.

Nvidia expands new GPU cloud to HPC applications

With more than 500 high-performance computing applications that incorporate GPU acceleration, Nvidia is aiming to make them easier to access.