SC13: Intel reveals Knights Landing high-performance CPU

High-performance computing, once a niche area catering to academia and government, has become a key growth area for the tech industry as countries battle to develop the first exascale supercomputers and companies adopt the technology. Intel’s announcements at SC13 today, including new details of a completely redesigned Many Integrated Core processor, show just how important technical computing has become.

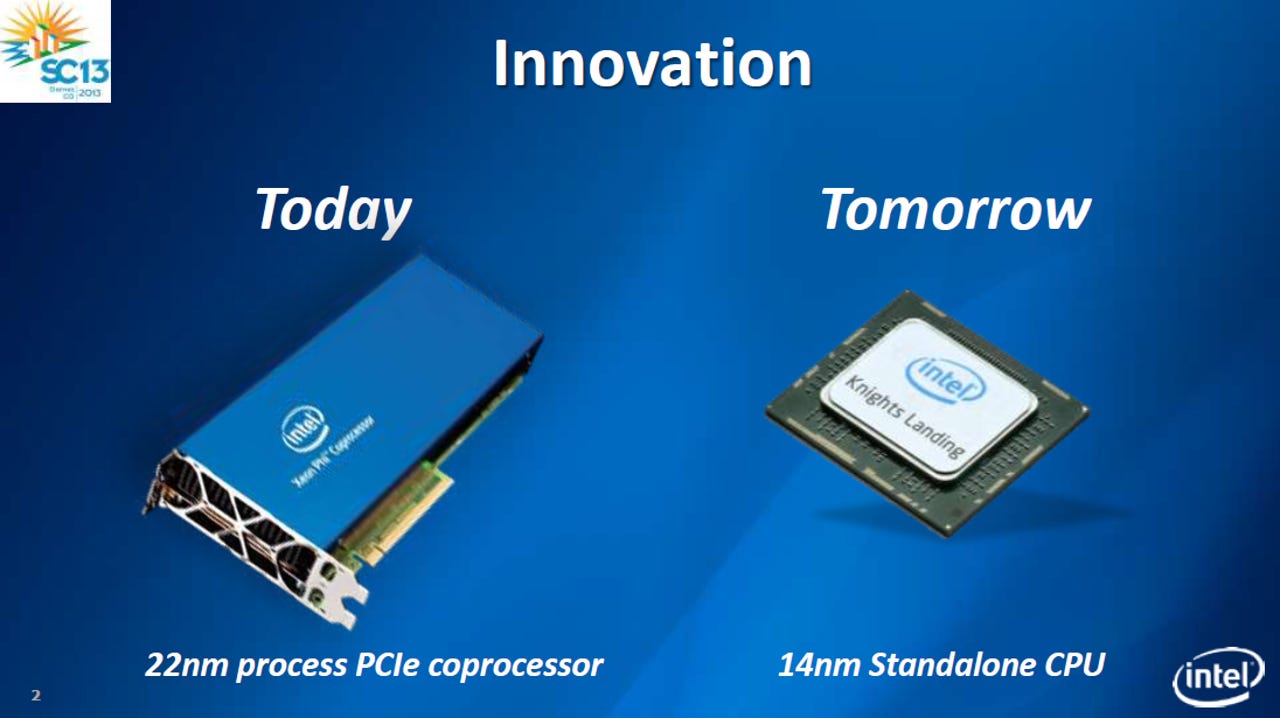

Intel released its first Xeon Phi in late 2012 and expanded the product line in June 2013. Known as Knights Corner and manufactured on a 22nm process, these are all co-processors, meaning they must be used with a host x86 processor (generally a Xeon server chip) connected over a PCI-Express bus, much like Nvidia Tesla and AMD FirePro accelerators.

The Xeon Phi co-processor is already used in Tianhe-2, the world’s fastest supercomputer and one of 13 systems on the Top500 list that now employ Intel’s Knights Corner. Intel’s Raj Hazra said that what is “perhaps more exciting” is that, in addition to some wins on the Top500, Xeon Phi is also starting to be adopted more broadly in mainstream high-performance applications.

Intel is clearly fast-tracking the development of its Many Integrated Core architecture. The next version, code-named Knights Landing, will not only be manufactured on a more advanced 14nm process, but will also include significant changes to the core and other parts of the chip designed to increase performance and improve efficiency.

“It’s a major transition from Knights Corner,” said Raj Hazra, Vice President of the Data Center Group and General Manager of the Technical Computing Group. “You can think of Many-Core as a tock, tock, tock cadence,” he added, referring to the so-called tick-tock cadence that Intel uses to introduce major changes to its mainstream Core architecture every other year. In other words, Intel will use the extra transistors provided by Moore’s Law to make big changes with each generation.

The biggest of these is that Knights Landing will be a standalone many-core CPU that will fit into standard rack architecture and run its own operating system without needing a separate host CPU. That means Knights Landing can be used as a homogeneous, many-core processor in everything from workstations to massive supercomputer clusters, without developers having to target heterogeneous systems that offload certain data to accelerators.

“It will have the performance of an accelerator but you will view it as a software developer as a CPU,” Hazra said. “It’s the best of both worlds.” Though Intel is clearly emphasizing its use as a CPU, Knights Landing will also be available in a PCI-Express card as a drop-in replacement for Knights Corner.

Near Memory

The second big change is in the memory architecture. Knights Landing will have a relatively large pool of high-bandwidth “Near Memory” in the CPU package, in addition to the standard DDR memory on the board (aka “Far Memory”). The addition of the Near Memory is meant to boost the performance on memory-bound workloads.

Hazra said this isn’t a new memory hierarchy, since developers can treat it as one flat memory space and leave everything up to the system software, but Intel also plans to offer developer tools to further optimize applications for the extra high-bandwidth memory. Intel did not say exactly how much extra memory will be in the chip package, but Hazra said it will have “enough capacity to hold meaningful workloads.”

Hazra also talked a bit about how Intel is “opening the door” to customer requests for more customized products. This goes beyond system-level customization to the development of chips with different types of cores, operating frequencies or thermal envelopes designed for specific sorts of tasks.

Competitor AMD has also talked extensively about developing semi-custom SoCs, but so far neither company has provided examples of real-world products.

Software efforts

Intel has also intensified its efforts in software for high-performance computing. The company has thousands of software engineers and is already a big contributor to the Linux kernel and the Android ecosystem. Intel’s Boyd Davis, Vice President of the Data Center Group and General Manager of the Datacenter Software Division, said that modular hardware and open software are driving a lot of growth not only in the cloud and high-performance computing, but are also “bleeding over” into the enterprise.

These open-source projects are so disruptive, Davis said, that Intel felt it had to develop its own software for the cloud and HPC. That began with the acquisition last year of Whamcloud, one of the key players behind the Lustre parallel file system used in many of the world's top supercomputers, and the release of Intel Enterprise Edition for Lustre.

At SC13 today Intel announced an HPC Distribution for Apache Hadoop (which runs on Intel Enterprise Edition for Lustre), Cloud Edition for Lustre running on Amazon Web Services Marketplace, and turnkey hardware and software solutions for Enterprise Edition for Lustre from several partners (Advanced HPC, Aeon Computing, Atipa, Boston Ltd., Colfax, E4 Computer Engineering, NOVATTE and System Fabric Works).

Earlier in the day, in the opening keynote address of SC13, Dr. Genevieve Bell, an Intel Fellow and Director of User Experience Research, gave the sort of wide-ranging talk on big data that you’d expect from an anthropologist. She defined big data as the combination of data, visualization, analytics and algorithms, and talked about some of the earliest examples, reaching all the way back to the Domesday Book.

More powerful systems may enable us to analyze larger data sets, but big data has been around a long time. “Computers didn’t invent big data. Humans did,” she said. “We are the people who build, we are the people who make it, we are the people who use it.”

Bell said that big data holds extraordinary potential in areas such as climate change, energy, medicine and education, and that it will be limited not by technology but only by the human imagination.