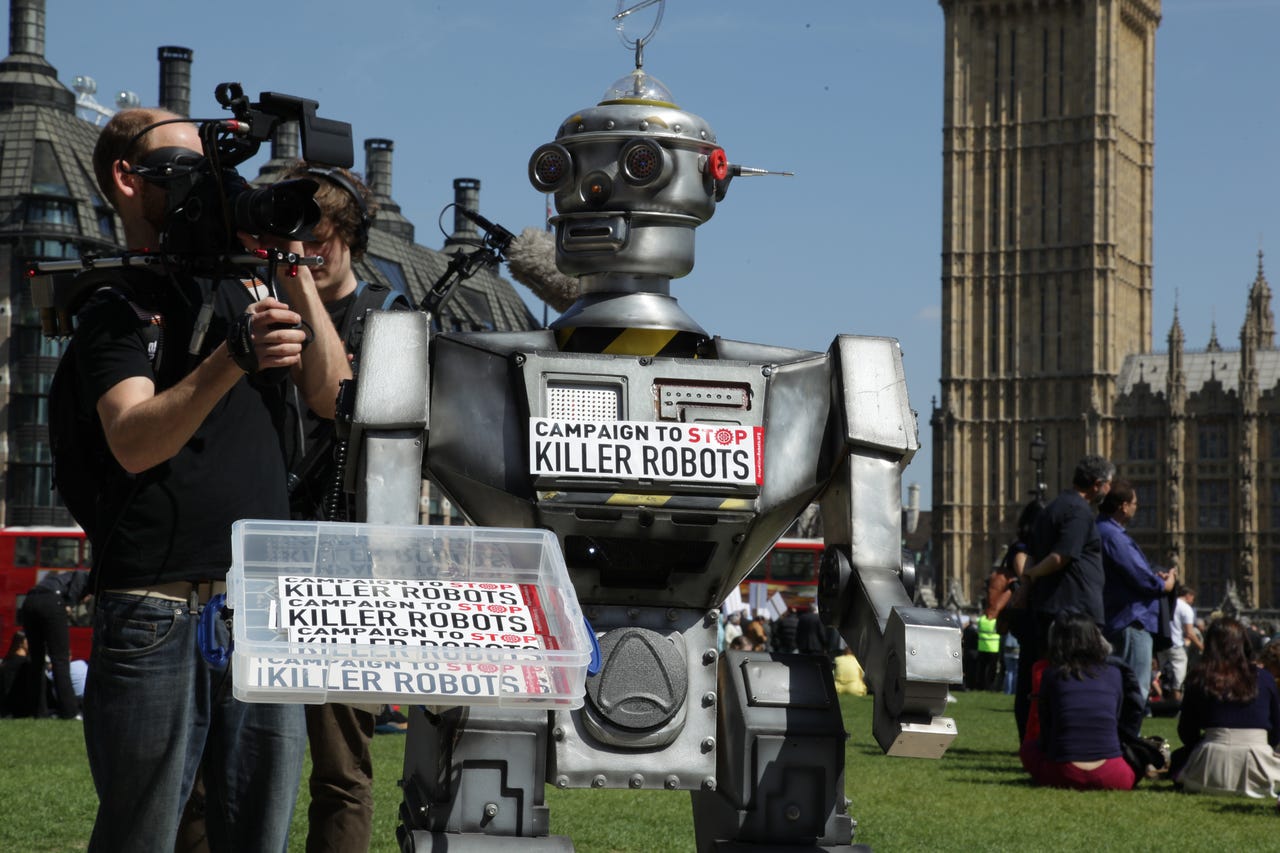

Should we ban killer robots?

Image: Campaign to Stop Killer Robots via Flickr

The Campaign to Stop Killer Robots was formed in 2012 by an international group of non-governmental organizations (NGOs). Since then, we've seen robots kill in warfare, law enforcement, manufacturing accidents, and more. Killer robots aren't always bad: some are even being put to work killing invasive species to help save the oceans. But these aren't the robots the Campaign is targeting. The missing word here is "autonomous." The Campaign to Stop Killer Robots is specifically working to ban fully autonomous weapons.

The Campaign's website explains:

Allowing life or death decisions to be made by machines crosses a fundamental moral line. Autonomous robots would lack human judgment and the ability to understand context. These qualities are necessary to make complex ethical choices on a dynamic battlefield, to distinguish adequately between soldiers and civilians, and to evaluate the proportionality of an attack. As a result, fully autonomous weapons would not meet the requirements of the laws of war.

A University at Buffalo research team recently published a paper in the International Journal of Cultural Studies that analyzes certain aspects of the Campaign. They conclude that governance and control of systems like killer robots need to go beyond the end products. The researchers argue that "the rush to ban and demonize autonomous weapons or 'killer robots' may be a temporary solution, but the actual problem is that society is entering into a situation where systems like these have and will become possible."

Tero Karppi, one of the authors of the new paper, tells ZDNet, "To call something a Killer Robot instead of Autonomous Weapon is already a choice that will guide thinking and responses to these systems and how they are imagined. It draws on a larger imaginary of cultural products like science fiction movies and different discussions of how robots have been historically described."

The Campaign is not, for example, calling for a ban on robots like the one the Dallas police used to kill a suspect, because a human remotely controlled that robot. So, as technology progresses and robots become commonplace, it's important to pin down exactly what counts as autonomy. The widely accepted definition is that autonomous robots can perform tasks without being controlled by a human. However, the University at Buffalo researchers take a more nuanced view of autonomy.

According to Karppi, "Humans are designing these systems and they are designed to operate with humans in a human context, so when are humans not involved in their operations?" He suggests that ethical decisions could be coded into an autonomous weapon system's software, though that leaves open the question of who should decide what is ethical.

"We need to start thinking how algorithmic systems and artificial intelligence could be regulated," he says. "One way, as implicated by the campaign, is to ban the development of these systems on a nation state level. But again, we need to define what exactly needs to be banned. What are killer robots and what, for example, 'fully autonomous' means to people planning the regulations."

Karppi and his colleagues raise some interesting points, but there are even more questions to consider. Mary Wareham, coordinator of the Campaign to Stop Killer Robots, tells us:

This attempt to apply an academic answer to the massive, multifaceted challenges posed by fully autonomous weapons might appeal to other academics, but it has little application in the real world. The authors appear to place too much confidence in technology to do things that are just not possible at this point, such as coding ethics into software. Legislation and policy is needed if we are to retain meaningful human control over weapons systems and especially over their targeting and attack functions. We're not sure why the authors of this report chose not [to] contact the Campaign to Stop Killer Robots as they prepared it, but rather draw from our website.

Ouch. The bottom line is that this is an important debate that will need to continue in the immediate future, since technology is rapidly becoming more powerful and more autonomous.