The future of storage: 2015 and beyond

The competition between flash and hard disk-based storage systems will continue to drive developments in both. Flash has the upper hand in performance and benefits from Moore's Law improvements in cost per bit, but has increasing limitations in lifecycle and reliability. Finding well-engineered solutions to these will define its progress. Hard disk storage, on the other hand, has cost and capacity on its side. Maintaining those advantages is the primary driver in its roadmap.

Hard disks

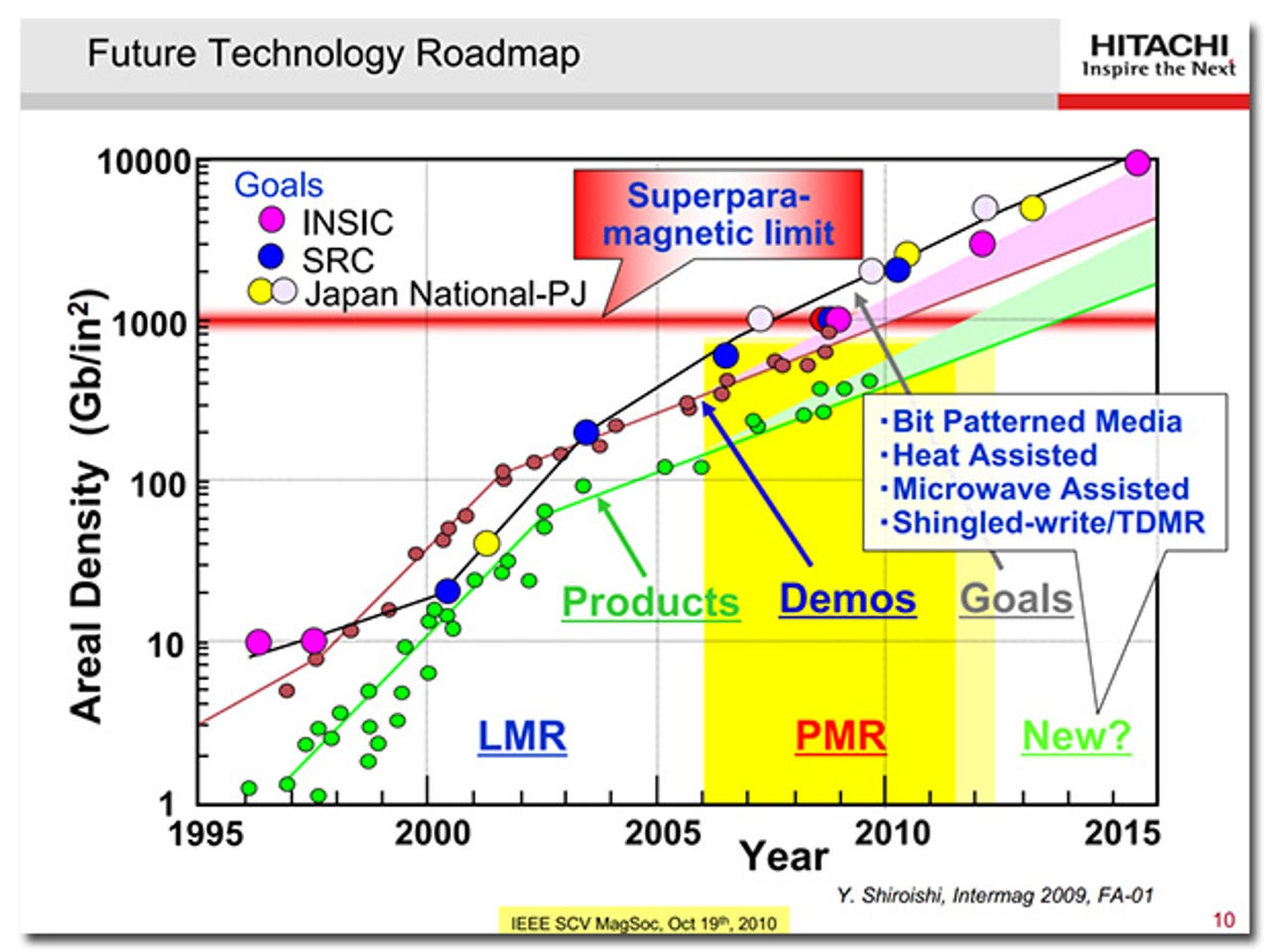

Hard disk developments continue to wring out a mixture of increased capacity and either stable or increased performance at lower cost. For example, Seagate introduced a 6TB disk in early 2014 that refined existing techniques, then announced an 8TB disk at the end of the year based on Shingled Magnetic Recording (SMR). SMR allows tracks on the disk to overlap each other, eliminating the fallow area previously used to separate them. The greater density this allows is offset by the need to rewrite multiple tracks at once, which slows down some write operations. In return, capacity rises by 25 percent, with little need for expensive revamps in manufacturing techniques.
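The write penalty can be illustrated with a toy model. This sketch assumes a hypothetical band of shingled tracks laid down in order, each partly overlapping the one before, so that changing one track forces a rewrite of it and every later track in the band. The band size and function names are illustrative and not taken from any drive's firmware.

```python
def tracks_rewritten(track, band_size):
    """Physical track writes needed to update one track in a shingled band.

    Assumption: tracks 0..band_size-1 overlap like roof shingles, so
    rewriting a track damages the next one, which must be rewritten too,
    and so on to the end of the band.
    """
    if not 0 <= track < band_size:
        raise ValueError("track outside band")
    return band_size - track


def average_write_cost(band_size):
    """Mean track writes per single-track update, averaged over the band."""
    total = sum(tracks_rewritten(t, band_size) for t in range(band_size))
    return total / band_size


if __name__ == "__main__":
    # Updating the first track of an 8-track band rewrites the whole band;
    # updating the last track costs a single write.
    print(tracks_rewritten(0, 8), tracks_rewritten(7, 8), average_write_cost(8))
```

In this model, sequential writes that fill a band from start to finish pay no penalty, which is why SMR suits workloads that mostly append.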

If SMR is commercially successful, then it will speed the adoption of another technique, Two-Dimensional Magnetic Recording (TDMR) signal processing. This becomes necessary when tracks are so thin and/or close together that the read head picks up noise and signals from adjacent tracks when trying to retrieve the wanted data. A number of techniques can solve this, including multiple heads that read portions of multiple tracks simultaneously to let the drive mathematically subtract inter-track interference signals.
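The subtraction step amounts to solving a small set of linear equations. The sketch below assumes two read heads that each pick up a known, different mix of two adjacent tracks; the mixing weights and the function name are hypothetical, and real drives work on noisy, continuously varying signals rather than exact values.

```python
def separate_tracks(r1, r2, mix):
    """Recover two track signals from two head readings.

    mix is ((a, b), (c, d)), meaning (an assumed, idealised model):
        r1 = a * s1 + b * s2
        r2 = c * s1 + d * s2
    The 2x2 system is solved with Cramer's rule.
    """
    (a, b), (c, d) = mix
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("both heads see the same mix; tracks cannot be separated")
    s1 = (d * r1 - b * r2) / det
    s2 = (a * r2 - c * r1) / det
    return s1, s2


if __name__ == "__main__":
    mix = ((0.8, 0.2), (0.3, 0.7))       # hypothetical head sensitivities
    wanted, neighbour = 1.0, -1.0        # true signals on the two tracks
    r1 = 0.8 * wanted + 0.2 * neighbour  # what head 1 reads
    r2 = 0.3 * wanted + 0.7 * neighbour  # what head 2 reads
    print(separate_tracks(r1, r2, mix))
```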

A third major improvement in hard disk density is Heat-Assisted Magnetic Recording (HAMR). This uses drives with lasers strapped to their heads, heating up the track just before the data is recorded. This produces smaller, better-defined magnetised areas with less mutual interference. Seagate had promised HAMR drives this year, but now says that 2017 is more likely.

Meanwhile, Hitachi has improved capacity in its top-end drives by filling them with helium. The gas is much less dense than air, which reduces drag and turbulence around the spinning platters, so they can be packed closer together.

All these techniques are being adopted as previous innovations -- perpendicular rather than longitudinal recording, for example, where bits are stacked up like biscuits in a packet instead of on a plate -- are running out of steam. By combining all of the above ideas, the hard disk industry expects to be able to produce around three or four years of continuous capacity growth while maintaining its price differential with flash.

Flash

Flash memory is changing rapidly, with many innovations moving from small-scale deployment into the mainstream. Companies such as Intel and Samsung are predicting major advances in 3D NAND, where the basic one-transistor-per-cell architecture of flash memory is stacked into three-dimensional arrays within a chip. Intel, in conjunction with its partner Micron, is predicting 48GB per die next year by combining 32-deep 3D NAND with multi-level cells (MLC) that double the storage per transistor. The company says this will create 1TB SSDs that will fit in mobile form factors and be much more competitive with consumer hard disk drives -- still around five times cheaper at that size -- and 10TB enterprise-class SSDs, by 2018.

Another technology that's due to become more mature in 2015 is Triple-Level-Cell (TLC) flash. Original flash memory cells were often described as having one of two voltages stored in them, one for data 1 and another for data 0. This isn't strictly true: it's more accurate to think of the cells holding a range of voltages, with any voltage in one sub-range meaning 1 and any voltage in another sub-range meaning 0. These ranges could be quite wide and widely separated, making it very easy for the surrounding circuitry to slap in good-enough voltages when writing and to cope easily with sloppy outputs when reading.

MLC, multi-level cells, have four voltage ranges that correspond to 00, 01, 10 and 11 -- the equivalent of two single-level cells and thus double the density in the same space. This comes with considerable extra difficulty, as the support circuitry needs to be much more precise when reading and writing, and variations in cell performance as a result of production or aging are much more significant. Reads and writes get slower, lifetimes go down, errors go up. However, with double the capacity for the same size -- read cost -- such problems can be overcome.

TLCs have eight voltage levels per cell, corresponding to 000 through 111. This is only 50 percent more data than MLC, but the physics is considerably harder. Thus, although TLC has been around in some products for at least five years, it hasn't had the price/performance to compete.
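The shrinking margins can be made concrete with a little arithmetic. The sketch below assumes a hypothetical 0 to 4 volt cell range split into equal windows and a plain binary mapping from window to bits; real parts use different voltages, unequal windows and more careful bit assignments, so treat the numbers as illustration only.

```python
def window_width(bits_per_cell, v_min=0.0, v_max=4.0):
    """Width of each voltage window when the range is split evenly."""
    return (v_max - v_min) / (2 ** bits_per_cell)


def read_cell(voltage, bits_per_cell, v_min=0.0, v_max=4.0):
    """Return the bit pattern for the window the voltage falls into."""
    levels = 2 ** bits_per_cell
    width = (v_max - v_min) / levels
    index = int((voltage - v_min) / width)
    index = min(max(index, 0), levels - 1)  # clamp out-of-range readings
    return format(index, "0{}b".format(bits_per_cell))


if __name__ == "__main__":
    stored, drift = 2.3, 0.3  # hypothetical cell voltage and aging drift
    for name, bits in (("SLC", 1), ("MLC", 2), ("TLC", 3)):
        before = read_cell(stored, bits)
        after = read_cell(stored + drift, bits)
        print(name, window_width(bits), before, after, before == after)
```

With these assumed figures, the same 0.3 volt drift leaves the one-bit and two-bit reads unchanged but pushes the three-bit read into the neighbouring window, which is the kind of error the support circuitry then has to catch.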

The big difference for 2015 is the maturation of the driver technology to overcome TLC's speed, life and reliability issues. As with cutting-edge processors and communications, the key to economic deployment is expecting, characterising and designing for errors. One approach, by driver chip designer Silicon Motion, layers three basic error management systems.

The first is Low-Density Parity-Check (LDPC) coding, which encodes data going into the memory in such a way that many errors encountered on reading can be detected and corrected in a mathematically reliable way -- and, crucially, without introducing unacceptable processing overhead. LDPC was invented in the 1960s, but was impracticable with the hardware of the time; in the 1990s, it started to be adopted and is now found in Wi-Fi, 10Gb Ethernet and digital TV. As such, it typifies the range of engineering challenges and techniques coming in from outside storage.
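The flavour of parity-check decoding can be shown with a toy code. The sketch below is not the code used in any flash controller: it is a tiny 3x3 grid in which every row and column must have even parity, so each bit takes part in only two checks, decoded by the simple bit-flipping idea associated with LDPC. Production LDPC codes are thousands of bits long and use far more capable decoders.

```python
# Parity checks for a 3x3 grid of bits stored row by row (indices 0..8):
# every row and every column must contain an even number of 1s.
CHECKS = [
    [0, 1, 2], [3, 4, 5], [6, 7, 8],  # rows
    [0, 3, 6], [1, 4, 7], [2, 5, 8],  # columns
]


def encode(data):
    """Turn 4 data bits into a 9-bit codeword by adding row/column parity."""
    a, b, c, d = data
    return [a, b, a ^ b,
            c, d, c ^ d,
            a ^ c, b ^ d, a ^ b ^ c ^ d]


def failed_checks(word):
    """Return the indices of the parity checks that the word violates."""
    return [i for i, check in enumerate(CHECKS)
            if sum(word[j] for j in check) % 2 == 1]


def decode(word, max_iterations=5):
    """Bit-flipping decoder: flip the bit implicated in the most failed checks.

    Returns (word, ok), where ok is True if every check passes at the end.
    """
    word = list(word)
    for _ in range(max_iterations):
        failed = failed_checks(word)
        if not failed:
            break
        votes = [sum(1 for i in failed if j in CHECKS[i])
                 for j in range(len(word))]
        if max(votes) < 2:
            break  # no single bit explains the failures; give up
        word[votes.index(max(votes))] ^= 1
    return word, not failed_checks(word)


if __name__ == "__main__":
    sent = encode([1, 0, 1, 1])
    noisy = list(sent)
    noisy[4] ^= 1  # simulate one misread bit
    print(decode(noisy) == (sent, True))
```

This toy corrects any single flipped bit in the nine-bit word; the four data bits sit at indices 0, 1, 3 and 4.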

The LDPC engine also adds a system of tracking voltage levels within the TLC arrays. The electrical characteristics of the semiconductor structures in the memory arrays change in the short term with temperature and in the long term with aging; by adjusting to these changes instead of rejecting them, life can be extended and errors reduced.
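One simple way to picture such tracking is to re-centre a read threshold between two neighbouring voltage levels as they drift. The sketch below is an assumption for illustration, not Silicon Motion's method: it takes sample cells whose correct level is already known (for instance after error correction) and places the threshold midway between the two observed averages.

```python
def recalibrate_threshold(samples):
    """Place a read threshold midway between two drifting voltage levels.

    samples is a list of (voltage, level) pairs, where level is 0 for the
    lower of two adjacent levels and 1 for the upper. Both the data format
    and the midpoint rule are hypothetical simplifications.
    """
    low = [v for v, level in samples if level == 0]
    high = [v for v, level in samples if level == 1]
    if not low or not high:
        raise ValueError("need samples from both levels")
    return (sum(low) / len(low) + sum(high) / len(high)) / 2


if __name__ == "__main__":
    fresh = [(1.0, 0), (1.2, 0), (2.0, 1), (2.2, 1)]
    aged = [(v - 0.3, level) for v, level in fresh]  # every cell has drifted down
    print(recalibrate_threshold(fresh), recalibrate_threshold(aged))
```

In this example the threshold follows the cells downwards by the same amount they drifted, so reads that a fixed threshold would get wrong stay correct.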

Finally, the driver has an on-chip RAID-like mechanism that can detect unrecoverable errors from one page and switch to another.
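The article does not say how this mechanism works internally. A common RAID-style approach, assumed here purely for illustration, is to store an XOR parity page alongside a group of data pages so that any one unreadable page can be rebuilt from the rest. The function names are hypothetical.

```python
from functools import reduce


def xor_pages(pages):
    """XOR equal-length pages together, byte by byte."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*pages))


def rebuild(pages, parity, lost_index):
    """Reconstruct the page at lost_index from the other pages plus parity."""
    survivors = [p for i, p in enumerate(pages) if i != lost_index]
    return xor_pages(survivors + [parity])


if __name__ == "__main__":
    pages = [b"abcd", b"efgh", b"ijkl"]
    parity = xor_pages(pages)  # written alongside the data pages
    print(rebuild(pages, parity, 1) == b"efgh")
```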

This layering of technologies compensates for TLC's less desirable characteristics and makes it increasingly cost-effective. TLC still has some major issues that will limit its applicability, such as a much lower limit on writes during its lifetime, which may see it preferentially used for fast-access write-once archives, such as consumer data cloud storage.

Enterprise storage

The most successful enterprise storage strategy will continue to be one that uses both flash and hard disk: over the next five years, hard disks won't begin to approach flash performance, and flash won't begin to approach the capacity needed to store traditional enterprise data, let alone the huge increases predicted when the Internet of Things (IoT) starts to store information from the billions of connected devices we're promised.

Enterprise storage continues to move rapidly to a hybrid model, where similar techniques, architectures and developmental models are applied both within and beyond the enterprise's traditional boundary between hardware it owns and manages, and services it uses in the cloud.

For storage, that means a move towards distributed, virtualised systems. These take advantage both of software environments that make large, flexible storage systems easy to manage and scale, and of increases in storage interface and networking performance, to break away from the traditional mix of NAS/SAN bulk storage systems and closely-coupled in-server storage for select, high-performance requirements.

There is an ongoing battle over what to call the move towards meshing multiple storage nodes connected to multiple servers, presenting a unified view to applications and management software. Whether it's called the 'software-defined data center', 'server SAN', 'software-only storage' or any of a number of vendor-specific terms, the move to virtualised distributed storage is being driven by cost, ease of use and speed of deployment. By identifying what class of application is using what class of data, such systems can automatically move both workload and storage to the optimum configuration, and make good use of closely-coupled ultra-fast flash storage or more distant, more capacious disks -- whether within an organisation or provided over the cloud.
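At its simplest, this kind of automatic placement is a policy that maps observed access patterns to a storage tier. The sketch below is a deliberately crude illustration: the tier names, thresholds and inputs are all hypothetical, and real products weigh many more signals, such as capacity, cost and data locality.

```python
# Hypothetical thresholds; a real system would tune these from observed workloads.
HOT_READS_PER_DAY = 1000
WARM_READS_PER_DAY = 10


def choose_tier(reads_per_day, latency_sensitive):
    """Pick a storage tier from how often, and how urgently, data is read."""
    if latency_sensitive and reads_per_day >= HOT_READS_PER_DAY:
        return "local-flash"     # closely-coupled, ultra-fast flash
    if reads_per_day >= WARM_READS_PER_DAY:
        return "networked-disk"  # more distant, more capacious disks
    return "cloud-archive"       # rarely touched data


if __name__ == "__main__":
    print(choose_tier(5000, True))   # busy, latency-sensitive data
    print(choose_tier(5000, False))  # busy, but tolerant of latency
    print(choose_tier(2, True))      # almost never read
```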

Archival of rarely accessed information, massive data sets and HPC requirements will continue to need special attention, but we can expect the definitions of what makes these different from mainstream enterprise workloads to evolve -- and the applicability of mainstream storage for such tasks to improve.

Future storage

Further into the future, there's no sign of any new disruptive technology to break the flash/hard disk duopoly.

The major problem facing any radically different storage technology is the extremely competitive market for existing techniques. This is, in one sense, like a commodity market -- vast and operating at very low margins. This makes it hard for any new idea to scale up quickly enough to claw back research, development and manufacturing costs in a reasonable timeframe.

Yet the existing storage market is also quite unlike a commodity market in that it demands and gets continuous technological development through competition in two dimensions -- between drive manufacturers, and between solid-state and rotating media. That's a competitive landscape where every niche is exploited, so a newcomer must have some very significant advantage to be in with a chance.

Experience shows that this rarely, if ever, happens. Outside specialised areas such as long-term archival, rotating media and flash-based solid-state have seen off every rival, from the days of core store through bubble memory, holographic, ferroelectric RAM, polymer memory, phase-change memory and many more. Most of these have some advantages, realised or theoretical, over flash or disk, and many remain in development in some form. None has come close to establishing a mass market where money can be made. Even the most recent new technology, memristor storage, has no realistic roadmap that shows it taking significant market share in the next ten years -- by which time, on current form, both hard disk and flash storage will be far ahead on every metric.

Even without a revolution in the offing, the foreseeable future of storage is best described by its history: faster, safer, cheaper, more.