Searching for the perimeter in cloud security: From microservices to chaos

"If we could know exactly the laws of nature and the situation of the universe at the initial instant, we should be able to predict exactly the situation of this same universe at a subsequent instant. . . But this is not always the case; it may happen that slight differences in the initial conditions produce very great differences in the final phenomena; a slight error in the former would make an enormous error in the latter. Prediction becomes impossible and we have the fortuitous phenomenon."

-Henri Poincaré, "Science and Method," 1908

It was a gloomy, rainy Wednesday afternoon in San Francisco, Calif., on Nov. 15, 2017. A capacity crowd had gathered in one of the main ballrooms of the Hyatt Regency hotel to hear a man named Adrian Cockcroft. He is perhaps the world's foremost engineer of data centers for distributed processing. Netflix's cloud architecture -- the platform behind a service responsible, at peak hours, for more than one-third of all downstream Internet traffic in North America -- was his baby.

Now he is vice president of cloud architecture strategy at Amazon Web Services (AWS).

He speaks with buoyant optimism, tempered energy, and the sing-song lilt of a public TV cooking show host sharing his nuanced knowledge of herbs. But his subject matter is a technology called microservices.

It is a complex concept built upon the simplest of principles: that all of the functions of a business may be represented in code. Rather than compiling that code into colossal monoliths, or reducing it to firewall-like rules for a business process management (BPM) system, Cockcroft has demonstrated that those functions can be deconstructed into interoperable modules.

Indeed, Cockcroft has begun professing the idea of further deconstructing microservices into small, independent functions. Imagine a universe where the superstrings that form quarks, which resonate into nucleons that reside at the center of atoms that form the basis of molecules, could all decouple themselves from the entire subatomic structure with no visible damage to the universe, and with the bonus of easier methods for working with energy, force, and solid matter. That's the power of Cockcroft's idea, at least when it's presented before an audience of developers who mostly know what he's talking about.

You'd think Cockcroft would have an intricate latticework in mind for the structure of this new information universe. The title of his talk is your first clue that he doesn't: "Chaos Architecture."

"Basically, you want to set it up with no single point of failure, you have zones around the world in regions, it is easy to do," explained Cockcroft (according to the QCon conference's official transcript). "You get this region, and you deploy multiple things. If you zoom in on that, you see that everything is interconnected, you have multiple versions of it. And you zoom into one of those and there are more versions, you have more regions, and so you are using lots and lots of replications at multiple layers as you go in."

Technically, Cockcroft's is not a security architecture. But that's not really the point: For decades, we've heard pleas that developers build information systems that are failure-resistant and fault-tolerant. The Netflix microservices model is the most successful effort to date at answering these pleas; AT&T engineers have spoken of it in awe. And the microservices model seeks to establish itself as secure from the outset.

"The key point here," he told his audience, "is to get to have no single point of failure.

"What I really mean. . . If there is no single point, it is a distributed system, and if there is no single failure, it has to be a replicated system. So we are building distributed, replicated systems, and we want to automate them, and cloud is the way that you build that automation."

If you watch this man speak for a half-hour or have a conversation with him, as I have, you may walk away feeling that your world has been reshaped, and that you've been gifted with a completely different perspective on how to approach IT security. You feel empowered, as if the answer had been in front of you all along, and as if you could now start not only building fault-tolerant systems but inspiring people to manage them -- even injecting failure routines into those systems to help them learn how to heal, as Cockcroft has suggested.
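Cockcroft's two-part definition (no single point means a distributed system, no single failure means a replicated one) and the idea of deliberately injecting failure can be sketched together in a few lines of Python. This is a minimal illustration, not Netflix or AWS code: the `Replica` class, the `call_service` and `inject_failure` helpers, and the zone names are all hypothetical. It spreads copies of a service across zones, knocks one out at random, and shows that a request still succeeds as long as any copy survives.

```python
import random
from dataclasses import dataclass


@dataclass
class Replica:
    """One copy of a service running in one zone (hypothetical model)."""
    zone: str
    healthy: bool = True

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"replica in {self.zone} is down")
        return f"{request} served from {self.zone}"


def call_service(replicas: list[Replica], request: str) -> str:
    """Try replicas in random order; fail only if every replica is down."""
    for replica in random.sample(replicas, k=len(replicas)):
        try:
            return replica.handle(request)
        except ConnectionError:
            continue  # a single failure is not fatal: try the next replica
    raise RuntimeError("every replica failed")


def inject_failure(replicas: list[Replica], rng: random.Random) -> Replica:
    """Chaos-style experiment: deliberately take down one replica at random."""
    victim = rng.choice(replicas)
    victim.healthy = False
    return victim


# No single point: replicas are spread across several (hypothetical) zones.
replicas = [Replica(zone) for zone in ("zone-a", "zone-b", "zone-c")]
victim = inject_failure(replicas, random.Random(42))
result = call_service(replicas, "GET /catalog")
print(f"killed {victim.zone}; {result}")
```

The injected failure is the test: if `call_service` ever raised `RuntimeError` here, the design would have had a single point of failure after all.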

Then, the moment after you avoid being struck by a city bus, the cold sting of reality hits you, like walking out of the theatre at the end of a great science fiction film (back when there were such things), feeling the exit door smack the side of your leg, and realizing we haven't yet really conquered the problem of gravity. You become intensely aware of the tremendous gulf between the warm and inviting ideal that has been entertaining you for the last little while, and the cold and complex reality in which you live.

The fortuitous phenomenon

Our next journey for ZDNet Scale imagines the routes we would take to bridge that gulf. It begins at a place that at least sounds like the spot where our last journey ended, "the edge." It's called the perimeter, and we used to know where it was.

At least, the information security industry could tell us where, especially in the wake of the Y2K debacle. After the world failed to end and countless bets were lost, we realized that the mere fear of a highly replicated calendar bug's impact on the global economy may have been more devastating than the bug itself. At that time, most personal computers used cloned BIOS chips and essentially the same operating system. So, when someone found the bug in one PC, there was a sudden fear that all PCs -- and later, all computers -- could cease to function after midnight on New Year's Day in 2000. For people who had just learned what a virus was, had just seen a Y2K report on the evening news, and had then watched The Blair Witch Project, the bug may as well have been a virus for all the harm it could have caused.

Yet somehow afterwards, much of the PC-using world resumed clicking on unnamed attachments in their email messages, then wondering where their documents went.

Around 2002, the IT security industry began professing this idea: If a system could be built wherein all the components -- software, operating system, central processor, hard disk drive, network interface card -- could trust one another to work in a predictable, deterministic manner, then all security needed to do to protect the integrity of that system was seal off its entryway from the outside world and erect checkpoints at the gateways. It was the fortress security model, and a year after 9/11, just the thought of it made us feel better.

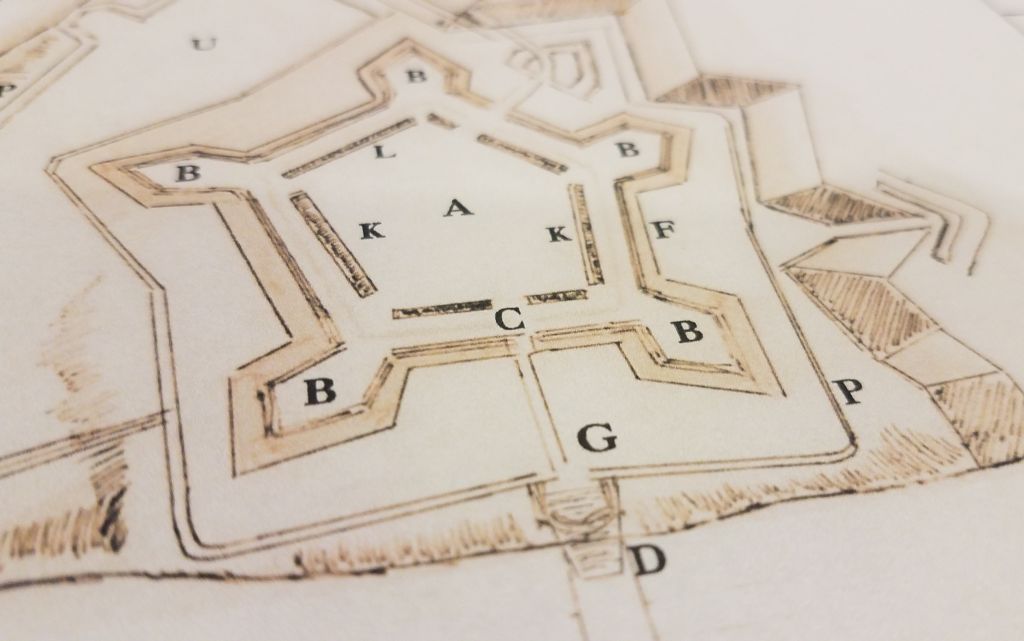

"In the software fortress model," wrote enterprise IT architect Roger Sessions in 2003, "security is the responsibility of four units working together. The wall keeps the hostile world at bay. The drawbridge allows controlled access from those few members of the outside world that this fortress is willing to trust. The guard makes sure that requests coming through the drawbridge are from authorized sources. And the envoy ensures that communications heading to other fortresses meet the security requirements of those fortresses' guards."

Our journey to find the perimeter begins here, at the drawbridge of Roger Sessions' fortress. Only it is 14 years later. The cloud has reshaped the world. Data centers are now virtualized on multiple planes of existence at once. Microservices are remaking applications into mutable, shape-shifting, enigmatic organisms that change size and shape at will, like a three-dimensional apple invading Edwin A. Abbott's 2D Flatland. PCs are gathering dust. Supercomputers travel around in people's pockets, supported wirelessly by hyperscale data centers that may be seen from space.

It is incumbent upon the engineers of these magnificent systems to adopt a new security model, and they're not sure what that might be. It is equally incumbent upon the experts in the IT security field to reconcile themselves with this new reality. And, at the moment, as they pick themselves up from being struck by a bus, they're not really sure what they're looking at.

"In a sense, we have to figure this out," admitted world-renowned security expert and IBM Resilient Systems CTO Bruce Schneier, speaking with ZDNet.

"This is bigger than your application. There's an Internet-enabled thermostat somewhere, and it triggers bits," Schneier said. "We're going to have to identify and authenticate lots and lots of things in the next few years. A sensor in car-to-car communications. It could be anonymous, but it's got to be authenticated. And probably, you're going to want some identification in case it goes rogue. It's hard. I don't have answers. But there's real value in the research in authenticating bundles of bits, however you want to characterize them. You want to know who or what produced the bits -- the provenance, every step of the way."

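Schneier's call to know "who or what produced the bits -- the provenance, every step of the way" can be illustrated with a chain of keyed hashes, in which each participant vouches both for its own payload and for every step before it. This is a minimal sketch under stated assumptions: the participant names, the `KEYS` table, and the `stamp` and `verify` helpers are hypothetical, and it uses shared-secret HMAC from Python's standard library where a real system would more likely use public-key signatures, so that a verifier would not need to hold every producer's secret.

```python
import hashlib
import hmac

# Hypothetical per-participant secrets. A production design would more likely
# use public-key signatures; HMAC keeps this sketch standard-library only.
KEYS = {
    "sensor-17": b"sensor-key",
    "edge-gateway": b"gateway-key",
    "cloud-fn": b"function-key",
}


def _message(prev_tag: str, actor: str, payload: bytes) -> bytes:
    # The "|" separator keeps the fields unambiguous (actor names contain no "|").
    return b"|".join([prev_tag.encode(), actor.encode(), payload])


def stamp(chain: list[dict], actor: str, payload: bytes) -> list[dict]:
    """Append one provenance step: the actor vouches for its payload and all prior steps."""
    prev_tag = chain[-1]["tag"] if chain else ""
    tag = hmac.new(KEYS[actor], _message(prev_tag, actor, payload), hashlib.sha256).hexdigest()
    return chain + [{"actor": actor, "payload": payload, "tag": tag}]


def verify(chain: list[dict]) -> bool:
    """Recompute every tag in order; an altered, dropped, or reordered step breaks the chain."""
    prev_tag = ""
    for step in chain:
        key = KEYS.get(step["actor"])
        if key is None:
            return False  # unknown producer: provenance cannot be established
        expected = hmac.new(key, _message(prev_tag, step["actor"], step["payload"]),
                            hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, step["tag"]):
            return False
        prev_tag = step["tag"]
    return True


chain = stamp([], "sensor-17", b"temp=21.5")
chain = stamp(chain, "edge-gateway", b"temp=21.5;zone=3")
chain = stamp(chain, "cloud-fn", b"avg_temp=21.5")
print(verify(chain))  # True

tampered = [dict(step) for step in chain]
tampered[0]["payload"] = b"temp=99.9"
print(verify(tampered))  # False: the sensor's reading no longer matches its tag
```

Note that a participant can be anonymous to the outside world (only a key identifies it) and still be authenticated, which is the distinction Schneier draws for car-to-car sensors.
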
Decoupling

While very few enterprises have the luxury of being designed or redesigned as microservices from the ground up like Netflix or AWS, the decoupling of workloads from their underlying infrastructure is happening on a number of levels simultaneously.

You're familiar with virtualization. Then there is containerization, the trend of making workloads portable across any infrastructure. At a deeper level, there are microservices. And in the realm that Adrian Cockcroft and his colleagues are exploring now, there's a deeper tier of functionalization that he's perfectly happy to call "chaos." He knows full well that modern physicists use that term to describe a realm they themselves are exploring. And he seems, at the very least, undeterred by the associations that Stanford University's Encyclopedia of Philosophy draws: "The big news about chaos," it currently reads, "is supposed to be that the smallest of changes in a system can result in very large differences in that system's behavior."
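The "big news about chaos" can be seen in a few lines of arithmetic. The logistic map below is a standard textbook example of a chaotic system, chosen here purely for illustration; it is not a model of microservices, and the `logistic_orbit` helper is a hypothetical name. Two starting values that differ only in the tenth decimal place stay close for the first several iterations, then drift apart until they bear no resemblance to each other, which is the "slight differences in the initial conditions" Poincaré warned about.

```python
def logistic_orbit(x0: float, r: float = 4.0, steps: int = 60) -> list[float]:
    """Iterate x -> r * x * (1 - x); with r = 4.0 the map is in its chaotic regime."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)  # a change in the tenth decimal place

for n in (0, 10, 20, 30, 40, 50, 60):
    print(f"step {n:2d}: |difference| = {abs(a[n] - b[n]):.3e}")
```

With `r = 4.0`, the gap between the two orbits roughly doubles per step on average, so a difference of one part in ten billion grows to the size of the values themselves within a few dozen iterations.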

"I think there's opportunity here," remarked Mark Nunnikhoven, Trend Micro's vice president of cloud research. "Distributed computing, microservices, even the push into serverless designs -- all this stuff breaks everything that we're used to and were comfortable with. And I think that's where a lot of people stumble. When we move from physical stuff into virtualizing the data center, we basically did the same thing. When we moved into the cloud, the first few steps, we were doing the same types of security things with tiny changes here and there. This is the first time, with this big push to distributed microservices, where we're really going, 'Hold on a second. This is a fundamental change in how we're doing it.' Not why we're doing things, but how we're doing them, needs to fundamentally change."

The fortress model could only work in a world where you knew with absolute certainty where the domain of an enterprise's IT assets began and ended. If you knew where the gateway was, you could assign rules to it and have it enforce them. Not only would entry be restricted to those with specific passwords or other verifiable credentials, but their rights and privileges could be limited to discrete lists of applications.
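The gateway-centric model described above, including Roger Sessions' wall, drawbridge, guard, and envoy, can be reduced to a toy Python sketch. Everything in it is illustrative: the `Request` and `Fortress` classes, the source names, and the credential values are hypothetical, and a plain string comparison stands in for real authentication. What it shows is structural: every decision presumes you know which side of the wall a request came from.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Request:
    source: str       # who is approaching the drawbridge
    credential: str   # what they present to the guard
    application: str  # which application inside the walls they want


@dataclass
class Fortress:
    """Toy model of Sessions' four units (all names hypothetical)."""
    trusted_sources: set[str]        # the drawbridge: the few outsiders allowed to approach
    credentials: dict[str, str]      # the guard: source -> expected credential
    allowed_apps: dict[str, set[str]]  # the guard: source -> permitted applications
    outbound_requirements: dict[str, str] = field(default_factory=dict)  # the envoy

    def admit(self, req: Request) -> bool:
        # The wall: anything not explicitly trusted is kept out by default.
        if req.source not in self.trusted_sources:
            return False
        # The guard: check the credential, then limit access to listed applications.
        if self.credentials.get(req.source) != req.credential:
            return False
        return req.application in self.allowed_apps.get(req.source, set())

    def envoy_can_send(self, other_fortress: str, protocol: str) -> bool:
        # The envoy: outgoing traffic must meet the other fortress's requirements.
        return self.outbound_requirements.get(other_fortress) == protocol


hq = Fortress(
    trusted_sources={"branch-office"},
    credentials={"branch-office": "s3cret"},
    allowed_apps={"branch-office": {"payroll"}},
    outbound_requirements={"supplier": "mutual-tls"},
)
print(hq.admit(Request("branch-office", "s3cret", "payroll")))  # True
print(hq.admit(Request("branch-office", "s3cret", "crm")))      # False: application not listed
print(hq.admit(Request("internet-host", "s3cret", "payroll")))  # False: stopped at the wall
print(hq.envoy_can_send("supplier", "mutual-tls"))              # True
```

Every check keys off `source`, a fixed notion of where a request originates; once workloads, data, and identities stop having a fixed location, that assumption is the first thing to give way.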

Every component with which this security model was defined is no longer a constant.

- Identity changes both its shape and its meaning. In the fortress, every active account was a user associated with a person. Something seeking access to a resource presented that user's personal credentials. Today, the cloud is full of functions, and the Internet of Things has, well, things. The model of trust demands that if these things are not bound to people, then they must be bound to something solid. And the cloud is not solid.

- Domain is no longer a rigidly constrained, contiguous territory of information assets. Applications reside in the public cloud -- at least, some of them do. They may have access to data both inside and outside of enterprise data centers, all of which appears to belong to them, but none of which is guaranteed to reside with them. Containerized environments may reside partly in the public cloud and partly on-premises. So wherever data resides in one place but belongs to someone else, it would be useful to reliably tag it as belonging to some verifiable identity. (See "Identity" above.)

- Trust is no longer a binary state. The root of trust -- the thing whose ultimate identity is beyond question, certified by an incontrovertible source -- has been uprooted. There is still a base to which all certificates eventually refer, but the authority to declare that base a solid root has been diminished by both state politics and corporate politics. Certificates are now valid until, suddenly, they're not valid. And that leads directly to the fourth erosion of constancy:

- Secrecy cannot be assured. It takes a trustworthy certificate to provide both the backing and the key material that enable a verified user (with an irrefutable identity) to encrypt messages and sessions to someone else, and for that someone else to decrypt them. What's more, the keys with which traffic is secured are harder to manage effectively in a system where the domain for those keys may be entrusted to someone else anyway.
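The point that trust is no longer binary, that a certificate is valid until, suddenly, it isn't, can be modeled by making trust a function of the current time and of revocation state rather than a fixed property of the certificate. This is a deliberately simplified sketch: the `Certificate` class and `is_trusted` function are hypothetical, and real X.509 validation also verifies signatures up the chain to a root and consults revocation sources such as CRLs or OCSP, none of which is shown here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Certificate:
    serial: int
    subject: str
    not_before: datetime
    not_after: datetime


def is_trusted(cert: Certificate, revoked_serials: set[int], now: datetime) -> bool:
    """Trusted only inside the validity window AND while the serial is unrevoked."""
    if not (cert.not_before <= now <= cert.not_after):
        return False
    return cert.serial not in revoked_serials


cert = Certificate(
    serial=1001,
    subject="payments.example.com",
    not_before=datetime(2017, 1, 1, tzinfo=timezone.utc),
    not_after=datetime(2018, 1, 1, tzinfo=timezone.utc),
)
now = datetime(2017, 11, 15, tzinfo=timezone.utc)
print(is_trusted(cert, revoked_serials=set(), now=now))   # True
print(is_trusted(cert, revoked_serials={1001}, now=now))  # False: revoked mid-term
```

The certificate object never changes between the two calls; only the verifier's view of the world does, which is why the same credential can be trustworthy one moment and worthless the next.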

There is no wall, no drawbridge, and no guard. Gateways could be anywhere, and envoys could be anything. The perimeter of an enterprise's IT domain is, for now, purely in the mind of the beholder. It's more like one of those more depressing modern-day sci-fi movies where the human survivors all wear multi-colored war paint, bulldozers are pulled by mutant oxen, and the seat of the world's government is a disused convenience store on a forgotten interstate. It's from this opposite side of the gulf, where Bruce Schneier is pleading for anyone with good ideas, that you remember what Adrian Cockcroft looked like, and ask aloud, why is this man smiling?

"The devil's in the details, of course," Schneier told us. "It feels like, we're virtualizing what we do now. And my guess is, you start by virtualizing what we do physically, and then over the years, we figure out new things we can do that we never thought of before, because we were limited by the physical limitations, which we're no longer. . . Security is something [where] we can't do everything."

Reconnoiter

In the hope of re-establishing some semblance of the mindset that used to make us feel safer, there is a genuine effort under way to build a new security model that resembles the old one, at least when viewed from one angle. It is called the Software-Defined Perimeter (SDP).

In a world (to channel Don LaFontaine) without borders, and where not only networks and servers but software has become software-defined, SDP has the promise of re-establishing continuity for the security industry. In the second leg of this journey, we'll embark on a search for this new, and hopefully less ambiguous, security model.

At Waypoint #3, we'll explore how security engineers may yet resolve the problem of identity, in a world (there's Don again) where there are more things to be identified than people. And we'll conclude at a place where we ask if any form of artificial intelligence can help us resolve these issues in security, if you will, without assuming control for itself like another string of sci-fi sequels that never end well.

My promise to you is, we'll end on a note of genuine hope for the holidays. Until the next stage of our venture, hold tight.

The race to the edge:

Have hyperscale, will travel: How the next data center revolution starts in a toolshed

The race to the edge, part 1: Where we discover the form factor for a portable, potentially hyperscale data center, small enough to fit in the service shed beside a cell phone tower, multiplied by tens of thousands.

A data center with wings? The cloud isn't dead because the edge is portable

The race to the edge, part 2: Where we come across drones that swarm around tanker trucks like bees, and discover why they need their own content delivery network.

Edge, core, and cloud: Where all the workloads go

The race to the edge, part 4: Where we are introduced to chunks of data centers bolted onto the walls of control sheds at a wind farm, and we study the problem of how all those turbines are collected into one cloud.

It's a race to the edge, and the end of cloud computing as we know it

Our whirlwind tour of the emerging edge in data centers makes this much clear: As distributed computing evolves, there's less and less for us to comfortably ignore.