Watching Windows Vista decay

By definition, beta software is buggy. So, how buggy is Windows Vista? Microsoft has helpfully included a new tool for measuring stability and reliability in Windows Vista. It's called the Reliability Monitor tool, and you can find it in the Performance Diagnostic Console. (To run this tool, you can go the long way: Start, Control Panel, System and Maintenance, Performance Rating and Tools, Advanced Tools, Open Windows Diagnostic Console. Or you can just click Start, type Perfmon in the Search box, and click the Perfmon shortcut when it appears.)

I included screenshots of the Performance Diagnostic Console and the Reliability Monitor in my earlier post, "Vista Beta 2, up close and personal" (the specific images are here and here). To switch to the Reliability Monitor, click its link in the left column of the Performance Diagnostic Console.

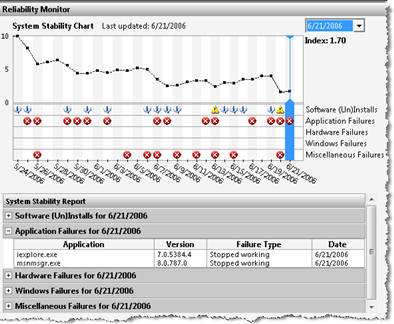

On one computer in my office, the Reliability Monitor paints a thoroughly depressing picture. See for yourself:

That line chart at the top represents four weeks' worth of ever-increasing instability, according to Vista's own tools. On May 24, after a clean install, the Stability Index was at 10; today, four weeks later, it’s at 1.70. On another computer in my office, the same picture is emerging. After a clean install on May 31, the index has slipped to 3.16 today.

Is Windows Vista really decaying right before my eyes? The short answer is no. The Stability Index is a crude measurement, to be sure, and it's misleading as well. The problems I’ve been experiencing (and which are logged in detail in the Reliability Monitor) are pretty much the same bugs, in Windows and in application software, occurring repeatedly, which is what you expect from a beta. So the inference that the system is somehow getting much less stable over time may not be accurate. In other words, my system stability was never a 10, and it’s certainly not a 1.70 now.

This is at least the second place within Windows Vista where some product designer decided that creating an arbitrary index number would be valuable. (The other is the much-criticized System Performance Rating, which looks at the details of your PC's parts and reduces the calculation to an overall rating on a 1-to-5 scale.) I'm not sure what the user is supposed to take away from this number, though. If my Stability Index slips below 5, is it time to do a complete reinstall? Is it really fair to conclude that my overall system stability dropped from a perfect 10 to 8.17 because Explorer crashed twice on May 25, or that it then slid all the way down to 5.77 the next day because OneNote 2007 Beta 1 stopped working twice (and hasn't failed since)? In fact, a quick scan of the details shows that both systems are fairly reliable overall; it's the Office 2007 Beta that is really the unstable actor.

If I had left my computer running and gone on vacation for two weeks, the Stability Index would probably have risen. As near as I can tell, the algorithm gives a tiny boost to the Index number for any day where there are no crashes or application failures.
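To make that guess concrete, here is a toy model of an index that behaves this way. It is emphatically not Microsoft's algorithm, which I haven't seen. The function name `stability_index` and the numbers for `penalty` and `recovery` are made up purely for illustration. The only assumptions are the ones described above: failures knock the number down, and each failure-free day nudges it back up a little.

```python
def stability_index(daily_failures, start=10.0, penalty=0.9, recovery=0.05,
                    floor=1.0, ceiling=10.0):
    """Return the index value after each day.

    daily_failures: list of failure counts, one entry per day.
    penalty, recovery: hypothetical weights, chosen only for illustration.
    """
    index = start
    history = []
    for failures in daily_failures:
        if failures > 0:
            # Every crash or application failure subtracts a fixed penalty.
            index -= penalty * failures
        else:
            # A day with no failures earns a small boost.
            index += recovery
        # Keep the index inside its assumed range.
        index = max(floor, min(ceiling, index))
        history.append(round(index, 2))
    return history


if __name__ == "__main__":
    # Two bad days (two failures each), then a two-week "vacation".
    days = [2, 2] + [0] * 14
    print(stability_index(days))
```

In this sketch the index drops sharply on the two bad days and then creeps back up during the quiet fortnight, even though nothing about the system has actually been fixed. That is the behavior that makes the single number hard to interpret.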

Don't get me wrong - I think the Reliability Monitor is a good idea and a potentially useful tool. Its real value is its ability to track and organize system events, such as software and driver installations, and display them in a format that allows you to identify correlations between failures and other system configuration events. The day-by-day display at the bottom makes it easy to see when a series of unpleasant events started, and you don't have to be a master troubleshooter to look at the list of Software (Un)Installs to zero in on a potential cause.

But that silly line chart at the top is just plain misleading. Microsoft should ditch it and the accompanying index number.