What's a poselet and why it matters to Facebook's facial recognition accuracy

Facebook artificial intelligence researchers and Berkeley's Ning Zhang have found a way to improve facial recognition algorithms by largely looking at everything but your face.

A New Scientist report that Facebook's face recognition algorithms can figure out your identity even when your face is obscured generated a good bit of buzz in tech circles.

Earlier this month, in a paper presented at the Computer Vision Foundation, Facebook revealed that its facial recognition software achieved 83 percent accuracy even when faces were hidden. The key to moving the accuracy needle was a new method that looks at a person's attributes and poses.

The social networking giant tested its method on a sample of Flickr photos. The general idea is that Facebook improved facial recognition by looking at other cues: body parts, poses and context.

In its paper, Facebook's researchers noted:

Recognizing people we know from unusual poses is easy for us. In the absence of a clear, high-resolution frontal face, we rely on a variety of subtle cues from other body parts, such as hair style, clothes, glasses, pose and other context. We can easily picture Charlie Chaplin's mustache, hat and cane or Oprah Winfrey's curly volume hair. Yet, examples like these are beyond the capabilities of even the most advanced face recognizers.

While a lot of progress has been made recently in recognition from a frontal face, non-frontal views are a lot more common in photo albums than people might suspect. For example, in our dataset which exhibits personal photo album bias, we see that only 52% of the people have high resolution frontal faces suitable for recognition. Thus the problem of recognizing people from any viewpoint and without the presence of a frontal face or canonical pedestrian pose is important, and yet it has received much less attention than it deserves.

Part of the reason the issue hasn't received much attention is that few companies handle the volume of photos that Facebook does. Facebook recently launched Moments, a standalone app that uses facial recognition to organize photos. Rest assured that Google and its machines are watching too.

Previously: Facebook's DeepFace: What would you do with it?

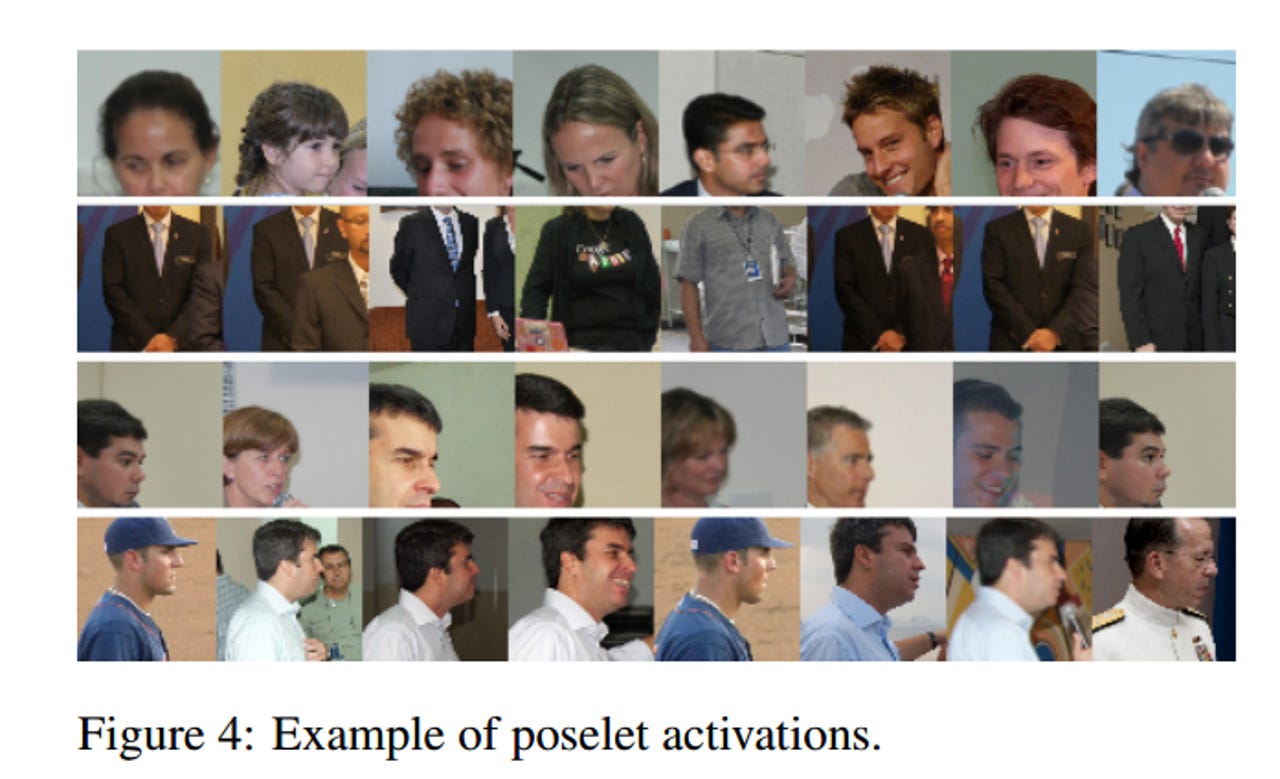

The linchpin of Facebook's facial recognition improvements is something dubbed a poselet, a classifier that detects pose patterns. The Facebook paper explains:

Poselets are classifiers that detect common pose patterns. A frontal face detector is a special case of a poselet. Other examples include a hand next to a hip or head-and-shoulders in a back-facing view, or legs of a person walking sideways.

A small and complementary subset of such salient patterns is automatically selected as described in...While each poselet is not as powerful as a custom designed face recognizer, it leverages weak signals from a specific pose pattern that is hard to capture otherwise. By combining their predictions we accumulate the subtle discriminative information from each part into a robust pose-independent person recognition system.
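To make "combining their predictions" concrete, here is a minimal Python sketch of fusing several weak classifiers into one decision. It assumes each detected poselet already produces a raw score for every known identity; the poselet names, scores and weights are hypothetical, and the softmax-then-weighted-average scheme is a generic score-fusion technique, not necessarily the combination method used in Facebook's paper.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Turn raw per-identity scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combine_poselet_predictions(
    poselet_scores: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Weighted average of per-poselet identity distributions.

    Only poselets detected in the image appear in poselet_scores; a missing
    frontal face simply contributes nothing. Weights default to 1.0 and are
    hypothetical placeholders (in practice they might be tuned on held-out data).
    """
    if not poselet_scores:
        raise ValueError("no poselets detected")
    num_ids = len(next(iter(poselet_scores.values())))
    combined = [0.0] * num_ids
    total_weight = 0.0
    for name, scores in poselet_scores.items():
        w = weights.get(name, 1.0)
        for i, p in enumerate(softmax(scores)):
            combined[i] += w * p
        total_weight += w
    if total_weight <= 0:
        raise ValueError("poselet weights must sum to a positive value")
    return [c / total_weight for c in combined]


def predict_identity(
    poselet_scores: Dict[str, List[float]],
    weights: Dict[str, float],
    identities: List[str],
) -> Tuple[str, float]:
    """Return the most likely identity and its combined probability."""
    combined = combine_poselet_predictions(poselet_scores, weights)
    best = max(range(len(combined)), key=combined.__getitem__)
    return identities[best], combined[best]


if __name__ == "__main__":
    ids = ["alice", "bob", "carol"]
    # Hypothetical scores from three weak poselets; no frontal face was found.
    scores = {
        "head_shoulders_back": [1.0, 0.8, 0.0],
        "hand_on_hip": [0.6, 0.0, 0.7],
        "legs_walking_sideways": [0.9, 0.7, 0.1],
    }
    print(predict_identity({"hand_on_hip": scores["hand_on_hip"]}, {}, ids)[0])  # carol
    print(predict_identity(scores, {}, ids)[0])  # alice
```

On its own, the hand-on-hip poselet leans slightly toward the wrong person, but once the other weak signals are averaged in, the evidence accumulates in favor of a single identity, which is the intuition the paper describes.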

Take poselets, add faces and neural networks, and accuracy improves dramatically. Add your social graph, which Facebook already has, and you see the potential. At some point, Facebook will recognize you as easily as your family can.
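Why would a social graph help? One hypothetical way to see it is as a prior: people who appear in your photos are usually people you know. The Python sketch below is an illustration under that assumption, not anything Facebook has described. It treats a recognizer's output as if it had been computed under a uniform prior over identities, multiplies it by an assumed social-graph prior, and renormalizes (Bayes' rule). The names, probabilities and the `floor` value for people outside the graph are all made up for illustration.

```python
from typing import Dict


def apply_social_prior(
    recognition_probs: Dict[str, float],
    social_prior: Dict[str, float],
    floor: float = 1e-6,
) -> Dict[str, float]:
    """Reweight recognition probabilities by a hypothetical social-graph prior.

    posterior(name) is proportional to recognition_probs[name] * prior(name).
    Identities with no social-graph entry get a tiny `floor` prior instead of
    zero, so strangers are down-weighted rather than ruled out.
    """
    unnormalized = {
        name: p * social_prior.get(name, floor)
        for name, p in recognition_probs.items()
    }
    total = sum(unnormalized.values())
    return {name: v / total for name, v in unnormalized.items()}


if __name__ == "__main__":
    # A close call from the recognizer alone (hypothetical numbers).
    recognition = {"alice": 0.40, "bob": 0.35, "stranger": 0.25}
    # Hypothetical prior: how often each friend shows up in the uploader's photos.
    prior = {"alice": 0.1, "bob": 0.6}
    posterior = apply_social_prior(recognition, prior)
    print(max(posterior, key=posterior.get))  # bob
```

The recognizer alone slightly prefers Alice, but the prior reflecting who actually shows up in the uploader's photos tips the decision to Bob. That kind of context is something a company with your social graph has and a standalone face recognizer does not.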
