Why computers fail

Good failure data for PCs is hard to find: who knows how many times PC users are told to reinstall Windows? But in a recent paper, Bianca Schroeder and Garth Gibson of CMU found some surprising results in 10 years of failure data from large-scale cluster systems at Los Alamos National Laboratory (LANL).

Among the surprises: new hardware isn't any more reliable than the old stuff. And even wicked smart LANL physicists can't figure out the cause of every failure.

Special problems of petascale computing

Despite the incredible performance of Roadrunner, LANL's new petaflop computer, the jobs it runs often take months to complete. With 3,000 nodes, failures are inevitable.

What to do? LANL's strategy is to stop the job periodically and write a checkpoint. When a node fails, they can roll the job back to the last checkpoint and restart, preserving the work done up to that checkpoint - but losing the work done after it.

Even using massively parallel high-performance storage, the checkpoints take time away from getting the answer. The paper, "Understanding Failures in Petascale Computers," uses LANL's data to better manage the tradeoffs and to suggest new strategies.
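To get a feel for that tradeoff, here is a minimal Python sketch using Young's classic first-order approximation for the checkpoint interval. It is an illustration only, not the method from the paper or LANL's actual practice. The function names are hypothetical, and the 10-minute checkpoint cost and 8-hour mean time between failures are made-up numbers.

```python
import math


def young_interval(checkpoint_cost_s: float, mtbf_s: float) -> float:
    """Young's first-order approximation of the best time between checkpoints.

    checkpoint_cost_s: seconds needed to write one checkpoint (assumed value).
    mtbf_s: mean time between failures of the whole system, in seconds.
    """
    return math.sqrt(2.0 * checkpoint_cost_s * mtbf_s)


def overhead_fraction(interval_s: float, checkpoint_cost_s: float, mtbf_s: float) -> float:
    """Rough fraction of run time lost to checkpointing plus redone work.

    First term: time spent writing checkpoints.
    Second term: on average, half an interval of work is lost per failure.
    Only a reasonable estimate when interval_s is much smaller than mtbf_s.
    """
    return checkpoint_cost_s / interval_s + interval_s / (2.0 * mtbf_s)


# Illustrative numbers only: 10-minute checkpoints, one failure every 8 hours.
cost_s = 10 * 60
mtbf_s = 8 * 3600
best = young_interval(cost_s, mtbf_s)

for label, interval in [("half of best", best / 2), ("best", best), ("double best", best * 2)]:
    lost = overhead_fraction(interval, cost_s, mtbf_s)
    print(f"{label:>12}: checkpoint every {interval / 60:6.1f} min, ~{lost:.1%} of time lost")
```

Checkpoint too often and the writes eat the run; too rarely and each failure throws away more work. The sketch just makes that balance visible under the stated assumptions.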

But it's the failure data itself - and what it suggests about our own computers - that I found most interesting.

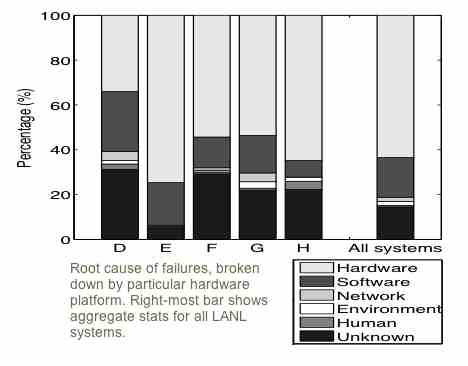

Failure etiology Hardware accounts for over 50% of all LANL failures - with software about 20%. Given all the PhD's at LANL you'd hope human error would be low on the list - and it is.

Here's the graph:

Is reliability improving?

Nope. LANL hasn't seen any improvement over the years: newer hardware is no more reliable than hardware from a decade ago.

The key metric

The research showed that

. . . the failure rate of a system grows proportional to the number of processor chips in the system.

Which is a big problem for massive multi-processor systems.
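A quick back-of-the-envelope sketch in Python shows why. It assumes a strictly linear relationship and a made-up per-chip failure rate of 0.25 failures per year. That figure and the function names are hypothetical, chosen only for illustration and not taken from the LANL data.

```python
HOURS_PER_YEAR = 8766  # average year length, including leap years


def system_failure_rate(per_chip_failures_per_year: float, chips: int) -> float:
    """Failures per year for the whole system, assuming the rate scales linearly with chip count."""
    return per_chip_failures_per_year * chips


def system_mtbf_hours(per_chip_failures_per_year: float, chips: int) -> float:
    """Mean time between failures, in hours, for the whole system."""
    return HOURS_PER_YEAR / system_failure_rate(per_chip_failures_per_year, chips)


# Hypothetical per-chip rate; NOT a number from the paper.
PER_CHIP_RATE = 0.25

for chips in (2, 128, 4096):
    rate = system_failure_rate(PER_CHIP_RATE, chips)
    mtbf = system_mtbf_hours(PER_CHIP_RATE, chips)
    print(f"{chips:5d} chips: {rate:8.1f} failures/year, one every {mtbf:10.1f} hours")
```

Under the linear assumption, doubling the chip count halves the time between failures, so a job that runs for months on thousands of processors will see many interruptions.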

The Storage Bits take

Extrapolating these results to our desktop systems is straightforward - with one big caveat: most desktop system crashes are software, not hardware.

Otherwise the Blue Screen of Death would be the No Screen of Death.

The biggest finding is that we shouldn't expect our system hardware to get more reliable. Improvements get balanced out by increased complexity.

Those of us with multi-processor systems can expect to see less reliability - though with just a few systems you won't see any trends. It's a classic "glass half full" situation: our systems won't get better, but at least they won't get worse.

Comments welcome, of course.