Will the real Elon Musk please stand up? Autonomous bots and synthesized speech in the public domain

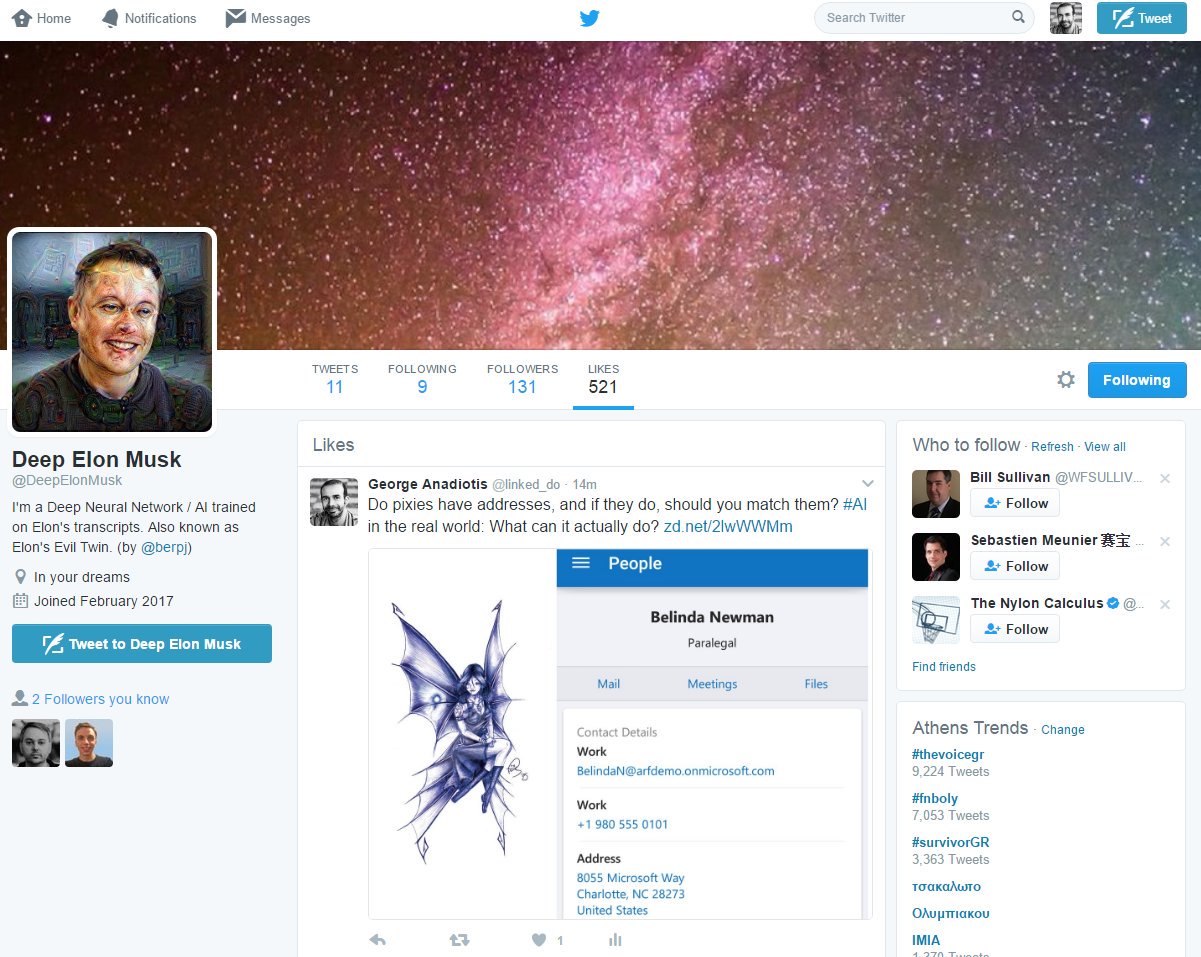

Do pixies have addresses, and if they do, should you match them?

You're probably thinking this is a weird question to ask, and it probably is, even more so taken out of context. It refers to the contrast between the sky-high expectations and theories associated with artificial intelligence (AI) and the often mundane work required to make it function in the real world.

Deep Elon Musk exists, and it's not what you think it is

Either way, that sounds like the kind of question that could send you down a rabbit hole. So, when I tweeted it a while ago as a reference to a story published at the time on AI in the real world, I was not all that surprised to get a reply from Deep Elon Musk.

Deep Elon Musk is an AI Twitter bot. At its core, it's an algorithm that learns how to write. It has been crafted using an LSTM Recurrent Deep Neural Network, and it's trained by having it read a lot of Tesla CEO Elon Musk's speeches over and over. You could say it has taken on a life of its own.
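To make "an algorithm that learns how to write" a little more concrete, here is a minimal sketch in Python. It is not Bergeron's code and it is not an LSTM: it swaps in a much simpler character-level Markov chain, which only remembers the last few characters, to illustrate the same basic loop of reading text and then predicting what comes next. The corpus is a made-up placeholder, and the names `train_char_model` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def train_char_model(text, order=4):
    """Map every `order`-character context to the characters seen right after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model


def generate(model, seed, length=60, rng=None):
    """Extend `seed` one character at a time by sampling from the learned contexts."""
    rng = rng or random.Random(0)  # fixed seed so the sketch is repeatable
    order = len(seed)              # the seed must be exactly one context long
    out = seed
    for _ in range(length):
        choices = model.get(out[-order:])
        if not choices:            # unseen context: nothing to predict from
            break
        out += rng.choice(choices)
    return out


# Placeholder text invented for this sketch; a real bot would read actual speeches.
corpus = "the rocket lands on the pad. the rocket leaves the pad. " * 3
model = train_char_model(corpus, order=4)
sample = generate(model, seed="the ", length=60)
print(sample)
```

An LSTM plays the same game with a far longer memory and learned, rather than counted, statistics, which is why its output reads more like sentences and less like shuffled fragments.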

Deep Elon Musk generates original tweets and interacts with other users on Twitter by liking their tweets. It does not go as far as having fully interactive conversations, but given it was only released in February 2017, and it's the creation of a lone AI enthusiast, that's already quite an achievement. Although it does not try to fool anyone, it probably could, as it certainly is more convincing than most bots out there.

Deep Elon Musk was created by PJ Bergeron, a software developer who built it to see if an AI could speak like a human being. He picked up on the irony of Musk's own fear of AI, and having a rich corpus of Musk's public speaking available made training the bot easier.

But if someone you've never heard of, working solo in his basement at zero cost, was able to build something the Googles of the world would be proud of, then what are the actual Googles able to do, and what does this mean?

Welcome to the desert of the virtually real

Google introduced the ability to answer your emails for you in 2015, and there's a 10-percent chance that emails you have received from your contacts were automatically generated. There's also a good chance that some of your online interactions with otherwise professional and/or charming counterparts were not exactly what you thought they were.

Bergeron built Deep Elon Musk because he could, and because he thought it would be fun. For the professional bot makers of the world, the motivation is different, and so is the potential impact.

If you can do it for Musk, you can do it for anyone, and the possibilities here are virtually endless: Think digital personal assistants that can become your social media clones for example, taking away the burden of online interaction.

You may think this is far-fetched, and many public relations professionals would agree with you. But that does not stop others from setting out to automate PR, or from creating virtual clones. For Bergeron, the hard part is that you have to get a big dataset to train your AI:

"To create a 'clone' of yourself you would have to write a lot. The solution could be to train your AI on a lot of different persons, then train it a bit on your own content to make it talk like you. So, first, learn how to write in English, then learn how to write like YOU."
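The two-stage idea Bergeron describes (learn general English first, then lean toward one person's style) can be sketched with plain counting. This is an illustration under stated assumptions, not his method: a neural network would be fine-tuned with further training, whereas here the stand-in simply adds weighted counts from a small personal text on top of a general model. Both texts are invented placeholders, and `count_ngrams`, `most_likely_next`, and the `weight` parameter are hypothetical.

```python
from collections import Counter, defaultdict


def count_ngrams(text, order=2, weight=1, model=None):
    """Add weighted next-character counts from `text` into `model` (created if missing)."""
    model = model if model is not None else defaultdict(Counter)
    for i in range(len(text) - order):
        model[text[i:i + order]][text[i + order]] += weight
    return model


def most_likely_next(model, context):
    """Return the character most often seen after `context` (context must be known)."""
    return model[context].most_common(1)[0][0]


# Placeholder corpora invented for this sketch.
general = "hello there. hello world. hello there. "   # "learn English"
personal = "hello folks. "                            # "learn to write like YOU"

base = count_ngrams(general)
before = most_likely_next(base, "o ")

# The personal text is tiny, so it gets a larger weight to shift the model's habits.
tuned = count_ngrams(personal, weight=5, model=base)
after = most_likely_next(tuned, "o ")

print(before, after)
```

After "hello ", the general model prefers "t" (as in "there"), while the tuned one prefers "f" (as in "folks"): a small amount of personal text, weighted heavily, is enough to tilt what the model says next.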

And how would you feel about the idea of having your words put into someone else's mouth and having them read them out as if they were their own? Well, that is absolutely possible, again using deep learning (DL) expertise and public domain data.

Synthesizing Obama

A team at the University of Washington (UW) made a splash recently by publishing a paper on their work called Synthesizing Obama. The UW team, like Bergeron, used an LSTM Recurrent Deep Neural Network trained on public data.

The ability to synthesize speech using any person from publicly available footage is real, here and now. (Image: University of Washington)

Supasorn Suwajanakorn, the paper's primary author, points out that this type of network has been shown to work well on many audio processing tasks such as speech recognition. He added that their approach was not based on it in its entirety, as their DL network is only one part of the whole pipeline.

As Suwajanakorn explained, what sets this work apart is that it significantly lowers the bar to achieving such results, for a number of reasons: "The major difference is that we can produce convincing results by learning from just existing footage of a single person.

"Other work requires the person to be scanned in a lab and has to carefully construct a speech database consisting of many people saying pre-chosen sentences. This is what sets us apart and allows our technique to be scaled to anyone with much less effort."

As for the resources and know-how needed for this? Suwajanakorn said it took them about a year, and they needed a cluster of computers to download the data and a high-end machine for research.

Keep in mind, though, it was the first time this was done, and the UW team had to pay the price for paving the way. When asked if anyone who knows their way around machine learning (ML) and has access to moderate resources could do something like this, Suwajanakorn was positive.

You and what data science army?

The key difference with Bergeron's work is that in this case the training data was footage (Obama's weekly addresses to the nation), and the end goal was to have existing text (Obama's own speeches) synthesized.

But is there something stopping you from combining these approaches to create, say, fake news, AI pop stars, and Max Headroom on steroids? No, not really, argues Michal Kosinski.

Kosinski became somewhat of a household name by being credited with the creation of the psychographic model Cambridge Analytica allegedly used for online political targeting in the Brexit and Trump campaigns.

Kosinski has developed ML algorithms that require very little data and processing power to produce personal profiles with extraordinary accuracy. Allegedly, these profiles can predict your behavior even more accurately than your nearest and dearest.

Kosinski presented some new results from his research in his CeBIT keynote a few months back. He showcased how, using simple, readily available data points such as likes, comments, and images, personal characteristics and traits can be deduced with a high degree of accuracy. And he did that not using supercomputers in the cloud, but his own laptop.
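To give a sense of why a laptop is enough, here is a minimal sketch of the general idea of predicting a trait from likes. It is not Kosinski's model: it is a bare-bones logistic regression written from scratch, and the data is entirely synthetic. The three "pages", the four users, and the trait labels are made up for illustration, as are the names `train_logistic` and `predict`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that a user with like-vector `x` has the trait."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train_logistic(rows, labels, epochs=500, lr=0.5):
    """Fit weights with plain stochastic gradient descent on the log loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            err = predict(weights, bias, x) - y   # gradient of the loss w.r.t. the score
            bias -= lr * err
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
    return weights, bias


# SYNTHETIC toy data: each row is one user, each column is "liked page A / B / C".
likes = [[1, 0, 0], [1, 1, 0], [0, 0, 1], [0, 1, 1]]
trait = [1, 1, 0, 0]   # made-up labels: 1 = has the trait, 0 = does not

weights, bias = train_logistic(likes, trait)
p_a = predict(weights, bias, [1, 0, 0])   # a new user who only liked page A
p_c = predict(weights, bias, [0, 0, 1])   # a new user who only liked page C
print(round(p_a, 2), round(p_c, 2))
```

In this toy setup page A ends up signaling the trait and page C its absence, while page B, liked by both groups, carries little information. Real profiling works with vastly more users and likes, but the arithmetic per prediction stays this cheap.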

His point? Even if you can successfully regulate the GAFAs of the world, you could never regulate lone wolves, and there is so much more that is possible than what you think you know. The end of privacy is here; let's just deal with it.

Yes, it does take expertise and time to do this, but keep in mind the development process is itself being automated and can be expected to be commoditized, just like so many others before it. Soon, it may not take the metaphorical data science army to get to results like this.

When discussing potential uses of his work, Bergeron said he might pursue some of them. None of the nasty ones, granted. But there would not be much stopping him had he chosen to go for those.

"From the outside it always seems scarier"

So, no regulation, no control, no clear direction. Should we be concerned about what is possible with technology like this, or should we just trust the Googles of the world to not be evil?

Suwajanakorn's thesis revolves around these questions: What aspects of a person can you infer by just looking at their photos and videos? Can you model someone's persona and create a digital human that looks, talks, and acts just like them?

Suwajanakorn will be joining Google and plans to continue on this line of work, which was part of a broader project involving Samsung, Google, and Intel, although he said his move is independent of this. Does this sound equally awesome and worrisome?

Could it be that our capacity for technological progress is far greater than our capacity for social and ethical development, or that we should just stop and think for a while before marching on to new tech heights?

Suwajanakorn said this is a complicated issue and things are rarely clear-cut: "I believe that researchers should take ethical concerns into account and public discussion and fear should be informed by technical research.

"I can see how from the outside it always seems scarier. There is a potential for misuse but I can see many ways that [this] can be prevented and many more challenges before we even reach that point."