Another take on Vista vs. XP benchmarks

As anyone who’s ever worked in a PC performance lab knows, the #1 rule of benchmarking is: Your mileage may vary.

I remembered that rule when I read my colleague Adrian Kingsley-Hughes’ epic account of his benchmark tests of Windows Vista SP1 versus Windows XP SP2 (Part 1 and Part 2). The first thing that struck me was how far apart his numbers were from those I was getting. In fact, I went back and redid all my tests to confirm that I hadn’t missed anything along the way. They checked out completely. On my test bed, with only one exception, Vista SP1 was consistently as fast as or faster than XP SP2, a result markedly at odds with Adrian’s findings.

I have no doubt that Adrian's tests and timings were accurate, just as mine were. So what’s the difference?

For starters, our test beds were very different:

- Adrian chose a desktop system with a first-generation Intel dual-core processor, the 3.4 GHz Pentium D 950. I chose a Dell Inspiron 6400 notebook with a 1.6 GHz Intel Core Duo T2050 processor. (I bought this system in December 2006, a few weeks after Vista was released to business customers. It originally came with XP SP2 installed on it, and I upgraded the system to Vista almost immediately.)

- I chose to use a dual-boot configuration, designing my tests carefully so that file copy operations with each OS were done between the same source and destination volumes to minimize the effects of disk geometry on performance. Adrian used separate hard drives for each OS and each file copy operation.

- I used a wireless 802.11g network connection. Adrian used wired Gigabit Ethernet connections.

- Neither of us performed any special optimizations to either configuration except to ensure that drives were defragmented.

For my test files, I chose the same two groups of files I had used in previous rounds of performance testing last year. The first consisted of two large ISO files, each containing the contents of a ready-to-burn DVD, with a total size of 4.2 GB. The second was a collection of music files, just over 1 GB in size, consisting of 272 MP3 files in 16 folders.

As it turns out, the test bed I chose is one that matches nicely with a lot of real-world business-class systems. Notebooks represent the majority of the PC market these days, and the 802.11g connection in this one is by far the most popular networking option on portable PCs. From a performance standpoint, it's neither a speed demon nor a slug. More importantly, this system's specs match those that Microsoft's engineers had in mind when they reengineered the file copy engine, first in Vista RTM and then again in SP1. As Mark Russinovich notes in his detailed description of these changes, copies over high-latency networks such as WLANs are especially likely to benefit from the changes in Vista.

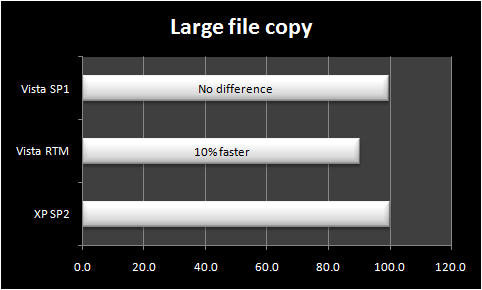

I ran each test multiple times and took the average of at least three tests. The graphs shown here are normalized, with Windows XP SP2 set to 100 and the results for Vista SP1 and Vista RTM charted proportionally.
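For readers who want to reproduce this kind of chart, the averaging and normalization step can be sketched in a few lines of Python. This is only an illustration, not the spreadsheet I actually used: the function name `normalize` is hypothetical, the timings are made-up placeholders rather than measured results, and the sketch assumes each value is an elapsed time in seconds, so a lower normalized score means a faster result.

```python
from statistics import mean


def normalize(timings, baseline="XP SP2"):
    """Average each OS's runs, then scale so the baseline's average is 100."""
    averages = {os_name: mean(runs) for os_name, runs in timings.items()}
    base = averages[baseline]
    return {
        os_name: round(100 * avg / base, 1)
        for os_name, avg in averages.items()
    }


# Placeholder timings in seconds (invented for illustration, NOT measured data).
sample = {
    "XP SP2": [100.0, 102.0, 98.0],
    "Vista RTM": [120.0, 118.0, 122.0],
    "Vista SP1": [90.0, 91.0, 89.0],
}

print(normalize(sample))
```

With elapsed times as input, any OS that scores below 100 finished the job faster than XP SP2, and any OS above 100 was slower.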


As you can see, several file operations took essentially the same time on Vista SP1 and XP SP2. (Other scenarios I tested but didn't chart, such as a copy from a local hard disk to an external USB drive, were just as close.) My numbers matched up with Adrian's only on the Zip test, which measured how long it took to stuff 1 GB of files into a compressed archive. Vista is slower at this task than XP.


So, why did my results vary from those that Adrian reported?

One thing I noticed early on is that it’s easy to overlook the background tasks that Windows Vista performs immediately after the SP1 upgrade. These tasks are the same as those that occur after a clean install. When I first performed the small file copy test, for instance, I got wildly inconsistent results. Task Manager, which shows the CPU usage associated with each running process, showed no active tasks. But as it turned out, the system was busy. The Disk section in Vista’s Reliability and Performance Monitor explained the differences: Vista’s Search Indexer, SuperFetch, and ReadyBoost were all reading from and writing to the disk in the background. The green lines indicate CPU and disk activity in the side-by-side graphs. Note that CPU usage is practically nonexistent, while each task was using a polite but measurable amount of disk resources; the collective impact was enough to steal as much as 10 MB/sec of disk performance.

When I re-ran the tests after making sure that these tasks were no longer running in the background, I got the consistent results shown here.

I agreed completely with a remark Adrian tossed off almost as an aside in the middle of Part 2 of his report:

[O]ddly enough, Vista SP1 felt more responsive [than XP SP2] to user inputs such as opening applications and saving files while the tasks were being performed (we tried this out on separate runs). Problem is that it’s darn hard to measure this end responsiveness without relying more on synthetic benchmarks.

My experience is the same. In fact, it appears that Vista’s designers have made a conscious choice to favor smooth, consistent performance over raw speed. The latter makes for more satisfying benchmarks, but it can also result in annoying performance glitches in day-to-day use.

So, was that the right design decision? Is file copying really a critical performance benchmark? If it takes me 10 seconds more or less to copy a group of files, I truly don't care. For mainstream business use, there's no practical difference between a job that takes 5:52 and one that takes 6:18, especially when the copy operation takes place in the background while I busy myself with other work.

Ultimately, the act of benchmarking file copy operations is distinctly unnatural. Both Adrian and I sat for hours clicking a stopwatch and staring at Windows Explorer windows as we waited for progress dialog boxes to close. We're both a little obsessive like that. Your mileage may vary.