Amazon's N. Virginia EC2 cluster down, 'networking event' triggered problems

Updated: Amazon Web Services is seeing connectivity problems, latency and errors in its Northern Virginia data center. The outage, which continued for hours Thursday, may highlight weaknesses in the company's availability zone architecture.

The outage is a big hassle for customers such as Quora, Foursquare and Hootsuite, which have bet on Amazon to host their services. For Amazon Web Services, the outage may raise questions about its redundancy design. AWS is architected so that separate zones within a region back each other up. These "availability zones" are supposed to ensure redundancy, but failed to do so in this case.

When you get an AWS computing resource, it’s assigned to a region. Regions include U.S. (east and west), EU (Ireland) and Asia Pacific (Singapore). Each region includes at least three availability zones, which are, roughly speaking, data center hubs. AWS is architected so two availability zones can fail concurrently and data is still protected. Amazon’s aim is to eliminate any single point of failure, because IT fails all the time. AWS recommends that customers spread their assets across multiple availability zones in a region.
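To make that recommendation concrete, here is a minimal Python sketch of spreading instances across zones and checking what survives a zone failure. It is an illustration only and does not call any AWS API; the zone labels, instance names and both helper functions are hypothetical.

```python
from collections import Counter
from itertools import cycle


def spread_across_zones(instance_ids, zones):
    """Assign each instance to a zone in round-robin order."""
    zone_cycle = cycle(zones)
    return {instance_id: next(zone_cycle) for instance_id in instance_ids}


def surviving_capacity(placement, failed_zones):
    """Count the instances that remain if the given zones fail."""
    failed = set(failed_zones)
    return sum(1 for zone in placement.values() if zone not in failed)


# Hypothetical zone labels and instance names, for illustration only.
zones = ["zone-a", "zone-b", "zone-c"]
instances = [f"web-{n}" for n in range(6)]

placement = spread_across_zones(instances, zones)
per_zone = Counter(placement.values())

print(per_zone)
print(surviving_capacity(placement, ["zone-a"]))
```

With six instances spread evenly over three zones, losing one zone leaves four instances running. A deployment that put all six in a single zone would lose everything if that zone failed.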

Amazon on Thursday explained why its availability zone failed:

We'd like to provide additional color on what we're working on right now (please note that we always know more and understand issues better after we fully recover and dive deep into the post mortem). A networking event early this morning triggered a large amount of re-mirroring of EBS volumes in US-EAST-1. This re-mirroring created a shortage of capacity in one of the US-EAST-1 Availability Zones, which impacted new EBS volume creation as well as the pace with which we could re-mirror and recover affected EBS volumes. Additionally, one of our internal control planes for EBS has become inundated such that it's difficult to create new EBS volumes and EBS backed instances. We are working as quickly as possible to add capacity to that one Availability Zone to speed up the re-mirroring, and working to restore the control plane issue. We're starting to see progress on these efforts, but are not there yet. We will continue to provide updates when we have them.

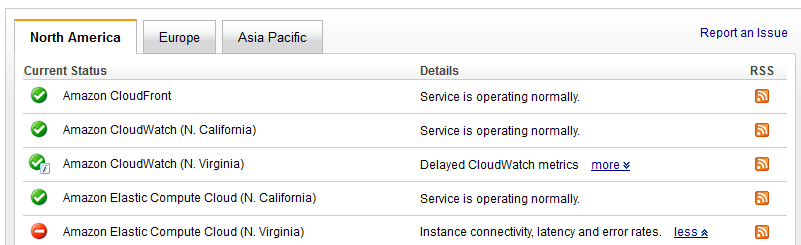

AWS' dashboard highlights a struggle at the moment. Here's the recap:

1:41 AM PDT We are currently investigating latency and error rates with EBS volumes and connectivity issues reaching EC2 instances in the US-EAST-1 region.

2:18 AM PDT We can confirm connectivity errors impacting EC2 instances and increased latencies impacting EBS volumes in multiple availability zones in the US-EAST-1 region. Increased error rates are affecting EBS CreateVolume API calls. We continue to work towards resolution.

2:49 AM PDT We are continuing to see connectivity errors impacting EC2 instances, increased latencies impacting EBS volumes in multiple availability zones in the US-EAST-1 region, and increased error rates affecting EBS CreateVolume API calls. We are also experiencing delayed launches for EBS backed EC2 instances in affected availability zones in the US-EAST-1 region. We continue to work towards resolution.

3:20 AM PDT Delayed EC2 instance launches and EBS API error rates are recovering. We're continuing to work towards full resolution.

4:09 AM PDT EBS volume latency and API errors have recovered in one of the two impacted Availability Zones in US-EAST-1. We are continuing to work to resolve the issues in the second impacted Availability Zone. The errors, which started at 12:55 AM PDT, began recovering at 2:55 AM PDT.

5:02 AM PDT Latency has recovered for a portion of the impacted EBS volumes. We are continuing to work to resolve the remaining issues with EBS volume latency and error rates in a single Availability Zone.

6:09 AM PDT EBS API errors and volume latencies in the affected availability zone remain. We are continuing to work towards resolution.

6:59 AM PDT There has been a moderate increase in error rates for CreateVolume. This may impact the launch of new EBS-backed EC2 instances in multiple availability zones in the US-EAST-1 region. Launches of instance store AMIs are currently unaffected. We are continuing to work on resolving this issue.

7:40 AM PDT In addition to the EBS volume latencies, EBS-backed instances in the US-EAST-1 region are failing at a high rate. This is due to a high error rate for creating new volumes in this region.

8:54 AM PDT We'd like to provide additional color on what we're working on right now (please note that we always know more and understand issues better after we fully recover and dive deep into the post mortem). A networking event early this morning triggered a large amount of re-mirroring of EBS volumes in US-EAST-1. This re-mirroring created a shortage of capacity in one of the US-EAST-1 Availability Zones, which impacted new EBS volume creation as well as the pace with which we could re-mirror and recover affected EBS volumes. Additionally, one of our internal control planes for EBS has become inundated such that it's difficult to create new EBS volumes and EBS backed instances. We are working as quickly as possible to add capacity to that one Availability Zone to speed up the re-mirroring, and working to restore the control plane issue. We're starting to see progress on these efforts, but are not there yet. We will continue to provide updates when we have them.

10:26 AM PDT We have made significant progress in stabilizing the affected EBS control plane service. EC2 API calls that do not involve EBS resources in the affected Availability Zone are now seeing significantly reduced failures and latency and are continuing to recover. We have also brought additional capacity online in the affected Availability Zone and stuck EBS volumes (those that were being remirrored) are beginning to recover. We cannot yet estimate when these volumes will be completely recovered, but we will provide an estimate as soon as we have sufficient data to estimate the recovery. We have all available resources working to restore full service functionality as soon as possible. We will continue to provide updates when we have them.

11:09 AM PDT A number of people have asked us for an ETA on when we'll be fully recovered. We deeply understand why this is important and promise to share this information as soon as we have an estimate that we believe is close to accurate. Our high-level ballpark right now is that the ETA is a few hours. We can assure you that all-hands are on deck to recover as quickly as possible. We will update the community as we have more information.

12:30 PM PDT We have observed successful new launches of EBS backed instances for the past 15 minutes in all but one of the availability zones in the US-EAST-1 Region. The team is continuing to work to recover the unavailable EBS volumes as quickly as possible.
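The updates above describe volume creation failing in one zone while other zones recover. One way a customer might cope is to try zones in order and use the first one that works. The Python sketch below shows that idea under stated assumptions: `create_in_first_healthy_zone` and `fake_create_volume` are hypothetical names, the zone labels are made up, and the stand-in function only pretends to be a provider call. It is not AWS's API or a recommended implementation.

```python
def create_in_first_healthy_zone(create_volume, zones):
    """Try each zone in order; return (zone, result) for the first success."""
    errors = {}
    for zone in zones:
        try:
            return zone, create_volume(zone)
        except Exception as exc:  # a real client would catch narrower errors
            errors[zone] = exc
    raise RuntimeError(f"volume creation failed in every zone: {sorted(errors)}")


def fake_create_volume(zone):
    """Stand-in for a provider call; pretends one zone is impaired."""
    if zone == "zone-a":
        raise ConnectionError("elevated error rate")
    return f"vol-in-{zone}"


zone, volume = create_in_first_healthy_zone(
    fake_create_volume, ["zone-a", "zone-b", "zone-c"]
)
print(zone, volume)
```

Because an EBS volume can only be attached to an instance in the same availability zone, falling back like this helps only when the instance can also be launched in the fallback zone.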

These problems in AWS’ N. Virginia facility have led to other issues with its Relational Database Service and Elastic Beanstalk.