On the other hand (re: Java-based client-side computing)...

If you've been following this blog for the last couple of days, then you know that I've been exploring (here and here) whether now may be a good time to re-examine the idea of developing some sort of standard thin (or rich) client architecture. By standard, I mean, at bare minimum, a collection of three technologies that developers of Internet-based software can count on being there, on the client side, all the time: a browser (support for HTML, JavaScript, etc.), a secure execution environment that's normally plug-in-based (e.g., a Java Virtual Machine or a Flash engine), and removable storage (e.g., a USB key, Compact Flash card, or SmartCard) so that the last known state of your computing environment can securely persist on your key chain or in your wallet instead of on a heavy device you have to carry with you.

The posts have provoked some passionately argued comments for and against the idea of thin client computing, and some have rightfully questioned the viability of Java on the client. That's because my posts assumed a best-case scenario where Java is non-problematic, which it isn't. For example, citing a Java-based time-clock application from ADP, a ZDNet reader going by the alias JPR75 wrote:

I can't imagine a Java based RDBMS. Our hourly people at work use a web based time clock application by ADP. The app itself is very well constructed, but managing it (updating/changing employee clockings) is slooow. We have a broadband connection at work for the Internet which is quite fast, nevertheless, this app is slow.....Until we see faster web applications and faster Java (period), it scares me to think that everything could be Java and web based.

Based on his experience with the same application, another ZDNet reader, Justin James, concurs:

I had to use that app with one employer as well. Nothing like being marked "late" despite arriving 10 minutes early! It took about a week for management to discover that the application was completely unreliable for determining clock in/out times, as the Java applet was painfully slow to load and run.

Francois Orsini, who has been handling all the objections on behalf of Sun, responded:

Saying that Java is slow is everything but factual - there are many apps out there running slow over the web without involving Java....Once JavaDB is started in the application JVM context, all interactions are local and extremely fast.

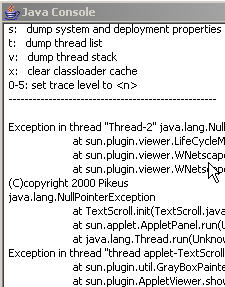

Bear in mind that "showing the console" is a JRE option that I have checked because my preference is for a chatty system: one that is set to tell me what the heck is going on when its resources suddenly get swallowed whole by some bursty application. Most users wouldn't see the console (or its unfriendliness). But in the larger context of the local execution environment question, what is preloaded and ready to run matters a great deal. Preload nothing and there will be some delay the first time some code (or plug-in) executes. Have you ever noticed how slowly your first PDF document loads? Once Adobe's Acrobat Reader plug-in is loaded, subsequent PDFs load much faster. This is where that discussion about the client's girth comes in.

A relatively svelte client might consist of nothing but a browser and support for removable storage. If a user walks up to that client, the need for and type of local execution environment is determined at runtime and pulled in, plug-in style, off the user's USB key. But being able to dynamically load any runtime environment (Java, Flash, etc.) requires a lot of overhead, and that overhead translates into client girth. To eliminate it, the architecture could give up the flexibility, settle on one execution environment (e.g., Java or Flash), embed it to the exclusion of others, and preload it to speed up load times. But which of these historically plug-in-based technologies need to be in every thin client to guarantee a seamless and speedy Internet experience? Java? Flash? Acrobat? Real? Perhaps Java or Flash can accommodate Acrobat- and Real-formatted content.

That leads to the next question: what else needs to be preloaded? For example, are certain Java classes (e.g., JavaDB for data persistence and synchronization) more likely than others to get used every time a user sticks a USB key into some kiosk in an airport, and should those classes be preloaded to speed up execution? The more preloads we pile into a standard thin client architecture, the less likely it is that we can continue to call it thin, or even rich. Pretty soon, we're right back where we started, with a full-blown OS.
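
The first-use penalty and the preload trade-off can be illustrated with a small sketch. It assumes a hypothetical `RuntimeLoader` that initializes a runtime (a JVM, a Flash engine, a PDF plug-in) the first time something asks for it and caches it afterward; `preload()` models the "embed one runtime and preload it" option. The names and the `time.sleep` stand-in for startup cost are illustrative only, not any real browser's plug-in API.

```python
import time
from typing import Callable, Dict, Iterable, List


class RuntimeLoader:
    """Hypothetical loader: initializes a runtime on first use, then serves it from a cache."""

    def __init__(self, factories: Dict[str, Callable[[], object]]):
        self._factories = factories          # name -> function doing the expensive startup
        self._loaded: Dict[str, object] = {}
        self.init_count = 0                  # how many expensive startups actually ran

    def get(self, name: str) -> object:
        # Only the first request pays the startup cost; later requests hit the cache.
        if name not in self._loaded:
            self._loaded[name] = self._factories[name]()
            self.init_count += 1
        return self._loaded[name]

    def preload(self, names: Iterable[str]) -> None:
        # Pay the cost up front (e.g. at boot) so the user never waits for these runtimes.
        for name in names:
            self.get(name)

    def resident(self) -> List[str]:
        return sorted(self._loaded)


def slow_start(label: str) -> Callable[[], str]:
    def factory() -> str:
        time.sleep(0.01)  # stand-in for JVM or plug-in startup time
        return label
    return factory


loader = RuntimeLoader({"java": slow_start("jvm"),
                        "flash": slow_start("flash"),
                        "pdf": slow_start("pdf")})
loader.preload(["java"])   # the "embed one runtime and preload it" option
loader.get("pdf")          # first PDF: pays the load cost
loader.get("pdf")          # second PDF: served from the cache
print(loader.resident(), loader.init_count)  # ['java', 'pdf'] 2
```

Every name passed to `preload()` is paid for up front and stays resident whether or not the user needs it, which is the girth problem in miniature.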

My point is that the observations ZDNet's readers have made about the realities of thin or rich client computing are not to be dismissed. But, getting back to the glass being half full or half empty, that doesn't mean the challenges are insurmountable. Architectural decisions would have to be made, prototypes built, and concepts proven. Take all the pushback on synch, for example. I made the argument that JavaDB could be used to persist user data within the context of a disconnected browser. The advantage of such persistence is that end users could continue to work on their data and documents with browser-based applications in a thin client environment even if the browser wasn't connected to the Web (the lack of such offline capability is one of the leading objections to moving away from thick clients to Web-based end-user applications). Then, when the connection returned, those locally persisted data and documents could be synched up with the user's central storage repository on the Internet.
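
Here is a minimal sketch of the persist-locally-then-synch idea, assuming a hypothetical `CentralRepository` that raises `ConnectionError` when unreachable and a dictionary standing in for the local store (the role the post imagines for JavaDB). Edits always succeed locally and are queued; `sync()` replays them when the connection comes back.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CentralRepository:
    """Hypothetical stand-in for the user's central storage on the Internet."""
    docs: Dict[str, str] = field(default_factory=dict)
    online: bool = True

    def put(self, doc_id: str, body: str) -> None:
        if not self.online:
            raise ConnectionError("repository unreachable")
        self.docs[doc_id] = body


@dataclass
class OfflineClient:
    """Keeps working copies locally and replays changed documents when the link returns."""
    repo: CentralRepository
    local: Dict[str, str] = field(default_factory=dict)   # local store (JavaDB's role in the post)
    pending: List[str] = field(default_factory=list)      # ids changed since the last successful sync

    def save(self, doc_id: str, body: str) -> None:
        # The local write always succeeds, connected or not.
        self.local[doc_id] = body
        if doc_id not in self.pending:
            self.pending.append(doc_id)
        self.sync()

    def sync(self) -> int:
        """Push pending documents in order; on the first failure, keep the rest queued."""
        pushed = 0
        while self.pending:
            doc_id = self.pending[0]
            try:
                self.repo.put(doc_id, self.local[doc_id])
            except ConnectionError:
                break
            self.pending.pop(0)
            pushed += 1
        return pushed


repo = CentralRepository()
client = OfflineClient(repo)
repo.online = False
client.save("notes.txt", "draft written on the plane")
print(client.pending, repo.docs)   # ['notes.txt'] {}
repo.online = True
client.sync()
print(client.pending, repo.docs)   # [] {'notes.txt': 'draft written on the plane'}
```

This sketch is last-write-wins: it pushes the local copy without checking whether the server copy changed in the meantime, which is exactly where the synch pushback bites.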

Via AJAX programming, Google Mail has autosave, for example. Justin James pooh-poohs AJAX. But let's face it: for all of its faults, AJAX is solving a very real problem and adding to Google Mail's usability. Now suppose your connection goes down. Where do the autosaves go? What happens if you click send? Thousands of people use Gmail. Is it wrong to assume that, just as they like autosave (I like it; I wish WordPress had it), they might like the ability to work offline? No.
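
One plausible answer to "where do the autosaves go?" and "what happens if you click send?" is a local draft store plus an outbox. The sketch below assumes a hypothetical `OfflineMailbox` and a `transport` function that raises `ConnectionError` while offline; it illustrates the idea and is not how Gmail actually works.

```python
from typing import Callable, Dict, List


class OfflineMailbox:
    """Hypothetical webmail state: autosaved drafts plus an outbox that survives a dropped link."""

    def __init__(self, transport: Callable[[dict], None]):
        self.transport = transport        # delivers one message, or raises ConnectionError
        self.drafts: Dict[str, str] = {}  # draft id -> latest autosaved text
        self.outbox: List[dict] = []      # messages the user sent that are not delivered yet
        self.sent: List[dict] = []

    def autosave(self, draft_id: str, text: str) -> None:
        # Autosaves land in local storage, so nothing is lost while the network is down.
        self.drafts[draft_id] = text

    def send(self, draft_id: str, to: str) -> None:
        # Clicking send moves the draft to the outbox, then tries to deliver.
        message = {"to": to, "body": self.drafts.pop(draft_id)}
        self.outbox.append(message)
        self.flush()

    def flush(self) -> None:
        # Deliver in order; anything undeliverable stays queued for the next attempt.
        while self.outbox:
            try:
                self.transport(self.outbox[0])
            except ConnectionError:
                return
            self.sent.append(self.outbox.pop(0))


network_up = False


def transport(message: dict) -> None:
    if not network_up:
        raise ConnectionError("offline")


box = OfflineMailbox(transport)
box.autosave("d1", "Running late, ")
box.autosave("d1", "Running late, landing at 6.")
box.send("d1", "team@example.com")
print(len(box.outbox), len(box.sent))  # 1 0
network_up = True
box.flush()
print(len(box.outbox), len(box.sent))  # 0 1
```

Keeping the outbox ordered means messages go out in the sequence the user sent them once the connection returns.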

Now, you could take the glass-half-empty approach, dismiss both the need and the opportunity to innovate, and do nothing. Or you could do what Morfik did, taking the glass-half-full view when it applied its "web applications unplugged" approach to Gmail with its Gmail Desktop. Thanks to ZDNet reader MikeyTheK for pointing it out when he said "Morfik has a sample application called Morfik Gmail that I've been playing with. It allows me to take gmail on the road - on an airplane, or wherever, and use the gmail interface to handle all my gmail." Last September, Morfik's Dr. Martin Roberts was here on ZDNet extolling the virtues of his platform as well.

Other proofs of concept, where someone saw the glass half full, exist too. Julien Couvreur has been working on something he calls TiwyWiki, which, like the aforementioned Gmail Desktop, is a Web app that works even when the Web is unplugged. Via email, Couvreur wrote:

It can run entirely from the browser cache when you are disconnected and it will sync your changes when you go back online. The server isn't very smart about versioning and merging, but it's only a server limitation that could be addressed.
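
Couvreur's point about versioning can be made concrete with optimistic concurrency control, a common technique (not necessarily what TiwyWiki does): each page carries a version number, an offline edit remembers the version it started from, and the server refuses a write whose base version is stale. `VersionedServer` and `Conflict` are hypothetical names used only for this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


class Conflict(Exception):
    """Raised when an offline edit started from a version the server has since replaced."""


@dataclass
class VersionedServer:
    pages: Dict[str, Tuple[int, str]] = field(default_factory=dict)  # page -> (version, text)

    def read(self, page: str) -> Tuple[int, str]:
        return self.pages.get(page, (0, ""))

    def write(self, page: str, base_version: int, text: str) -> int:
        current_version, _ = self.read(page)
        if base_version != current_version:
            # Someone else synced first; accepting this write would silently drop their edit.
            raise Conflict(f"{page}: edited from v{base_version}, server is at v{current_version}")
        self.pages[page] = (current_version + 1, text)
        return current_version + 1


server = VersionedServer()
base, _ = server.read("HomePage")               # laptop and kiosk both go offline at version 0
server.write("HomePage", base, "laptop edit")   # laptop reconnects first -> version 1
try:
    server.write("HomePage", base, "kiosk edit")
except Conflict as err:
    print(err)  # HomePage: edited from v0, server is at v1
```

Detecting the conflict is the easy half; deciding how to merge the two edits is application-specific, and it is the part Couvreur describes as most of the pain.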

TiwyWiki is actually one step closer than Morfik's offering to the world I'm thinking about, because it relies on something that's relatively ubiquitous: Flash. With the exception of one comment about his choice of Flash 8, most of the comments about his innovation (on his blog, which describes the architecture in detail) read like rave reviews. But in that email, Couvreur also acknowledged the potential of something like JavaDB to handle the persistence of data:

The client-side storage is primitive (get/set, implemented in Flash), and a richer storage (relational store?) would definitely help. From my experience so far, I'd say the synchronization issue is a much larger concern. In the thick client world, I have seen many database implementations and I'm sure we can migrate some of these to the browser world (using Java or Flash). But even in that world, I don't know of any good synchronization framework for occasionally disconnected applications.

Synch is a difficult problem. Having written an offline AJAX prototype (TiwyWiki), I can attest that the synchronization is most of the pain. Somewhat usable error handling of all the scenarios would come second. That's not to say that improvements in client-side storage wouldn't matter though: I'd love to see a richer API than [Flash's] getValue and setValue....Why not start an offline AJAX discussion group or mailing list?
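
To show what a richer API than getValue/setValue might buy, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a relational client-side store such as JavaDB. It is not JavaDB's API, and the `pages` schema and helper functions are hypothetical. Tracking a `dirty` flag in a table turns "what still needs to be synched?" into a single query, and wrapping each edit in a transaction keeps the edit and its flag consistent.

```python
import sqlite3

# sqlite3 stands in for a relational client-side store such as JavaDB; the schema is hypothetical.
db = sqlite3.connect(":memory:")
db.execute("""CREATE TABLE pages (
                  name    TEXT PRIMARY KEY,
                  body    TEXT NOT NULL,
                  version INTEGER NOT NULL,
                  dirty   INTEGER NOT NULL)""")


def edit_page(name: str, body: str) -> None:
    with db:  # one transaction: the edit and its "needs synch" flag are stored together
        cur = db.execute("UPDATE pages SET body = ?, dirty = 1 WHERE name = ?", (body, name))
        if cur.rowcount == 0:
            db.execute("INSERT INTO pages (name, body, version, dirty) VALUES (?, ?, 0, 1)",
                       (name, body))


def pages_to_sync() -> list:
    # With plain get/set you would enumerate and inspect every key yourself;
    # here the synch queue is a single query.
    return [row[0] for row in db.execute("SELECT name FROM pages WHERE dirty = 1 ORDER BY name")]


def mark_synced(name: str, new_version: int) -> None:
    with db:
        db.execute("UPDATE pages SET dirty = 0, version = ? WHERE name = ?", (new_version, name))


edit_page("HomePage", "hello")
edit_page("Ideas", "offline AJAX forum")
mark_synced("HomePage", 1)
edit_page("Ideas", "offline AJAX forum, Mashup Camp 2")
print(pages_to_sync())  # ['Ideas']
```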

Ask and you shall receive. Given the opportunity to collaborate, I'm guessing that these and other questions can be vetted and progress can be made in terms of innovation. Because the discussion is relevant to mashups, I've established a forum called Offline AJAX and Web Apps Unplugged and am hosting it on the Mashup Camp forum server. The invitation is open to Julien, Sun's Francois Orsini, Brad Neuberg, and anybody else who thinks they can contribute. Additionally, those who join the conversation and would like to continue it in the physical world (as well as the virtual one) are welcome to do so at the next Mashup Camp (Mashup Camp 2). It's free to attend and scheduled to take place in Silicon Valley on July 12. See the mashupcamp.com Web site for details on how to sign up.