Singularity: Technology, spirituality and the close box

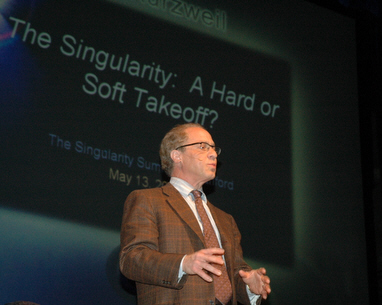

Ray Kurzweil responded to various critiques and questions and stuck to his PowerPoint slides of data points that convey his Singularity theory. He emphasized that, because of the accelerating pace of change, technology will be able to solve all problems, from the practical problems of climate change and energy efficiency to cutting poverty and disease and bridging the digital divide, but only through the scale the new technologies will bring.

On radical life extension, including immortality, Kurzweil said that mastering and reprogramming our health processes through biotechnology is on the horizon. In the post-information era, he said, biology and medicine are about reprogramming biology to eliminate health problems. "Within 15 years [we will] add a year to life expectancy every year," he predicted, adding that longer lives serve what he called the "real goal of life," which "is to expand human knowledge."

He added that "creating communities is what holds people together and enhances human relationships, and I would like more time to partake of that."


Kurzweil's notion of community includes the more extreme concept of uploading the contents of a brain onto a computing substrate. It's difficult to imagine the social dynamics of communities with computational intelligences as separate entities, fully rendered proxies for a biological being, or some merged entity with one million times the speed of human brain function.

"The whole uploading idea isn't necessary," Kurzweil said. "The idea is to pass the Turing test. We don't need your body and brain...we have this new, better one in a computing substrate. We can observe and scan from inside and see what's going on in sufficient detail....One can make an argument that it is a different person, but this scenario [is] not integral. We are talking about capturing strong AI, and it's becoming less narrow over time." The aim, he suggested, is to integrate computation with who we are, for greater convenience.

Kurzweil dismissed Douglas Hofstadter's critique, saying that there are some limitations to the accelerating pace of technology, but they are "not very limiting."

As a final question, the panelists were asked whether they thought the shift to the Singularity would have a happy ending. The majority of panelists were optimistic or weren't willing to guess. Predictions of when human-level machine intelligence would exist ranged from 2029 (Kurzweil) to 2100 (Hofstadter, although he said he is not a 'futurist'). Kurzweil also doesn't believe that even a world catastrophe, which would of course be painful, would disrupt the trends on which the Singularity is based.

Eliezer Yudkowsky of the Singularity Institute for Artificial Intelligence doesn't believe that the arrival of human-level AI can be predicted, even if we know how to build real AI, how much work is involved, and which organizations and people are doing it. "We have to be careful not to mistake our ignorance for knowledge about it," he said, adding that AI doesn't fit into a Moore's Law expression. "We need more public funding for specialists who can think about these things full time," Yudkowsky said.

Kurzweil responded that hardware and software are two sides of the problem. On the software side, 10 to the 16th calculations per second should be sufficient to reach human-level intelligence. "It's a matter of getting to the right level of representation of brain regions...it's a fairly complex system but at a level we can handle." Hardware will follow Moore's Law and continue to deliver cheaper, faster, smaller, cooler systems.

Author Bill McKibben, who was remotely connected to the Summit from his country home in the Adirondacks, advised the Singularists to slow down. "One of the ways we fool ourselves is thinking the past is a good predictor of the future. It's a natural human tendency to extrapolate out. That's possible, and it's how lots of people lose their shirts in the stock market. The other possibility is that there are threshold points at which it makes sense to say enough for now. We are clearly at one of those points," McKibben said.

He went on to say that in the last 150 years things have gotten faster, and the physical and social disintegration of the world is all around us. "We are living in a society with increasing depression. In the real world, to the degree that it's possible, [we should] slow down the progress of technological change and instead think hard about how to summon human abilities and try to bring them to the fore."

Cory Doctorow made the important point that "hitting the close box quickly is going to be one of the more important skills."

McKibben also addressed the intersection of the Singularity and spirituality. "It is taking something that is a deep part of the human experience and somehow confusing quality with quantity. In the end, the spiritual response has a great deal to do in that there is something important about human mortality. It would be an interesting process, and to a certain amount in conflict [with ideas of Singularity]."

"Technology can empower us on the positive side," Kurzweil responded. "We can see both the promise and perils, but at the same time social networks and blogs are democratizing. Some of the true values of religion, such as the golden rule, we keep in mind. The story of the 21st century hasn't been written."

The most important inventions of the last century, according to McKibben, were the wilderness area and the practical invention of non-violence as a political technique: the unique human ability to rein oneself in. That statement drew the greatest applause of the day.

Kurzweil responded that free-enterprise competition makes it hard to slow down, and he clearly wants to continue marching forward.

McKibben chimed in that governments could intervene. "If we decided that the endless consolidation of agriculture was producing results we don't like in ecological terms and human cost, we could pass a series of laws to slow that down. We aren't beyond that possibility; we still live in a world in which we can make a difference."

Doctorow cited a characterization of the Singularity as the "rapture of nerds," and suggested that its attraction is partly psychological: transcending the problems of the world and wiping the slate clean. Kurzweil countered that the idea of the Singularity doesn't just emerge from anxiety. "It's applying knowledge to solve problems, and we have to reach to transcend the problems in real, rather than imaginary, ways."

It boils down to whether you believe in the Singularity concept as a scientific endeavor, and how you think it will affect individuals and society. It's not hard to believe in the substance of the Singularity's scientific aspects. The open question is how it will change our species, society and public policy beyond increasing life span, pervasive virtual reality and smart agents that do our bidding in that realm or, in the worst-case scenario, eventually enslave us. As McKibben suggests, the concepts of the Singularity need to be part of the big conversation.

Kurzweil concluded the session: "It's a complex topic." Indeed...