Facebook and the "red square of doom"

My esteemed colleague Jason Perlow, good friend and self-confessed loudmouth of the East Coast, blogged earlier about the Twitter fail whale, which has frustrated so many people trying to post their tweets on the popular social networking site.

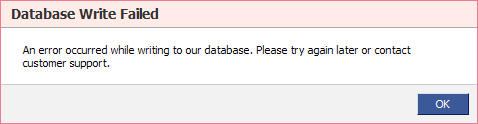

Facebook hasn't necessarily solved this problem, but at least it gives you a rough idea of where the error is occurring. The "red square of doom", or RSOD for short - which works brilliantly if you're British because it's two swear words rolled into one - is Facebook's magical way of telling you something has gone catastrophically wrong.

With 200 million users now on the social network, officially the biggest single collection of people in the world's history, can the infrastructure behind the scenes handle everything going on?

The simple answer is yes, and I can think of two important factors behind that. Everything within Facebook is stored across a multitude of servers in huge data farms which, as you would imagine, need constant updates and repairs.

Between August 2008 and the present day in 2009, Facebook has added another 100 million users, with more and more photos being uploaded every second. The server costs can be expected to run into the millions, with energy, air conditioning, backup and even simple running costs to consider.

But what makes Facebook tick? Too much to really go into without causing myself to stroke out.

By using memcached and similar technologies, Facebook can serve over 200,000 UDP requests a second using 8 threads on 8 cores. (I won't pretend this isn't beyond me; just thinking about the statistics makes my head hurt.) According to Niall Kennedy's blog, Facebook has:

"...over 10,000 servers as of August 2008 according to Wall Street Journal coverage of a presentation by Jonathan Heiliger, Facebook's VP of Technical Operations. Facebook signed an infrastructure solutions agreement with Intel in July 2008 to optimally deploy "thousands" of servers based on Intel Xeon 5400 4-core processors in the next year."

The infrastructure behind such a mammoth machine is practically living and breathing by the sounds of it. I wouldn't be too surprised if it were impossible to define where the infrastructure begins and where it ends.
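For anyone wondering what a memcached-style cache actually buys you, here is a minimal sketch of the general "look in the cache first, fall back to the database" pattern. It is not Facebook's code and it does not talk to a real memcached server: `TinyCache`, `FakeDatabase` and `get_profile` are hypothetical names invented purely for illustration, and the in-memory dictionary simply stands in for the cache.

```python
import time


class TinyCache:
    """In-memory stand-in for a memcached-style key-value cache (illustrative only)."""

    def __init__(self):
        self._store = {}  # key -> (value, expiry time or None)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None  # cache miss
        value, expires_at = entry
        if expires_at is not None and time.monotonic() >= expires_at:
            del self._store[key]  # entry has expired, treat as a miss
            return None
        return value

    def set(self, key, value, ttl=None):
        expires_at = time.monotonic() + ttl if ttl is not None else None
        self._store[key] = (value, expires_at)


class FakeDatabase:
    """Hypothetical slow data store that counts how often it is hit."""

    def __init__(self):
        self.reads = 0

    def load_profile(self, user_id):
        self.reads += 1
        return {"id": user_id, "name": f"user-{user_id}"}


def get_profile(cache, db, user_id):
    """Try the cache first and only hit the database on a miss."""
    key = f"profile:{user_id}"
    profile = cache.get(key)
    if profile is None:
        profile = db.load_profile(user_id)
        cache.set(key, profile, ttl=60)  # keep the result for 60 seconds
    return profile
```

Calling `get_profile` twice for the same user only touches the database once; the second call is answered from memory, which is the whole point of putting a cache in front of a busy data store.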

Ultimately, the Twitter fail whale will keep surfacing more and more often because Twitter lacks a business model, not a strategy, meaning it will continue to lose money rather than have any to invest back into the service. Facebook, with served advertisements, will continue to generate millions of dollars every year which can then be invested back into the infrastructure - making it better, faster, safer and more reliable.

Hopefully by then we shall see an end to the "red square of doom". Case closed.