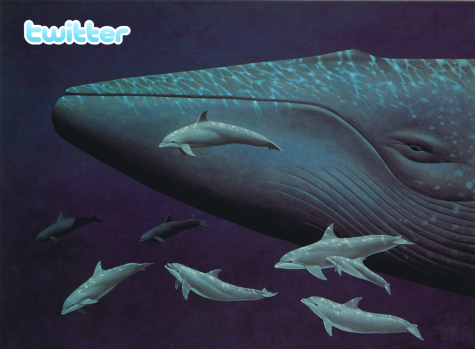

How to end the Fail Whales? With Blue Whales.

Twitter's "Fail Whale" logo is cute, but solving the popular service's availability and scalability issues will be paramount if the web is to rely on it as a communications medium.

So, I've been Twittering for a while. I was skeptical of the service at first, but now I've become a regular by updating my feed with posts about things going on in my daily life, funny links to stuff I enjoy, re-tweeting posts from friends and colleagues, as well as automatic direct article feeds from Off The Broiler and Tech Broiler. My life is just as meaningless and uninteresting as it was before, except now millions of people in the "Twitterverse" get to see it in real time.

Anyone who has been using Twitter's direct web interface will eventually encounter the Fail Whale -- the service's warning that the system is either undergoing maintenance or has exceeded its capacity. And if you've been using Twitter this week, you've probably also noticed that Fail Whales and system outages have been occurring with increasing regularity.

It's clear that Twitter's infrastructure and systems architecture are insufficient to handle current and future levels of demand. The issue is one of scalability and performance at the transaction level. At any given time, millions of rows of data are being inserted into Twitter's database and being read. These application performance characteristics are different from those of other large-scale web applications such as Google's search engine, which is primarily read-intensive.

So can Twitter do the same thing as Google and build a large distributed cluster of systems, such as with Aster Data's "Beehive" or a few hundred racks of Kickfires to solve their performance, scalability and availability problems? In a word, no.

Twitter's problems cannot be solved with a massive scale-out distributed systems approach. When you are dealing with systems that are hitting a live database and performing millions of row inserts at any given time, you are going to go beyond the limits of what off-the-shelf client/server hardware can accomplish.

Before I joined IBM in September of 2007, I had just completed a project for a large government agency that needed a system that could accomplish approximately 5,500 database row inserts and reads per second, or approximately 18 million transactions per week. And as a customer requirement, we had to do it with COMMODITY HARDWARE. Read: Intel chips and Linux.
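The two figures above are in different units, a per-second rate and a weekly total, so it helps to put them on the same scale. The sketch below is only a unit conversion. It assumes a perfectly uniform load around the clock, which the project description does not state, and the function names are hypothetical. Under that assumption the weekly total works out to a much lower average rate than 5,500 per second, so one reading is that the per-second figure describes a peak burst rather than a sustained average.

```python
# Unit conversion between a per-second rate and a weekly total.
# Assumption (not from the original project): load is spread evenly
# across all seven days, 24 hours a day.
SECONDS_PER_WEEK = 7 * 24 * 60 * 60  # 604,800 seconds


def weekly_volume(rate_per_second: float) -> float:
    """Transactions per week if the given rate were sustained all week."""
    return rate_per_second * SECONDS_PER_WEEK


def average_rate(transactions_per_week: float) -> float:
    """Average transactions per second implied by a weekly total."""
    return transactions_per_week / SECONDS_PER_WEEK


if __name__ == "__main__":
    print(f"5,500/s sustained for a week: {weekly_volume(5_500):,.0f} transactions")
    print(f"18 million/week on average:   {average_rate(18_000_000):.1f} per second")
```

Real workloads are rarely uniform, so sizing for the peak rate rather than the weekly average is the usual design choice for a system like this.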

The heart of the "beast", as we referred to it, consisted of 4 Unisys ES7000-based Oracle 10g RAC nodes, each with 256GB of RAM and sixteen dual-core Intel Xeon processors. The most sophisticated, high-performance Cisco 10Gbps Ethernet switching that money could buy connected all the main clustered and load-balanced tiers of the system -- Web, J2EE Application Servers, Messaging Queue and Database.

For storage, we used the highest-end EMC Symmetrix DMX chassis that could be purchased at the time. Each database node had four dual-port 4Gbps Fibre Channel host bus adapters going into a redundant Fibre Channel switch network, using EMC PowerPath I/O software for Red Hat Linux 4, with lots of redundant spindles on the SAN to max out the IOPS on 60 terabytes of disk. Still, with all of this insanely expensive x86 server, storage and networking hardware, we were just about able to accomplish 18 million transactions per week, which was about 10 percent over the customer's original design requirements. That wasn't a lot of room to spare.

Suffice it to say, I'm not going to tell you what this system cost in total, but we were at the very peak of the performance envelope of what could be done with "off-the-shelf" hardware. And I'm not going to lie and say a Unisys ES7000 is an "off-the-shelf" x86 box, because it ain't. The ES7000 uses proprietary bus architecture improvements that allow it to vastly exceed the performance of most x86 server systems. But even a tweaked-out client/server systems architecture like the above would probably not be adequate to run Twitter smoothly.

No, there's only one systems architecture that will solve the Fail Whales once and for all. As much as I hate coming off like an IBM commercial -- cue the blue background and jazz music on Tech Broiler -- the solution to the Fail Whale is a Blue Whale -- an IBM System z. A mainframe. Or two. Preferably in a Sysplex configuration.

Why mainframes and not, say, mid-range systems such as the IBM pSeries 595, the HP Superdome or even Sun's M9000?

Well, if you were going to build strictly a high-performance client/server database application where most of the transactions are reads, I'd say go ahead. And if you wanted to spend a hell of a lot of money and were religiously committed to client/server architecture for solving any particular problem, any of these would be good candidates. The problem, however, is that Twitter is doing all those simultaneous row inserts while it is doing reads, which is murder on CPU and on IOPS. As such, using a distributed systems approach, you would eventually run into massive scalability problems, and the build-out and maintenance costs of these types of high-end UNIX systems would also be prohibitive.

So while a mainframe is no trivial purchase either, it can be purchased in a modular and incremental fashion and continue to be built out and scaled out depending on the number and type of "engines" that are needed to run on it. Unlike the mid-range UNIX systems listed above, an IBM System z mainframe has specialized processors for database-intensive work, such as the zIIP (System z Integrated Information Processor), which offloads database query activities from the main system processors, the IFLs and the CPs. There's a darned good reason why mainframes still rule the roost at banks and other large institutions that need to process transactions at ginormous volumes: even mid-range systems can't keep up with the type of loads we're talking about here.

Additionally, running on a mainframe provides you with an integrated bus architecture and I/O backplane that allows you to do an end-run around the issue of network communication layers between web tier, app tier, middleware and database tier in the client/server model, which is likely where many of Twitter's performance bottlenecks are occurring. Twitter is also rewriting much of its core functionality in the Java-compatible Scala language, which might benefit from offloading application processing to the System z's zAAP (System z Application Assist Processor) in the same way that databases benefit from the zIIP.

Does Twitter need the Blue Whale in order to put down the Fail Whale? Talk Back and Let Me Know.

Disclaimer: The postings and opinions on this blog are my own and don’t necessarily represent IBM’s positions, strategies or opinions.
