Reports: SQL injection attacks and malware led to most data breaches

Even as companies invest more resources in protecting their networks and employees against the very latest threats, some are clearly overlooking the most basic ones, which usually require only simple or average attack sophistication on the part of the cybercriminal.

Let's review the reports detailing the true impact of SQL injections and malware in the context of data breaches.

- UK Security Breach Investigations Report - An Analysis of Data Compromise Cases - 2010

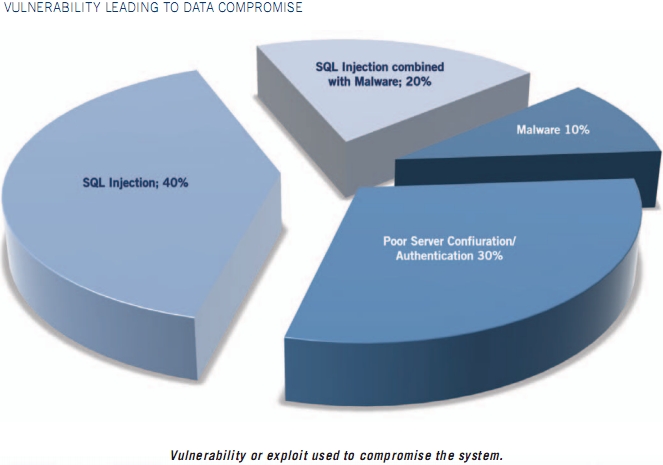

7Safe's recently released Breach Report for 2010 states that, based on the company's forensic investigations, 40% of all attacks relied on SQL injection, and another 20% combined SQL injection with malware. Not only was the source of the attack external in 80% of the cases, but a weakness in a web interface was exploited in 86% of the cases, with the majority of affected companies operating in a shared hosting environment.
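To make concrete how little sophistication this class of attack requires, here is a minimal sketch using Python's built-in `sqlite3` module. It is not taken from any of the reports; the table, the function names (`lookup_unsafe`, `lookup_safe`) and the data are hypothetical and serve only to contrast a query built by string concatenation with a parameterized one.

```python
import sqlite3


def build_demo_db():
    """Create a throwaway in-memory database with made-up records."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, card TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "4111-0000"), ("bob", "4222-0000")],
    )
    return conn


def lookup_unsafe(conn, name):
    # Vulnerable: user input is pasted straight into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = "SELECT card FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def lookup_safe(conn, name):
    # Parameterized: the driver passes the value separately from the
    # SQL text, so it is only ever treated as data.
    return conn.execute(
        "SELECT card FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = build_demo_db()
payload = "nobody' OR '1'='1"          # classic always-true injection string
leaked = lookup_unsafe(conn, payload)  # returns every row in the table
blocked = lookup_safe(conn, payload)   # returns no rows
print(len(leaked), len(blocked))
```

The only difference between the two lookups is whether the input is concatenated into the statement or bound as a parameter, which is why this flaw is both easy to introduce and easy to exploit.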

- See how Chinese hackers and botnet masters launch massive SQL injection attacks using public search engines: Massive SQL Injection Attacks - the Chinese Way; SQL Injection Through Search Engines Reconnaissance; Massive SQL Injections Through Search Engine's Reconnaissance - Part Two

- Trustwave's Global Security Report 2010

Trustwave's Global Security Report for 2010 offers similar insights into the use of SQL injection (third on the list of initial attack entry methods) for obtaining unauthorized access to payment card information. The report makes an interesting observation: according to its analysis, the compromised computers were managed by a third party in 81% of the cases, compared to 13% that were self-managed.

What malware types were the attackers relying on? Memory parsers (67% of the cases), followed by keylogging malware (18%), network sniffing (9%), and credentialed malware, also known as ATM malware (6%; Diebold ATMs infected with credit card skimming malware).

- Related posts: Scammers caught backdooring chip and PIN terminals; Scammers introduce ATM skimmers with built-in SMS notification; Microsoft study debunks profitability of the underground economy; CardCops: Stolen credit card details getting cheaper

- The Ponemon Institute - Cost of a Data Breach

Although the report emphasizes recommendations and includes valuable metrics to be considered in a cost-benefit analysis, it also states that, based on the institute's research, data breaches due to malware attacks doubled from 2008 to 2009, and that a data breach caused by a malicious attack costs more than one caused by a negligent insider or a system glitch. Interestingly, it also states that notifying affected customers right away costs more than delaying the notification. That's of course from the perspective of the breached company, not the affected customer whose financial data may have already been abused for fraudulent purchases, depending on the data breach in question.

- Verizon's 2009 Anatomy of a Data Breach Report

How do cybercriminals know that these corporations are susceptible to flaws that are so easily exploitable, often in a point'n'click fashion? Are they shooting in the dark, or do they rely on some kind of methodology which assumes that low-hanging fruit is an inseparable part of every network thought to be secure? Here are some of the key issues to consider:

- The KISS (Keep It Simple Stupid) principle within the cybercrime ecosystem

A cybercriminal who doesn't have a clue about what he's doing -- government sponsored/tolerated cyber spies and cyber warfare units are an exception, although the KISS principle still applies -- would spend months preparing, possibly investing huge amounts of money into buying a zero day vulnerability in a popular web browser in an attempt to use it to gain access to the company's network. A pragmatic cybercriminal, on the other hand, would be "keeping it simple", and would logically assume that there's a high probability that the company overlooked the simplest threats, which he can easily exploit.

- Go through related posts: Research: Small DIY botnets prevalent in enterprise networks; Research: 80% of Web users running unpatched versions of Flash/Acrobat; Secunia: Average insecure program per PC rate remains high

With such a realistic mentality, and because the cost of executing these attacks is so small, he comes to the conclusion, intentionally or unintentionally, that the perceived level of security within an organization is misleading. In this case, complexity is fought with simplicity, starting from the basic assumption that technologies are managed by people and are therefore susceptible to human errors which, once detected and exploited, could undermine the much more complex security strategy in place.

The same mentality applies to a huge percentage of the "botnet success stories" of the past few years. Instead of assuming that the millions of prospective victims have patched their operating systems (Does software piracy lead to higher malware infection rates?) along with all the third-party software running on their hosts, and then starting to look for ways to obtain the much desired zero day vulnerability, a cybercriminal would basically assume that the more client-side vulnerabilities are added to a particular web malware exploitation kit, the higher the probability of infection.

And sadly, he'd be right.
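The reasoning behind that assumption can be sketched with a few lines of arithmetic. This is an illustration only: the unpatched rates below are made-up numbers, not figures from any of the reports, and the function `infection_probability` is hypothetical. It also assumes, as a simplification, that each vulnerability is left unpatched independently of the others.

```python
from math import prod


def infection_probability(unpatched_rates):
    """Chance that a visitor is exploitable by at least one exploit.

    unpatched_rates: for each exploit in the kit, the (assumed) fraction
    of visitors still running a vulnerable version of the targeted
    software. Independence between the rates is assumed.
    """
    # The visitor is safe only if every single exploit fails.
    return 1.0 - prod(1.0 - p for p in unpatched_rates)


single = infection_probability([0.3])          # one exploit in the kit
kit = infection_probability([0.3, 0.2, 0.1])   # 1 - 0.7 * 0.8 * 0.9, about 0.496
print(round(single, 3), round(kit, 3))
```

Under these assumptions, every additional exploit for a known and already patchable flaw can only raise the overall probability, which is why a kit of old client-side exploits can compete with a single expensive zero day.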

- The role of automated web application vulnerability scanning in the process of achieving a (false) feeling of security

A report, "Analyzing the Accuracy and Time Costs of Web Application Security Scanners", published earlier this month, indicated that both Point and Shoot and Trained scanning missed 49% of the vulnerabilities the scanners were supposed to detect. The report also pointed out that the scanners that missed the most vulnerabilities also reported the highest number of false positives, the worst possible combination. Clearly, what's more dangerous than insecurity in general (Review of Web Applications Security and Intrusion Detection in Air Traffic Control Systems) is the false feeling of security.

Remember China's much-speculated "unhackable OS" Kylin, which was perceived as a threat to the offensive cyber warfare capabilities of other nations, who've spent years building them on the basis of known operating systems? Just like any other operating system, its weakness lies in the balance -- or the lack of it -- between usability and security. In this case, it's the insecurely configured web applications that would allow attackers to reach the level of usability offered to legitimate users.

Not by investing resources into looking for OS-specific flaws, but by exploiting "the upper layers of the OSI Model". It's not just that this is more cost effective; sometimes the attackers simply keep it simple.

Why do you think companies neglect the simplest threats, which are also the ones with the highest risk exposure factor due to their ease of exploitation? What do you think?
