PS3 chip powers world's fastest computer

Some scoffed at the 8-PS3 supercomputer. But not the scientists at Los Alamos National Laboratory. They used the idea to build a 1-petaflop computer named Roadrunner - the world's fastest. Here's how.

1,000 trillion floating point operations per second

Fine-grained simulation of aging nuclear weapons is the new computer's ultimate gig. They couldn't just string 14,000 PS3s together - who'd believe the results?

Besides, it's American to want something better - and way faster.

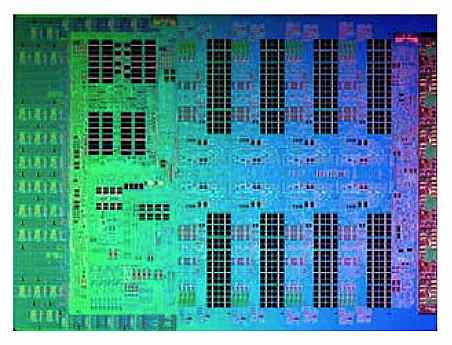

First they built a new Cell Broadband Engine

The new version of the PS3 chip - called the PowerXCell 8i processor - features 8x faster double-precision floating point and over 25 GB/sec of memory bandwidth. It is the building block of a new and really honking compute node.

Each compute node consists of 2 dual-core AMD Opterons and 4 PowerXCell 8i chips. Each Opteron has a fast connection to 2 PowerXCells, enabling a theoretical 25x boost in floating-point performance over a stock Opteron.

No one mentioned how much RAM they gave each PowerXCell, but the chip can address 64 GB of RAM, so each compute node could easily support 264 GB of RAM (4 x 64 GB on the PowerXCells plus 2 x 4 GB or more on the Opterons), with over 100 GB/sec of memory bandwidth.
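The node-level arithmetic above is easy to check. This is a back-of-envelope sketch that reuses only the figures quoted in this post (64 GB addressable per PowerXCell, 4 GB per Opteron treated as a floor, 25 GB/sec per PowerXCell treated as a floor); it is not a description of how LANL actually populated the nodes.

```python
# Back-of-envelope capacity and bandwidth for one Roadrunner-style compute
# node, using only the figures quoted in the post.
CELLS_PER_NODE = 4
OPTERONS_PER_NODE = 2
CELL_MAX_RAM_GB = 64       # addressable RAM per PowerXCell 8i
OPTERON_RAM_GB = 4         # "2x4GB or more" - treated here as a floor
CELL_BANDWIDTH_GBPS = 25   # "over 25 GB/sec" - treated here as a floor


def node_max_ram_gb() -> int:
    """Upper bound on RAM per node if every PowerXCell were fully populated."""
    return CELLS_PER_NODE * CELL_MAX_RAM_GB + OPTERONS_PER_NODE * OPTERON_RAM_GB


def node_cell_bandwidth_gbps() -> int:
    """Aggregate PowerXCell memory bandwidth per node, ignoring the Opterons."""
    return CELLS_PER_NODE * CELL_BANDWIDTH_GBPS


print(node_max_ram_gb())           # 264
print(node_cell_bandwidth_gbps())  # 100
```

Because each per-chip bandwidth figure is a floor, the 100 GB/sec total is a floor too, which is why the text says "over 100 GB/sec".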

We'll take 3,250 of them

That's about how many nodes are in the completed Roadrunner. They're interconnected by an InfiniBand DDR network - standard for HPC clusters.

InfiniBand is a switched-fabric interconnect featuring microsecond latencies and data rates of 2 GB/sec for 4x DDR. That's about as much as you can get out of a PCI-Express x8 bus anyway.
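Why 4x DDR InfiniBand and a PCI-Express x8 slot land on the same number comes down to lane counts and signaling rates. The sketch below assumes first-generation PCI Express (2.5 Gbit/s per lane) and DDR InfiniBand (5 Gbit/s per lane), both using 8b/10b encoding, so 8 of every 10 bits on the wire carry data. It computes usable bandwidth in one direction only.

```python
# Usable one-direction bandwidth of a serial link that uses 8b/10b encoding.


def link_gbytes_per_sec(lanes: int, gbits_per_lane: float) -> float:
    """Convert lane count and raw signaling rate into usable GB/sec."""
    raw_gbits = lanes * gbits_per_lane
    data_gbits = raw_gbits * 8 / 10  # 8b/10b: 8 data bits per 10 line bits
    return data_gbits / 8            # 8 bits per byte


infiniband_4x_ddr = link_gbytes_per_sec(lanes=4, gbits_per_lane=5.0)
pcie_gen1_x8 = link_gbytes_per_sec(lanes=8, gbits_per_lane=2.5)
print(infiniband_4x_ddr, pcie_gen1_x8)
```

Four fast lanes and eight slower lanes both carry 16 Gbit/s of payload, or 2 GB/sec, so the host bus and the network adapter are evenly matched.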

Update: I learned more about the storage infrastructure behind Roadrunner - 2,000 terabytes of file servers - and wrote it up on my other blog, StorageMojo.

The money quote:

Roadrunner currently has about 80 TB of RAM, roughly 24 GB per compute node. That works out to about 4 GB of RAM per processor.

The jobs these machines run are huge. A simulation can run 6 months or more. Depending on criticality, a job gets checkpointed every hour or maybe once a day.

The Panasas installation at LANL, begun in 2003, is currently 2 PB. Assuming an average drive size of 500 GB, that means 4,000 disk drives.
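The figures in that quote hang together, which a few lines confirm. This sketch assumes 3,250 compute nodes (the node count given earlier in this post), six processors per node (2 Opterons plus 4 PowerXCells) and decimal units (1 TB = 1,000 GB, 1 PB = 1,000,000 GB), which is how drive capacities are usually marketed.

```python
# Sanity-check the quoted memory and storage figures using decimal units.
NODES = 3_250                # approximate node count from the post
PROCESSORS_PER_NODE = 2 + 4  # 2 Opterons + 4 PowerXCell 8i chips
TOTAL_RAM_GB = 80_000        # "about 80 TB of RAM"
STORAGE_GB = 2_000_000       # 2 PB Panasas installation
DRIVE_GB = 500               # assumed average drive size

ram_per_node = TOTAL_RAM_GB / NODES
ram_per_processor = ram_per_node / PROCESSORS_PER_NODE
drives = STORAGE_GB // DRIVE_GB

print(f"{ram_per_node:.1f} GB per node")            # 24.6 GB per node
print(f"{ram_per_processor:.1f} GB per processor")  # 4.1 GB per processor
print(f"{drives:,} drives")                         # 4,000 drives
```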

Big computers require big storage. End update.

Software is the problem

The hardware specs are drool-worthy, but without the right codes it is just an expensive furnace. As the best single article on Roadrunner I found explains:

For the Cell, the programmer must know exactly what's needed to do one computation and then specify that the necessary instructions and data for that one computation are fetched from the Cell's off-chip memory in a single step. . . . IBM's Peter Hofstee, the Cell's chief architect, describes this process as “a shopping list approach,” likening off-chip memory to Home Depot. You save time if you get all the supplies in one trip, rather than making multiple trips for each piece just when you need it.
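The shopping-list analogy is really about latency: every trip to off-chip memory pays a fixed startup cost before any data moves, so one big transfer beats many small ones. The toy cost model below illustrates the idea. The 1-microsecond per-trip cost is a made-up round number, not a measured Cell or PowerXCell parameter, and `fetch_time_us` is a hypothetical helper; only the 25 GB/sec bandwidth echoes a figure from this post.

```python
# Toy model: time to fetch a working set from off-chip memory in one trip
# versus many small trips. Numbers are illustrative, not measured.
LATENCY_US = 1.0                   # hypothetical fixed cost per trip (microseconds)
BANDWIDTH_BYTES_PER_US = 25_000.0  # 25 GB/sec = 25,000 bytes per microsecond


def fetch_time_us(total_bytes: int, trips: int) -> float:
    """Total time when total_bytes is split evenly across `trips` transfers."""
    bytes_per_trip = total_bytes / trips
    return trips * (LATENCY_US + bytes_per_trip / BANDWIDTH_BYTES_PER_US)


working_set = 128 * 1024  # bytes needed for one computation
one_trip = fetch_time_us(working_set, trips=1)
many_trips = fetch_time_us(working_set, trips=1024)  # one trip per 128-byte piece

print(f"one trip:   {one_trip:.2f} us")
print(f"1024 trips: {many_trips:.2f} us")
```

The time spent actually moving bytes is the same in both cases; what balloons is the per-trip startup cost, multiplied by every extra trip to the store.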

The programmers optimized codes for a variety of applications, including radiation and neutron transport, molecular dynamics, fluid turbulence and plasma behavior. With the optimized codes, they got a real-world 6-10x performance boost over the standard Opterons.

The Storage Bits take

Back when I was hawking vector processors, a gigaflop was considered respectable. A couple of decades later, we have a machine 1 million times faster. Cool!

We won't be able to shrink feature sizes forever, though, so architecture and bandwidth will be key to further speed-ups. Hopefully that time is still a few decades away.

Comments welcome, of course.