The disk error mystery

You'd think that after 50 years and many billions sold, disk drives would be well understood. And you'd be wrong. Take the case of the outer-track errors.

Thanks to zoned bit recording, the bit density of each track is roughly constant across the disk. But more errors occur in the outer tracks, and on some drives in the inner tracks too. What could be going on?

Latent sector errors (LSEs) are errors that go undetected until you try to read the data, at which point the drive says "oops!" In the landmark study "An Analysis of Latent Sector Errors in Disk Drives," researchers found that 8.5% of all nearline disks, like the ones most of us consumers use, are affected by latent sector errors.

If, like me, you use more than 10 drives, you probably have a drive with LSEs. Maybe even two. And a drive with one LSE is much more likely to have others.
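A rough sanity check of that claim, as a Python sketch: assume each drive independently has an 8.5% chance of developing LSEs (independence is our simplifying assumption, and one the article questions further down), and a binomial model gives the odds for a set of drives. `prob_at_least` is a hypothetical helper, not something from the study.

```python
# Sketch: chance that at least k of n drives develop latent sector errors,
# ASSUMING each drive is affected independently with probability p.
# p = 0.085 is the nearline rate quoted above; independence is our assumption.
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(at least k of n drives affected) under a binomial model."""
    return 1.0 - sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))


if __name__ == "__main__":
    n, p = 10, 0.085
    print(f"expected drives with LSEs: {n * p:.2f}")
    print(f"P(at least one): {prob_at_least(1, n, p):.2f}")
    print(f"P(at least two): {prob_at_least(2, n, p):.2f}")
```

With n = 10 and p = 0.085 this works out to about 0.59 for at least one affected drive and about 0.21 for at least two, which fits "probably" and "maybe even two." Correlated failures would change these numbers.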

Deep dive

In a deeper analysis of the same data, "Understanding latent sector errors and how to protect against them," researchers found an interesting anomaly:

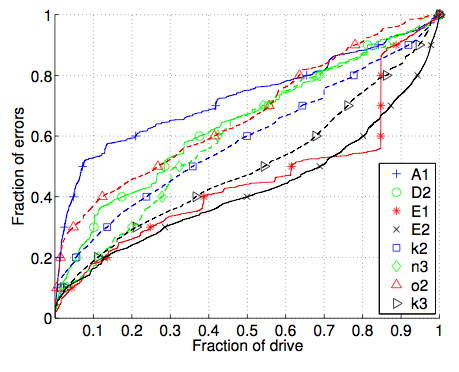

The first part of the drive shows a clearly higher concentration of errors than the remainder of the drive. Depending on the model, between 20% and 50% of all errors are located in the first 10% of the drive’s logical sector space. Similarly, for some models the end of the drive has a higher concentration.

Here's the graph from the paper:

Error location on various drive models. Capital letters denote SATA drives, lowercase SAS & FC drives.
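The "first 10% of the logical sector space" measurement can be illustrated with a short Python sketch that buckets error LBAs into equal slices of the drive. The function name, drive size, and error LBAs are all hypothetical; this is not the researchers' analysis code or data.

```python
# Sketch: bucket latent-sector-error LBAs into equal slices of the drive's
# logical sector space and report the share of errors in each slice.
# The drive size and LBAs below are hypothetical, made up for illustration.
from collections import Counter


def error_share_by_region(error_lbas, total_sectors, buckets=10):
    """Return the fraction of errors falling in each of `buckets` equal LBA ranges."""
    if not error_lbas:
        return [0.0] * buckets
    # Map each LBA to its slice index; clamp so an LBA at the very end stays in range.
    counts = Counter(min(lba * buckets // total_sectors, buckets - 1) for lba in error_lbas)
    return [counts[b] / len(error_lbas) for b in range(buckets)]


if __name__ == "__main__":
    total = 1_000_000  # hypothetical drive with one million sectors
    lbas = [1_200, 5_000, 42_000, 77_000, 310_000,
            550_000, 640_000, 905_000, 990_000, 999_000]
    shares = error_share_by_region(lbas, total)
    print(f"share in first 10% of LBA space: {shares[0]:.0%}")
    print(f"share in last 10% of LBA space: {shares[-1]:.0%}")
```

For this invented list the first slice holds 40% of the errors and the last slice 30%, the kind of skew toward both ends of the drive that the quoted passage describes for some models.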

"We speculate the areas of the drive with an increased concentration of errors might be areas with different usage patterns, e.g. filesystems often store metadata at the beginning of the drive."

Sounds reasonable. But later they note:

"In particular, a possible explanation . . . might be that these areas see a higher utilization. . . . [But in other research at Google there was no] correlation between either the number of reads or the number of writes that a drive sees (as reported by the drive’s SMART parameters) and the number of LSEs it develops."

Which is it? Disk drives are busy boxes. Possible explanations are:

- Poor data. Maybe the Google data isn't fine-grained enough to discern workload-related LSEs.

- Wobbly outer tracks. Lower block numbers usually map to outer tracks, where linear velocity is highest. Rotational vibration might cause LSEs to cluster there.

- Start/stops. Spinning up a drive causes wear: cold bearings, motor stress, maybe even head wear until fly height is reached.

- Lube migration. Disk platters are lubricated to keep them smooth and to minimize wear. This layer can migrate to the outer tracks over time, where it would increase head fly height, making bits harder to read.
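To connect "lower block numbers" with "outer tracks," here is a Python sketch of idealized zoned bit recording. It assumes LBA 0 starts at the outer edge, treats the zones as one continuous band of constant linear density and track pitch, and uses hypothetical radii and spindle speed (46 mm outer, 20 mm inner, 7200 rpm); real drives use a handful of discrete zones and differ in detail.

```python
# Sketch: where does a given fraction of the LBA space sit physically?
# Assumptions (ours, not from the article): LBA 0 is at the outer edge,
# zoned bit recording is idealized as constant linear density and track
# pitch, and the recording band spans the hypothetical radii below.
import math

R_OUTER_MM = 46.0  # hypothetical outer radius of the recording band
R_INNER_MM = 20.0  # hypothetical inner radius of the recording band
RPM = 7200         # hypothetical spindle speed


def radius_at_lba_fraction(f: float) -> float:
    """Radius (mm) reached after the first fraction f of LBAs, counting inward.

    With sectors per track proportional to radius, the capacity between r and
    R_OUTER is proportional to R_OUTER**2 - r**2, so we invert that relation.
    """
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be between 0 and 1")
    return math.sqrt(R_OUTER_MM**2 - f * (R_OUTER_MM**2 - R_INNER_MM**2))


def linear_velocity_m_per_s(radius_mm: float) -> float:
    """Speed of the track under the head: circumference times revolutions per second."""
    return 2 * math.pi * (radius_mm / 1000.0) * RPM / 60.0


if __name__ == "__main__":
    r10 = radius_at_lba_fraction(0.10)
    print(f"first 10% of LBAs spans {R_OUTER_MM:.1f} mm down to {r10:.1f} mm")
    print(f"outer-edge speed {linear_velocity_m_per_s(R_OUTER_MM):.1f} m/s, "
          f"inner-edge speed {linear_velocity_m_per_s(R_INNER_MM):.1f} m/s")
```

With these made-up dimensions the first 10% of LBAs occupies only the outermost 2 mm or so of the band (46 mm down to about 44.1 mm), where the surface moves at roughly 34.7 m/s versus about 15.1 m/s at the inner edge. Because outer tracks hold more sectors, a thin radial strip accounts for a large slice of the logical address space.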

The Storage Bits take

At present the mystery remains. But the implications for RAID arrays are important.

The 1988 RAID paper assumed that disk drive failures and errors are uncorrelated. But we now know that isn't correct.

Disk failures tend to occur together. LSEs, which can kill a RAID 5 recovery, also tend to cluster on particular drives, particular places on drives, and at particular times. Failures are way more correlated than we suspected 20 years ago.
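Why one LSE can sink a RAID 5 rebuild is easy to show with XOR parity. The Python sketch below is a toy model (the helper names and the four-block stripe are ours, not any controller's): single parity rebuilds exactly one missing block per stripe, so a failed drive plus an unreadable sector on a surviving drive leaves that stripe unrecoverable.

```python
# Sketch: why a latent sector error can kill a RAID 5 rebuild.
# RAID 5 keeps one XOR parity block per stripe, so it can rebuild exactly
# one missing block. The stripe layout and None-as-unreadable marker are a
# toy model of ours, not any real controller's behavior.
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(stripe: List[Optional[bytes]]) -> Optional[bytes]:
    """Rebuild the single missing block (None) from the rest of a RAID 5 stripe.

    Returns None unless exactly one block is missing; e.g. a failed drive
    plus an LSE on a surviving drive is beyond what single parity can fix.
    """
    missing = [i for i, blk in enumerate(stripe) if blk is None]
    if len(missing) != 1:
        return None
    return xor_blocks([blk for blk in stripe if blk is not None])


if __name__ == "__main__":
    data = [b"\x01\x02", b"\x10\x20", b"\xaa\x55"]
    parity = xor_blocks(data)
    stripe = data + [parity]           # 3 data blocks + 1 parity block

    degraded = list(stripe)
    degraded[0] = None                 # drive 0 has died
    print(rebuild_missing(degraded) == data[0])  # True: rebuild succeeds

    degraded[2] = None                 # an LSE on drive 2 during the rebuild
    print(rebuild_missing(degraded))   # None: this stripe is lost
```

RAID 6 adds a second, independently computed parity block per stripe, so it can survive a drive failure plus one more unreadable block in the same stripe; that extra math is not modeled here.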

Few desktops should use RAID. If you do use an external SATA RAID 5, make sure you have a reliable backup because chances are good you'll need it. RAID 6 is the way to go when using large SATA drives.

Modern disk drives are amazing precision devices that make fine Swiss watches look as delicate as a strip mine in comparison. Yet we don't understand everything about them.

Kudos to companies like NetApp that support research into disk behavior. The more of the world's data resides on disks, the more important this research becomes.

Comments welcome, of course.