GUIs: The computing revolution that turned us into cranky idiots

June 2013 is almost at an end, and we've seen the passing of two major software development conferences, Apple's WWDC and Microsoft's Build, at which the two companies demonstrated and issued preview releases of three major client/device operating systems: Mac OS X "Mavericks", iOS 7, and Microsoft Windows 8.1.

In all three cases, significant user interface changes have been introduced, and there's been no lack of whining and complaining by certain groups of end users that those changes are for the worse.

In Microsoft's case, the Preview release of Windows 8.1 has generally been heralded as an improvement, since most of the radical changes to the operating system occurred in Windows 8, but there will almost certainly be some people who regard any sort of change as negative.

Since the dawn of personal computing, the cycle of resistance to change has been never-ending. To understand the nature of this resistance, we have to go back to the very beginning.

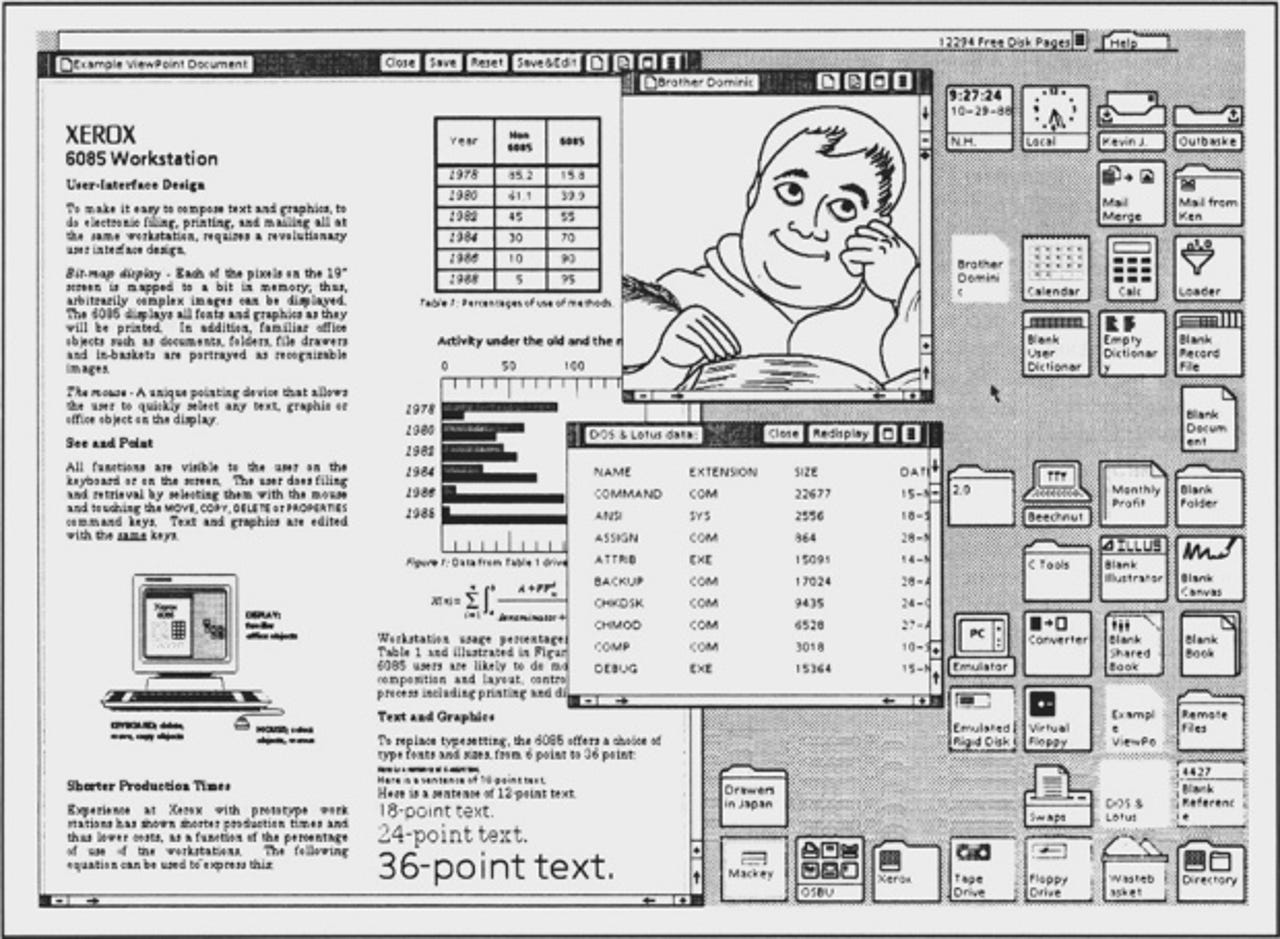

The first graphical user interface (GUI) to be used in experimental form appeared on Xerox's Alto workstation in 1972, a machine so far ahead of its time that its innovations would not be experienced by end users in the general population until 12 years later, in the form of Apple's original Macintosh computer.

Xerox further developed what it learned from the Alto into the Star workstation, which was commercially released in 1982, a full two years before the first Macintosh shipped (and a year before the ill-fated Lisa).

The Star system was so advanced that it could even network with other stations over Ethernet, something that would be practically unheard of until a good five years later, on Novell's NetWare 2.x and IBM/Microsoft's LAN Manager platform. Star was so expensive and so poorly marketed, however, that only a very small number of these systems were actually sold.

If you consider that a typical IBM PC cost about $2,000 and a Star was about $16,000 in 1982 dollars, it's easy to understand why this system failed so miserably in the marketplace.

Although the machines were commercial failures, what Xerox developed for the Alto and the Star at its Palo Alto Research Center in the early 1970s has stuck with us for 40 years: the idea of a visual "Desktop" paradigm that uses windows, icons, menus, and pointers, commonly referred to as "WIMP" by user interface designers.

Until the advent of GUIs, PCs, minicomputers, and mainframe terminals were operated by command line interfaces (CLIs). This required memorizing frequently complex syntax to get even the simplest tasks done.

In that time period, PC applications themselves used menu-driven interfaces, but were entirely text based, and the use of things like mice and pointers was practically an alien concept to most people.

While CLIs are still used heavily today, and remain a very powerful tool in modern operating systems such as Windows, UNIX, and Linux, they are primarily used by systems professionals in order to batch automate operations through scripting, and are no longer the primary interface that end users interact with in order to do routine things like move and delete files, or start programs.

Once the Mac made its debut in 1984, personal computing exploded as GUIs rolled out on other computing platforms. Microsoft followed with Windows 1.0 in 1985, which was originally an add-on product for MS-DOS.

It should be noted that the introduction of Windows 1.0 was considered a radical change itself on PCs, considering that many users were actually quite accustomed to the command prompt and text-based applications.

Although the Macintosh's release in 1984 was a landmark for the commercialization of the GUI in personal computers, it wasn't until 1990, with the introduction of Windows 3.0, that the concept of the GUI truly began to take hold with the general population of PC end users.

Change is never easy for users to accept, even if you are introducing new technologies that are supposed to make their lives easier or the computing experience simpler.

After Windows 3.0, use of GUIs utterly exploded in computing. Text-based applications made way for graphical applications. The need for the end user to understand how the command line worked, as well as other arcane things about PCs, decreased steadily over time, and, as such, the basic competencies required to operate a personal computer also declined substantially.

The net result? More and more people became comfortable with using computers. And thus, the PC industry exploded.

There were, of course, other attempts to create better GUIs. IBM tried to market OS/2 with its Presentation Manager GUI, which in its second version offered pre-emptive multitasking as well as an object-oriented system.

Many industry pundits and technologists (myself included) believed that OS/2 2.0 was a superior system to Windows 3.x.

However, IBM did a terrible job of marketing the product, and due to the overwhelming penetration of Windows into the PC marketplace, end users didn't bite on it and developers were slow to write applications for it.

Additionally, the heavy PC resource requirements needed to run IBM's "superior" OS optimally (16MB of RAM was expensive in those days, around $600) left OS/2 to become something of a niche system for highly specialized vertical market applications. Eventually IBM did optimize the software to run well in 4MB of RAM, the standard for Windows PCs at the time, but it was too late.

Steve Jobs, after leaving Apple in 1985, went on to create the NeXT computer, released in 1988, which, like OS/2, featured a sophisticated graphical object-oriented operating system.

The systems were extremely expensive ($6,500) and targeted toward a vertical market (education). The company ceased producing hardware in 1993 to focus on operating system software for x86 and UNIX workstations, which it also failed to market successfully.

Had it not been for Apple's declining health in the mid-1990s, NeXT would probably also have been relegated to the dustbin of history. Apple was facing imminent financial ruin, with its products growing stagnant and multiple failed attempts at creating a next-generation operating system of its own behind it, and desperate measures were required for the company to "Think Different".

Without Apple's intervention in NeXT, Steve Jobs would have been remembered fondly as the guy who co-founded and ran a fledgling computer company in the 1980s, flopped spectacularly with NeXT, and broke into the CGI films market with Pixar.

As we all know, NeXT and its intellectual property, in the form of the OpenStep operating system, Objective-C development tools, and the WebObjects programming framework, were acquired by Apple in a deal announced in late 1996, and became the basis for Mac OS X as well as iOS.

Again, NeXT's failure to win over large groups of end users, and Apple's own unwillingness to take drastic measures to improve its products until it was almost too late, are prime examples of resistance to change.

Of course, the overall cost of moving to a new platform or environment can be a huge limiting factor, as in the case of the Alto, the Star, OS/2, and NeXT. But in recent times, cost has rarely factored into the equation, as platforms have been highly competitive in price.

And as we have seen even with free and open-source end-user desktop platforms like Ubuntu Linux, and open-source GUIs like GNOME and KDE, absence of cost does not equate to mass adoption.

The larger a user population grows, and the more accustomed it becomes to doing something a certain way, the harder it is to move it into the next evolutionary phase. This resistance occurs every single time a UI has to be revamped to address fundamental changes in user behavior or overall improvements in the underlying technology platform.

We saw this, for example, with the introduction of the Start Menu in Windows 95, which was utterly pervasive in Windows until 2012. Mind you, Windows 95 was an extremely successful operating system, but the migration from Program Manager to the new GUI did not happen without its own share of people kicking and screaming along the way.

GUIs have not remained stagnant, of course. With the introduction of mobile computing, smartphones, and tablets, interfaces have needed to change out of the necessity to facilitate the underlying platform, the applications, and use cases.

There are now hundreds of millions of computing devices in the wild, all using GUIs.

Because the form factors are so different from what we have been traditionally using on the desktop and in laptops, the GUI needs to evolve, particularly as we are seeing the inevitable movement toward platform singularity or the "one device".

We are also moving toward the use of devices that need to be optimized for touch and for lower power consumption, and applications that need to maximize the use of screen real estate and use resources in the cloud.

And, almost certainly, along with the use of these inexpensive, lower-power devices, we are also facing the inevitable deployment of complex applications that run remotely in the cloud, including the most demanding ones traditionally limited to extremely powerful and very expensive workstation hardware.

Because of this, simplification is now the latest trend in UX design changes, across all of the major platforms, which is also attracting its share of resistance and ire.

We live in an interesting time, one in which the previous era in computing intersects with the next, and the most difficult adaptation of end-user computing habits must take place.

The GUI, almost magical in its design, has made computing accessible to all and completely pervasive in our society, but it brings with it a curse of resistance to change and a complacency that comes from taking ease of use for granted.

After 40 years of personal computing, the end user has become a simpleton, no longer requiring specialized knowledge to operate the system. In effect, he's been transformed into a cranky idiot who becomes angry when even the smallest change requiring learning new habits is introduced.

Resistance to this change is inevitable. But we all have to go through it eventually, this cranky idiot included.

Has the explosion in GUIs over the last 40 years made change and end-user acceptance more and more difficult through each successive generation? Talk back and let me know.