Intel targets exascale computing with new technologies

Many-core processors, power-saving chips and advanced memory technologies will help Intel reach its goal of developing an exascale computer by 2018, its chief technology officer has said.

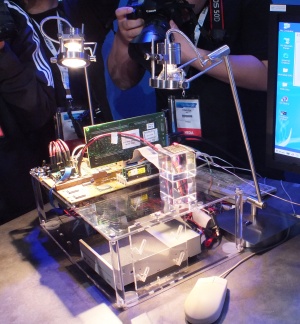

Intel's Near-Threshold Voltage Processor (NVP), codenamed Claremont, is able to operate at below 10 milliwatts when dealing with light workloads. Photo credit: Jack Clark

The chipmaker has set its sights on building a computer capable of an exaflop — one thousand petaflops, roughly a hundred times faster than the world's leading supercomputer as of June — by 2018. The supercomputer would consume less than 20 megawatts of power; this equates to a power efficiency improvement of about 300 times over today's supercomputers, Justin Rattner, Intel's chief technology officer, said on Thursday at the Intel Developer Forum.

"The challenge here is generating that much computing power within a modest 20 megawatt-power budget. Today a petaflop[-scale] computer is burning something between five and seven megawatts," he said. "If we scaled that up it'd [consume power] in the gigawatt range and I'd need to buy everyone a nuclear reactor."

To achieve its goal, Intel will need to bring in new memory, processor and interconnect technologies to drive performance while lowering overall power consumption, Rattner said.
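The arithmetic behind Rattner's "nuclear reactor" remark can be checked with a rough sketch. It uses only the figures quoted above (five to seven megawatts per petaflop today, a 20-megawatt budget for an exaflop) and assumes, purely for illustration, that power scales linearly with performance. The function names are ours.

```python
# Back-of-envelope check of the exascale power figures quoted in the article.
# Assumption: power scales linearly with performance (a simplification).

PETAFLOPS_PER_EXAFLOP = 1_000
TARGET_POWER_MW = 20  # Intel's stated power budget for an exaflop machine


def scaled_power_mw(power_per_petaflop_mw: float) -> float:
    """Power needed for an exaflop if today's efficiency stayed unchanged."""
    return power_per_petaflop_mw * PETAFLOPS_PER_EXAFLOP


def required_efficiency_gain(power_per_petaflop_mw: float) -> float:
    """Improvement in flops per watt needed to fit the 20 MW budget."""
    return scaled_power_mw(power_per_petaflop_mw) / TARGET_POWER_MW


for mw in (5, 7):
    gigawatts = scaled_power_mw(mw) / 1_000
    gain = required_efficiency_gain(mw)
    print(f"{mw} MW per petaflop -> {gigawatts:.0f} GW at exascale, "
          f"needs a {gain:.0f}x efficiency gain")
```

Under that linear-scaling assumption, the required gain falls between 250 and 350 times, which is consistent with the "about 300 times" figure Rattner gave.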

Prototype chip

He unveiled a prototype chip — the Near-Threshold Voltage Processor (NVP), codenamed Claremont — which is able to operate at below 10 milliwatts when dealing with light workloads and can scale up to full power when needed. In its current form, the chip, which is based on an old Pentium design, can be powered by a postage stamp-sized solar panel.

"What you observe as you come down to the threshold voltage is the energy efficiency climbs very rapidly as you get to just above threshold [voltage]," he said in a briefing on Wednesday. "The theoretical benefit is in the range of eight to nine x [over nominal operation], which is a substantial improvement in energy efficiency by any measure."

The technology allows Intel to "improve the dynamic operating range of the processor," he said, and holds promise for boosting the efficiency of future processors.
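A first-order way to see why efficiency climbs as voltage drops is the textbook approximation that dynamic switching energy grows with the square of the supply voltage. The sketch below applies that approximation. The two voltages are illustrative assumptions, not Claremont's published operating points, and the model ignores leakage, which in practice limits how low the voltage can usefully go.

```python
# Toy model: dynamic switching energy per operation is proportional to V^2
# (E = C * V^2). Leakage is ignored, so the result is an optimistic estimate.


def dynamic_energy(capacitance_farads: float, voltage: float) -> float:
    """Energy in joules to switch a capacitance once at a supply voltage."""
    return capacitance_farads * voltage ** 2


def efficiency_gain(nominal_v: float, reduced_v: float) -> float:
    """Ratio of energy per operation at nominal versus reduced voltage."""
    # Capacitance cancels in the ratio, so any positive value works here.
    c = 1e-9
    return dynamic_energy(c, nominal_v) / dynamic_energy(c, reduced_v)


# Hypothetical operating points chosen for illustration only.
NOMINAL_V = 1.2
NEAR_THRESHOLD_V = 0.4

gain = efficiency_gain(NOMINAL_V, NEAR_THRESHOLD_V)
print(f"{gain:.1f}x less energy per operation")
```

With these assumed voltages the model lands in the same range as the "eight to nine x" theoretical benefit Rattner described, though a real chip's figure depends on leakage and on how far frequency must drop.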

"Rather than having the processor go to sleep and then recover the system [this technology means Intel can] just take it right down to threshold and let the processor run slowly," he said. "When there's work to do, [it can] immediately bring it up in voltage and frequency and let it do whatever needs to be done, whether that's servicing an interrupt and then going back to ultra low-power operation."

Intel aims to use the techniques learnt in building Claremont to create ultra-efficient processors in the future, Rattner said, though it is unlikely the technology will make its way into an actual Intel product until the latter half of the decade.

In a briefing with journalists on Wednesday, Rattner admitted that the technology poses many challenges, especially when it is built on contemporary 14nm and 22nm processes. This is because variability in the quality of the transistors can cause problems for chips operating near threshold voltage, and Intel will need to develop techniques to deal with this.

Hybrid Memory Cube

Rattner also demonstrated a low-latency stacked-DRAM memory technology called the Hybrid Memory Cube. The cube is a four-high stack of DRAM chips with a logic die sitting underneath it. In the on-stage demonstration, it fed information to the processor at a rate of 1Tbps (one terabit per second).

Intel's Hybrid Memory Cube is a low-latency memory technology built from stacked DRAM chips. Photo credit: Jack Clark

"The logic die is absolutely critical because the higher performance transistors in the logic process are able to drive the vertical access and data lines which are in the DRAM stack very quickly," he said on Wednesday. "At the same time those transistors are able to realise a very low-voltage, very high-performance memory bus to the processor."

The Hybrid Memory Cube is another crucial technology if Intel is to build exascale computers, he said. If implemented, it will grow the amount of storage available for in-memory computation, an important feature for supercomputers that typically deal with massive datasets that need to be rapidly fed through to thousands of processors.

The cube comes out of a collaboration between Intel and its long-term flash and RAM development partner Micron, Rattner said.

"One of the biggest impediments to scaling the performance of servers and datacentres is the available bandwidth to memory and the associated cost," Bryan Casper, a design engineer for Intel, wrote in a blog on Thursday. "As the number of individual processing units ("cores") on a microprocessor increases, the need to feed the cores with more memory data expands proportionally."

Putting a logic die beneath the DRAM increases the efficiency with which data can be routed to the processor, he said. He compared it to "building a high-speed subway system" beneath the memory.
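Casper's point about feeding cores can be illustrated with simple arithmetic: if total memory bandwidth stays fixed, each core's share shrinks as cores are added, and holding the share constant requires bandwidth to grow in step with the core count. The figures below are hypothetical and chosen only to show the trend.

```python
# Per-core share of a fixed memory bandwidth as core count grows.
# All figures are hypothetical and chosen only to illustrate the trend.


def bandwidth_per_core(total_gbytes_per_s: float, cores: int) -> float:
    """Evenly divided memory bandwidth available to each core."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_gbytes_per_s / cores


def required_bandwidth(per_core_gbytes_per_s: float, cores: int) -> float:
    """Total bandwidth needed to keep every core's share constant."""
    return per_core_gbytes_per_s * cores


TOTAL_BANDWIDTH = 32.0  # GB/s, an assumed memory system
PER_CORE_TARGET = 8.0   # GB/s per core, an assumed requirement

for cores in (4, 32, 64):
    share = bandwidth_per_core(TOTAL_BANDWIDTH, cores)
    needed = required_bandwidth(PER_CORE_TARGET, cores)
    print(f"{cores:>2} cores: {share:.1f} GB/s each; "
          f"{needed:.0f} GB/s needed to hold {PER_CORE_TARGET:.0f} GB/s per core")
```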

Knights Ferry

Beyond changes to chip power and memory technologies, Intel is mounting an aggressive push into heterogeneous processors via its Knights Ferry many-integrated core (MIC) processor. The MIC architecture allows for many tens of cores — Knights Ferry has 32 and its successor, Knights Corner, is expected to have around 60 — to work on simple sums for large, parallel applications.

Rattner gave an update on what Intel's partners have been making of Knights Ferry.

He said that Cern, Europe's premier research centre for high-energy particle physics, had been using Knights Ferry MICs to process data generated by the Large Hadron Collider. He compared the speed of a computer with a MIC processor against one without at processing that data; the MIC-equipped system completed the computations around five times faster.

"We can put very advanced and dense workloads on the architecture," a Cern spokesman said on Thursday.

One problem Intel has been dealing with, Rattner said, is the perception that programming applications for heterogeneous processors, such as Knights Ferry, is difficult. "The way it's been portrayed you'd think you'd have to be some kind of freak to code these machines," he said.

Intel already faces competition in the many-integrated core processor space from chip start-up Tilera, which introduced a 100-core processor in June.

Rattner demonstrated a programming framework, Parallel Extensions for JavaScript, codenamed River Trail, which lets web applications use multi-core processors to execute code in parallel and so run faster.
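The general pattern behind this kind of framework is to apply one small function independently to every element of a collection, so that a runtime can spread the work across cores. The sketch below is a Python analogy using the standard library's `concurrent.futures`, not River Trail's JavaScript API; the `brighten` function, the `parallel_map` helper and the pixel data are made up for illustration. A thread pool keeps the example simple, so it shows the programming pattern rather than a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """Elemental function: brighten one 8-bit pixel, clamping at 255."""
    return min(pixel + amount, 255)


def parallel_map(func, items, workers: int = 4) -> list:
    """Apply func to each item independently; results keep input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(func, items))


# Hypothetical greyscale image data (one value per pixel).
pixels = [0, 100, 200, 250]
print(parallel_map(brighten, pixels))
```

Because each call to `brighten` depends only on its own input, the elements can be processed in any order or at the same time, which is what makes a photo-editing filter a natural fit for this style.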

"River Trail brings the processing power of Intel's multi-core CPUs and their vector extensions to web applications," Stephan Herhut, a research scientist for Intel Labs, wrote in a blog on Thursday. "With River Trail it will finally be possible to stay in the browser even for more compute-intensive applications like photo editing."
