Virtualizing the Enterprise: An overview

Virtualization has its roots in the mainframe era of the 1960s, and is particularly associated with IBM, whose CP-67/CMS, released in 1968, was the first commercial mainframe operating system to support a virtual machine architecture. (CP stands for Control Program, while CMS originally stood for Cambridge Monitor System and was later renamed Conversational Monitor System; CP created the virtual machines, which ran the user-facing CMS.)

PC-era virtualization kicked off in 1987 with Insignia Solutions' SoftPC, which allowed DOS programs to run on Unix workstations. A Mac version that also supported Windows applications appeared in 1989, followed by SoftWindows bundles containing SoftPC and a copy of Windows. Another notable PC virtualization pioneer was Connectix, whose Virtual PC and Virtual Server products were acquired by Microsoft in 2003 and re-released in 2006 and 2004 respectively. VMware, the current market leader in virtualization, released its first product, VMware Workstation, in 1999.

VMware Workstation is still widely used today, but the company's most significant releases were arguably its 2001 server virtualization products: GSX Server and ESX Server. GSX Server is an example of a Type 2 (or hosted) hypervisor that, like the Workstation product, runs on top of a conventional operating system. ESX Server is a more efficient Type 1 (or 'bare metal') hypervisor that runs directly on the underlying hardware. From these beginnings, VMware has evolved a market-leading ecosystem of virtualization products based around its vSphere platform.

Virtualization has become the key technology underpinning 'cloud-era' IT infrastructure because the ability to deploy virtual instances of servers, desktop PCs, storage devices and network resources allows existing hardware to be utilised efficiently, and makes for much better manageability, flexibility and scalability.

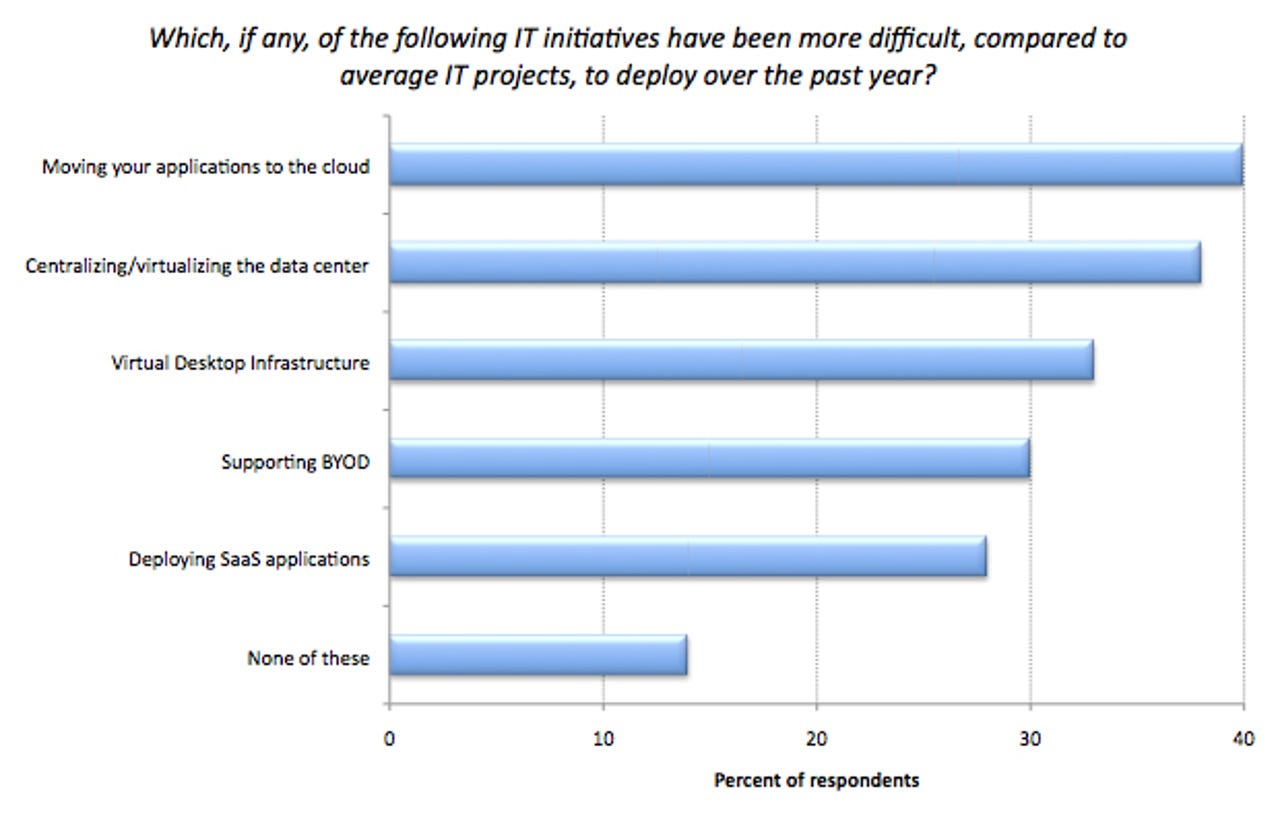

Before we look in detail at the main areas where virtualization plays a key role, it's worth noting a key finding from the recent Cisco 2013 Global IT Impact Survey, which canvassed 1,321 IT professionals in 13 countries. When asked which were the most difficult IT projects to deploy over the past year, the top three cited directly involve virtualization: moving applications to the cloud; centralizing/virtualizing the data center; and VDI (Virtual Desktop Infrastructure).

Virtualization may be the foundation of modern IT infrastructure, but today's IT professionals evidently find it challenging to get to grips with on the ground.

Even though virtualization is a specialist IT discipline, it's a little surprising to find that, in a July 2013 study sponsored by a group of Cisco partners, 40 percent of knowledge workers and 54 percent of non-IT workers had not even heard of it. Moreover, 80 percent of decision-makers (senior management, VPs and SVPs) were unaware of how virtualization will benefit their businesses, and only 34 percent of workers believed they were using virtualization in their workplaces.

Server virtualization

Server virtualization makes perfect sense both for in-house data centres and for service providers in the public cloud. In both cases, utilising servers to maximum efficiency and having the ability to scale virtual server provision rapidly as workload demand ebbs and flows is vital to keeping costs down and (if you're a service provider) revenues up.

A recent survey by market research company Vanson Bourne on behalf of networking vendor Brocade shows the level of penetration of server virtualization in company data centres. This study canvassed 1,750 IT decision-makers and office workers in companies ranging in size from 500 to over 3,000 employees in Europe (UK, France, Germany) and the USA.

Brocade's survey found that, on average, companies currently virtualize 46 percent of their servers, with more than half virtualizing at least 40 percent of their servers. In two years' time, the respondents expect 59 percent of their servers to be virtualized, on average, with three quarters of companies virtualizing at least 40 percent of their servers. Although it's well established, server virtualization is clearly still on the rise.

Another recent survey, conducted by storage virtualization specialist DataCore, canvassed 477 IT professionals worldwide, from a broad range of company sizes and industry sectors, and found VMware (vSphere) to be the clear leader in server virtualization software, followed by Microsoft (Hyper-V) and Citrix (XenServer).

Gartner's latest Magic Quadrant for x86 Server Virtualization Infrastructure (June 2013) backs up this finding, placing VMware and Microsoft in the 'leaders' quadrant, Citrix and Oracle as sole occupants of 'visionaries' and 'challengers' respectively, with Parallels and Red Hat in the 'niche players' corner.

Parallels' core market is service providers aimed at SMEs, while Red Hat Enterprise Virtualization is most often used in organisations with an existing Linux-based IT infrastructure.

Storage virtualization

Storage has always been important, but in the era of Big Data and real-time business analytics, it's becoming a core component of enterprise IT, and one in which virtualization has an increasingly important part to play. The key advantage of storage virtualization, as in other spheres, is to free IT managers from orchestrating multiple units of proprietary hardware by inserting a layer of software — a storage hypervisor — that creates easily manageable virtual 'pools' of efficiently utilised network storage.

These days, companies use a wide range of storage hardware, including high-end, mid-range and low-end hard disk arrays, solid-state and hybrid drives and flash memory on PCIe cards. The ability to manage these heterogeneous resources via software that can easily create and migrate virtual disks, and automatically match server workloads to the most appropriate type of storage, is highly desirable. Tiered virtual storage solutions also make vital business tasks like backup and disaster recovery a lot more manageable.
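The idea of matching workloads to the most appropriate type of storage can be illustrated with a minimal sketch. It is not how any particular storage hypervisor works: the `Tier` and `Workload` classes, the tier names and the latency, capacity and cost figures are all hypothetical, and the policy shown (the cheapest tier that meets the latency target and has room) is just one plausible rule.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tier:
    """A virtual storage pool backed by one class of hardware (illustrative)."""
    name: str
    max_latency_ms: float  # latency this tier is assumed to deliver
    free_gb: int
    cost_per_gb: float


@dataclass
class Workload:
    """A server workload that needs a virtual disk (illustrative)."""
    name: str
    size_gb: int
    latency_target_ms: float


def place(workload: Workload, tiers: List[Tier]) -> Optional[str]:
    """Return the name of the cheapest tier that is fast enough and has room."""
    candidates = [
        t for t in tiers
        if t.max_latency_ms <= workload.latency_target_ms
        and t.free_gb >= workload.size_gb
    ]
    if not candidates:
        return None  # nothing suitable: an administrator would have to step in
    chosen = min(candidates, key=lambda t: t.cost_per_gb)
    chosen.free_gb -= workload.size_gb  # reserve the capacity in the pool
    return chosen.name


# Hypothetical tiers: figures are made up for the example.
tiers = [
    Tier("pcie-flash", 0.2, 500, 8.0),
    Tier("hybrid-array", 5.0, 4000, 1.5),
    Tier("nearline-disk", 20.0, 20000, 0.3),
]

print(place(Workload("oltp-db", 200, 1.0), tiers))    # pcie-flash
print(place(Workload("archive", 5000, 50.0), tiers))  # nearline-disk
```

A latency-sensitive database lands on flash because nothing else is fast enough, while a large archive goes to the cheapest disk tier with enough free space. Real products weigh many more factors, such as IOPS, replication and observed access patterns.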

DataCore's recent State of Virtualization survey found that, of the 477 respondents, 66 percent (315) did not use external storage hypervisor software (such as DataCore's SANsymphony-V) to manage their storage. However, the 34 percent that did offered multiple reasons why they felt it was a good solution.

Reflecting the changing storage market, Gartner has recently (March 2013) combined a number of segmented Magic Quadrants into one, which covers General-Purpose Disk Arrays. The 'leaders' quadrant contains a sextet of familiar companies: EMC, NetApp, Hitachi, IBM, Dell and HP.

All of these disk array vendors offer storage virtualization solutions — EMC's VPLEX and NetApp's Data ONTAP are examples. Other storage companies worth following are flash specialists like Fusion-io, LSI and Violin Memory.

Network virtualization

The latest IT infrastructure component to come under the virtualization spotlight is the network. Network virtualization, or Software-Defined Networking (SDN) as it's generally known, decouples the control layer from the physical hardware that routes the network packets. SDN makes it easier for IT managers to configure heterogeneous network components and allows network traffic to be shaped to ensure that, where possible, demanding workloads get the required bandwidth, low latency and quality of service.
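The separation of control layer and forwarding hardware can be pictured with a toy model. This is a sketch under stated assumptions, not OpenFlow or any vendor's API: the `Switch` and `Controller` classes, the port numbers and the `"send-to-controller"` fallback are invented for illustration.

```python
class Switch:
    """Data plane: forwards traffic using only its local flow table."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination address -> output port

    def forward(self, dst):
        # With no matching rule, the switch defers to the controller.
        return self.flow_table.get(dst, "send-to-controller")


class Controller:
    """Control plane: holds the network-wide view and programs the switches."""

    def __init__(self):
        self.switches = {}

    def register(self, switch):
        self.switches[switch.name] = switch

    def install_route(self, dst, hops):
        """hops is a list of (switch_name, output_port) pairs along the path."""
        for switch_name, port in hops:
            self.switches[switch_name].flow_table[dst] = port


# Hypothetical two-switch network.
edge, core = Switch("edge-1"), Switch("core-1")
controller = Controller()
controller.register(edge)
controller.register(core)

print(edge.forward("10.0.0.5"))  # send-to-controller
controller.install_route("10.0.0.5", [("edge-1", 2), ("core-1", 7)])
print(edge.forward("10.0.0.5"))  # 2
```

The switches contain no routing logic of their own. All path decisions live in one place, which is what lets an administrator reshape traffic across heterogeneous hardware from a single point of control.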

SDN will often work in conjunction with another developing technology, network fabrics, which are designed to allow any node on the network to connect to any other over multiple physical switches. Some vendors see SDN and network fabrics as tightly integrated, while others — including the Open Networking Foundation (ONF), curator of the OpenFlow SDN protocol — view them as independent.

According to Brocade's June 2013 survey, both network fabrics and SDN are technologies for the future, with only around a fifth of companies currently using them, although 60 percent or more are investigating or planning to investigate network fabrics and SDN.

A broadly similar picture emerges from Cisco's Global IT Impact Survey, just under half of whose respondents are currently evaluating SDN. Nearly all of these evaluators are planning to implement the technology within the next two years.

Both Cisco and Brocade asked their survey respondents about the drivers for investing in software-defined networking, revealing a broad range of perceived benefits.

Software-defined networking is likely to make an impact first in the data centre, but is also applicable to campus networks and enterprise WANs. This market is too embryonic for specific vendor comparisons to have been made by market research firms like Gartner (which is still in the process of defining its taxonomy). However, most of the leading data center network infrastructure vendors are now offering SDN solutions. Recent Plexxi-sponsored research forecasts that the SDN market will be worth $35 billion by 2018, representing 40 percent of all network spending. The same April 2013 study estimates that there are 225 SDN companies, from a starting point of zero in 2009. Network virtualization is clearly in the establishment phase, but is already experiencing rapid growth.

Desktop virtualization

Desktop virtualization involves running virtual machines, each with its own desktop OS instance, on a server and accessing them from remote client devices (PCs, notebooks, thin clients, tablets or smartphones) over local- or wide-area networks. There are several delivery models, ranging from cloud-hosted desktop-as-a-service, to managed hosting, to on-premises deployment.

Advantages of desktop virtualization compared to traditional desktop computing include data security (data is stored in the data centre rather than on the local device), manageability, wide device support (you can run a virtual desktop on a BYOD tablet or a smartphone if you want to), easier backup and disaster recovery, and lower operational costs (client device troubleshooting, for example).

Disadvantages include the need for an always-on network connection with high enough bandwidth and low enough latency to deliver an acceptable desktop experience for users (particularly if they need to use graphically demanding applications), licensing issues (particularly if you're running Windows) and — if you're considering an in-house deployment — the capital cost of server, storage and networking infrastructure.

Before desktop virtualization came to prominence there was, and remains, the Terminal Services model, in which multiple remote desktop sessions are hosted by a single server operating system. With Windows Server 2012, Microsoft supports three flavours of desktop virtualization: Personal Desktops (each user gets their own 'stateful' virtual desktop); Pooled Desktops (multiple users share a 'stateless' virtual desktop); and Remote Desktop Sessions (formerly known as Terminal Services). Advantages of the Terminal Services model include rapid application deployment, security and low support costs.
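The difference between 'stateful' personal desktops and 'stateless' pooled desktops can be sketched with a toy connection broker. This is an illustration only, not Microsoft's implementation: the `DesktopBroker` class, its method names and the VM naming scheme are all hypothetical.

```python
class DesktopBroker:
    """Toy broker contrasting personal (stateful) and pooled (stateless) desktops."""

    def __init__(self, pool_size):
        self.personal = {}  # user -> dedicated VM, kept between sessions
        self.free_pool = [f"pool-{i}" for i in range(pool_size)]
        self.in_use = {}    # user -> pooled VM for the current session only

    def connect_personal(self, user):
        # The same user always gets the same VM, so settings and data persist.
        return self.personal.setdefault(user, f"personal-{user}")

    def connect_pooled(self, user):
        if user in self.in_use:
            return self.in_use[user]
        if not self.free_pool:
            raise RuntimeError("no pooled desktops available")
        vm = self.free_pool.pop(0)
        self.in_use[user] = vm
        return vm

    def logoff_pooled(self, user):
        # A real broker would also revert the VM to a clean image here.
        vm = self.in_use.pop(user)
        self.free_pool.append(vm)
```

With a pool of one, `connect_pooled("alice")` followed by `logoff_pooled("alice")` frees the same desktop for the next user, whereas `connect_personal("alice")` returns Alice's own desktop every time she connects.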

Desktop virtualization is another technology that most businesses are just getting to grips with (remember, it came above BYOD and deploying SaaS applications in the list of 'difficult' IT projects in Cisco's Global IT Impact Survey quoted earlier). In DataCore's March 2013 State of Virtualization survey, 55 percent of organisations had not undertaken a desktop virtualization project and only 11 percent had virtualized more than a quarter of their desktops.

As well as VMware (View), Citrix (XenDesktop) and Microsoft (Windows Server 2012), leading desktop virtualization vendors include Desktone, Unidesk, Virtual Bridges, Quest Software (now owned by Dell), MokaFive, NComputing, Oracle and Red Hat.

Application virtualization

Application virtualization decouples the installation of an application from the endpoint that's running it. This can be done via the desktop or server virtualization solutions described above, or by using application streaming technology: in the latter case, packaged applications are stored on a central server, downloaded over the network and cached on the client. Generally speaking, only a small percentage of an entire application needs to be delivered to the client before it can launch, with additional functionality being delivered on demand.
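The streaming model described above, where a small launch set is delivered first and the rest arrives on demand, can be sketched as follows. This is a simplified illustration rather than the mechanism of any specific product: the `StreamedApp` class, the block names and the dictionary standing in for the central server are assumptions.

```python
class StreamedApp:
    """Toy model of application streaming with a client-side cache."""

    def __init__(self, server_blocks, launch_blocks):
        self.server = server_blocks  # block name -> bytes; stands in for the central server
        self.cache = {}              # blocks already downloaded to the client
        self.fetches = 0             # number of downloads from the server
        for name in launch_blocks:   # only the launch set is fetched up front
            self._fetch(name)

    def _fetch(self, name):
        self.cache[name] = self.server[name]
        self.fetches += 1

    def use(self, name):
        """Return a block, downloading it on first use and caching it afterwards."""
        if name not in self.cache:
            self._fetch(name)
        return self.cache[name]


# Hypothetical package of four blocks; only "core" is needed to launch.
package = {"core": b"...", "print": b"...", "spellcheck": b"...", "help": b"..."}
app = StreamedApp(package, launch_blocks=["core"])
app.use("print")     # first use triggers a download
app.use("print")     # second use is served from the cache
print(app.fetches)   # 2
```

Here only two of the four blocks ever cross the network, which is the property that lets a streamed application start before it has been fully delivered.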

Whatever the delivery model, application virtualization makes for much easier app deployment and management compared to installing and maintaining local installations on multiple endpoints. This is especially true when it comes to tricky areas like licensing and, on client endpoints, application conflicts.

In DataCore's State of Virtualization survey, nearly one in five (19 percent) of respondents reported that all of their mission-critical applications would be virtualized by the end of this year, with over half (58 percent) saying that 80 percent or more would be virtualized. Heading the hit-list is a trio of Microsoft enterprise stalwarts: SQL Server, Exchange and SharePoint.

Leading application virtualization vendors include Cameyo, Citrix (XenApp), Evalaze, Numecent, Novell (ZENworks), Microsoft (App-V), Roozz, Spoon (formerly Xenocode), Symantec (Endpoint Virtualization) and VMware (ThinApp).

Conclusion

Today's server, storage, network, desktop and application virtualization technologies provide IT managers with the means to create flexible, scalable, manageable and secure infrastructure that utilises the underlying hardware to maximum efficiency. Some of these technologies — notably software-defined networking — are in the early stages of development while others, such as server virtualization, are mature and widely deployed.

IT professionals may still be getting to grips with virtualization in many businesses, and their users and senior management may be a little hazy on the technology and its benefits, but it's here to stay and is undoubtedly the future of business IT.