Busting deceptive benchmark charts (AMD this time) is necessary, but ultimately is not a solution

Yesterday, my fellow blogger George Ou (with whom I've sparred over the benchmarking practices of both Intel and AMD) called a set of comparative performance charts published by AMD "criminal". In a move reminiscent of the time I called Intel's benchmarking behavior felonious, George wrote:

.....this latest round of deceptive benchmarks is so outrageous that it’s criminal. On AMD’s “Barcelona” performance page, AMD shows the following fictitious and outdated information. Apparently some of these misleading numbers are even showing up on Wall Street Journal advertisements...

His accusations have provoked a thread of comments (114 of them and growing at last count), many of which take George's side, while a whole bunch of others take George to task over a publication "timing" issue. Wrote CFKane:

- Please double-check your facts before making wild accusations. Those graphs have not been posted just now after they announced the launch frequencies of 2.0GHz, but have been available on AMDs web site for a long time. Just google for the url and you'll see that people have been discussing them since May.

Both George and the ZDNet readers who are ganging up on him have legitimate points. If, for example, the charts and data were published months ago, at a time when the newer data George refers to wasn't yet available (be it in press briefings, WSJ ads, or whatever), I could see why readers might question George's accusations. But even if that's the case, was George really out of line? I think not (hold that thought; as crazy as that sounds, I'll tell you why in a sec). If, as George says, AMD is publishing benchmark data about a product that doesn't yet exist (or that can't be independently validated by a third-party benchmarking organization), then it doesn't matter when the benchmark was published. It's simply not a good idea to publish that data.

The truth is that George, his supporters, and his critics have the sort of discriminating eyes that most consumers of the benchmark data in question do not. In other words, whereas George & Co. can pore through the data and weed out the hype, there's a very good chance that others less capable of analyzing the raw data will be misled. Benchmark presentations shouldn't require such discriminating eyes, whether to understand the timing of the data or to discover where apples are being compared to oranges (too often the case). If this particular flare-up over benchmarking is evidence of anything, it's that, given the current approach to publishing and policing benchmarks, confusion is guaranteed.

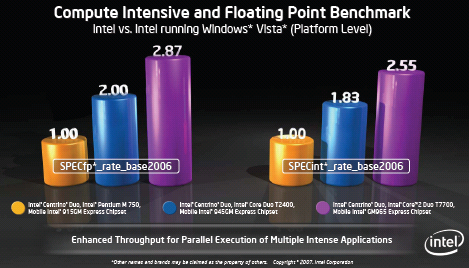

This was my message to Intel when I last took it to task over benchmarking best practices which is why I was actually pleasantly surprised this past May when, during a roll-out of the latest Mobile Core 2 Duo processors, the company wisely elected to not only stick to the most recently published benchmark data, in an attempt to keep apples from being compared to oranges, it ended up comparing its newer processors to its older processors rather than to mobile processors from its competitors. An example of one of the charts shown that day appears below and the entire presentation is available online.

While this doesn't excuse Intel's other benchmarking violations, it was one situation where the company probably recognized what those of us with discriminating eyes would have recognized had Intel attempted to compare the new mobile offerings to something from AMD: the comparisons might have shown Intel flattening AMD, but given the timing and availability of the test data, the credibility of the results would have been dubious at best. Taking the high road and pointing out, as best it could (it's really hard to do real apples-to-apples comparisons), the improvements that Intel's newer technology represented over its older gear was the right thing to do.

Still, a problem exists. No best practices are documented, which means chip vendors and other companies looking to benchmark their wares are on their own to figure out what the high road is and what the best practices are. Here, for example, are three best practices that might have kept the latest George Ou controversy from erupting:

- Outdated benchmark charts should not only be footnoted with the date their data was published (AMD's charts refer to the dates the data was plucked from SPEC.org) but also be watermarked with the word "outdated" once new data becomes available. An industry-standard definition of "outdated" would have to be determined.

- Current promotional pages (e.g., the official performance page for AMD's Barcelona) should always reflect the newest data available. It's true that this could mean keeping a great many pages up to date and constantly checking whether any of them contains outdated data that could mislead someone. But by the same token, misleading customers is not good PR.

- Benchmark charts should never include speculative data about unavailable products that can't be independently verified by a third party.

Is AMD the bad guy? Or is it really us? What I mean by "us" is that we as an industry have been pretty laissez-faire when it comes to benchmark publishing practices. In the '90s we had large, well-funded, independent benchmarking organizations like ZD Labs that made it difficult, if not impossible, for vendors to purposely or inadvertently mislead customers, analysts, Wall St., and others. Today, most of those organizations are gone, and what's left are a few watchdogs like George, who, like him or not, is at least drawing attention to a problem. Each of the various benchmarking organizations (e.g., SPEC.org) may have its own requirements for publishing benchmarks, but those requirements don't address the broader issue of when and how those benchmarks get used alongside other benchmarks. The resulting damage (again, whether purposeful or inadvertent) is material to a great many communities: customers, competitors, researchers, Wall St., and more.

This isn't just an AMD/Intel problem. It's a problem for anybody publishing benchmarks, be it chipmakers, system makers, operating system makers, or even companies like Apple that claim a certain amount of battery life for their devices based on scenarios that aren't even remotely close to those found in the real world.

It's time for the industry to recognize that the longer it takes to get together and solve this problem, the longer it is disrespecting itself. Letting things continue the way they are going benefits nobody. It's not good for customers being misled. It's not good for vendors that get nailed to the wall for deceptive practices. It's not good for anyone else, whether they're recommending stock strategies or making purchasing decisions. No matter how you look at it, it's a no-win situation, and one that must be corrected.