IT leader Cognizant evolves AI beyond 'hill climbing'

"Deep learning is neither deep, nor is it learning," says Babak Hodjat, the vice president of projects for "Evolutionary AI" at IT services giant Cognizant Technology Solutions.

Hodjat's critique is part of a fascinating exploration of AI taking shape at Cognizant, a twenty-five-year-old company based in Teaneck, New Jersey, that last year made nearly $16 billion in revenue serving some of the biggest companies in the world.

For years, this IT giant has talked about "digital transformation," something that is large and significant but also something hard to get one's mind around because it very often seems vague and undefined.

And then in December, Cognizant gave a whole new grounding and precision to that digital work by acquiring certain assets from an eleven-year-old AI startup, Sentient Technologies. The company, co-founded by Hodjat, has been pursuing a thrilling line of work in what's called "evolutionary computation," where many algorithms, including conventional artificial neural networks, can be tested in parallel for "fitness," to select an optimal network to perform a task.

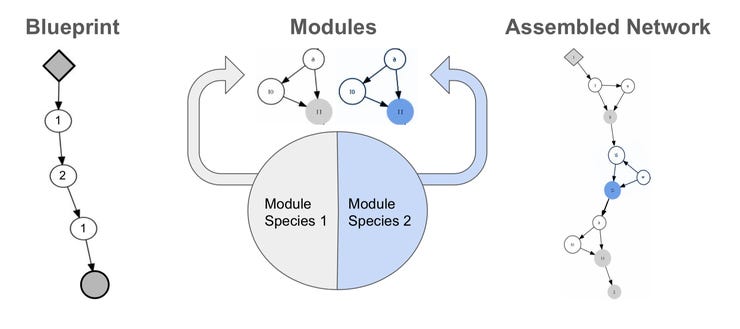

How Cognizant's LEAF technology assembles layers of a neural network by trying out different kinds of neural network "modules" inside of a blueprint.

To Hodjat, who came over to Cognizant along with the acquisition of the intellectual property, the work goes well beyond the optimization of mainstream deep learning. Deep learning focuses on optimizing the "parameters," or "weights," of a neural network, via what is known as stochastic gradient descent, through a process known as backpropagation.

"It's all just hill climbing," says Hodjat of deep learning, in an interview with ZDNet. He is making a critique that has been leveled against the field for decades, as far back as the criticisms of MIT scientists Marvin Minsky and Seymour Papert.

The "hill," in this case, is the geometric slope of values that backpropagation is navigating in order to find the optimal value, the peak of the hill. (Terminology can be a bit slippery here. In the common deep learning vocabulary, gradient descent is not going up the slope to an optimal point, it is trying to find the lowest "energy" point in a slope, which is often referred to as a global minimum. The nuances are important, but in a sense, the terms are complementary.)
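To make the complementarity concrete, here is a minimal sketch, not anything from Cognizant or Sentient. It assumes a toy one-parameter quadratic loss (the function names and the target value 3.0 are invented for illustration) and shows that descending the loss and climbing the "hill" of the negated loss take identical steps.

```python
def loss(w):
    # Toy quadratic loss (an assumption for illustration); minimum at w = 3.
    return (w - 3.0) ** 2


def grad(w):
    # Analytic derivative of the toy loss above.
    return 2.0 * (w - 3.0)


def gradient_descent(w, lr=0.1, steps=100):
    # Step against the gradient: walks downhill on loss(w).
    for _ in range(steps):
        w -= lr * grad(w)
    return w


def hill_climb(w, lr=0.1, steps=100):
    # Step along the gradient of -loss(w): walks uphill on the negated loss.
    for _ in range(steps):
        w += lr * (-grad(w))
    return w


w_down = gradient_descent(0.0)
w_up = hill_climb(0.0)
print(w_down, w_up)
```

Both routines end at the same point, which is why "hill climbing" and "finding a minimum" describe the same local search from opposite sides. On a toy loss with a single valley that point is also the global optimum; on the bumpy surfaces of real networks, neither view guarantees it.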

"Back-prop is expensive at many levels," says Hodjat of that energy function, echoing critiques by others such as Mike Davies of chip maker Intel. It doesn't match the efficiency of biological learning, he contends.

In contrast, in human development, "A human child observes and learns about something without having seen it before, with very low energy."

Hodjat runs a team of fifteen people out of Cognizant's San Francisco offices. The Evolutionary Innovation business is part of Cognizant's broader "Cognizant Digital Business," where Hodjat reports to Karthik Krishnamurthy, VP of Markets. The Digital unit consists of over thirty thousand people in Cognizant offices around the world.

With a total workforce at Cognizant of over 270,000, the Evolutionary AI group is just a tiny speck, in a sense, but it is also the tip of the spear for AI: The innovations from evolutionary computation will feed the digital operations broadly, Cognizant hopes.

Cognizant Technology Solutions executives, from left, Bret Greenstein, head of AI, Karthik Krishnamurthy, head of Cognizant Digital Business, and Babak Hodjat, co-founder of Sentient Technologies and head of "Evolutionary Innovation," at the company's "Collaboratory" facility on 42nd Street in Manhattan.

On a recent afternoon, Hodjat and Krishnamurthy, and another deputy of Krishnamurthy's, Bret Greenstein, who is the head of AI for the Digital business, sat down in the playful offices of what Cognizant calls the "Collaboratory," a workspace on 42nd Street in Manhattan.

The Collaboratory is designed to be a bit more like a startup environment, with couches in a living room-type setting, to explore a kind of work more familiar to millennial and "digital native" employees than the traditional office culture of Cognizant.

While Krishnamurthy has been with Cognizant for twenty years, Greenstein joined about six months ago, and was a lifelong IBM'er before.

"In terms of serious investment in AI, data science, and machine learning, we've been at this for the last five years," says Krishnamurthy. "Prior to that, Cognizant had been doing analytics for a long time."

AI can be the subject of vast debates, just like the kind between Facebook AI guru Yann LeCun and Intel's Davies, Greenstein notes. But for Cognizant to help its customers, such debates are less important than exploring all the options. "There are some cases where process automation is sufficient for clients, and some where it needs to be much more adaptive and learning."

Enter Hodjat and Sentient. The most recent example of their work is contained in a paper put out by Hodjat a week ago, "Evolutionary Neural AutoML for Deep Learning," which is posted on the arXiv pre-print server. It was co-authored with Risto Miikkulainen, a University of Texas professor of computer science who serves as CTO under Hodjat.

The paper describes a computer system called "CoDeepNEAT," which assembles layers of neurons of an artificial neural network by mixing different types of function in each layer, such as the convolutions of a convolutional neural network (CNN), or the "cell" of a typical recurrent neural network (RNN).

CoDeepNEAT begins with a network composed randomly of these layers, and it sets multiple designs in competition with one another in typical machine learning training. A supervisory program runs those multiple networks in parallel on Microsoft Azure, and determines the relative "fitness" of each proposed network, based on how each performs in the "validation" phase after training. In that way, many generations of possible networks can be tested to find an ideal combination of layers that leads to an optimal neural network.
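The generate-evaluate-select loop described above can be sketched in a few lines. This is a toy illustration, not CoDeepNEAT itself: the layer menu, the mutation scheme, and especially the `fitness` function are assumptions made up for the example. In a real system, fitness would come from training each candidate and scoring it on validation data.

```python
import random

# Hypothetical menu of layer "modules" a candidate network may contain.
LAYER_TYPES = ["conv", "lstm", "dense", "dropout"]


def random_network(rng, depth=4):
    # A candidate is just an ordered list of layer types.
    return [rng.choice(LAYER_TYPES) for _ in range(depth)]


def fitness(network):
    # Stand-in for a validation score after training (invented for this sketch):
    # the fraction of positions that match an arbitrary "good" design.
    target = ["conv", "conv", "dense", "dropout"]
    return sum(a == b for a, b in zip(network, target)) / len(target)


def mutate(network, rng):
    # Copy the parent and swap one randomly chosen layer.
    child = list(network)
    child[rng.randrange(len(child))] = rng.choice(LAYER_TYPES)
    return child


def evolve(generations=30, pop_size=20, seed=0):
    rng = random.Random(seed)
    population = [random_network(rng) for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        parents = ranked[: pop_size // 4]  # keep the fittest quarter unchanged
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return max(population, key=fitness), history


best, history = evolve()
print(best, fitness(best))
```

Because the fittest quarter of each generation survives unchanged, the best score never gets worse from one generation to the next. In practice the expensive step is the fitness evaluation, which is why the article's supervisory program farms candidates out to run in parallel.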

On benchmark tests, including automatically rating the "toxicity" of Wikipedia comments, or classifying chest x-ray images, the CoDeepNEAT approach "handily beat" the performance of some very well-established methods for "discovering" neural networks, such as Google's "AutoML" and Microsoft's "TLC" system, says Hodjat, with evident pride.

In a sense, CoDeepNEAT is an AutoML mechanism. "We are working with groups in Google Brain on these problems, we talk on joint papers," Hodjat notes.

Presentation by Babak Hodjat on the virtues of "evolutionary computation" for problem learning.

A key element of CoDeepNEAT, Hodjat points out, is that the multiple networks can be compared across multiple different tasks, simultaneously, rather than the typical deep learning approach of training a single network on a single task such as a classifier.

That's important because the multi-network, multi-task training reveals not only which networks do better, but which are most efficient at producing a given test result. Searching hundreds, perhaps thousands of possible combinations is an approach unique to evolution, Hodjat points out, which can lead to better solutions than optimizing on a single network via hill climbing.

"Evolution brings in the notion of creativity and traversal of the search space, it is not stochastic gradient descent, and it is not hill climbing," says Hodjat. While there is a "fitness function" that is optimized, it doesn't involve back-propagating errors to improve weights of a single network. Rather, CoDeepNEAT balances "novelty search" and exploiting what is discovered by it.

"Suppose you have been to Palo Alto," Hodjat offers as an analogy, "and you want to go to Chicago. You can put a little pin in the map for Palo Alto, to mark a place from which you start. As you move through a map, you have these markers of places that guide you where to move forward or backward or around." A classic example in machine learning is the problem of an AI "agent" trying to get from one room in a 2-D map to another room. Early in the task, the agent is helped by emphasizing novelty, striking out in directions it has never been.

"If you make good progress toward your objective, you might downplay novelty and then switch back to it if you get stuck in local optima," says Hodjat.
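How Sentient's system actually strikes that balance is not public, so the following is only a generic sketch of the idea in the quote. It assumes candidates are points on a 2-D map, novelty is the mean distance to the nearest previously visited points, and a single weight (all names here are hypothetical) shifts between objective and novelty depending on whether progress was made.

```python
import math


def novelty(point, archive, k=3):
    # Mean distance to the k nearest previously visited points.
    # An empty archive yields 0.0 by convention in this sketch.
    if not archive:
        return 0.0
    nearest = sorted(math.dist(point, other) for other in archive)[:k]
    return sum(nearest) / len(nearest)


def blended_score(objective, novelty_value, novelty_weight):
    # novelty_weight = 0 is pure exploitation; 1 is pure novelty search.
    return (1.0 - novelty_weight) * objective + novelty_weight * novelty_value


def update_novelty_weight(weight, improved, step=0.2):
    # Downplay novelty while the objective improves; lean on it again when stuck.
    if improved:
        return max(0.0, weight - step)
    return min(1.0, weight + step)


archive = [(0.0, 0.0), (1.0, 0.0)]
near = novelty((0.5, 0.0), archive)
far = novelty((5.0, 5.0), archive)
print(near < far)
```

A search loop would call `update_novelty_weight` once per generation and rank candidates by `blended_score`, so that a stalled search drifts toward unexplored territory and a productive one concentrates on the objective.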

"As new knowledge comes in, you change your view of the world," he explains. In contrast, "Deep [learning] networks don't operate that way."

How CoDeepNEAT balances novelty search with exploitation is the subject of several patents already granted or applied for by Hodjat and his team, the "secret sauce," as they say.

Productizing all this will take Cognizant in new directions, armed with new capabilities. Sentient came to Cognizant with a product called "LEAF" that bundles all this technology together into a production system.

LEAF can be used to help a company figure out "how do I run my business better," says Greenstein. A customer will come to Cognizant with a prediction problem, say, to improve customer conversions, or improve quality or reduce cost in a product.

"We can go in and affect your prediction rates by twenty to thirty percent," says Greenstein. "We can augment that process for the client, it's something no one else can do."

Cognizant is in "late conversations" with two clients to use the technology, a packaged consumer goods company, and an insurance company.

"It is high value for sure," says Greenstein. "We expect definitely more than a double-digit premium" over rates Cognizant typically charges, he maintains.

With major IT resources behind LEAF, Cognizant may not just make money, it may lead the charge in "industrializing" a form of AI, making it more approachable and usable outside of just the mega-cap tech companies that have dominated the field such as Google and Facebook.

A question at the end of the day, one perhaps not immediately of concern to Cognizant, is whether evolutionary computation of the LEAF sort will lead to "artificial general intelligence," or AGI, the supposed Holy Grail of machine learning.

Hodjat knows something about creating computer systems that seem to capture some aspects of natural understanding. He is best known for work years ago that ultimately led to the Siri assistant technology that runs on Apple's iPhone. That project was done while he was working for a software maker called Dejima, Inc., under contract to legendary research firm SRI International.

"I'm not a big fan of AGI," says Hodjat. "It is a myth we strive toward, we don't even know what it means, but it is aspirational." Ultimately, he says, intelligence is "population-based," it is "a population of intelligent yet independent minds that are collaborating and often competing, and the emergent behavior of these actors is us." The generalizability of human knowledge, the ability to "apply our intelligence very broadly," emerges as a phenomenon of the many actions of humans learning across many tasks.

But all that is a challenge for another day. For the time being, Hodjat's boss is satisfied with how LEAF will enhance Cognizant's toolkit for practical purposes.

"AI is a piece of technology that leverages algorithms that learn and produce output that is more accurate and more scalable than what we have been doing traditionally," says Krishnamurthy. "We can leverage it to really help clients solve problems."
