Meta's AI luminary LeCun explores deep learning's energy frontier

So-called energy-based models, which borrow from statistical physics concepts, could lead to deep learning forms of AI that make abstract predictions, says Yann LeCun, Meta's chief scientist.

Three decades ago, Yann LeCun, while at Bell Labs, formalized an approach to machine learning called convolutional neural networks that would prove to be profoundly productive in solving tasks such as image recognition. CNNs, as they're commonly known, are a workhorse of AI's deep learning, winning LeCun the prestigious ACM Turing Award, the equivalent of a Nobel for computing, in 2019.

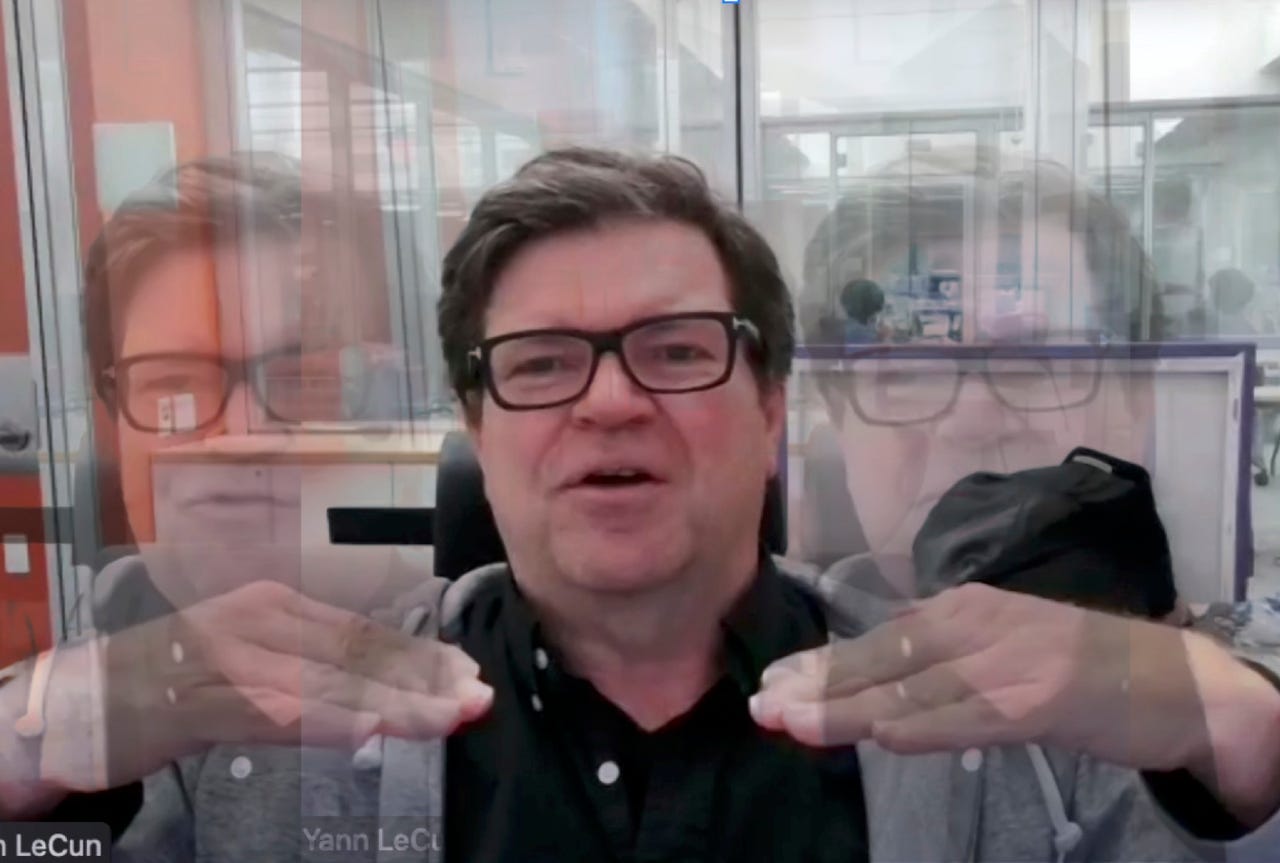

These days, LeCun, who is both a professor at NYU and chief scientist at Meta, is the most excited he's been in 30 years, he told ZDNet in an interview last week. The reason: New discoveries are rejuvenating a long line of inquiry that could turn out to be as productive in AI as CNNs are.

That new frontier that LeCun is exploring is known as energy-based models. Whereas a probability function is "a description of how likely a random variable or set of random variables is to take on each of its possible states" (see Deep Learning, by Ian Goodfellow, Yoshua Bengio & Aaron Courville, 2016), energy-based models simply score the accordance between two variables. Borrowing language from statistical physics, energy-based models posit that the energy between two variables rises if they're incompatible and falls the more they are in accord. This can remove the complexity that arises in "normalizing" a probability distribution, that is, making the probabilities of all possible states add up to one.

It's an old idea in machine learning, going back at least to the 1980s, but there has been progress since then toward making energy-based models more workable. LeCun has given several talks in recent years as his thoughts on the matter were developing, including a talk at the Institute for Advanced Study in Princeton in 2019, reported on by ZDNet.

More recently, LeCun has described the current state of energy-based model research in two papers: "Barlow Twins," published last summer with colleagues from Facebook AI Research, and "VICReg," which was published in January with FAIR and France's Inria at the École normale supérieure.

There are intriguing parallels to quantum electrodynamics in some of this, as LeCun acknowledged in conversation, though that is not his focus. His focus is on what kinds of predictions can be advanced for AI systems.

Using a version of modern energy-based models that LeCun has developed, what he calls a "joint embedding model," LeCun believes there will be a "huge advantage" to deep learning systems, namely that "prediction takes place in an abstract representation space."


That opens the way, LeCun argues, to "predicting abstract representations of the world." Deep learning systems with abstract prediction abilities may be a path to planning in a general sense, where "stacks" of such abstract prediction machines can be layered to produce planning scenarios when the system is in inference mode.

That may be an important tool to achieve what LeCun believes can be a unified "world model" that would advance what he refers to as autonomous AI, something capable of planning by modeling dependencies across scenarios and across modalities of image, speech, and other inputs about the world.

What follows is an edited version of our conversation via Zoom.

ZDNet: First things first, to help orient us, you've talked recently about self-supervised learning in machine learning, and the term unsupervised learning is also out there. What is the relationship of unsupervised learning to self-supervised learning?

Yann LeCun: Well, I think of self-supervised learning as a particular way to do unsupervised learning. Unsupervised learning is a bit of a loaded term and not very well defined in the context of machine learning. When you mention that, people think about, you know, clustering and PCA [principal component analysis], things of that type, and various visualization methods. So self-supervised learning is basically an attempt to use essentially what amounts to supervised learning methods for unsupervised learning: you use supervised learning methods, but you train a neural net without human-provided labels. So take a piece of a video, show a segment of the video to the machine, and ask it to predict what happens next in the video, for example. Or show it two pieces of video, and ask it, Is this one a continuation of that one? Not asking it to predict, but asking it to tell you whether those two scenes are compatible. Or show it two different views of the same object, and ask it, Are those two things the same object? So, this type of thing. So, there is no human supervision in the sense that all the data you give the system is input, essentially.
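Self-supervised pretext tasks of the kind LeCun lists need no annotation because the training targets come from the data itself. The Python sketch below was written for this article rather than taken from any FAIR code; it assumes integers as stand-ins for video frames and uses a hypothetical helper, `make_pretext_pairs`, to label each pair as compatible (the true next segment) or incompatible (a segment from elsewhere in the clip).

```python
import random

def make_pretext_pairs(frames, seg_len, rng):
    """Turn an unlabeled frame sequence into (x, y, label) training triples.

    label 1: y is the segment that immediately follows x (compatible).
    label 0: y is a segment taken from elsewhere in the clip (incompatible).
    The labels come from the order of the data, not from a human annotator.
    """
    segments = [frames[i:i + seg_len]
                for i in range(0, len(frames) - seg_len + 1, seg_len)]
    if len(segments) < 3:
        raise ValueError("need at least three segments to draw a negative")
    pairs = []
    for i in range(len(segments) - 1):
        x = segments[i]
        pairs.append((x, segments[i + 1], 1))
        # any segment other than x itself and its true continuation
        others = [j for j in range(len(segments)) if j not in (i, i + 1)]
        pairs.append((x, segments[rng.choice(others)], 0))
    return pairs

# Hypothetical "clip" of 12 frames, cut into four 3-frame segments.
pairs = make_pretext_pairs(list(range(12)), seg_len=3, rng=random.Random(0))
for x, y, label in pairs:
    print(x, y, label)
```

The binary label matches LeCun's "Is this one a continuation of that one?" example; a predictive variant would instead ask the model to produce y from x.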

ZDNet: You have given several talks in recent years, including at the Institute for Advanced Study (IAS) located in Princeton, New Jersey, in 2019 and, more recently, in a February talk hosted by Baidu about methods for what is called energy-based approaches to deep learning. Do those energy-based models fall into the self-supervised segment of unsupervised learning?

YL: Yes. Everything can be subsumed in the context of an energy-based model. I give you an X and a Y; X is the observation, and Y is something the model is supposed to capture the dependency of with respect to X. For example, X is a segment of video, Y is another segment, and I show X and Y to the system; it's supposed to tell me if Y is a continuation of X. Or two images: are they distorted versions of each other, or are they completely different objects? So, the energy measures this compatibility or incompatibility, right? It would be zero if the two pieces were compatible, and some large number if they are not.
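A toy energy function shows the two properties LeCun describes: zero energy for a compatible Y, a large number otherwise. It assumes a 1-D signal whose "correct" continuation simply extends the last step of X, a hypothetical rule chosen only for illustration. Inference in an energy-based model then means picking the Y with the lowest energy.

```python
def energy(x, y):
    """Hypothetical energy: 0 when y continues x's last step, growing quadratically otherwise."""
    step = x[-1] - x[-2]
    expected = [x[-1] + step * (k + 1) for k in range(len(y))]
    return sum((yk - ek) ** 2 for yk, ek in zip(y, expected))

def infer(x, candidates):
    """Inference by energy minimization: return the candidate y with the lowest energy."""
    return min(candidates, key=lambda y: energy(x, y))

x = [1, 2, 3]
print(energy(x, [4, 5, 6]))                          # 0  (compatible)
print(energy(x, [9, 0, 1]))                          # 75 (incompatible)
print(infer(x, [[9, 0, 1], [4, 5, 6], [3, 3, 3]]))   # [4, 5, 6]
```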


And you have two strategies to train energy-based models. The first one is, you show it compatible pairs of X, Y, and you also show it incompatible pairs of X, Y: two segments of video that don't match, views of two different objects. And so, for those [the incompatible pairs], you want the energy to be high, so you push the energy up somehow. Whereas for the ones that are compatible, you push energy down.
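The contrastive recipe can be written as a loss on energies. This is a minimal sketch of one common margin-based form, assuming squared Euclidean distance between embeddings as the energy and a margin of 1.0; both choices, and the function names, are illustrative rather than taken from LeCun's siamese-net work.

```python
def sq_dist(a, b):
    """Energy of a pair: squared Euclidean distance between two embeddings."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def contrastive_loss(pos_pairs, neg_pairs, margin=1.0):
    """Margin-based contrastive loss, averaged over all pairs.

    Compatible pairs pay their energy, so minimizing pushes it down toward 0.
    Incompatible pairs pay only while their energy is below `margin`, so
    minimizing pushes it up until it clears the margin.
    """
    pos = sum(sq_dist(a, b) for a, b in pos_pairs)
    neg = sum(max(0.0, margin - sq_dist(a, b)) for a, b in neg_pairs)
    return (pos + neg) / (len(pos_pairs) + len(neg_pairs))

# Already-separated pairs cost nothing; pairs in the wrong regime are penalized.
print(contrastive_loss([([0.0, 0.0], [0.0, 0.0])], [([0.0, 0.0], [2.0, 0.0])]))
print(contrastive_loss([([0.0, 0.0], [0.5, 0.0])], [([0.0, 0.0], [0.5, 0.0])]))
```

Only incompatible pairs whose energy still sits below the margin contribute a push; the rest cost nothing, which hints at why a large space needs so many negatives.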

Those are contrastive methods. And at least in some contexts, I invented them for a particular type of self-supervised learning called "siamese nets." I used to be a fan of them, but not anymore. I changed my mind on this. I think those methods are doomed. I don't think they're useless, but I think they are not sufficient because they don't scale very well with the dimension of those things. There's that line: all happy couples are happy in the same way; all unhappy couples are unhappy in different ways. [Tolstoy, Anna Karenina: "Happy families are all alike; every unhappy family is unhappy in its own way."]

It's the same story. There are only a few ways two images can be identical or compatible; there are many, many ways two images can be different, and the space is high-dimensional. So, basically, you would need an exponentially large number of contrastive samples whose energy you push up to get those contrastive methods to work. They're still quite popular, but they are really limited in my opinion. So what I prefer is the non-contrastive methods, or so-called regularized methods.
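A back-of-the-envelope calculation, offered as an illustration rather than as LeCun's own argument, shows why random contrastive samples become uninformative as dimension grows. Assume the "compatible" region is a ball of radius 0.5 around a data point at the center of the unit cube [0, 1]^d, and that negatives are drawn uniformly from the cube. The chance that a negative even lands near the data, where pushing energy up matters, is the ball's volume, which shrinks at least exponentially with d.

```python
import math

def ball_fraction(d, radius=0.5):
    """Fraction of the unit cube [0, 1]^d lying within `radius` of its center.

    With radius 0.5 the ball is inscribed in the cube, so this is the
    probability that a uniformly drawn sample lands in that neighborhood.
    """
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1) * radius ** d

for d in (2, 10, 20, 50):
    print(d, ball_fraction(d))
```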

"There are only a few ways two images can be identical or compatible; there are many, many ways two images can be different, and the space is high-dimensional."

And those methods are based on the idea that you're going to construct the energy function in such a way that the volume of space to which you give low energy is limited. And it can be implemented by a term in the loss function or a term in the energy function that says, minimize the volume of space that can take low energy, somehow. We have many examples of this. One of them is sparse coding, which goes back to the 1990s. And what I'm really excited about these days is those non-contrastive methods applied to self-supervised learning.
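Sparse coding is a concrete example of a regularized, non-contrastive energy. In this minimal sketch the energy is E(y, z) = 0.5 * ||y - W z||^2 + lam * ||z||_1 for a fixed dictionary `W`: the L1 penalty means only inputs that can be rebuilt from a few dictionary columns get low energy, which limits the low-energy volume without any negative samples. The code z is found with ISTA (iterative shrinkage-thresholding), one standard solver; the dictionary and `lam` values are arbitrary assumptions.

```python
import math

def sparse_coding_energy(y, W, z, lam):
    """Reconstruction error plus an L1 penalty on the code z."""
    recon = [sum(W[i][j] * z[j] for j in range(len(z))) for i in range(len(y))]
    err = sum((y[i] - recon[i]) ** 2 for i in range(len(y))) / 2
    return err + lam * sum(abs(v) for v in z)

def soft_threshold(v, t):
    """Shrink v toward zero by t; values smaller than t in magnitude become 0."""
    return math.copysign(max(abs(v) - t, 0.0), v)

def ista(y, W, lam, steps=200):
    """Minimize the energy over z by a gradient step on the error, then shrinkage."""
    m, n = len(y), len(W[0])
    step = 1.0 / sum(w * w for row in W for w in row)  # 1/||W||_F^2 keeps steps stable
    z = [0.0] * n
    for _ in range(steps):
        recon = [sum(W[i][j] * z[j] for j in range(n)) for i in range(m)]
        resid = [y[i] - recon[i] for i in range(m)]
        grad = [-sum(W[i][j] * resid[i] for i in range(m)) for j in range(n)]
        z = [soft_threshold(z[j] - step * grad[j], step * lam) for j in range(n)]
    return z

W = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]  # hypothetical 2x3 dictionary
y = [1.0, 0.2]
z = ista(y, W, lam=0.1)
print(z, sparse_coding_energy(y, W, z, 0.1))
```

In a full sparse-coding model the dictionary W is itself learned; it is held fixed here to keep the inference step readable.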

ZDNet: And you have discussed, in particular, in your talks, what you call the "regularized latent variable energy-based model," the RLVEB. Are you saying that's the way forward, the new convolutional neural nets of the 2020s or 2030s?

YL: Well, let me put it this way: I've not been as excited about something in machine learning since convolutional nets, okay? [Laughs] I'm not sure it's the new convolutions, but it's really something I'm super-excited about. When I was talking at IAS, what I had in mind was this regularized latent variable generative model. They were generative models because, what you do, if you want to apply it to something like video prediction, you give it a piece of video, you ask it to predict the next segment of video.

Now, I also changed my mind about this in the last few years. Now, my favourite model is not a generative model that predicts Y from X. It's what I call the joint embedding model that takes X, runs it through an encoder, if you like, a neural net; takes Y, and also runs it through an encoder, a different one; and then prediction takes place in this abstract representation space. There's a huge advantage to this.
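The joint embedding idea can be sketched with hand-written functions standing in for the two encoders and the predictor. Everything here is a hypothetical toy: X carries a position, a velocity and an unpredictable "nuisance" feature; Y carries the next position and another nuisance feature. Because the encoders discard the nuisance features, the energy, computed in representation space, does not depend on them. In a real system the encoders and predictor would be learned neural nets.

```python
def enc_x(x):
    """Hypothetical encoder for X: keeps (position, velocity), drops the nuisance feature."""
    return [x[0], x[1]]

def enc_y(y):
    """A different hypothetical encoder for Y: keeps only the position."""
    return [y[0]]

def predictor(sx):
    """Predicts the representation of Y from the representation of X."""
    return [sx[0] + sx[1]]

def energy(x, y):
    """Energy measured in the abstract representation space, not in input space."""
    sy_hat = predictor(enc_x(x))
    sy = enc_y(y)
    return sum((a - b) ** 2 for a, b in zip(sy_hat, sy))

print(energy([1.0, 2.0, 0.37], [3.0, -5.1]))  # compatible, whatever the nuisance values
print(energy([1.0, 2.0, 0.0], [5.0, 0.0]))    # incompatible position
```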

First of all, why did I change my mind about this? I changed my mind because we didn't know how to do this before. Now we have a few methods to do this that actually work. And those methods have appeared in the last two years. The ones I am pushing, there are two, actually, that I produced; one is called VICReg, the other one is called Barlow Twins.

ZDNet: And so what progress do you think you might see along this line of reasoning in the next five to ten years?

YL: I think now we have at least an approach that can carry us toward systems that can learn to predict in abstract space. They can learn abstract representations at the same time as they learn to predict what's going to happen, over time or over states, in that abstract space. And that's an essential piece if you want to have an autonomous intelligent system, for example, that has some model of the world that allows it to predict in advance what's going to happen in the world, either because the world is evolving or as the consequence of its actions. So, given an estimate of the state of the world and given an action you're taking, it gives you a prediction for what the state of the world might be after you take the action.

ENERGY-BASED MODEL: "VICReg," short for "Variance-Invariance-Covariance Regularization for Self-Supervised Learning," is LeCun's latest take on the energy-based neural network architecture. A set of images is transformed by two different pipelines, and each distorted image is sent into an encoder that essentially compresses it. Then, a projector, also known as an "expander," unpacks that compressed representation into a final "embedding," Z. It is the similarity between these two embeddings, invariant to their distortion, that allows the program to find the appropriate low-energy level where something is identified.
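The three terms named in VICReg can be sketched as a loss over a batch of embeddings from the two pipelines. This pure-Python version is a minimal sketch based on the paper's description, not FAIR's implementation: invariance is the mean squared distance between paired embeddings, variance is a hinge keeping each dimension's standard deviation above 1, and covariance penalizes off-diagonal covariance between dimensions. The weights 25, 25 and 1, and eps = 1e-4, follow the defaults reported in the paper to the best of our knowledge; treat them as assumptions.

```python
import math

def invariance_term(Z, Zp):
    """Mean squared distance between the two embeddings of each image."""
    return sum(sum((a - b) ** 2 for a, b in zip(z, zp)) for z, zp in zip(Z, Zp)) / len(Z)

def variance_term(Z, gamma=1.0, eps=1e-4):
    """Hinge on each dimension's std: penalize dimensions whose spread drops below gamma."""
    n, d = len(Z), len(Z[0])
    total = 0.0
    for col in zip(*Z):
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / (n - 1)
        total += max(0.0, gamma - math.sqrt(var + eps))
    return total / d

def covariance_term(Z):
    """Sum of squared off-diagonal covariances, scaled by the dimension."""
    n, d = len(Z), len(Z[0])
    means = [sum(z[j] for z in Z) / n for j in range(d)]
    total = 0.0
    for a in range(d):
        for b in range(d):
            if a != b:
                cov = sum((z[a] - means[a]) * (z[b] - means[b]) for z in Z) / (n - 1)
                total += cov ** 2
    return total / d

def vicreg_loss(Z, Zp, lam=25.0, mu=25.0, nu=1.0):
    return (lam * invariance_term(Z, Zp)
            + mu * (variance_term(Z) + variance_term(Zp))
            + nu * (covariance_term(Z) + covariance_term(Zp)))

spread = [[2.0, 0.0], [-2.0, 0.0], [0.0, 2.0], [0.0, -2.0]]
collapsed = [[1.0, 1.0]] * 4  # every image mapped to the same point
print(vicreg_loss(spread, spread), vicreg_loss(collapsed, collapsed))
```

The collapsed batch is exactly the failure mode the variance term exists to penalize; the invariance term alone would score it as perfect.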

And that prediction also depends on some latent variable you cannot observe. Like, for example, when you're driving your car, there's the car in front of you; it could brake, it could accelerate, turn left or turn right. There's no way for you to know in advance. So that's the latent variable. And so the overall architecture is something where, you know, you take X and Y, the initial segment of video and the future video, and embed them in some neural net, so you have two abstract representations of those two things. And in that space, you run one of those latent-variable, energy-based predictive models.
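A latent-variable energy can be sketched by minimizing over the unobserved choice: Y counts as compatible with X if some value of the latent explains it. In this hypothetical toy, X is the gap in meters to the car ahead, Y is the gap one second later, and the latent is what that car does, reduced to three discrete options; turning is left out. A real model would use learned encoders and, most likely, a continuous, regularized latent.

```python
# Hypothetical discrete latent: what the car ahead does during the next second,
# expressed as the resulting change (in meters) of the gap between the two cars.
LATENTS = {"brake": -2.0, "keep_speed": 0.0, "accelerate": 2.0}

def energy(x, y, z):
    """E(x, y, z): squared error between the observed next gap y and the prediction x + z."""
    return (y - (x + z)) ** 2

def free_energy(x, y):
    """F(x, y) = min over z of E(x, y, z)."""
    return min(energy(x, y, z) for z in LATENTS.values())

def explain(x, y):
    """Return the latent value that best explains the observed outcome."""
    return min(LATENTS, key=lambda name: energy(x, y, LATENTS[name]))

print(free_energy(10.0, 8.0), explain(10.0, 8.0))  # gap shrank: explained by braking
print(free_energy(10.0, 20.0))                     # no latent value explains this well
```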

The point is, the model now is predicting abstract representations of the world. It's not predicting all the details of the world, many of which can be irrelevant. So, you're driving this car on the road; you might have an ultra-complex motion of the leaves of a tree on the side of the road. And there's absolutely no way you can predict this, or you don't want to devote any energy or resources to predicting this. So the encoder might, essentially, eliminate that information before any prediction is made.

ZDNet: And do you foresee, again, in the next five to ten years, some specific milestones? Or goals?

"So, how do you get a machine to plan? If you have a predictive model of the world... then you can have the system imagine its course of action, imagine the resulting outcome."

YL: What I foresee is that we could use this principle -- I call this the JEPA architecture, the Joint Embedding Predictive Architecture, and there is a blog post on this, and there is a long paper I'm preparing on this -- and what I see from this is that we now have a tool to learn predictive models of the world, to learn representations of percepts in a self-supervised manner without having to train the system for a particular task. And because the systems learn abstract representations both of X and Y, we can stack them. So, once we've learned an abstract representation of the world around us that can allow us to make short-term predictions, we can stack another layer that would perhaps learn more-abstract representations that will allow us to make longer-term predictions.

That would be essential to get a system to learn how the world works by observation, by watching videos, right? So, babies learn by, basically, watching the world go by, and they learn intuitive physics and everything we know about the world. And animals do this, too. And we would like to get our machines to do this. And so far, we've not been able to do that. So that, in my opinion, is the path towards doing this: using the joint embedding architecture and stacking them in a hierarchical fashion.

The other thing it might help us build is deep learning machines that are capable of reasoning. So, a topic of debate, if you want, is that what deep learning has been good at so far is perception, you know, things where here is an input, here is the output. What if you want a system to basically reason, plan? There's a little bit of that going on in some of the more complex models, but really not that much.

And so, how do you get a machine to plan? If you have a predictive model of the world, if you have a model that allows the system to predict what's going to happen as a consequence of its actions, then you can have the system imagine its course of action, imagine the resulting outcome, and then feed this to some cost function which, you know, characterizes whether the task has been accomplished, something like that. And then, by optimization, possibly using gradient descent, figure out a sequence of actions that minimizes that objective. We're not talking about learning; we're talking about inference now, planning. In fact, what I'm describing here is a classical way of planning in optimal control, called model-predictive control.
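The planning loop LeCun describes, optimizing a sequence of actions through a predictive model to minimize a cost, can be sketched in a few lines. Assumptions: `world_model` is a hand-written stand-in for a learned predictor (next state = state + action), the cost is squared distance to a goal plus a small action penalty `rho`, and gradients come from central finite differences rather than backpropagation. Nothing is learned here; only the actions are optimized, which is inference.

```python
def world_model(state, action):
    """Stand-in for a learned predictor: the action shifts the state directly."""
    return state + action

def rollout_cost(s0, actions, goal, rho=0.1):
    """Imagine the trajectory, then score the outcome plus a small effort penalty."""
    s = s0
    for a in actions:
        s = world_model(s, a)
    return (s - goal) ** 2 + rho * sum(a * a for a in actions)

def plan(s0, goal, horizon=5, lr=0.05, steps=300, h=1e-5):
    """Gradient descent on the action sequence, gradients by central differences."""
    actions = [0.0] * horizon
    for _ in range(steps):
        grad = []
        for t in range(horizon):
            up = actions[:]
            up[t] += h
            dn = actions[:]
            dn[t] -= h
            grad.append((rollout_cost(s0, up, goal) - rollout_cost(s0, dn, goal)) / (2 * h))
        actions = [a - lr * g for a, g in zip(actions, grad)]
    return actions

acts = plan(0.0, 10.0)
print(acts, sum(acts))
```

Because the action penalty trades off against reaching the goal, the plan stops slightly short of 10; for this toy the optimum is (goal - s0) / (rho + horizon) per step.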

The difference with optimal control is that we do this with a learned model of the world as opposed to a kind of hard-wired one. And that model would have latent variables that would handle the uncertainty of the world. This can be the basis of an autonomous intelligent system that is capable of imagining the future and planning a sequence of actions.

I want to fly from here to San Francisco; I need to get to the airport, catch a plane, etc. To get to the airport, I need to get out of my building, go down the street, and catch a taxi. To get out of my building, I need to get out of my chair, go towards the door, open the door, go to the elevator or the stairs. And to do that, I need to figure out how to decompose this into millisecond-by-millisecond muscle control. That's called hierarchical planning. And we would like systems to be able to do this. Currently, we are not able to really do this. These general architectures could provide us with those things. That's my hope.

ZDNet: The way you have described the energy-based models sounds a little bit like elements of quantum electrodynamics, such as the Dirac-Feynman path integral or the wave function, where it's a sum over a distribution of amplitudes of possibilities. Perhaps it's only a metaphorical connection, or perhaps there is actually a correspondence?

YL: Well, it's not just a metaphor, but it's partially different. When you have a latent variable, and that latent variable can take a bunch of different values, typically, what you do is cycle through all the possible values of this latent variable. This may be impractical. So, you might sample that latent variable from some distribution. And then what you're computing is the set of possible outcomes. But, really, what you're computing, in the end, is some cost function, which gives an expected value where you average over the possible values of that latent variable. And that looks very much like a path integral, actually. In the path integral, you're computing the sum of energy over multiple paths, essentially, at least in the classical way. In the quantum way, you're not adding up probabilities or scores; you're adding up complex numbers, which can cancel each other. We don't have that in ours, although we've been thinking about things like that -- at least, I've been thinking about things like that. But it's not used in this context. But the idea of marginalization over a latent variable is very much similar to the idea of summing up over paths or trajectories; it's very similar.
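One standard way to write marginalization over a latent variable in energy terms is the free energy F = -(1/beta) * log(sum over z of exp(-beta * E(z))). The sketch below is an illustration with made-up energies rather than anything from LeCun's models: it computes F stably and shows that as the inverse temperature `beta` grows, F approaches the minimum energy (the "pick the best latent" case), while at small `beta` every value of z contributes, much like a sum over paths.

```python
import math

def free_energy(energies, beta):
    """Soft minimum over latent values: -(1/beta) * log(sum(exp(-beta * E))).

    Subtracting the minimum first keeps exp() from underflowing at large beta.
    """
    m = min(energies)
    s = sum(math.exp(-beta * (e - m)) for e in energies)
    return m - math.log(s) / beta

energies = [1.0, 2.0, 3.0]  # hypothetical energies for three latent values
for beta in (0.1, 1.0, 10.0, 100.0):
    print(beta, free_energy(energies, beta))
```

Because the sum includes the lowest-energy term, F never exceeds the minimum energy; additional plausible latent values lower it further.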

ZDNet: You have made two rather striking assertions. One is that the probabilistic approach of deep learning is out. And you have acknowledged that the energy-based models you are discussing have some connection back to approaches of the 1980s, such as Hopfield Nets. Care to elaborate on those two points?

"Consciousness is a consequence of the limitations of our brains. If we had an infinite-sized brain, we wouldn't need consciousness."

YL: The reason why we need to abandon probabilistic models is because of the way you can model the dependency between two variables, X and Y; if Y is high-dimensional, how are you going to represent the distribution over Y? We don't know how to do it, really. We can only write down a very simple distribution, a Gaussian or mixture of Gaussians, and things like that. If you want to have complex probability measures, we don't know how to do it, or the only way we know how to do it is through an energy function. So we write an energy function where low energy corresponds to high probability, and high energy corresponds to low probability, which is the way physicists understand energy, right? The problem is that we never, we rarely know how to normalize. There are a lot of papers in statistics, in machine learning, in computational physics, etc., that are all about how you get around the problem that this normalization term, the partition function, is intractable.
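The normalization problem can be made concrete with a toy energy over binary vectors. In this sketch (an illustration; the energy form and bias values are arbitrary assumptions) turning energies into probabilities requires the partition function Z = sum over y of exp(-E(y)), and brute-force evaluation visits all 2^n configurations. This particular energy treats units independently, so Z also has a closed form to check against; once units interact, no such shortcut exists in general.

```python
import itertools
import math

def energy(y, b):
    """Toy energy for a binary vector y with per-unit biases b (independent units)."""
    return -sum(bi * yi for bi, yi in zip(b, y))

def partition_function(b):
    """Brute-force Z: one exp() per configuration, 2**len(b) of them."""
    total, count = 0.0, 0
    for y in itertools.product((0, 1), repeat=len(b)):
        total += math.exp(-energy(y, b))
        count += 1
    return total, count

def probability(y, b):
    """Low energy -> high probability, but only after dividing by Z."""
    Z, _ = partition_function(b)
    return math.exp(-energy(y, b)) / Z

b = [0.5, -1.0, 2.0]
Z, count = partition_function(b)
print(count, Z, probability((1, 0, 1), b))
```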

What I'm basically advocating is, to forget about probabilistic modeling, just work with the energy function itself. It's not even necessary to make the energy take such a form that it can be normalized. What it comes down to in the end is, you should have some loss function that you minimize when you're training your model that makes the energy of things that are compatible low and the energy of things that are incompatible high. It's as simple as that.

ZDNet: And the connection to things such as Hopfield Nets?

YL: Certainly Hopfield Nets and Boltzmann Machines are relevant for this. Hopfield Nets are energy-based models that are trained in a non-contrastive way, but they are extremely inefficient, which is why nobody uses them.
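A classical Hopfield net makes the energy view concrete. In this minimal sketch, patterns of +1/-1 are stored with the Hebbian rule, which only digs energy minima at the stored patterns (no energy is pushed up anywhere), and recall runs asynchronous unit updates that never increase the energy. The pattern size and contents are arbitrary.

```python
def hebbian_weights(patterns):
    """Store +/-1 patterns with the Hebbian rule: W_ij = sum over patterns of p_i * p_j, zero diagonal."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j]
    return W

def hopfield_energy(W, s):
    """E(s) = -1/2 * sum_ij W_ij s_i s_j; stored patterns sit in low-energy minima."""
    n = len(s)
    return -0.5 * sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(W, s, sweeps=5):
    """Asynchronous updates: set each unit to the sign of its input field."""
    s = list(s)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(W[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s

pattern = [1, -1, 1, -1, 1, -1]
W = hebbian_weights([pattern])
corrupted = [-1] + pattern[1:]  # flip the first unit
print(hopfield_energy(W, corrupted), hopfield_energy(W, pattern), recall(W, corrupted))
```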

Boltzmann Machines are basically a contrastive version of Hopfield Nets, where you have data samples, and you lower the energy of them, and you generate other samples, and you push their energy up. And those are slightly more satisfying in some way, but they don't work very well either because they're contrastive methods, and contrastive methods don't scale very well. They're not used either, for that reason.


ZDNet: So, are the regularized, latent variable energy-based models really to be thought of as Hopfield Net 2.0?

YL: No, I wouldn't say that.

ZDNet: You have made another rather striking assertion that there is "only one world model" and that consciousness is "the deliberate configuration of a world model" in the human brain. You have referred to this as perhaps a crazy hypothesis. Is this conjecture on your part, this crazy hypothesis, or is there evidence for it? And what would count as evidence in this case?

YL: Yes, and yes. It's a conjecture, it's a crazy idea. Anything about consciousness, to some extent, is conjecture, and crazy idea, because we don't really know what consciousness is in the first place. My point of view about it is that it's a bit of an illusion. But the point I'm making here, which is slightly facetious, is that consciousness is thought of as, kind-of, this sort-of ability that humans and some animals have because they are so smart. And the point I'm making is that consciousness is a consequence of the limitations of our brains: we need consciousness because we have this single, kind-of, world model engine in our head, and we need something to control it. And that's what gives us the illusion of consciousness. But if we had an infinite-sized brain, we wouldn't need consciousness.

There is at least some evidence that we have, more or less, kind of a single simulation engine in our head. And the evidence for this is that we can basically only attempt one conscious task at any one time. We concentrate on the task; we sort of imagine the consequences of the actions that we plan. You can only do one of those at a time. You can do multiple tasks simultaneously, but they are subconscious tasks that we have trained ourselves to do without thinking, essentially. We can talk to someone next to us while we're driving after we have practiced driving long enough that it's become a subconscious task. But in the first hours that we learn to drive, we're not able to do that; we have to concentrate on the task of driving. We have to use our world model prediction engine to figure out all possible scary scenarios of things that can happen.

ZDNet: If something like this is conjecture, then it doesn't really have any practical import for you in your work at the moment, does it?

YL: No, it does, for this model for autonomous AI that I've proposed, which has a single configurable world model simulation engine for the purpose of planning and imagining the future and filling in the blanks of things that you cannot completely observe. There is a computational advantage to having a single model that is configurable. Having a single engine that you configure may allow the system to share knowledge across tasks: things that are common to everything in the world that you've learned by observation, or things like basic logic. It's much more efficient to have that big model that you configure than to have a completely separate model for different tasks which may have to be trained separately. But we see this already, right? It used to be, back in the old days at Facebook -- when it was still called Facebook -- that for the vision systems we were using to analyze images, to do ranking and filtering, we had specialized neural nets, specialized convolutional nets, basically, for different tasks. And now we have one gigantic one that does everything. We used to have half a dozen ConvNets; now, we have only one.

So, we see that convergence. We even have architectures now that do everything: they do vision, they do text, they do speech, with a single architecture. They have to be trained separately for the three tasks, but this work, data2vec, uses the same self-supervised approach for all three.

ZDNet: Most intriguing! Thank you for your time.