Tailoring networking to the cloud

Ten-gigabit Ethernet network switch vendor Arista Networks has been something of an industry poster child since its inception five years ago. It took its time producing its first products, but is now expanding into the UK.

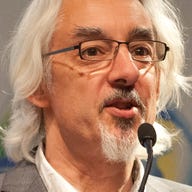

The company is led by chief executive Jayshree Ullal [pictured], who once headed Cisco's datacentre-networking division. Arista was set up by Ullal, along with chairman Andy Bechtolsheim, a co-founder of Sun, and chief scientist David Cheriton, also an alumnus of Sun and Cisco.

Funding comes from Bechtolsheim and Cheriton, so the company, an early 10Gb Ethernet player, has had the independence to develop technology at its own pace.

When Ullal was in London recently, ZDNet caught up with her to talk about the company's European expansion plans, the future of high-speed networking and how high-performance computing (HPC) is becoming mainstream.

Q: What brings you to London?

A: We started in the US, and our business is heavily weighted towards the US but, just as I believe that 2010 is the year of 10Gb Ethernet, I also believe 2010 is the year of our expansion in Europe. We're building channels and partners in the UK — we have seven to 10 partners across Europe, the Middle East and Africa, and we've just created a UK subsidiary.

You play heavily in the cloud-computing market. Where is the cloud-computing trend going?

You have to look at what happened to processors. They went from simple CPUs that kept getting faster to multicore devices with tremendous capacity. Two years ago, 20 percent of servers in the datacentre were [HPC], and the rest were enterprise. Now that ratio is 50:50.

[That change in ratio] is because virtualisation has reduced the number of servers you need. But also, multicore web-facing applications have grown. So cloud computing is more about web-facing applications needing dense computing and multicore capabilities.

To do cloud computing well, you need a good networking infrastructure. Some networking vendors, including my former employer, have taken the view that whatever works in the enterprise world can be brought to the cloud world.

This is where Arista's approach diverges. Instead of focusing first on building a 10Gb switch, we focused on building a purpose-built architecture on which you can then build a 10Gb switch. We spent the better part of four or five years building the software, the Extensible Operating System.

We probably didn't entirely know what we were designing for at the time, but we did know there were five properties of cloud computing that we had to tailor for networking: scale, low latency, high resilience, extensibility and openness, which allows you to run tools on the switch rather than taking up CPU on the server. All these issues are unique to cloud.

You have to look at the key applications that drive the traffic, which is where you see the bifurcation between the classic 1Gb datacentre and a more performance-intensive datacentre. For example, traffic between client and server used to be 80:20 server to client, but now it's an equal distribution.

As a result, we think 10Gb is a healthy market, with [market analysts] Dell'Oro and IDC reports telling you there were 10 million ports sold in 2009, going to 13 million in three years.

You say this is the year of 10Gb. Why?

It's to do with the rise of faster, denser CPUs and the availability of cost-effective controllers and [network interface controllers]: a 10Gb ecosystem. And then there are key applications, such as high-frequency trading, life sciences, biological DNA molecule development, advanced imaging, HPC verticals such as oil and gas, education and research.

What are the key challenges for datacentre managers today who are seeking to move to next-generation facilities?

Most important is just the construction of a datacentre — they have to worry about power, cooling and so on, but the next thing is to ask: do they go for a classic datacentre, or do they think about the combination of their storage, server and network in a different way from the past?

From a people point of view, you need a general practitioner. They need to understand what the server scaling looks like — same with storage and networking.

It's about understanding the traffic patterns. For example, one of the most widely adopted applications for us is high-frequency trading. We used to say latency doesn't matter, but now you can lose millions of dollars for each microsecond of latency. InfiniBand was the only technology that could achieve this, but now 10Gb Ethernet can do it too.

How will you compete with Cisco? Won't their Unified Computing approach lock you out?

We don't compete with them. Cisco's Unified Computing approach is about moving into the server business, and is a big play against HP and so on. We work with anyone's servers. Servers are very cost-conscious purchases with lots of factors. There's no overlap with Cisco: their focus is as a one-stop shop with complete integration; ours is best-of-breed products.

Would you like Cisco to buy you?

Not particularly. We have our own value proposition and differentiation. Along the way, if things happen, that's very different from planning for things to happen.

Your blog talks a lot about high-performance computing and about datacentres/cloud. Are you splitting your forces by attacking two markets simultaneously?

They're the same market in different aspects. HPC has a heavy legacy of latency and web computing, but enterprise datacentres are hybrids.

Our sweet spot has been in HPC, but our expanding product line and road map are changing that.

HPC's need for low latency is our bread and butter, but the line between the HPC datacentre and the classic datacentre is getting thinner; they're not different audiences but different applications.

If this is the year of 10Gb, when will the year of 100Gb be?

I think 100Gb will be a relevant niche through to 2012 — there won't be mainstream 100Gb deployments for five to 10 years.

As a result of installing 10Gb, you'll start to need faster interconnects of either 40Gb or 100Gb. We have customers who are building multiple aggregated links already, so you'll see that happen.

But native 100Gb is still very expensive from an optics point of view — we need to see radical improvements in cost performance, and technology miniaturisation and design. There's a lot of work going into DWDM and optical wavelengths to improve this, but it's five to 10 years out.

Can you see 100Gb Ethernet going over copper?

The laws of physics are already challenging putting 10Gb over existing Cat-6 or Cat-7, so I think you'd need a new cable, Cat-8 or Cat-10 maybe, but fibre will be so widespread that it may not matter. It's not a last-mile technology but an uplink technology and, for that, fibre is fine.