Teradata’s journey into the cloud

Teradata turned 40 years old this year, putting it in the ranks of a small group of IT household names, such as IBM, SAS, and Oracle, that were either born during, or (in IBM's case) endured, the evils of polyester and disco. When the company was formed, the notion of a terabyte of data was practically unimaginable. Fortunately, when someone put up a slide showing how the frontiers of storage have passed from gigabyte to terabyte, petabyte, and zettabyte, we breathed a sigh of relief that Teradata didn't feel the need to change its name to keep current.

To recap, Larry Dignan summed up the headlines from last week's annual Teradata Universe event. The highlight, in our minds, was Teradata's embrace of a cloud-native architecture and business model.

Teradata has just seen a major changing of the guard, marked by the ascent of Oliver Ratzesberger to CEO earlier this year and major changes to the rest of the C-suite. To us, the significance is that this is the first time in decades that Teradata has been led by a technologist rather than a businessman.

We'll start with a fact that hasn't changed. Teradata defines its addressable market as what it terms "Megadata Companies": the organizations with the knottiest analytic problems in terms of complexity and scale. In other words, it's not competing with the MySQLs, PostgreSQLs, Redshifts, or Snowflakes of the world for the broader market of data mart or midsized data warehousing workloads. That places Teradata on a collision course with Cloudera. Hold that thought.

Another thing that hasn't changed is the company's attempt to wean itself off hardware, a message that has waxed and waned in the years since Teradata first introduced its "open systems" platforms back in the 1990s. Until now, that was a tough sell, because the types of workloads that Teradata excels in (extremely complex SQL with numerous joins, across terabytes or more of data, with support for very high concurrency) required specially optimized hardware.

Teradata used to sell specialized appliances geared to variations in workload: compute-intensive, IOPS-intensive, and/or high-concurrency. With the latest generation of hardware, branded IntelliFlex, those variations can be configured through software. There are still Teradata-specific features, such as the Bynet interconnect, but with trends such as faster Ethernet, we expect the playing field with commodity hardware to eventually level.

But the even better news is that the cloud may finally make the whole issue academic, settling once and for all that Teradata is a software company. And that's where this year's chapter in the journey comes in. The cloud-native architecture of Teradata's platform, now called Vantage, separates data from compute and offers native support for object storage as just another tier. Teradata is hardly the only player to embrace cloud-native architecture. In fact, we just talked about SAP making the same shift with HANA, and Cloudera and Hadoop are making it too. As we've previously noted, cloud object storage is becoming the de facto data lake.

With compute and storage separated, the way is clear for Teradata to start offering consumption-based pricing. If you're using Teradata's managed cloud service, there's no more complex Tcore pricing; instead, you pay as you would for any managed cloud service: for the amount of storage, and then only for the compute that you use. That's an important step toward making Teradata easier to do business with, and embracing pay-as-you-go pricing will make the service far more accessible.
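To make the shape of consumption-based pricing concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Rates` class, the dollar figures, and the "compute unit-hour" meter are illustrative placeholders, not Teradata's actual price list or metering. The contrast function models a generic always-on provisioned cluster (about 730 hours in an average month), not Tcore pricing specifically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    # Hypothetical rates for illustration only; not Teradata's real prices.
    storage_per_tb_month: float   # charge per terabyte stored per month
    compute_per_unit_hour: float  # charge per compute unit per hour used


def monthly_bill(rates: Rates, stored_tb: float, compute_unit_hours: float) -> float:
    """Consumption-based bill: storage for what is kept, compute only for hours used."""
    storage = rates.storage_per_tb_month * stored_tb
    compute = rates.compute_per_unit_hour * compute_unit_hours
    return round(storage + compute, 2)


def provisioned_bill(rates: Rates, stored_tb: float, provisioned_units: float,
                     hours_in_month: float = 730) -> float:
    """Generic always-on contrast: every provisioned unit is billed all month."""
    return monthly_bill(rates, stored_tb, provisioned_units * hours_in_month)


rates = Rates(storage_per_tb_month=25.0, compute_per_unit_hour=2.0)

# 50 TB stored; 4 compute units busy 8 hours a day for 22 working days.
print(monthly_bill(rates, 50, 4 * 8 * 22))
# Same storage, but 4 units kept running around the clock.
print(provisioned_bill(rates, 50, 4))
```

The point of separating the two terms is that, once storage and compute are decoupled, idle compute stops showing up on the bill while the data stays put.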

We'll take this a step further. The current Vantage release includes support for containerization. The next logical step will be extending support to Kubernetes. For Teradata, that would open a path to creating, and potentially managing, private clouds for customers leery about moving their workloads into the public cloud. We wouldn't be surprised if Teradata adds Kubernetes support next year.

Behind these developments is, as noted above, the growing parity of commodity hardware. But it also speaks to the increasing variety of cloud compute instances. On AWS, for instance, you can now select from a portfolio of EC2 instances optimized for compute, memory, accelerated computing, or storage, just as in the old days you chose Teradata appliances optimized for compute, IOPS, or mixed workloads. You also have a variety of storage options. Meanwhile, cloud providers are adding specialized processors tailored to specific workloads, such as the TPUs optimized for TensorFlow workloads on Google Cloud. Sealing the deal, cloud provider backplanes are becoming fast enough to offer "good enough" alternatives to the highly optimized infrastructure of traditional Teradata appliances.

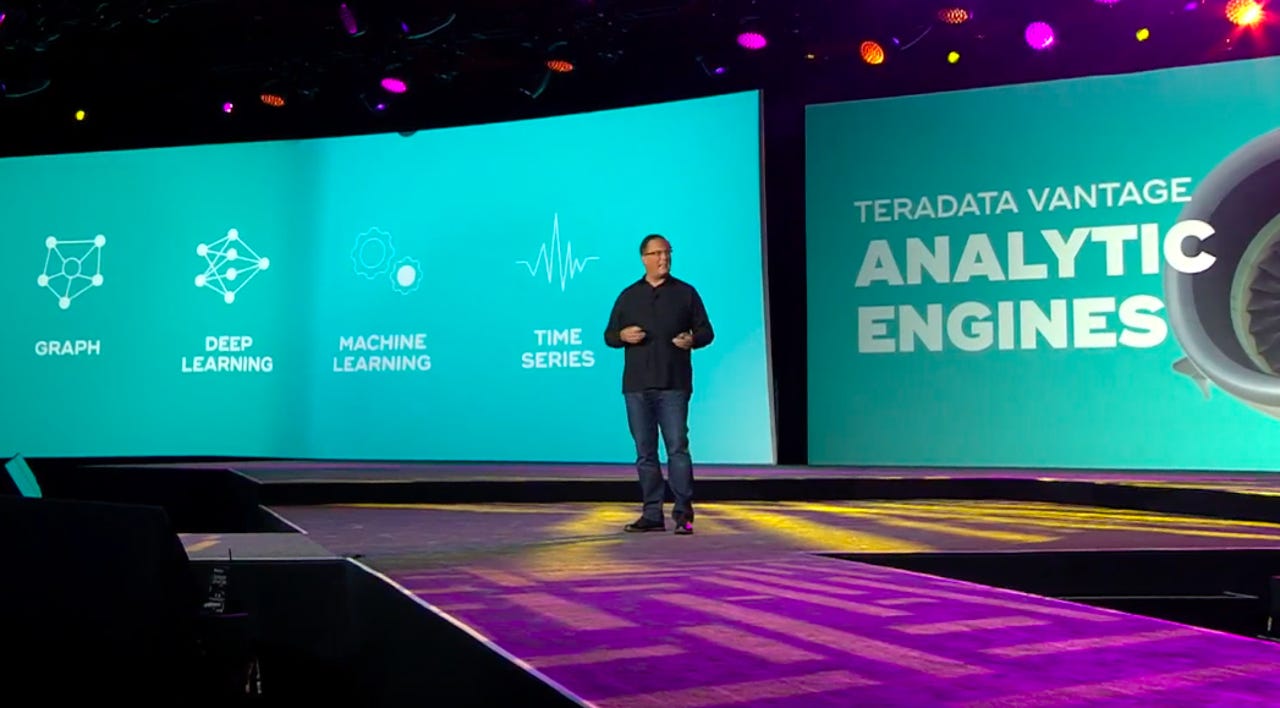

The other key trend is the positioning of Vantage as, in effect, a multi-workload platform. And, as noted above, that's where it collides with Hadoop. Key to Vantage's development is that it represents the fruition of the Aster Data Discovery platform. At the time, Teradata kept the Aster platform separate from the mother ship, targeting it at organizations working with Big Data analytics. The result was confusion across the Teradata base as to whether Aster would become strategic or be pigeonholed. Aster's contribution was the hundreds of libraries that extended SQL to support approaches like MapReduce, graph, machine learning, and R and Python programming. Without Aster, the Vantage platform would not have been as well rounded.

Of course, Teradata is not alone in making the relational database more extensible. Traditionally, common practice was to extract data or tables onto a laptop and then view and analyze them in frameworks such as pandas; increasingly, database vendors are bringing R and Python processing directly into the database. The advantage is eliminating the need to copy data (and wind up with multiple versions of the truth), not to mention taking advantage of the database's own execution engine to scale the running of models.
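The difference between the two patterns can be sketched in a few lines of Python. This is an illustration under stated assumptions: the standard-library `sqlite3` module stands in for an analytic database (it is not Teradata or Vantage), and the `sales` table and its rows are made up. Pattern 1 copies every row to the client and aggregates there; pattern 2 pushes the aggregation into the engine so only the result set leaves the database.

```python
import sqlite3

# sqlite3 is only a stand-in for an analytic database; the table is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 100.0), ("west", 250.0), ("east", 50.0), ("west", 25.0)],
)

# Pattern 1: extract every row to the client, then analyze locally.
# Every row crosses the wire and a second copy of the data now exists.
rows = conn.execute("SELECT region, amount FROM sales").fetchall()
local_totals = {}
for region, amount in rows:
    local_totals[region] = local_totals.get(region, 0.0) + amount

# Pattern 2: push the computation down to the database engine.
# Only one aggregated row per region is returned.
pushed_totals = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region").fetchall()
)

print(len(rows), "rows extracted vs", len(pushed_totals), "rows returned")
print(local_totals == pushed_totals)
```

Both paths produce the same answer; the pushdown path simply avoids materializing a client-side copy, which is the "multiple versions of the truth" problem the paragraph describes.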

Today, Microsoft supports in-database Python and R processing in SQL Server 2019 via user-defined functions; Oracle is adding R and Python APIs for running models in-database; and IBM supports Go, Ruby, Python, PHP, Java, and Node.js (including the Sequelize ORM), among other languages, for working with Db2. The ability to use Jupyter notebooks for developing and deploying models to run in-database has also become table stakes. Teradata's differentiator is a rich portfolio of analytic libraries optimized for the database.
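As a rough analogy for scoring a model through a user-defined function, the Python sketch below registers a function with a SQL engine and calls it from a `WHERE` clause. Treat it as an assumption-laden stand-in: it uses the standard-library `sqlite3` method `Connection.create_function`, where the UDF runs inside the same Python process as the embedded engine. It is not how SQL Server, Oracle, Db2, or Vantage implement in-database Python. The `churn_score` "model", its coefficients, and the `customers` table are all hypothetical.

```python
import math
import sqlite3


def churn_score(tenure_months: float, support_calls: float) -> float:
    """Hypothetical logistic 'model'; the coefficients are made up for illustration."""
    z = -1.0 - 0.05 * tenure_months + 0.4 * support_calls
    return 1.0 / (1.0 + math.exp(-z))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, tenure REAL, calls REAL)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, 24, 0), (2, 3, 6), (3, 12, 2), (4, 1, 4)],
)

# Register the Python function so SQL can call it by name with two arguments.
conn.create_function("churn_score", 2, churn_score)

# The model is applied row by row by the query engine; only matching ids come back.
at_risk = [
    row[0]
    for row in conn.execute(
        "SELECT id FROM customers WHERE churn_score(tenure, calls) > 0.5 ORDER BY id"
    )
]
print(at_risk)
```

The design point carries over to the real products: the data stays where it is, and the model is brought to the rows rather than the rows to the model.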

The result is that Teradata believes it has a more credible alternative for organizations that have struggled with Hadoop. It has announced a Hadoop Migration program that includes a multiphase engagement of assessment, planning, and implementation. The program arrives alongside the promotion of "relational data lakes" by the likes of SAP. The guiding notion is that, given the large existing skills base of SQL developers, making SQL extensible to support some of the new programmatic analytics approaches will be more practical for organizations that lack adequate skills in Python or R. Not surprisingly, an impromptu expo-floor session introducing the new Hadoop migration program drew a standing-room-only crowd.

The program also comes in the wake of Hadoop's midlife crisis, which has seen the merger of Cloudera and Hortonworks and HPE's fire-sale acquisition of MapR. It's become fashionable to decry Hadoop as dead, and unfortunately, Teradata has been inconsistent in its messaging about Hadoop. Some of its slides refer to Hadoop as "dying technology," yet its practitioners on the ground acknowledge that Hadoop will continue to play a role, and Teradata still has a partnership with Cloudera. Teradata needs to clean up its Hadoop messaging and be more positive. Relational data lakes are well suited to organizations where SQL skills predominate. If Teradata positions Hadoop migration as a better match for its customers' skill sets, it should avoid the unnecessary noise and distraction that inconsistent messaging would otherwise throw in its path.