When this new technology arrives, cellphone cameras are not gonna suck

There's a difference between snapshots and photographs. Cellphone and PDA cameras are fine for snapshots, but most of these devices kind of suck at certain types of photos, especially shots in dim light or of moving subjects.

Admit it. Whether it's an iPhone, a BlackBerry, or just your garden-variety Samsung, your cellphone camera ain't the greatest.

Why is this? Not for lack of pixels or resolution, but because, to save power, most of these mobile devices have flashes that are either much weaker than those on digital cameras or absent altogether. That makes it really difficult to get a well-exposed image in low light.

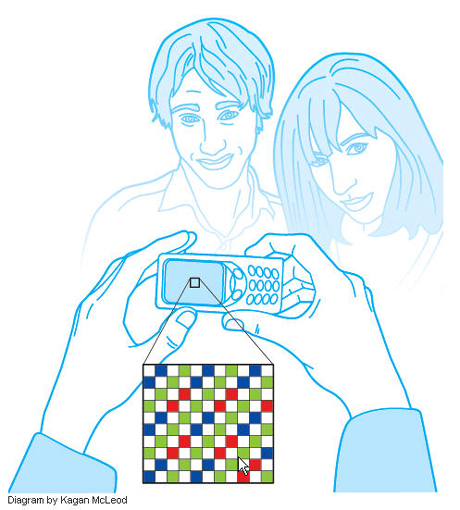

There are also issues with the way cellphone cameras detect the light they receive. The image sensor detects light with an array of red, green, and blue pixels. Each pixel sees only one color and ignores the other two, throwing away roughly two-thirds of the incoming light.

But now, as Alex Hutchinson of Popular Mechanics tells us, there's a new sensor from Kodak that adds a panchromatic, or “clear,” pixel that detects all wavelengths of visible light, making the sensor much more sensitive to the overall light level.

This mix will make the new sensors as much as four times as sensitive in low light as current technology. A sensor inside a cameraphone could then use a particular low-power pixel pattern to reconstruct the image from the incoming light data.
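To see why a clear pixel helps, here is a deliberately idealized back-of-the-envelope model. It assumes each red, green, or blue filter passes exactly one third of white light and a clear pixel passes all of it, and it uses a hypothetical 4x4 layout in which half the pixels are clear (an illustrative layout, not necessarily Kodak's actual pattern). Under those assumptions the average light recorded per pixel doubles; this toy model does not attempt to reproduce the "four times" figure quoted above.

```python
from fractions import Fraction

# Idealized assumption (not from the article): each color filter passes exactly
# one third of white light, and a clear (panchromatic) pixel passes all of it.
TRANSMISSION = {
    "R": Fraction(1, 3),
    "G": Fraction(1, 3),
    "B": Fraction(1, 3),
    "P": Fraction(1, 1),  # "P" = panchromatic / clear pixel
}

# Classic 2x2 Bayer tile: one red, two green, one blue.
BAYER_TILE = ["RG",
              "GB"]

# Hypothetical 4x4 tile in which half the pixels are clear.
# Illustrative only; not necessarily Kodak's actual pattern.
CLEAR_TILE = ["PGPR",
              "GPRP",
              "PBPG",
              "BPGP"]

def average_transmission(tile):
    """Mean fraction of incoming white light recorded per pixel in the tile."""
    pixels = [c for row in tile for c in row]
    return sum(TRANSMISSION[c] for c in pixels) / len(pixels)

bayer = average_transmission(BAYER_TILE)
clear = average_transmission(CLEAR_TILE)
print(f"Bayer: {bayer}, with clear pixels: {clear}, gain: {clear / bayer}x")
# prints "Bayer: 1/3, with clear pixels: 2/3, gain: 2x"
```

Using `Fraction` keeps the arithmetic exact, so the one-third loss per color pixel shows up directly in the output.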

Greater light sensitivity could also allow faster shutter speeds. That would reduce the blur in action shots that so many of us grouse about with today's cellphone cameras.
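The link between sensitivity and shutter speed is simple arithmetic. A minimal sketch, assuming the recorded signal scales linearly with sensitivity and exposure time (aperture and scene lighting held fixed): a sensor four times as sensitive can use a quarter of the exposure time, which is two photographic stops, and a subject moving at a constant speed then smears across a quarter as many pixels. The 1/15 s base exposure and the 600 pixels-per-second subject speed are made-up illustrative numbers, and the helper names are hypothetical.

```python
import math

def faster_shutter(base_exposure_s, sensitivity_gain):
    """Exposure time that records the same signal at a higher sensitivity.

    Idealized assumption: signal scales linearly with both sensitivity and
    exposure time, with aperture and scene light fixed.
    """
    return base_exposure_s / sensitivity_gain

def blur_length_px(speed_px_per_s, exposure_s):
    """Streak length of a subject moving at constant speed across the frame."""
    return speed_px_per_s * exposure_s

base = 1 / 15   # hypothetical low-light cellphone exposure, in seconds
gain = 4        # the "four times" sensitivity figure from the article
fast = faster_shutter(base, gain)

print(f"exposure: 1/{round(1 / fast)} s, stops gained: {math.log2(gain):.0f}")
print(f"blur at 600 px/s: {blur_length_px(600, base):.0f} px -> "
      f"{blur_length_px(600, fast):.0f} px")
# prints "exposure: 1/60 s, stops gained: 2"
# prints "blur at 600 px/s: 40 px -> 10 px"
```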

The first prototype devices carrying these designs are expected early next year.

But hey, it's almost next year now!