'Skinput' turns the body into an input surface

It seems as if just about anything can now be turned into an interactive display. As fellow blogger Mary-Jo Foley writes, Mobile Surface from Microsoft Research can transform any surface, such as a coffee table, into a gestural finger input canvas with a mobile device and a camera-projector system.

That's exciting, but there may be times when tables or flat surfaces are not present. Your skin, however, is always with you, so why not turn yourself into a touchscreen?

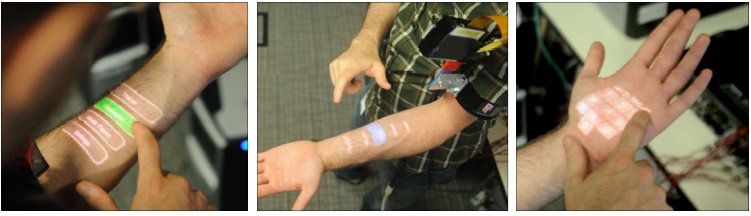

Enter Skinput. Chris Harrison of Carnegie Mellon University in Pittsburgh, Pennsylvania, together with Dan Morris and Desney Tan of Microsoft Research, created a system that lets people use their own hands and arms as touchscreens. It works by detecting the distinct ultralow-frequency sounds produced when different parts of the skin are tapped. Skinput uses microchip-sized "pico" projectors to render interactive elements on the user's forearm and hand.

An armband houses the projector along with an array of sensors that collect the acoustic signals generated by skin taps. The system then calculates which part of the display you meant to activate.

The result is an always-available, naturally portable body interface that is fairly robust. According to the researchers, the acoustic detector can distinguish five skin locations below the elbow with 95.5% accuracy, which makes it versatile enough for many mobile applications.
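To make the idea concrete, here is a toy sketch of the general pattern: sensor readings from a tap go in, and a skin location comes out. It also shows how an accuracy figure is computed, as the fraction of test taps assigned to the right location. This is not the classifier from the Skinput paper. It assumes, purely for illustration, that each tap is reduced to one amplitude value per armband sensor, and it uses a simple nearest-centroid rule in place of the researchers' own machine-learning method. The function names and all data values are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical feature: one amplitude reading per armband sensor for a single tap.
# The real system extracted richer acoustic features; this is a simplification.
Feature = Sequence[float]


def train_centroids(samples: Dict[str, List[Feature]]) -> Dict[str, List[float]]:
    """Average the calibration taps recorded at each labelled skin location."""
    centroids: Dict[str, List[float]] = {}
    for location, taps in samples.items():
        dims = len(taps[0])
        centroids[location] = [sum(t[i] for t in taps) / len(taps) for i in range(dims)]
    return centroids


def classify(tap: Feature, centroids: Dict[str, List[float]]) -> str:
    """Return the location whose centroid is closest (Euclidean distance) to the tap."""
    return min(centroids, key=lambda loc: math.dist(tap, centroids[loc]))


def accuracy(test: Dict[str, List[Feature]], centroids: Dict[str, List[float]]) -> float:
    """Fraction of labelled test taps that classify() assigns to the correct location."""
    total = correct = 0
    for location, taps in test.items():
        for tap in taps:
            total += 1
            correct += classify(tap, centroids) == location
    return correct / total


if __name__ == "__main__":
    # Made-up calibration data: 3 sensors, 2 skin locations.
    training = {
        "wrist": [[0.9, 0.4, 0.1], [0.8, 0.5, 0.2]],
        "forearm": [[0.2, 0.6, 0.9], [0.3, 0.5, 0.8]],
    }
    centroids = train_centroids(training)
    print(classify([0.85, 0.45, 0.15], centroids))  # wrist
    print(accuracy({"wrist": [[0.9, 0.4, 0.1]], "forearm": [[0.3, 0.5, 0.8]]}, centroids))  # 1.0
```

A nearest-centroid rule was chosen here only because it fits in a few lines; a real wearable system would need far more sensors, features, and calibration data to reach the accuracy the researchers report.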

The researchers extol the concept in a recent paper (PDF):

> Appropriating the human body as an input device is appealing not only because we have roughly two square meters of external surface area, but also because much of it is easily accessible by our hands (e.g., arms, upper legs, torso). Furthermore, proprioception – our sense of how our body is configured in three-dimensional space – allows us to accurately interact with our bodies in an eyes-free manner. For example, we can readily flick each of our fingers, touch the tip of our nose, and clap our hands together without visual assistance. Few external input devices can claim this accurate, eyes-free input characteristic and provide such a large interaction area.