Days later, Facebook stops censoring member

Facebook has stopped censoring at least one of its members after she contacted me and I published two articles about the issue. Unfortunately, Facebook took days to fix the problem and get back to me, which is a very rare occurrence for the company.

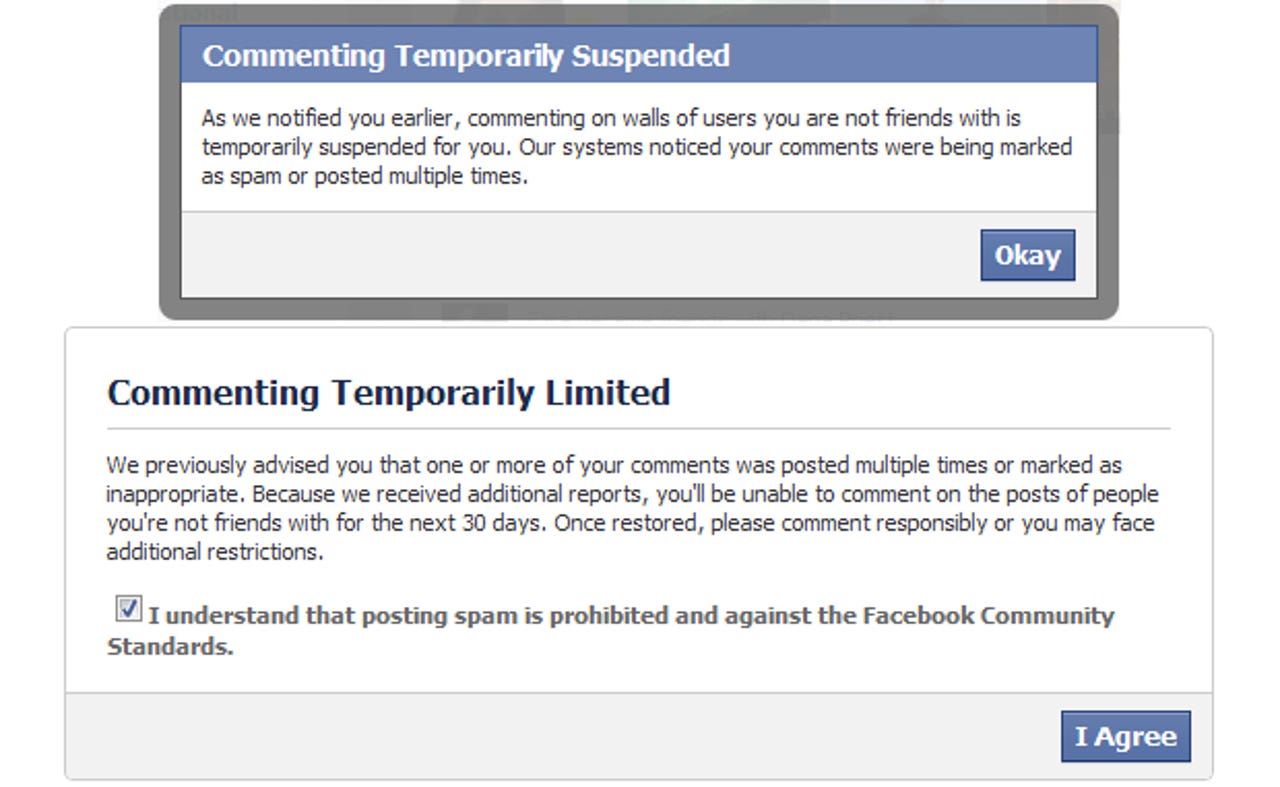

On Saturday, I wrote an article titled "Facebook censors members after unjustly labeling them spammers" in which I described how Facebook user Rima Regas was being censored by the social network. I shot off the usual e-mail to Facebook PR, and added a CC for Facebook public policy manager Fred Wolens.

I usually get a very quick response when I ask for a statement on sensitive issues. Last time was just the weekend before, and Facebook got back to me within the hour. Then again, that story was about technical evangelist Robert Scoble, so the response was very quick. This time, Facebook did not get back to me on Saturday, nor on Sunday.

On Monday, the issue got worse and I wrote an article titled "Facebook extends censorship ban to a month." I once again asked for a statement. Facebook did not get back to me on Monday. On Tuesday, I got an e-mail from Wolens telling me my messages had landed in his spam folder. That's fine, but what about the rest of the PR team that got my e-mail?

I did not get anything from Facebook on Wednesday.

On Thursday (today), I asked Wolens why I still hadn't received a response. Minutes after Facebook announced it was going public on Friday, Wolens called me.

He told me that the company had improved its spam detection algorithms. He said that in this case, the actual comment was not spammy, but because Regas was posting very quickly and frequently, she was marked as a spammer. He talked about how Facebook's systems are a constant work in progress.

I asked for a statement, again. Third time's the charm:

"To protect the millions of people who connect and share on Facebook every day, we have automated systems that work in the background to maintain a trusted environment and protect our users from bad actors who often use links to spread spam and malware. These systems are so effective that most people who use Facebook will never encounter spam. They're not perfect, though, and in rare instances they make mistakes. This user was blocked from commenting due to deprecated spam classifier, and we have already made the necessary adjustments to our system to make sure we don't repeat the same mistake in the future."

I contacted Regas to let her know she's been unblocked. Here's her response: "Yay! I'm out of jail! I was just able to post to Jonathan Capehart and Chris Cillizza's pages."

As I outlined in my previous articles, I shouldn't have to do this. Why does there have to be a middleman for issues like this one, and the countless others I've covered?

I get that no spam system is perfect, and that's okay. What's not okay is Facebook users having no way to fight back when the algorithms fail.

Facebook needs due process. Facebook needs a proper communication channel between its staff and users. Facebook needs to do more.
