Apple's macroscalar architecture: what it is & what it means

What in heck is Apple's macroscalar processor? And why should we care?

Apple got a new patent on something called a macroscalar processor this month. You may have seen a write-up on it that quoted the application and stopped there, because no one knows what it is or why you would want one. But I know.

Macroscalar

Macroscalar is NOT a term of art in computer science - although a quick Bing search will find that it is occasionally used in other disciplines by academics eager not to be understood. Let's break it down.

Macro means - in a word of 1 syllable - big. Macroeconomics explains the big gears of the economy. Macro lenses make little things big by getting close to them. Office macros make big, complex actions into 1 command.

Scalar means a single value, as opposed to a vector. A vector processor takes a vector of values, say

10 14 666 22 84 53 45 25 14 47 91

and with 1 instruction performs the same operation on all of them, such as multiplying by a single scalar value or another vector.
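To make the idea concrete, here is a minimal Python sketch of the two operations just described. The function names `scale` and `multiply` are mine, not from the patent, and Python still walks the list one element at a time. The point is only to show what a vector processor does in a single instruction.

```python
def scale(vector, scalar):
    """Multiply every element of the vector by one scalar value."""
    return [x * scalar for x in vector]


def multiply(a, b):
    """Element-wise product of two vectors of the same length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x * y for x, y in zip(a, b)]


# The vector from the text above.
values = [10, 14, 666, 22, 84, 53, 45, 25, 14, 47, 91]

doubled = scale(values, 2)          # vector times scalar
squared = multiply(values, values)  # vector times vector
```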

When you have masses of data to massage - such as in imaging - vector processors speed things up. Which is why Intel and the GPU makers built the idea into their own chips, and why the companies that built vector processors for a living got killed.

Scalar, OTOH, means a single value. So what the heck is a BIG single value processor?

Parallel processing loops

As the Background of the Invention section in the patent explains, rising clock frequencies aren't translating into higher performance because most consumer software isn't optimized to use longer pipelines.

"While consumers purchase newer and faster processors with deeper pipelines, the vast majority of software available is still targeted for processors with shorter pipelines. As a result of this the consumer may not realize the full processing potential of a new processor for one to two years after its release, and only after making additional investments to obtain updated software. . . . (I)t is questionable how efficiently deeper pipelines will actually be utilized."

This is important because as CPUs hit Moore's Wall, more parallelism is the chief way to get more performance - and deeper pipelines are a chief way to achieve instruction-level parallelism.

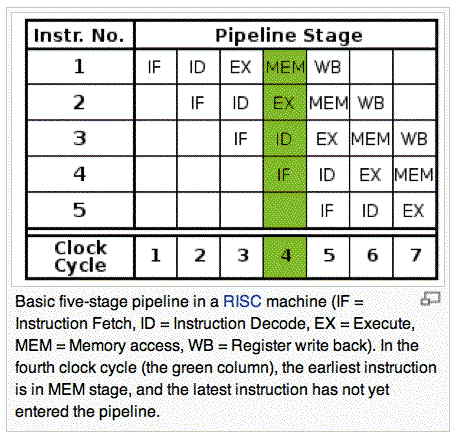

What is a pipeline?

Skip this if you already know. As defined in Wikipedia, an instruction pipeline is "a technique used in the design of computers and other digital electronic devices to increase their instruction throughput (the number of instructions that can be executed in a unit of time)."
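A back-of-the-envelope sketch shows where the throughput comes from. It assumes an idealized pipeline: every stage takes 1 cycle, a new instruction enters every cycle, and nothing ever stalls. Real pipelines are messier, and the function names are only for illustration.

```python
def cycles_unpipelined(instructions, stages):
    """Each instruction passes through every stage before the next one starts."""
    return instructions * stages


def cycles_pipelined(instructions, stages):
    """Ideal pipeline: once it is full, one instruction finishes per cycle."""
    if instructions == 0:
        return 0
    # The first instruction needs `stages` cycles; each later one adds 1 cycle.
    return stages + instructions - 1


# 100 instructions on a 5-stage design.
slow = cycles_unpipelined(100, 5)  # 500 cycles
fast = cycles_pipelined(100, 5)    # 104 cycles
```

The catch is the "nothing ever stalls" assumption: every time the pipeline has to be refilled, the cost of filling it is paid again, and that cost grows with the depth of the pipeline.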

Since a good portion of a typical program's time is spent in loops, optimizing loops for parallel execution is a popular form of speedup. Loop unrolling and auto-vectorization are 2 common techniques, but they don't work with data-dependent loops, whose length depends on their results rather than on a fixed number of iterations.
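Here is a small sketch of the difference, written in Python for readability (a compiler does this at the machine-instruction level). The first two functions show a fixed-length loop and a hand-unrolled version of it. The third is a data-dependent loop; I picked the Collatz sequence as the example, it is not from the patent.

```python
def sum_simple(data):
    """Fixed-length loop: the trip count is len(data), known before it starts."""
    total = 0
    for x in data:
        total += x
    return total


def sum_unrolled(data):
    """The same sum unrolled 4x, with 4 independent running totals."""
    t0 = t1 = t2 = t3 = 0
    n = len(data) - len(data) % 4  # largest multiple of 4 that fits
    for i in range(0, n, 4):
        t0 += data[i]
        t1 += data[i + 1]
        t2 += data[i + 2]
        t3 += data[i + 3]
    for x in data[n:]:  # leftover elements
        t0 += x
    return t0 + t1 + t2 + t3


def collatz_steps(n):
    """Data-dependent loop: each pass decides whether there is a next pass."""
    steps = 0
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


values = [10, 14, 666, 22, 84, 53, 45, 25, 14, 47, 91]
```

Unrolling works on the sum because the 4 additions in each pass don't depend on each other. In `collatz_steps` there is nothing to unroll ahead of time: you can't know how many passes there will be without running them.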

The invention

The macroscalar processor addresses this problem in a new way: at compile time it generates contingent secondary instructions, so that when a data-dependent loop completes, the next set of instructions is ready to execute. In effect, it loads another pipeline for, say, completing a loop, so the pipeline remains full whether the loop continues or completes. It can also load a set of sequential instructions that run within or between loops, speeding execution as well.

The macro piece is the large number of program registers required so that all or most possible instruction paths are already loaded into CPU registers for fast execution. Instead of maintaining 1 pipeline, the architecture effectively maintains parallel pipelines, loading them and then switching between them to maximize loop performance.
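To see why a full pipeline matters, here is a toy cycle count. It is my own simplification, not the mechanism in the patent. The assumptions: 1 instruction issues per cycle, a conventional design loses `depth - 1` cycles refilling the pipeline at every loop exit, and a design with the exit path already loaded loses none. The function name and parameters are hypothetical.

```python
def total_cycles(trip_counts, body_len, depth, preloaded):
    """Toy cycle count for a series of data-dependent loops.

    trip_counts: passes each loop actually makes (known only at run time)
    body_len:    instructions in each loop body
    depth:       pipeline depth in stages
    preloaded:   True if the exit path is already loaded (assumed zero penalty)
    """
    instructions = sum(trips * body_len for trips in trip_counts)
    fill = depth - 1  # cycles to fill the pipeline once at the start
    refill = 0 if preloaded else (depth - 1) * len(trip_counts)
    return instructions + fill + refill


# 3 loops of 8, 111 and 3 passes, 4 instructions each, 20-stage pipeline.
baseline = total_cycles([8, 111, 3], 4, 20, preloaded=False)  # 564 cycles
macro = total_cycles([8, 111, 3], 4, 20, preloaded=True)      # 507 cycles
```

In this model the savings grow with pipeline depth and with the number of loop exits, which is the patent's complaint about deeper pipelines turned around. Real numbers would depend on branch prediction and everything else the model ignores.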

Much of the patent is focused on compiler coding needed to take advantage of the macroscalar architecture. Essentially the compiler needs to analyze the work flow and understand where run-time decisions will be made so the processor can know when it needs an alternate pipeline.

The Storage Bits take

Apple is, among other things, a fabless semiconductor company. They design, but do not build, their own processors, such as the dual-core A5 in the iPad 2.

The macroscalar architecture, well implemented, offers higher performance for a given clock-speed and lower energy consumption. Both are valuable in mobile devices.

Since Apple provides its own compilers and designs its own CPUs, it is uniquely positioned to offer a complete macroscalar solution to its large band of iOS developers, further widening the price/performance gap between it and the iPad wannabes.

Is it a breakthrough? It could be if the efficiencies it promises can be realized in practice. We'll have to see just how good Apple's compiler engineers are.

Comments welcome, of course. Macroscalar is another way to throw transistors at the problem of speeding up computers. I like it.