Intel-backed startup Paperspace reinvents dev tools for an AI, cloud era

The world of integrated development environments, or "IDEs," the rich programming tools that bring many capabilities together in one place, hasn't seen much action in the years since artificial intelligence and cloud computing swept through programming.

One intriguing startup based in Brooklyn, New York, could change that.

Paperspace, founded three years ago, announced Tuesday that it has received $13 million in venture funding from Battery Ventures, SineWave Ventures, and Sorenson Ventures, as well as the investment arm of chip giant Intel, bringing its total raised to date to $19 million. Existing investor Initialized Capital also joined the round.

The money will go toward refining and extending Paperspace's main product, "Gradient," a mash-up of tools designed especially for programming machine learning neural networks. Intel's involvement is especially interesting, as Intel has spent the last couple of years beefing up its AI projects in an effort to rejuvenate its processor lineup.


Co-founder and chief executive Dillon Erb spoke with ZDNet about the still-primitive state of today's programming environments for machine learning development.

The problem Paperspace is addressing, he says, is akin to the early days of Web development. "It's not hard to find compute today," says Erb, referring to the ready availability of GPU farms on Amazon and of Google's TPU installations on Google Cloud.

"The missing part is software, just like in the early days of Web development. This is a serious problem that a lot of people are facing; we have seen first-hand the challenges people have to even develop a single [AI] model."

A data scientist can write 15 lines of code in Facebook's PyTorch machine learning framework and "have access to cutting-edge research," he points out. But then, "for all the other code necessary to put it together, you end up home-rolling it yourself, digging up a bunch of open-source tools and hacking it together."

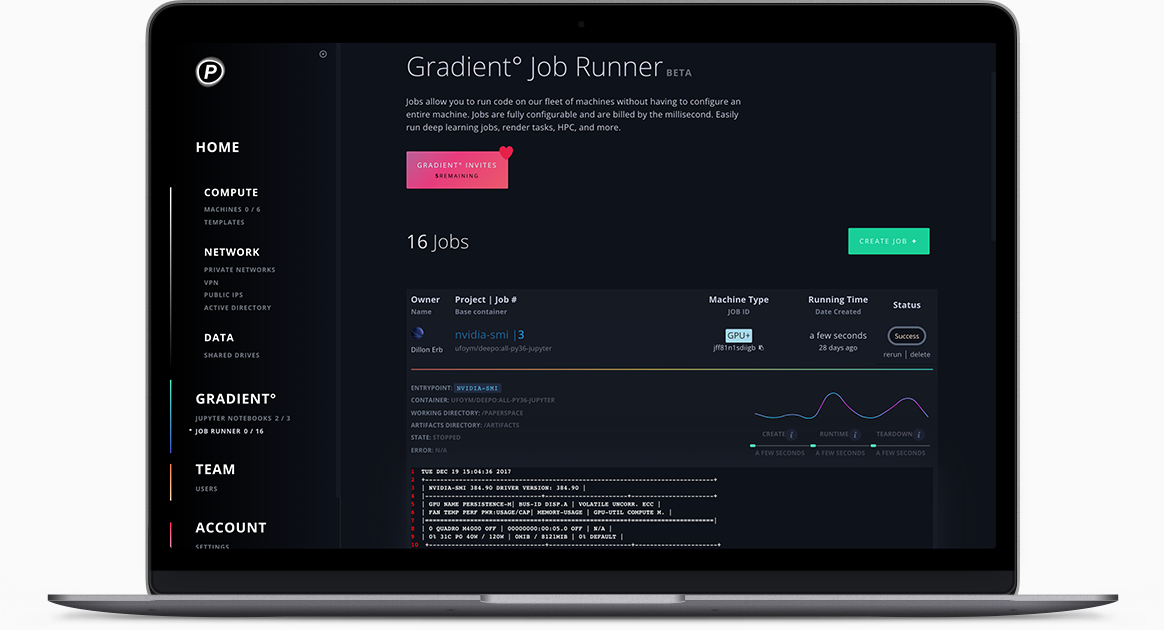

Gradient combines the popular Jupyter Notebook development interface with PyTorch, TensorFlow, or other frameworks, running in Docker containers in the cloud. A Paperspace Python module is downloaded locally to provide the Paperspace command line, and a few hooks are embedded in one's target PyTorch or other file. From there, it's trivial to kick off the Run command, which fetches a PyTorch project from GitHub and deploys it to however many containers are needed on bare-metal GPUs managed by Paperspace. (Earlier this year, the company announced support for Google's second-generation TPU.) Paperspace takes care of details such as how to resolve I/O bottlenecks, says Erb, or how to structure storage for apps.


Paperspace's clients tend to be teams of 5 to 15 people, increasingly made up of AI researchers. They pay a per-user, per-month fee, with successive tiers offering larger allowances for GPU jobs. An enterprise version, with variable pricing, includes such things as unlimited GPU workloads.

Paperspace wants its cloud-based programming environment to be the gold standard for easily publishing machine learning models on whatever collection of GPUs, TPUs, or other processors a team wants.

The point of all this is not to sell GPU computing; that's a commodity. While Paperspace built its own data centers for some of the work, it also relies on Google Cloud instances. Wholesaling GPUs, or TPUs, is just the ticket of entry into the game. The real magic, insists Erb, is the "stack" of code that makes it easy to tie Jupyter to machine learning projects on GitHub and then to the underlying compute.

Paperspace got its start at a time when no one offered GPUs on a hosted basis. Erb and his colleagues, a team that now numbers a lean 16 people, had come from the world of computer-aided design, or CAD, and they realized the value of access to GPU farms. (The name Paperspace comes from the CAD world, where it refers to the "window into a 3-D world," the company says. As the name of the startup, it's meant to serve as "a metaphor for the notion of a portal into the limitless power of the cloud.")

Paperspace received seed funding from the incubator Y Combinator for that original mission of building GPU clouds. But then Erb and his colleagues noticed something interesting. "We were getting all these requests for anything that particularly focused on TensorFlow and PyTorch," he reflects. "We realized as we dug into it that this was a greenfield opportunity."


Paperspace has collaborated for some time with Fast.ai, the non-profit organization run by Jeremy Howard and Rachel Thomas that teaches courses on machine learning, and which was recently profiled in this space. Erb noticed students in Howard's courses struggling to bring together even the most basic software tools. "Even just getting Jupyter up and running, and making sure you are not getting charged hundreds of dollars for GPU instances, is a challenge," he observes. "The problem is not access to hardware, that's plentiful; the problem is the software to tie it all together."

Erb hopes the Paperspace stack will become an industry standard for the "layer of abstraction" that sits above whatever GPU, TPU, or other compute a client uses. He points to a broader trend in machine learning toward abstracting away server infrastructure. One "cool thing" he's been watching is the emergence of ONNX, the industry effort to define a common format for exchanging models between different machine learning frameworks. It's a time of tremendous ferment in the industry, and "the right level of abstraction for these things is undefined right now," he says, referring to how responsibilities are divided among GPUs, containers, machine learning frameworks, and software tools.

An intriguing question is what Intel gets out of this deal. The company has yet to get much of its AI silicon into the wild, so far ceding the field to Nvidia's GPUs and Google's TPUs. Erb declined to comment on the prospects for Intel's chips, but he says he's "very excited" about ongoing work between Paperspace and Intel.

"Intel is making really big investments" in AI, he says. "In our conversation with the AI group there, they are all working on the big problem; it's not just hardware and software, it's bigger than either."
