Stanford's VR breakthrough could spell the end of clunky headsets - thanks to AI

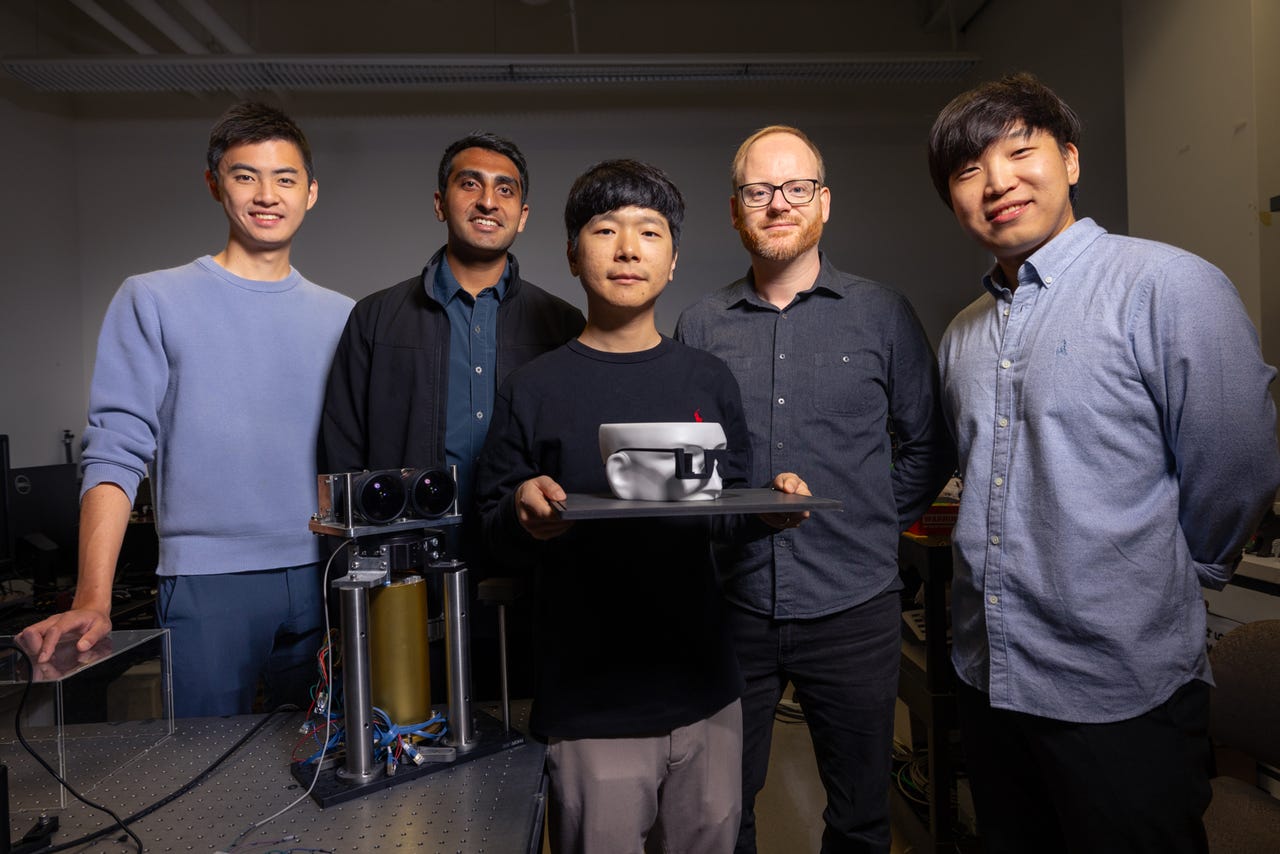

The Stanford research team, from left to right: Brian Chao, Manu Gopakumar, Gun-Yeal Lee, Gordon Wetzstein, Suyeon Choi (Photo by Andrew Brodhead).

One of the biggest criticisms of AR and VR, and especially Apple's vision of what it calls "spatial computing," is the bulk of the eyewear. There's no doubt we've reached the point where some XR devices and experiences are amazing, but there's a fairly high wall of annoyance to climb to make use of them.

Devices are heavy, ugly, and uncomfortable. And while the four-year-old Quest 2 is available for $200, prices climb steeply from there, with the $3,500 Apple Vision Pro causing wallets to implode.

Although we've long seen the promise of VR, and we all expect the technology to get better, we've mostly had to trust in the historical pace of technological advancement to provide us with assurance of a more practical future. But now, we're starting to see real science happening that showcases how this all might be possible.

A team of researchers at Stanford University, led by engineering associate professor Gordon Wetzstein, has built a prototype of lightweight glasses that can show digital images in front of your eyes, blending them seamlessly with the real world. His team specializes in computational imaging and display technologies, and has been working on integrating digital information into our visual perception of the real world.

"Our headset appears to the outside world just like an everyday pair of glasses, but what the wearer sees through the lenses is an enriched world overlaid with vibrant, full-color 3D computed imagery," says Wetzstein. "Holographic displays have long been considered the ultimate 3D technique, but it's never quite achieved that big commercial breakthrough…Maybe now they have the killer app they've been waiting for all these years."

So what is Wetzstein's team doing that's different from the work at Apple and Meta?

Prototype holographic glasses

The Stanford team is focusing on fundamental technologies and scientific advancements in holographic augmented reality and computational imaging. They're researching new ways to generate more natural and immersive visual experiences using sophisticated techniques like metasurface waveguides and AI-driven holography.

Metasurface waveguides?

Let's deconstruct both words. A metasurface is an engineered material that consists of tiny, precisely arranged structures on a surface. These structures are smaller than the wavelengths of the light they're interacting with.

The idea is that these tiny nanostructures manipulate light in strategic ways, altering its phase, amplitude, and polarization as it traverses the material. A waveguide, for its part, is a structure that channels light along a controlled path. Combining the two allows engineers to exert very detailed control over light.
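To make "altering phase and amplitude" a little more concrete, here is a minimal Python sketch of how optics people usually model it: a light wave is a complex number, and an optical element multiplies it by an amplitude factor and a phase delay. The function names (`apply_element`, `intensity`) are hypothetical, and this is a textbook idealization, not the Stanford team's model.

```python
import cmath
import math

def apply_element(field: complex, amplitude: float, phase: float) -> complex:
    """Scale a light wave's amplitude and delay its phase (in radians)."""
    return field * amplitude * cmath.exp(1j * phase)

def intensity(field: complex) -> float:
    """Detected brightness is the squared magnitude of the complex field."""
    return abs(field) ** 2

incoming = 1 + 0j  # unit-amplitude wave with zero phase (assumed for illustration)

# Two paths that leave with the same phase add up constructively.
bright = intensity(
    apply_element(incoming, 1.0, 0.0) + apply_element(incoming, 1.0, 0.0)
)

# Delaying one path by half a wave (pi radians) makes the two paths cancel.
dark = intensity(
    apply_element(incoming, 1.0, 0.0) + apply_element(incoming, 1.0, math.pi)
)

print(f"in phase: {bright:.3f}, half-wave delay: {dark:.3f}")
```

The takeaway is that a phase change alone, with no change in how much light there is, can turn a bright spot into a dark one. That is the lever a phase-shaping surface pulls.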

What we've been seeing with both the Quest 3 and Vision Pro is the use of traditional computer displays, but scaled down to fit in front of our eyes. The display technology is impressive, but it's still an evolution of screen output.

Stanford's approach throws that out so that the computer doesn't directly drive a screen. Instead, it controls light paths using the waveguides. Radically oversimplified, it's using these three approaches:

Spatial light modulation: A computer CPU or GPU controls spatial light modulators (SLMs), tiny devices that adjust the intensity, phase, or direction of light on a pixel-by-pixel basis before it enters the waveguides. In effect, they steer and shape the light itself at very small scales.

Complex light patterns: A VR device calculates and generates complex light patterns, which allows the headset to dictate the specific ways light interacts with the metasurface. This, in turn, modifies the eventual image seen by a user.

Real-time adjustments: Computers then make real-time adjustments to those light patterns, based on user interaction and environmental change. The idea is to make sure the content shown stays stable and accurate across varying conditions and lighting.
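As a rough illustration of what "calculating a light pattern" for a phase-only SLM can look like, here is a one-dimensional sketch of the classic Gerchberg-Saxton algorithm in plain Python. To be clear, this is a decades-old textbook method that I'm swapping in for illustration, not the Stanford team's approach. The sketch assumes a 16-pixel SLM and uses a simple Fourier transform as a stand-in for how light travels from the SLM to the viewer. All function names are hypothetical.

```python
import cmath
import math
import random

def dft(values, inverse=False):
    """Plain discrete Fourier transform; stands in for propagation to the far field."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(
            v * cmath.exp(sign * 2j * math.pi * idx * k / n)
            for idx, v in enumerate(values)
        )
        out.append(total / n if inverse else total)
    return out

def gerchberg_saxton(target_amplitude, iterations=50, seed=0):
    """Return one phase value per SLM pixel that approximates the target pattern."""
    rng = random.Random(seed)
    phase = [rng.uniform(0.0, 2.0 * math.pi) for _ in target_amplitude]
    for _ in range(iterations):
        # The SLM can only change phase, so every pixel has amplitude 1.
        slm_field = [cmath.exp(1j * p) for p in phase]
        far_field = dft(slm_field)
        # Keep the far-field phase, but force the amplitude we actually want.
        constrained = [
            a * cmath.exp(1j * cmath.phase(f))
            for a, f in zip(target_amplitude, far_field)
        ]
        # Go back to the SLM plane and keep only the phase for the next round.
        phase = [cmath.phase(b) for b in dft(constrained, inverse=True)]
    return phase

def far_field_intensity(phase):
    """Brightness at each far-field position for a given SLM phase pattern."""
    return [abs(f) ** 2 for f in dft([cmath.exp(1j * p) for p in phase])]

# Ask for two bright spots (positions 3 and 10) and darkness everywhere else.
target = [0.0] * 16
target[3] = target[10] = 1.0
pattern = far_field_intensity(gerchberg_saxton(target))
fraction_on_target = (pattern[3] + pattern[10]) / sum(pattern)
print(f"share of light landing on the two target spots: {fraction_on_target:.2f}")
```

A phase-only device can't hit a two-spot target perfectly, so some light always leaks elsewhere. Closing that kind of gap, in two dimensions and in full color, is part of what makes the real problem so computationally demanding.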

You can see why AI is critical in this application

Doing all this industrial light magic doesn't come easily. AI needs to do a lot of the heavy lifting. Here's some of what the AI has to do to make this possible:

Improve image formation: AI algorithms use a combination of physically accurate modeling and learned component attributes to predict and correct how light traverses the holographic environment.

Optimization of wavefront manipulation: The AIs have to adjust the phase and amplitude of light at various stages to generate a desired visual outcome. They do this through precise manipulation of the wavefronts in the XR environment.

Handling complex calculations: This, of course, requires a lot of math. It's necessary to model the behavior of light within the metasurface waveguide, dealing with the diffraction, interference, and dispersion of light.
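To give a flavor of just one of those effects, here is a small Python sketch of dispersion using the standard diffraction grating equation (at normal incidence, sin θ = mλ/d). The same structure bends red, green, and blue light by different amounts, so a full-color image has to be corrected per color. The wavelengths and the 1,000 nm grating period are assumed values chosen for illustration, and an ordinary grating is a far simpler object than the metasurface in the Stanford prototype.

```python
import math

def first_order_angle_deg(wavelength_nm: float, period_nm: float) -> float:
    """First-order diffraction angle at normal incidence: sin(theta) = wavelength / period."""
    ratio = wavelength_nm / period_nm
    if ratio > 1.0:
        raise ValueError("no propagating first order: wavelength exceeds grating period")
    return math.degrees(math.asin(ratio))

# Assumed, illustrative values (not taken from the Stanford prototype).
COLORS_NM = {"blue": 450.0, "green": 532.0, "red": 638.0}
PERIOD_NM = 1000.0

angles = {name: first_order_angle_deg(wl, PERIOD_NM) for name, wl in COLORS_NM.items()}
for name, angle in angles.items():
    print(f"{name:>5}: bent by {angle:.1f} degrees")
```

Red light is bent more than green, and green more than blue. Left uncorrected, that would smear a white pixel into a tiny rainbow.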

While some of these challenges might be solvable using traditional top-down computing, most of the process demands more than traditional approaches can deliver. AI has to step up in the following ways:

Complex pattern recognition and adaptation: A hallmark of AI capability, especially in terms of machine learning, is the ability to recognize complex patterns and adapt to new data without explicitly requiring new programming. With holographic AR, this ability allows the AI to deal with the thousands of variables involved in light propagation (phase shifts, interference patterns, diffraction effects, and more), and then correct for changes dynamically.

Real-time processing and optimization: That dynamic correction needs to be done in real time, and when we're talking about light coming into the eye, the need is for truly instant response. Even the slightest delay can cause issues for the wearer, ranging from slight discomfort to violent nausea. But with the AI's ability to process enormous amounts of data as it flows, and then make instantaneous adjustments, human-compatible light processing for AR vision is possible.

Machine learning from feedback: Machine learning enables the XR systems to improve dynamically over time, processing camera feedback and continuously refining the holographic images projected, reducing errors and enhancing image quality.

Handling non-linear and high-dimensional data: The math involved in how light interacts with complex surfaces, especially the metasurfaces used in holography, often requires calculations based on data that is wildly non-linear and contains vast arrays of data points. AIs are built to manage this data by leveraging machine learning's ability to deal with complex data sets and perform real-time processing.

Integration of diverse data types: The data available to produce the images required in holographic AR is not limited to just giant sets of X/Y coordinates. AIs are able to process optical data, spatial data, and environmental information, and use all of it to create composite images.
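The "learning from camera feedback" idea can be shown with a deliberately tiny toy in Python. Here, a simulated camera measures the brightness of two interfering beams, the hardware has a hidden phase error, and gradient descent adjusts a one-parameter model until its predictions match the camera. Everything in this sketch is an assumption made for illustration: the 0.4-radian error, the two-beam setup, and all the function names. A real system would learn vastly more parameters.

```python
import math

TRUE_PHASE_ERROR = 0.4  # radians; hidden hardware flaw the system must discover (assumed)

def camera_measure(commanded_phase: float) -> float:
    """Stand-in for a camera reading: two-beam interference with a hidden phase error."""
    return 2.0 + 2.0 * math.cos(commanded_phase + TRUE_PHASE_ERROR)

def model_predict(commanded_phase: float, estimated_error: float) -> float:
    """What the software expects the camera to see, given its current error estimate."""
    return 2.0 + 2.0 * math.cos(commanded_phase + estimated_error)

def calibrate(steps: int = 200, learning_rate: float = 0.1, samples: int = 8) -> float:
    """Fit the phase-error estimate by gradient descent on the mean squared mismatch."""
    phases = [2.0 * math.pi * i / samples for i in range(samples)]
    readings = [camera_measure(p) for p in phases]
    estimate = 0.0  # start by assuming perfect hardware
    for _ in range(steps):
        gradient = 0.0
        for p, reading in zip(phases, readings):
            residual = model_predict(p, estimate) - reading
            # Derivative of the squared residual with respect to the estimate.
            gradient += 2.0 * residual * (-2.0 * math.sin(p + estimate))
        estimate -= learning_rate * gradient / samples
    return estimate

learned = calibrate()
print(f"learned phase error: {learned:.4f} rad (hidden value: {TRUE_PHASE_ERROR})")
```

Once the error is learned, the software can subtract it from every phase it commands, so the picture the wearer sees matches the picture the computer intended.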

What does it all mean?

Without a doubt, the single biggest factor holding back the popularity of XR and spatial computing devices is the bulk of the headset. If functionality like that found in Quest 3 or the Vision Pro were available in a pair of traditional glasses, the potential would be huge.

There is a limit to how small glasses can become when embedding traditional screens. But by changing the optical properties of the glass itself, scientists would be building on the most accepted augmented reality device in history: our glasses.

Unfortunately, what the Stanford team has now is a prototype. The technology needs to be developed much more to move from research, into fundamental science, into the engineering lab, and then on to productization. While the Stanford team didn't predict how long that would take, it would be a fair bet to assume this technology is at least five to ten years out.

But don't let that discourage you. It's been about 17 years since the first iPhone was released and even in the device's first three or four years, we saw tremendous improvement. I expect we'll see similar improvements in the next few years for the current crop of spatial computing and XR devices.

Of course, the future is out there. What will this be like in 17 years? Perhaps the Stanford team has given us our first glimpse.

You can follow my day-to-day project updates on social media. Be sure to subscribe to my weekly update newsletter, and follow me on Twitter/X at @DavidGewirtz, on Facebook at Facebook.com/DavidGewirtz, on Instagram at Instagram.com/DavidGewirtz, and on YouTube at YouTube.com/DavidGewirtzTV.