Amid questionable benchmarking choices, AMD 'claims' speed title with new Opteron 2222SE

In a release of benchmarks that could fuel the fires of benchmark controversy (which are already blazing hot), AMD today is announcing the official release of its Opteron 2222SE processor. It's the 3 GHz version of the dual-core Opteron (a 90 nm part of the Opteron family once code-named Santa Rosa) designed to work in dual-processor (otherwise known as "2-way" or "2P") systems. The first digit in Opteron model numbers (in this case, a "2") indicates the maximum number of processors that a system housing that Opteron can have. In the case of the 2222SE, that number is two. Technically there is a "1P" version, but AMD doesn't target the extremely hot 2P server market with that processor. Also in the 3.0 GHz offing for AMD will be an 8222SE: a 3.0 GHz part designed to go into 4-way or 8-way systems. With two cores per processor, an 8-way system based on the 8222SE would therefore have a total of 16 cores across 8 processors.

Listen to a podcast interview: With AMD's rollout of the Opteron 2222SE, David interviewed the company's worldwide Opteron market development manager John Fruehe. To stream or download the interview (or subscribe to our podcasts), use our audio player at the top of this blog entry.

Benchmark Charts: Want images? Have we got images. In addition to providing you with a podcast interview with John Fruehe, AMD's worldwide market development manager for Opteron, we also have the benchmark charts that AMD is releasing today. Click here to see them.

I'm going to be straight. I think that we've been too quiet and I think that part of that is that we're trained to be very honest, grounded in reality, [and] truthful with our benchmarks. I'm sick and tired of being pushed around by a competitor that doesn't respect the rules of fair and open competition.

Indeed, upon closer examination, it's hard to argue with Richard's sentiments. The slides (seen here in a ZDNet image gallery) used by Intel during two presentations earlier this year -- one primarily for Wall Street analysts, the other for the press -- very deceptively painted Intel's processors in a far more positive light than they deserved (see my analysis for a more thorough explanation). AMD isn't completely innocent. Among the several unfair benchmarking practices it accused Intel of using was one that it subsequently used itself during a recent presentation in China: the use of retired benchmarks that don't adequately subject systems and processors to modern-day loads.

Although AMD officials believe that Intel's public usage of benchmarking data has been unfairly painting the 2220SE in a far more negative comparative light (to Intel's corresponding 2-core, 2P-targeted 5160) than has been deserved, they also concede that even a fair presentation might only show a marginal lead for the 2220SE on some benchmarks, a near tie on others, and certainly not a unanimous victory across the board for all benchmarks. In other words, the 2220SE was not a slam dunk. But with today's release of the 3.0 GHz 2222SE, AMD is claiming a clean sweep, at least with the three benchmark charts that it is going public with today. To hear or download my interview with John Fruehe, AMD's worldwide market development manager for Opteron, you can use ZDNet's audio center at the top of this blog post (you can also use it to subscribe, podcast-style, to any of our audio-based editorial).

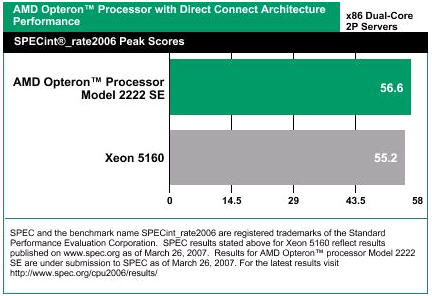

The first of the benchmarks (shown above) compares a two-way 2222SE-based system to an Intel Xeon 5160-based two-way system using SPEC's hot-off-the-presses SPECint_rate2006 benchmark.

As can be seen from the above benchmark chart, the 2222SE is shown to have a narrow lead (56.6 vs. 55.2) over the Xeon 5160. The mere fact that AMD is publishing a benchmark chart showing such a narrow lead is evidence of how hotly contested the processor market is. However, here again, certain questions regarding best practices when it comes to the presentation of benchmark data need to be raised. As can be seen from the "fine print" that goes with this slide (in our image gallery that shows all three benchmark charts that AMD is talking about today), the systems that generated these benchmark test results were configured so differently that it's pretty much impossible to attribute such a narrow lead to the alleged supremacy of one processor over the other. In addition to a big difference in their RAM configurations (the 2222SE-based system has 8GB, the 5160-based system has 16GB), the Opteron-based system is running SuSE Linux while the Xeon-based system is running Windows 2003 Server.

Perhaps drawing more attention to these system differences and AMD's choice of what to present is the fact that the second slide, which shows AMD's 2222SE enjoying a lead over Intel's 5160 on SPEC's SPECfp_rate2006, involves two systems that are more comparably configured. Whereas the Opteron-based system is once again running SuSE Linux (version 9), the Xeon 5160 system is running the 64-bit version of SuSE Linux (version 10). They're different versions of SuSE Linux, but it's not Linux vs. Windows. Even so, with two such different versions of Linux, it's hard to draw any definitive conclusions.

The third chart that AMD is offering today, one that compares the 2222SE not just to Intel's Xeon 5160 but also to a 2P system based on Intel's 4-core Xeon 5355, also raises some interesting questions: not just about the selection of benchmark data to present, but also about how competitive benchmarks are generated and how benchmark data is presented. Presumably, the choice to include a 5355-based system was made to show that AMD's 3.0 GHz 2-core Opteron can outperform Intel's 3.0 GHz 4-core Xeon. That might be a noteworthy datapoint. But here again, the datapoint's noteworthiness is easily called into question.

For starters, according to AMD, that particular benchmark (of the 5355) is expected to be published on the SPEC Web site some time today or tomorrow. When it is, it will undoubtedly appear here. But, in an unusual (but not unprecedented) move, the sponsor of the benchmark is not Intel, but rather it's AMD. With the first two benchmarks, when I asked AMD why the system configurations were so different, it gave a very familiar answer: those are the best/most recent competitive benchmark scores it could find for those tests and those processors on the SPEC.org Web site. This is the same answer that Intel gave me when I questioned its selection of benchmarks.

The problem is that since so many of the benchmarks that are submitted to SPEC are ones where the test was sponsored by a company with a hand in the systems being benchmarked (e.g., Intel or a system manufacturer that uses Intel chips is normally the sponsor of tests involving Intel processors), it's hard to find test results that are apples to apples. AMD may have control over how it configures its own servers, but it has no control over how Intel tests Intel-configured servers. As a result, when it comes time to create a chart that will be shown publicly, Intel is picking the best competitive scores it can find (regardless of whether they represent comparably configured systems to its own) and AMD is doing the same. One could ask, "Well, since some benchmarks are already published, then, when deciding to publish your own benchmarks, why not come up with a configuration that closely maps to the ones that are already published?" At least this way, you get a more honest comparison.

But, the third chart that AMD is showing today is special. That's because neither Intel nor an Intel-based system manufacturer was the sponsor of the Xeon 5355 test. AMD was. Normally, I'd say this is great. But what confounds me is how, when presented with the opportunity to control the configurations of both an AMD system and an Intel system, AMD did not configure the systems as identically as it could (so as to, as best as possible, isolate any performance differences to the processors). Instead, whereas the AMD-based system is configured with 8GB memory, a 250GB SATA disk drive and Novell's SuSE Linux Enterprise Server 9 SP3, the Intel Xeon 5355-based machine is configured with 16GB memory, an 80GB SATA disk drive, and SuSE Linux Enterprise Server 10. While the non-processor aspects of the two configurations are probably closer to each other than in any of the other benchmarks AMD is showing today, it's still not close enough. In other words, it's not apples to apples.

Why did AMD make this choice? According to an AMD spokesperson, the SPECompMbase2001 benchmark is mostly a test of the interaction between processor and memory, with disk access and operating system choice playing minimal roles. AMD claims it configured the systems as it did to make a point: that its choice to integrate the memory controllers into its silicon (a choice that Intel will apparently be making for processors that will be released next year) was such a good choice that even if it gives an Intel system twice the memory (a theoretical advantage), the Intel system will still lose. My guess is that the 5355 datapoint was not just generated to make comparisons to the 2222SE, but also as a comparative datapoint for AMD's soon-to-arrive 4-core chips (code-named Barcelona). AMD has already claimed that its Barcelona chips will crush Intel's Clovertown 5300 series of 4-core Xeons, and when Barcelona finally ships later this year, it will have to back those claims up with some actual test results.

Finally, the third benchmark chart raises yet another question about presentation of benchmark data. Whereas the first two charts focus on raw score comparisons between the systems being tested, the third takes the lowest performing system (the Intel 5160), establishes it as the 100 percent baseline to which others will be compared, and then shows the Xeon 5355-based system outperforming that baseline by 11 percent and the 2222SE outperforming both the 5160 and 5355 by 24 and 13 percent respectively. Going back to the previous two benchmark charts (SPECint_rate2006 and SPECfp_rate2006), had the same "percentage" approach to presentation been taken, the 2222SE would have shown a 2.5 percent margin over the 5160 on integer performance, and a 15 percent margin on floating point performance. Additionally, in the third chart, had the 5160 been excluded from the chart (or had that comparison been done in a separate chart) and had Intel's 5355 been used as the 100 percent baseline instead, the 2222SE would have shown a 12 percent margin over the 5355 instead of the 13 percent margin that it appears to have as presented.
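For readers who want to check the arithmetic, here is a minimal sketch in Python. The helper name `margin_pct` is my own, and the third chart's values are taken as the indexed figures described above (5160 = 100, 5355 = 111, 2222SE = 124), not as raw SPEC scores.

```python
def margin_pct(score, baseline):
    """Percentage by which `score` exceeds `baseline`."""
    return (score / baseline - 1.0) * 100.0

# First chart: raw SPECint_rate2006 scores quoted earlier in this post.
specint_margin = margin_pct(56.6, 55.2)

# Third chart: values indexed to the Xeon 5160 as the 100 percent baseline.
xeon_5160, xeon_5355, opteron_2222se = 100.0, 111.0, 124.0

# As presented, the gap to the 5355 is read in points of the 5160 baseline.
points_gap = opteron_2222se - xeon_5355

# Rebased so that the 5355 itself is the 100 percent baseline.
rebased = margin_pct(opteron_2222se, xeon_5355)

print(round(specint_margin, 1))  # 2.5
print(points_gap)                # 13.0
print(round(rebased, 1))         # 11.7
```

The one-point difference between 13 and roughly 12 comes entirely from which system is treated as the baseline, not from any change in the underlying results.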

You can draw your own conclusions about the mixing and matching of methodologies. In a separate must-see/must-hear blog post that I have coming, I interview AMD's Fruehe about the issue of how benchmarks should be presented and whether AMD needs to be careful about accusing Intel of unfair benchmarking practices if it (AMD) isn't prepared to follow its own advice.