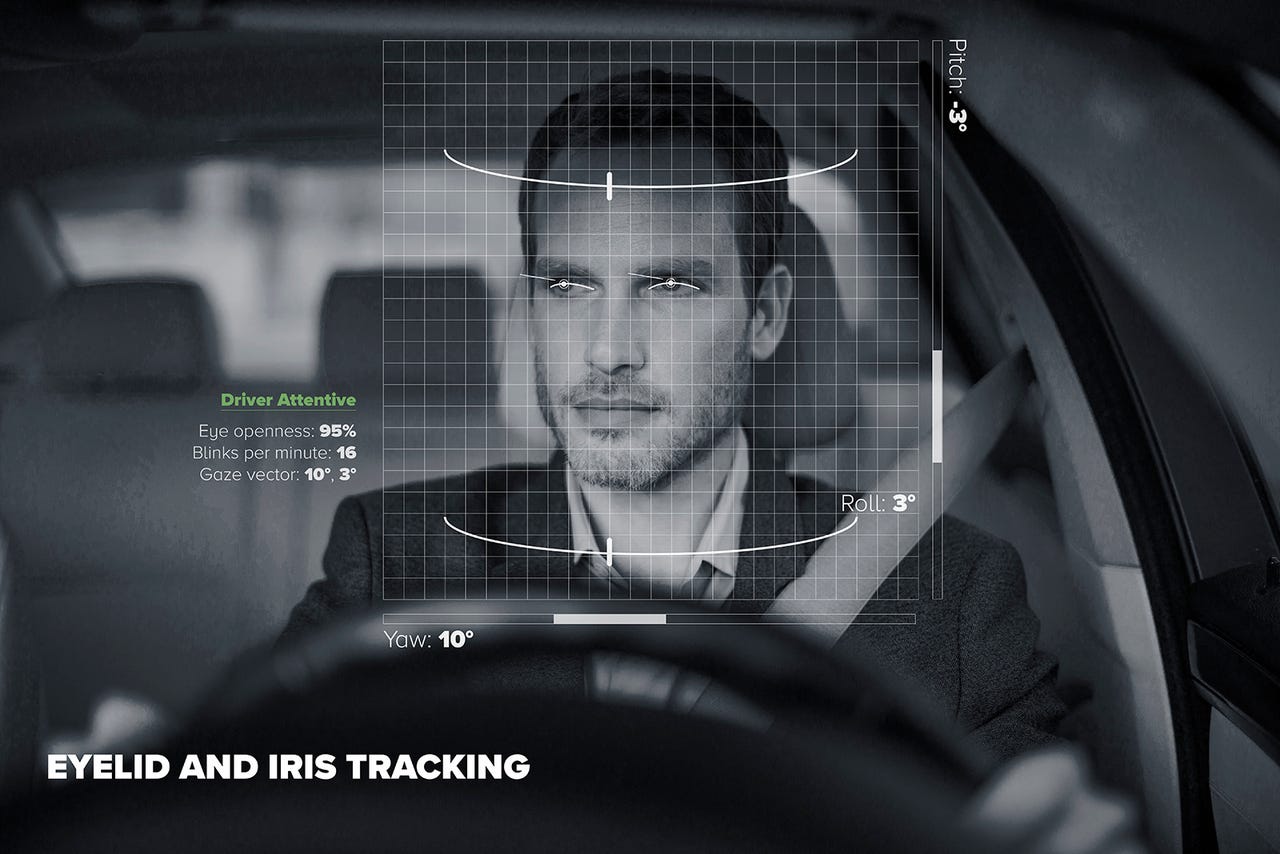

Embedded computer vision could prevent distracted driving

Image: eyeSight

The National Safety Council reports that 2016 was the deadliest year on US roads in a decade. Autonomous vehicles could eliminate the estimated 90 percent of crashes that are caused by human error, but they aren't ready yet. There are legal and practical matters to sort out, and consumer advocacy groups have urged regulators not to rush self-driving cars to market before they have been thoroughly tested. In the meantime, semi-autonomous vehicles with automated safety features are the next best thing.

According to the US Department of Transportation's latest study (2014), 10 percent of crashes are caused by distraction, which is no surprise given how many drivers have their eyes on their smartphones instead of on the road. According to NHTSA, "Data shows the average time your eyes are off the road while texting is five seconds. When traveling at 55mph, that's enough time to cover the length of a football field blindfolded." Yikes.
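The football-field comparison is easy to check with a little arithmetic. The short Python sketch below converts speed to feet per second and multiplies by the time spent looking away. The helper name `distance_covered_ft` and the 360-foot field length (120 yards, counting both end zones) are our own choices for illustration, not figures from NHTSA.

```python
# Feet per mile divided by seconds per hour gives feet-per-second per mph.
MPH_TO_FTPS = 5280 / 3600

# Assumption: a full American football field including both end zones (120 yards).
FOOTBALL_FIELD_FT = 360


def distance_covered_ft(speed_mph: float, seconds: float) -> float:
    """Distance traveled in feet at a constant speed over the given time."""
    return speed_mph * MPH_TO_FTPS * seconds


distance = distance_covered_ft(55, 5)
print(f"Distance covered: {distance:.0f} ft")
print(f"Football fields: {distance / FOOTBALL_FIELD_FT:.2f}")
```

At 55 mph a car covers roughly 403 feet in five seconds, which is indeed a bit more than the full length of the field.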

Technology caused the problem -- and newer technology can help solve it. Semi-autonomous safety features already include adaptive cruise control, automatic headlight adjustments, parking assistance, automatic emergency braking, and more. Now a new system called eyeSight uses embedded computer vision to detect when a driver is distracted or drowsy. An infrared camera tracks the driver's eyes while the computer vision and AI software detect the driver's state and analyze it in real time.
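eyeSight has not published how its software decides that a driver is drowsy, so the following is only a rough sketch of one common idea from driver-monitoring research: measuring what fraction of recent video frames show the eyes closed (often called PERCLOS). Everything here is an assumption for illustration, including the `DrowsinessMonitor` class, the per-frame `eye_openness` score that an upstream eye tracker would have to supply, and the threshold values.

```python
from collections import deque


class DrowsinessMonitor:
    """Flags drowsiness when too many recent frames show closed eyes.

    Hypothetical sketch, not eyeSight's algorithm. Thresholds are illustrative.
    """

    def __init__(self, window_frames=90, closed_threshold=0.2, perclos_alert=0.4):
        self.closed_threshold = closed_threshold  # openness below this counts as closed
        self.perclos_alert = perclos_alert        # closed fraction that triggers an alert
        self.samples = deque(maxlen=window_frames)  # sliding window of closed/open flags

    def update(self, eye_openness: float) -> bool:
        """Record one frame (0.0 = shut, 1.0 = wide open); return True to alert."""
        self.samples.append(eye_openness < self.closed_threshold)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet to judge
        closed_fraction = sum(self.samples) / len(self.samples)
        return closed_fraction >= self.perclos_alert
```

Calling `update()` once per camera frame keeps the check cheap enough to run in real time, since each frame only updates a fixed-size window. A production system would presumably combine this with gaze direction and head pose to catch distraction as well as drowsiness.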

A representative from eyeSight Technologies tells us:

"The uniqueness and benefit are derived from the combination of camera and software together, each optimized and tailored to complement the other. In addition, thanks to the strength of eyeSight's algorithm, the software is able to run on the minimum hardware requirements in terms of camera and CPU usage, resulting in a more economical package for tier ones and vehicle manufacturers."

If the driver seems drowsy or distracted, the car will alert the driver and take action through the vehicle's other safety systems, such as adjusting the adaptive cruise control to increase the distance from the car ahead. EyeSight can't detect drunk driving yet, but this capability is under development and expected to be ready in the next couple of years.

Embedded computer vision can also be used for convenience, such as identifying a driver and then adjusting the seat, mirrors, and temperature to that driver's preferences. A time-of-flight sensor installed in the center console provides gesture control, so drivers can use simple hand motions to answer phone calls, turn up the radio's volume, or communicate with the car in other ways. A promotional video for eyeSight shows a driver giving a thumbs up to "like" nearby businesses or songs that are playing, although that feature seems counterproductive since it creates a whole new set of distractions.
