Facebook open sources more machine vision, AI code

Facebook's artificial intelligence research team is open sourcing more code, frameworks, and research on machine vision and facial recognition.

In a blog post, Facebook AI Research, or FAIR, said it is opening up its latest machine vision code. The trio of tools includes:

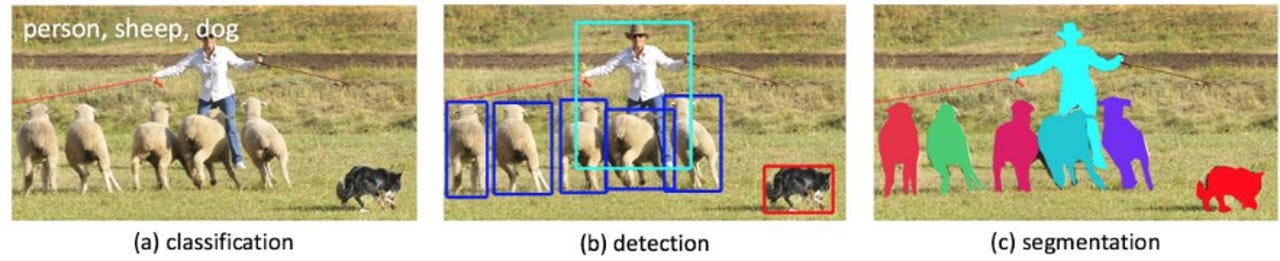

- DeepMask, which is a segmentation framework;

- SharpMask, which, combined with DeepMask, enables Facebook's machine vision systems to detect every object in an image;

- MultiPathNet, which classifies each object in an image and labels it.

For Facebook, this technology is critical because its users are sharing and using more and more visual content. Ultimately, Facebook hopes to enable image search without tags or captions. Machine vision hasn't caught up with humans yet, but the gap is closing. For instance, The New Yorker recently documented London's team of "super recognizers," people who can identify perps from grainy images. Facebook images are typically of higher quality, so a machine has a better chance of picking out what's in them.

Among the tools being open sourced, Facebook's DeepMask and SharpMask algorithms are notable. Facebook is releasing code, research papers, and a demo for the technology.

DeepMask breaks photos down into pixels and learns to recognize objects from their characteristics. Facebook's neural networks are trained to learn these patterns from millions of examples. DeepMask essentially reduces segmentation to binary classification: for each patch of an image, it decides whether the patch contains an object and, if so, which pixels belong to it. SharpMask then refines DeepMask's output.
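To make the "binary classification" idea concrete, here is a toy Python sketch. It assumes a trained network has already produced raw scores (logits) for one image patch; the function `classify_patch` and its 0.5 threshold are hypothetical illustrations, not FAIR's released code. It simply answers the two yes/no questions a DeepMask-style model poses: does the patch contain an object, and if so, is each pixel part of it?

```python
import math
from typing import List, Optional, Tuple

Grid = List[List[float]]


def sigmoid(x: float) -> float:
    """Squash a raw network output (logit) into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def classify_patch(
    mask_logits: Grid, score_logit: float, threshold: float = 0.5
) -> Tuple[bool, Optional[List[List[int]]]]:
    """Toy DeepMask-style head (hypothetical, not FAIR's implementation).

    Question 1: does this patch contain an object? (one binary decision)
    Question 2: if so, is each pixel part of it? (one binary decision per pixel)
    The logits are assumed to come from a trained network; here they are given.
    """
    if sigmoid(score_logit) <= threshold:
        return False, None
    mask = [[1 if sigmoid(v) > threshold else 0 for v in row] for row in mask_logits]
    return True, mask


logits = [[2.0, -1.0], [0.5, -3.0]]
print(classify_patch(logits, score_logit=1.0))   # (True, [[1, 0], [1, 0]])
print(classify_patch(logits, score_logit=-2.0))  # (False, None)
```

In a real system these scores are learned from millions of labeled examples; the thresholding step is the only part shown here.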

Facebook noted:

While DeepMask predicts coarse masks in a feed forward pass through the network, SharpMask reverses the flow of information in a deep network, and refines the predictions made by DeepMask by using features from progressively earlier layers in the network. Think of it this way: to capture general object shape, you have to have a high-level understanding of what you are looking at (DeepMask), but to accurately place the boundaries you need to look back at lower-level features all the way down to the pixels (SharpMask). In essence, we aim to make use of information from all layers of a network, with minimal additional overhead.
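The coarse-to-fine flow FAIR describes can be illustrated with a toy Python sketch. It is not SharpMask: the names `upsample2x`, `refine`, and `to_mask` are hypothetical, the "features" are hand-picked numbers rather than learned activations, and SharpMask's learned refinement modules are replaced by a plain sum. What it does show is the direction of information flow: start from a coarse, high-level guess about where the object is, then repeatedly upsample it and correct it with information from earlier, higher-resolution layers until the boundary is placed at the pixel level.

```python
from typing import List

Grid = List[List[float]]


def upsample2x(grid: Grid) -> Grid:
    """Nearest-neighbour 2x upsampling: each cell becomes a 2x2 block."""
    out: Grid = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def refine(coarse: Grid, earlier_layers: List[Grid], weight: float = 1.0) -> Grid:
    """Toy top-down refinement, loosely inspired by SharpMask (hypothetical).

    `coarse` stands in for DeepMask's low-resolution prediction.
    `earlier_layers` holds features from progressively earlier (higher-resolution)
    layers, each twice the size of the previous one. At every step the current
    estimate is upsampled and corrected with the finer features via a simple sum.
    """
    current = coarse
    for features in earlier_layers:
        up = upsample2x(current)
        current = [
            [u + weight * f for u, f in zip(up_row, f_row)]
            for up_row, f_row in zip(up, features)
        ]
    return current


def to_mask(logits: Grid) -> List[List[int]]:
    """Positive value -> pixel belongs to the object."""
    return [[1 if v > 0 else 0 for v in row] for row in logits]


coarse = [[1.0]]                          # 1x1: "there is an object here"
level_2x2 = [[0.0, 0.0], [0.0, -2.0]]     # mid-level feature trims one corner
level_4x4 = [[-2.0, 0.0, 0.0, 0.0]] + [[0.0] * 4 for _ in range(3)]  # pixel-level edge
for row in to_mask(refine(coarse, [level_2x2, level_4x4])):
    print(row)
# [0, 1, 1, 1]
# [1, 1, 1, 1]
# [1, 1, 0, 0]
# [1, 1, 0, 0]
```

The coarse 1x1 guess alone would mark every pixel as object; each earlier layer carves away detail the high-level view could not see.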

What's next? Facebook is hoping to use its AI to dissect video, which is more challenging due to movement.