Facebook: We're adding information warfare to our fight against malware, fraud

Facebook has set out the methods that government attackers are employing.

Facebook security and threat-intelligence teams have taken on the new role of combating governments and non-state actors that are trying to sway politics through fake news and disinformation.

The social network's cybersecurity chief, Alex Stamos, and Facebook's threat-intelligence members, William Nuland and Jen Weedon, outline their new responsibilities in a 13-page document entitled "Information Operations and Facebook".

While cybersecurity and threat intelligence at Facebook have historically focused on fighting fraud and malware, the group will now also work to address "information operations", which they define as "actions taken by organized actors (governments or non-state actors) to distort domestic or foreign political sentiment, most frequently to achieve a strategic and/or geopolitical outcome".

"In brief, we have had to expand our security focus from traditional abusive behavior, such as account hacking, malware, spam and financial scams, to include more subtle and insidious forms of misuse, including attempts to manipulate civic discourse and deceive people," they write.

The report discusses the 2016 US presidential election and acknowledges that fake Facebook personas were used to amplify news stories about data stolen from political targets.

The report doesn't explicitly mention the DNC phishing attacks and subsequent leaks. It also emphasizes that Facebook is not in a position to attribute the activity to any specific actor.

However, the authors add that Facebook's data "does not contradict" the US Director of National Intelligence's January report, which attributed the campaign against Hillary Clinton to Russian intelligence acting on Kremlin orders. Those actions included the Guccifer 2.0 persona, the DCLeaks website, and the relaying of stolen material to WikiLeaks, all of which were then amplified on Facebook and other social-media platforms.

The Facebook report distinguishes fake news, which can be financially motivated, from information operations, whose objectives are mostly political and which work by coordinating groups on social media to amplify a story.

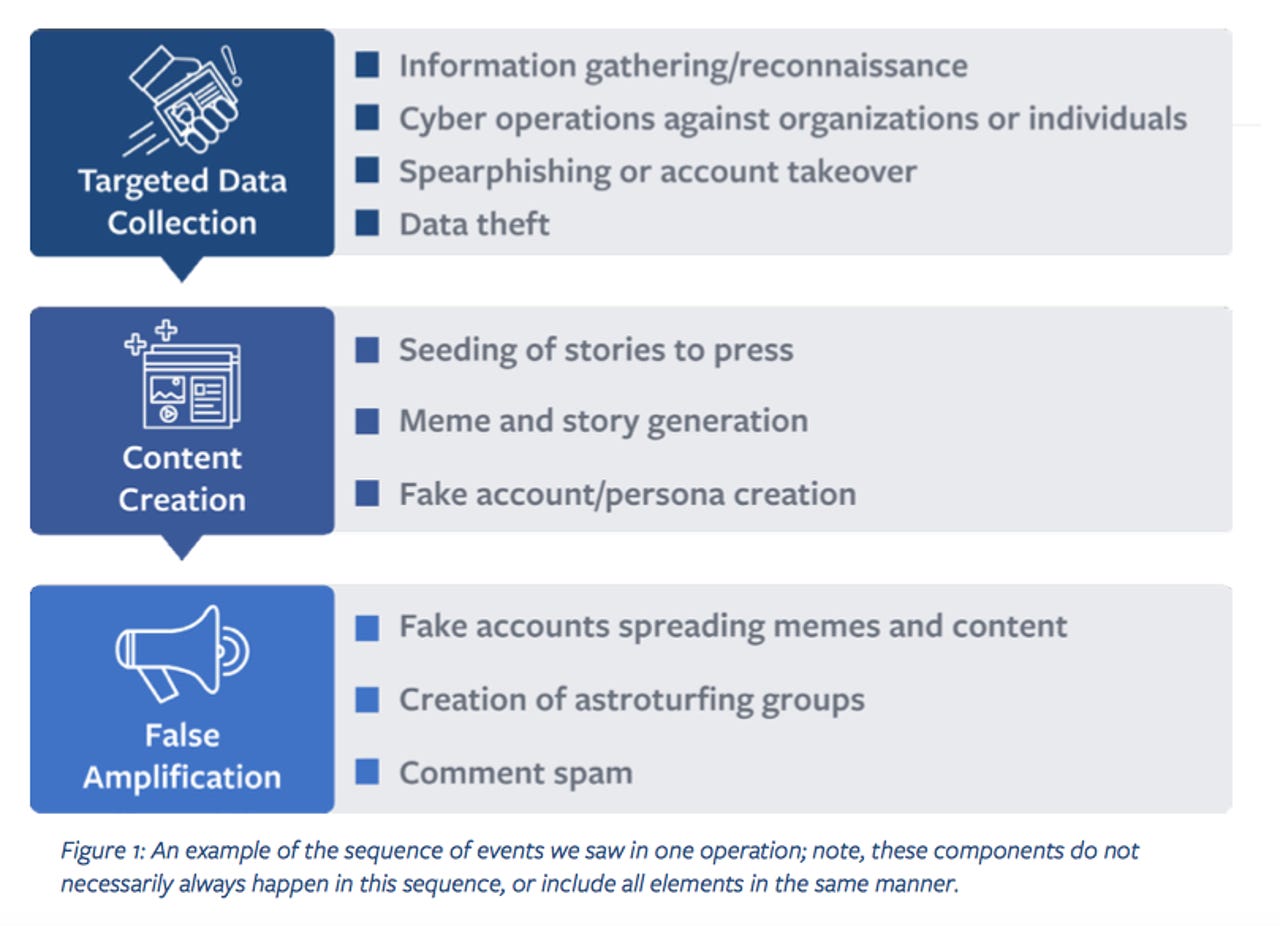

The information operations Facebook has observed on its network rely on targeted data collection, such as spear phishing; content creation, such as memes; and false amplification through high-volume fake account registrations and coordinated 'Likes'.

Facebook says it's combating data collection by offering two-factor authentication and by notifying people when they are likely being targeted by advanced attackers.

It also highlights that amplification in information operations is generally carried out not by 'social bots' but by humans who perform their duties robotically.

"We have observed many actions by fake account operators that could only be performed by people with language skills and a basic knowledge of the political situation in the target countries, suggesting a higher level of coordination and forethought. Some of the lower-skilled actors may even provide content guidance and outlines to their false amplifiers, which can give the impression of automation," they write.

Facebook says it's targeting false amplification with the help of machine learning to analyze "the inauthenticity of the account and its behaviors, and not the content the accounts are publishing".

Using these methods, Facebook deleted more than 30,000 fake accounts in France on April 13, as it revealed recently, ahead of the country's election last Sunday.