Google's DeepMind AI: Now it taps into dreams to learn even faster

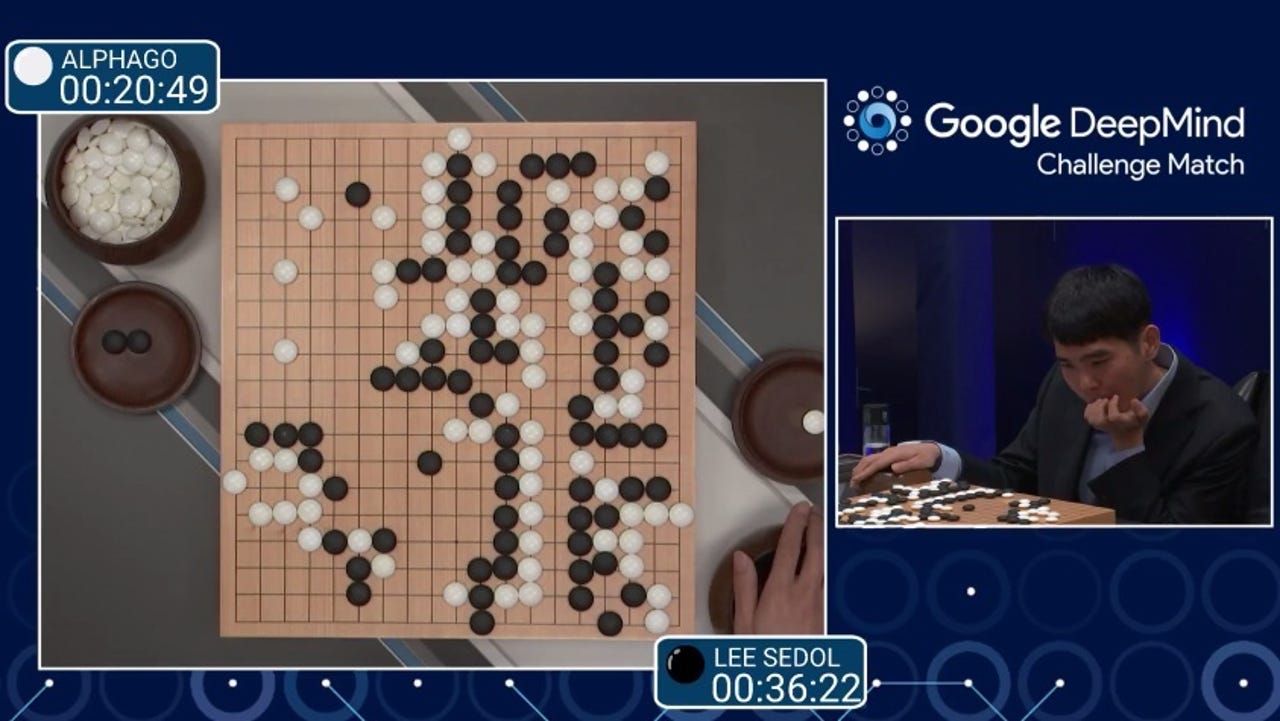

Google DeepMind's new agent uses the same deep reinforcement learning methods that the lab previously employed to master the game Go.

The latest AI creation from the researchers at Google's DeepMind lab is UNREAL, a particularly quick agent that could tackle more complex tasks than just games.

DeepMind researchers present their findings in a new paper describing UNsupervised REinforcement and Auxiliary Learning, or UNREAL, which in part borrows from the way animals dream to speed up learning.

Testing the agent on Atari games and the 3D game Labyrinth, they found that it was able to learn 10 times faster than DeepMind's previous algorithms. It also averages 87 percent of expert human performance on Labyrinth.

As DeepMind's London-based researchers explain, the agent uses the same deep reinforcement learning methods that the lab previously employed to master the game Go and several Atari 2600 games.

However, what makes UNREAL special is that it has been augmented with two additional tasks: one that borrows from how animals dream and another that resembles how babies develop motor skills.

"Just as animals dream about positively- or negatively-rewarding events more frequently, our agents preferentially replay sequences containing rewarding events," they write in the paper.

The researchers used this concept to teach the agent to focus on visual cues from its recent history of experiences, which signal shortcuts to greater rewards.

"The agent is trained to predict the onset of immediate rewards from a short historical context. To better deal with the scenario where rewards are rare, we present the agent with past rewarding and non-rewarding histories in equal proportion," they explained in a blog post.

"By learning on rewarding histories much more frequently, the agent can discover visual features predictive of reward much faster."
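The sampling idea in that quote can be illustrated with a short Python sketch. This is not DeepMind's code: the `BalancedReplay` class, its parameters, and the three-frame history length are hypothetical names and values chosen for illustration. It assumes only what the researchers describe, namely that rewarding and non-rewarding histories are drawn in equal proportion even when rewards are rare.

```python
import random
from collections import deque


class BalancedReplay:
    """Toy replay buffer (hypothetical, for illustration only).

    Stores (observation, reward) pairs and samples short histories so that
    rewarding and non-rewarding examples appear about equally often.
    """

    def __init__(self, capacity=2000, history_len=3, seed=None):
        self.frames = deque(maxlen=capacity)
        self.history_len = history_len
        self.rng = random.Random(seed)

    def add(self, observation, reward):
        self.frames.append((observation, reward))

    def sample(self):
        """Return (history, rewarded).

        history is the list of observations just before step i, and
        rewarded says whether the reward stored at step i was non-zero.
        """
        frames = list(self.frames)
        candidates = range(self.history_len, len(frames))
        rewarding = [i for i in candidates if frames[i][1] != 0]
        non_rewarding = [i for i in candidates if frames[i][1] == 0]

        # Flip a fair coin to pick the class, regardless of how rare it is.
        pool = rewarding if self.rng.random() < 0.5 else non_rewarding
        if not pool:  # fall back if one class has no examples yet
            pool = rewarding or non_rewarding
        if not pool:
            raise ValueError("not enough frames to build a history")

        i = self.rng.choice(pool)
        history = [obs for obs, _ in frames[i - self.history_len:i]]
        return history, frames[i][1] != 0


if __name__ == "__main__":
    replay = BalancedReplay(seed=0)
    for t in range(1000):
        # Rewards are rare here: one step in 50.
        replay.add(t, 1.0 if t % 50 == 49 else 0.0)
    labels = [replay.sample()[1] for _ in range(2000)]
    print("fraction of rewarding samples:", sum(labels) / len(labels))
```

In this toy setup only 2 percent of steps carry a reward, yet roughly half of the sampled histories are rewarding ones, which is the skew the researchers describe.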

The other task concerns how the agent controls pixels on the screen, which focuses it on learning how its actions affect what it sees and, in turn, what is useful for playing well and scoring more highly in a given game.
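The article does not spell out how "controlling pixels" is measured. One plausible way to picture it, offered here as an assumption rather than as DeepMind's implementation, is to score each small region of the screen by how much its pixels changed between two consecutive frames, and to treat that score as an extra training signal. The function name `pixel_change_rewards` and the 4x4 cell size are hypothetical.

```python
def pixel_change_rewards(prev_frame, next_frame, cell=4):
    """Mean absolute intensity change per cell x cell block.

    Frames are lists of rows of grayscale values with equal dimensions.
    Illustrative sketch only; the cell size is an assumed value.
    """
    height, width = len(prev_frame), len(prev_frame[0])
    rewards = []
    for top in range(0, height - cell + 1, cell):
        row = []
        for left in range(0, width - cell + 1, cell):
            total = 0.0
            for y in range(top, top + cell):
                for x in range(left, left + cell):
                    total += abs(next_frame[y][x] - prev_frame[y][x])
            row.append(total / (cell * cell))
        rewards.append(row)
    return rewards


if __name__ == "__main__":
    before = [[0] * 8 for _ in range(8)]
    after = [row[:] for row in before]
    after[0][0] = 16  # a single pixel changes in the top-left cell
    print(pixel_change_rewards(before, after))
```

An agent rewarded for large values in this grid is pushed to find actions that visibly change the screen, which is one reading of the motor-skill analogy the researchers draw.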

Using the three techniques combined, the researchers tested the agent on 57 Atari games and 13 levels of Labyrinth.

Part of the achievement is not just that the agent excels at each game, but that it doesn't need to be customized to learn each one.

As the researchers pointed out, DeepMind's main goal is to break new ground in AI using programs that "can learn to solve any complex problem without needing to be taught how". With UNREAL, they now have an agent that both learns faster and is more flexible.

"We hope that this work will allow us to scale up our agents to ever more complex environments," the researchers said.
