Half man, half machine: The clever haptics-based system helping astronauts in space pilot robots on earth

Last week, ESA's Telerobotics and Haptics Laboratory, based at ESTEC, demonstrated that a human operator 400km away on the International Space Station could make a land-based rover move with near-human precision.

In the wake of natural disasters and the expected effects of climate change, there is increasing global interest in developing exploratory deep-sea, deep-space, and Arctic rovers as well as rovers to aid humanitarian efforts in disaster areas on the ground. But this charge demands robots that can move with multiple degrees of freedom -- robots that move like a human can.

"The control methods we used were very similar to what you would expect from a DARPA challenge," engineer André Schiele, who led the ESA lab's project, told ZDNet. In such challenges, autonomous robots execute actions according to instructions from their onboard computers. "But our system combined the best of both a human operator and an autonomous system. This is totally new."

In the ESA system, using haptic technology, the human operator provides all the robot's reasoning, supervision, and decision-making.

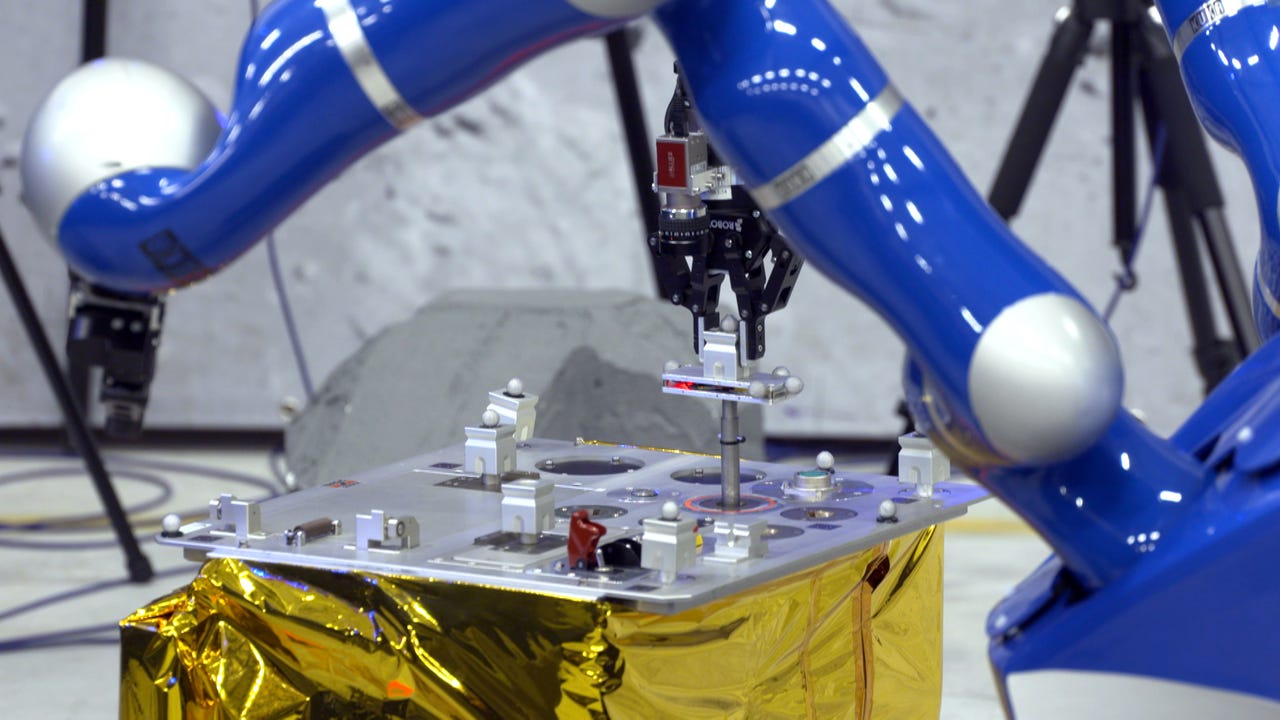

Using the system, a robot on earth was tasked with pushing a peg into a round hole just far enough to establish an electrical connection between the two and complete a circuit. ESA astronaut Andreas Mogensen, on the International Space Station, piloted the wheeled robot and controlled the movements of its arm with a force-feedback-enabled joystick, feeling the resistance the robot sensed as it pushed the peg in.

The robot's task may have seemed simple, but it represented a significant achievement in haptic design and telecommunications. Mogensen and the land-based robot moved in sync despite the 144,000-km roundtrip distance the signal had to travel between them: first, from the ISS to higher orbiting satellites that sit 36,000km away from Earth, then back down to a NASA ground terminal, and finally across the Atlantic to ESA through a transatlantic cable.

During its roundtrip, the data experienced a 0.8-second time delay, characteristic of the Tracking and Data Relay Satellite (TDRS) system it relied on. The TDRS system was developed by NASA to keep spacecraft better connected to Earth-bound stations.
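To put the two figures side by side, the short Python sketch below works out how much of the 0.8-second delay pure signal travel time would account for over the quoted 144,000-km roundtrip. It assumes the signal moves at the vacuum speed of light along the whole path, which is a simplification (the transatlantic cable leg is slower), and the article does not break down where the rest of the delay comes from.

```python
# Rough check of the article's figures. Assumption: the signal travels at
# the vacuum speed of light over the entire path (a simplification).
SPEED_OF_LIGHT_KM_S = 299_792.458  # km per second

ROUNDTRIP_KM = 144_000    # roundtrip path length quoted in the article
MEASURED_DELAY_S = 0.8    # roundtrip delay quoted in the article


def propagation_delay_s(distance_km: float) -> float:
    """Time for a signal to cover distance_km at the speed of light."""
    return distance_km / SPEED_OF_LIGHT_KM_S


propagation_s = propagation_delay_s(ROUNDTRIP_KM)
# Whatever is left over is not explained by distance alone.
remainder_s = MEASURED_DELAY_S - propagation_s

print(f"Travel time over {ROUNDTRIP_KM} km: {propagation_s:.2f} s")
print(f"Delay not explained by distance:   {remainder_s:.2f} s")
```

On these assumptions, distance alone explains a little under half a second; the remainder would have to come from other stages of the link, which the article does not itemize.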

"Time delay in a closed-loop system is very bad," says Schiele. Usually, the human gives commands to the robot in what is called an open-loop scenario, but in this case, the robot responded to those commands and sent back signals from its own movements: a closed-loop scenario.

"It makes it very quickly unstable," explains Schiele, drawing on an analogy of trying to maneuver your car through a sea of pedestrians and bikes during rush hour. At some point, it becomes so difficult to try to sidestep every roadblock that you just stop.
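Why delay destabilizes a closed loop can be seen in a toy simulation. The sketch below is not ESA's system or Schiele's technique: it is a hypothetical, minimal model in which a controller pushes a position toward a target in proportion to the error it sees, but the position reading it acts on is stale by a fixed delay. The function name `simulate`, the gain values, and the time step are all illustrative assumptions.

```python
# Toy model (an assumption, not ESA's controller): a proportional
# controller drives a position toward a target, but it only sees the
# position as it was `delay_s` seconds ago.

def simulate(gain: float, delay_s: float, dt: float = 0.01,
             duration_s: float = 20.0, target: float = 1.0) -> float:
    """Return the largest tracking error seen in the final 2 seconds."""
    delay_steps = round(delay_s / dt)
    steps = round(duration_s / dt)
    positions = [0.0]  # position history, starting at rest at 0
    for k in range(steps):
        # The controller acts on a stale measurement.
        measured = positions[max(0, k - delay_steps)]
        command = gain * (target - measured)  # velocity command
        positions.append(positions[-1] + command * dt)
    tail = positions[-round(2.0 / dt):]
    return max(abs(target - p) for p in tail)


no_delay = simulate(gain=4.0, delay_s=0.0)      # settles on the target
with_delay = simulate(gain=4.0, delay_s=0.8)    # oscillates and grows
gentler = simulate(gain=1.0, delay_s=0.8)       # settles, but sluggishly

print(f"gain 4, no delay:    final error {no_delay:.3g}")
print(f"gain 4, 0.8 s delay: final error {with_delay:.3g}")
print(f"gain 1, 0.8 s delay: final error {gentler:.3g}")
```

In this toy model the same controller that settles cleanly with no delay overshoots more and more once it reacts to 0.8-second-old information. Turning the gain down restores stability at the cost of responsiveness, which is a naive workaround and presumably not what a real-time haptic link can afford.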

Of course, the point of ESA's experiment was to complete the task without having to shut it down. Schiele and his team overcame the delay with novel control-system techniques that let Mogensen and the robot interact in real time.

Haptic guidance may become the preferred method of controlling robots in the future because it is more intuitive than sending written computer commands. Investigators at Japan's Fukushima disaster site now control robots at a site within the radiation zone by tapping away on the small keys of their laptops while wearing large, unwieldy rubber gloves. With haptics, a robot can just copy the operator's movements.

In the future, Schiele hopes to use this technology to control robotic arms in space, to retrieve space debris, as well as to perform repairs of industrial sub-sea equipment.

This week, Schiele and his team discovered something unexpected. At some point during the experiment, there was a 12-second time delay in the human-robot feedback system. Although they don't yet know exactly what caused the delay, Schiele is pleased that his team's design didn't fail in spite of the holdup. "He still managed to accomplish the task," Schiele says of Mogensen.
