How did IT fall so far behind the tech curve?

Information technology departments are overloaded, missing the consumerization wave, and failing to use new developments to cut their budgets.

Those are some of the takeaways from a Gartner presentation at the IT Symposium in Orlando. The spiel by Gartner analysts David Mitchell Smith and Tom Austin examines the state of IT departments at a time when their own users are rapidly reshaping technology. How exactly did IT become so crotchety?

Here's the scene setter:

Most IT professionals want the world to proceed in orderly, incremental fashion, with no massive overnight changes and with plenty of time to adapt to external change. Significant discontinuities are the stuff of which nightmares are made. For example, when assumptions about the useful life of an asset shift early in a project, plummeting from several years to several months, investors can get ruined, and people can lose their jobs and more. We see five major intersecting discontinuities on the horizon. They amplify each other. Any one of them can upset the balance of power between users and their IT organization (or between vendors in a segment). Put the five together and let them amplify each other's dislocating impact, and there is major trouble looming.

These five amplifying developments are:

- Software as a service
- Open source
- Cloud computing
- Web 2.0
- Consumerization

That list isn't all that surprising, but Smith and Austin do capture the conundrum well.

More of the argument:

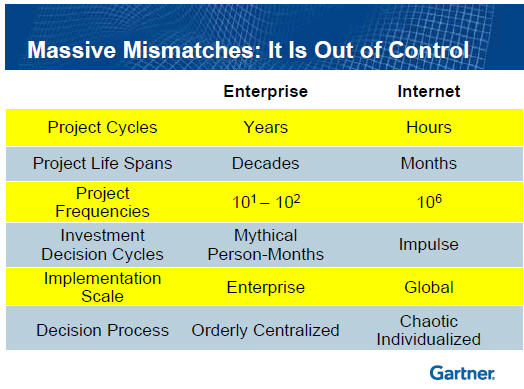

There is a fundamental mismatch between what enterprise IT is good at and what is happening on the Internet. For investment projects, IT organizations typically spend six to eight years from initial conceptualization through selling, planning, testing and implementation of the first release. Project cycles, life spans and frequencies of Internet-related developments (and consumer-related product or service introductions) are radically different.

How do you provide secure, enterprise-class technology while catering to the masses and letting users innovate?

Gartner argues that IT departments have to assess what they're good at and farm out the rest to their users. Does IT really need to issue smartphones? Probably not. Instead of supporting company laptops at $2,500 a pop, give workers an annual stipend of $1,000 and let them buy their own PCs. In a nutshell, IT departments should split in two and give users what they want (and make them pay for it, too). One side of the IT department will act as a top-down dictator, and the other will rely on bottom-up free markets.

Gartner has been making this user-provided IT pitch for a while (the research firm likens the company laptop to the company car of the 1970s), and the prediction hasn't exactly become the norm. However, letting employees bring their own gear increasingly makes sense. Why? Employees are already bringing what they want to work anyway. Exhibit A: The iPhone. Exhibit B: Google. Exhibit C: Facebook. You get the idea.

Here's the pitch for shifting IT to the users:

The choice for IT departments is relatively clear. You can deny that guerrilla IT has exploded in your company (for example, the sales team's use of Salesforce.com and WebEx without central approval). Or you can embrace the digital natives and run with it. If you choose the latter, Gartner recommends hiring college interns just to learn from them.

Gartner also offers a few examples of security issues and of how to build an architecture that straddles the line between central control and a free user market. Ultimately, though, the case boils down to developing two separate IT approaches.