Inside Facebook's lab: A mission to make hardware open source

Amir Michael, Facebook's manager of system engineering, is standing in the company's hardware lab, trying not to get in the way of the assorted engineers, wheelie-chairs and bottles of water scattered around the room, as he describes Facebook's attempt to democratise hardware.

"We're trying to take away a lot of the uniqueness of server design" to create a "clean, open canvas" for companies to base their datacentres around, he explains.

What Michael is talking about are the server and storage systems that Facebook uses in its datacentres. The social-networking leader hopes that by publishing the designs and specifications of this low-power, low-cost hardware, it can reduce the cost of infrastructure for businesses large and small.

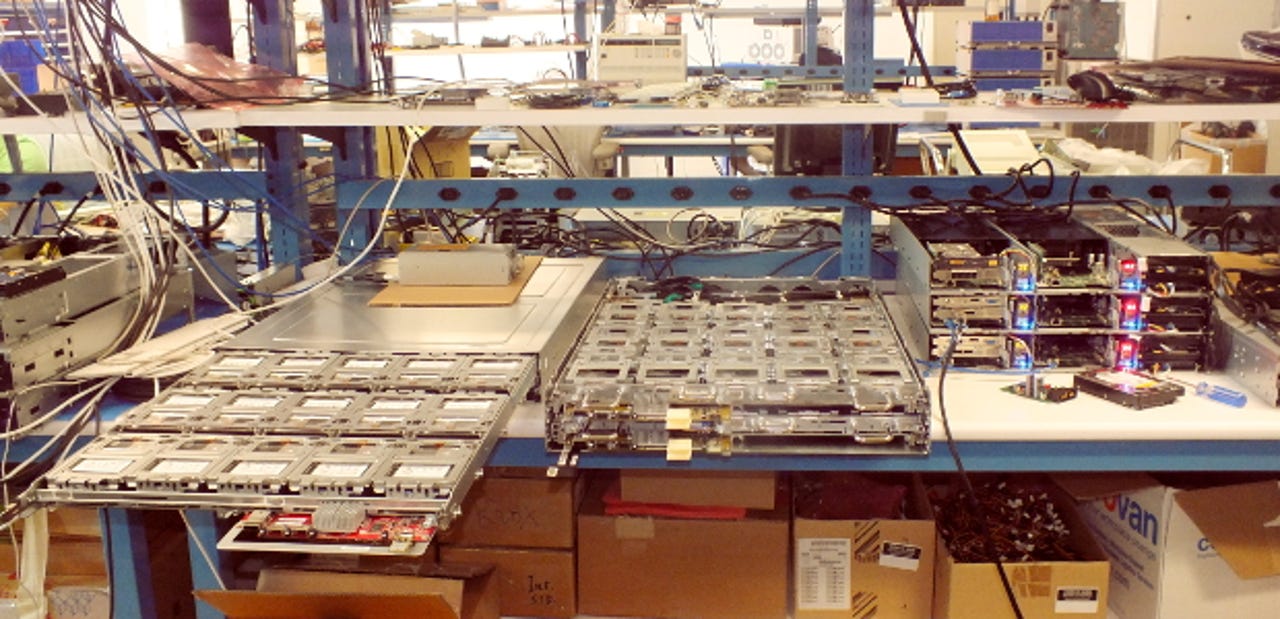

The equipment in the lab — a novel Open Vault storage array and various versions of the Open Compute server — is being developed by Facebook as part of its Open Compute Project, a cross-industry scheme by the company to bring an open-source approach to physical hardware.

The Open Compute Project was launched by Facebook in April 2011 as a way of distributing its server designs, but in an attempt to seek broader participation in the scheme, the company spun the project off into its own foundation in October 2011.

Facebook remains the initiative's de facto leader: its vice president of hardware design and supply chain operations, Frank Frankovsky, is the chairman of its board of directors. That said, the rest of the board are from major enterprises such as Intel, Rackspace, Arista Networks and Goldman Sachs. If these companies are involved in this scheme, you can assume that the Open Compute approach is something that both IT buyers and IT sellers think is worth a bet.

Lifting the industry

"Our goal is to be non-proprietary," Matt Corddry, a senior manager of hardware engineering at Facebook, said during my recent visit to the lab. "We're not trying to maintain an advantage with this gear, we're trying to elevate the industry."

This approach contrasts with other large cloud operators. Google, Amazon and Microsoft are all notoriously secretive about their datacentre infrastructure, though Google occasionally releases research papers outlining some of its more advanced software systems.

The Open Compute scheme has received broad industry interest, with both AMD and Intel contributing motherboard designs and CAD documents. Facebook thinks that in time, its Open Compute designs could shake up the enterprise IT landscape.

"What I see happening is a lot of these principles that we've shared will start to take root in enterprise systems as well," Michael said. "The server can be lightweight; it can be vanity-free."

In fact, the Open Compute Foundation says it believes upcoming Open Compute motherboards designed by Intel (codenamed 'Decathlete') and AMD ('Roadrunner') could, in time, become "a universal motherboard, in terms of functionality, supporting 70 to 80 percent of target enterprise infrastructure use cases".

As for the hardware itself, both the Open Compute server and the storage equipment are designed differently from the types of gear being made by enterprise vendors such as HP, IBM and Dell.

Sled servers lead the way

The servers (pictured) are based on a sled chassis built to work with Facebook's Open Rack. This is a new approach to server rack design that takes equipment typically found in servers, such as power systems and networking, and plugs it into the rack itself.

They take power in from a power distribution system that lives in a portion of the rack, rather than the server, and the drives are situated at the front to make it simpler to swap them out if they fail.

The prototype servers (pictured) are version 2 of the Open Compute specification.

The major differences in the new compute server compared with its predecessor are a move to a single motherboard per chassis, larger fans (now 80mm, up from 60mm) that consume less power, and the incorporation of an air duct in the server sled's chassis. This means Facebook can save on the cost of building plastic air ducts then fitting them to its servers.

In the future, Facebook hopes to entirely remove the drive from the web servers and boot off a low-power, more-reliable mSATA solid-state drive. A 60GB drive should be sufficient to host Facebook's OS and its logs.

mSATA drives are typically used in laptops, but Michael's team has built an adapter that lets Facebook use them in servers.

Corddry is keen on this, as it lets Facebook obtain cost savings from a "really high volume commodity part", he said, noting that "you don't need enterprise-grade equipment to boot a web server".

Open Vault storage push

The other major project the Facebook hardware labs team is working on is a way of redesigning storage arrays to suit large-scale datacentres.

The Open Vault equipment, codenamed Knox (pictured), packs multiple hard drives onto a retractable sled. This can be pulled out and then, using a hinge, lowered to allow engineers to easily swap drives out in case of failures.

Facebook has a constant backlog of equipment that needs maintenance, Michael said, with a rough annualised failure rate of about one percent. For this reason, making it easy to maintain kit and swap out failed drives has become a priority.

Knox has a feature that lets it cut the power to individual drives when they are not being used, and differing numbers of drives can be attached to each motherboard according to the processing needs of the storage server.

This gives Facebook two useful features. To start with, it can give power to its 30-odd drives according to the frequency with which their data is accessed. In other words, regularly accessed information can be kept on drives that are always switched on, while rarely touched data can be put on drives that are by default powered-down and only switched on when an access request is made. That lets the company save on power.
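The access-frequency policy described above can be sketched in a few lines. This is only an illustration, not Facebook's implementation: the `Drive` class, the `reads_per_day` metric and the `hot_threshold` value are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Drive:
    slot: int
    reads_per_day: float      # hypothetical access-frequency metric
    powered_on: bool = False


def apply_power_policy(drives, hot_threshold=100.0):
    """Keep frequently read drives powered; power the rest down by default."""
    for drive in drives:
        drive.powered_on = drive.reads_per_day >= hot_threshold
    return drives


def read(drive):
    """Wake a powered-down drive on demand; return True if it had to be woken."""
    woke = not drive.powered_on
    drive.powered_on = True
    return woke


drives = apply_power_policy([Drive(0, 500.0), Drive(1, 2.0)])
```

In this sketch, the busy drive in slot 0 stays on, while the rarely read drive in slot 1 draws no power until a request calls `read` on it.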

Another benefit is that it gives the company a way to solve hardware problems.

"Drives... actually fail the most in our datacentre," Michael said. "Part of our procedure is when a drive fails we try and power cycle it."

Yes — sometimes it really is a simple matter of turning it off and on again, according to Facebook's team. "A lot of drive manufacturers get returns with no trouble found," Corddry said.
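As a rough sketch of that procedure, the function below power cycles a failed drive before flagging it for replacement. The callbacks `power_cycle` and `is_healthy`, and the `max_attempts` parameter, are hypothetical stand-ins for whatever controls and health checks the real hardware exposes.

```python
def handle_failed_drive(drive_id, power_cycle, is_healthy, max_attempts=1):
    """Power cycle a failed drive; only flag it for replacement if that fails.

    power_cycle and is_healthy are hypothetical callbacks supplied by the caller.
    """
    for _ in range(max_attempts):
        power_cycle(drive_id)
        if is_healthy(drive_id):
            return "recovered"
    return "replace"


# Simulated hardware: drive 7 comes back after a power cycle, drive 9 does not.
cycled = []
recoverable = {7}


def fake_power_cycle(drive_id):
    cycled.append(drive_id)


def fake_is_healthy(drive_id):
    return drive_id in recoverable


result_ok = handle_failed_drive(7, fake_power_cycle, fake_is_healthy)
result_bad = handle_failed_drive(9, fake_power_cycle, fake_is_healthy, max_attempts=2)
```

Trying the cheap fix first is what keeps a drive with no real fault from being shipped back to the manufacturer.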

An additional benefit of Knox is that its design makes it relatively easy to manipulate the proportion of storage assigned to processing power, so data-intensive servers will get more computers, and less-intensive ones, such as an array storing a bunch of rarely accessed three-year-old photos, get less.

"Typically when we deployed storage before, we kept a very fixed ratio of CPU to disk," Michael said. "What is unique about Knox is we can vary the number of disks to each CPU."

So far, Knox has gone through three separate iterations: red boards mark the first generation, yellow the second, and green the production version. Facebook hopes to begin mass production of Knox systems in October, and they will be manufactured by Wiwynn, an Asian original design manufacturer (ODM).

The Asian connection

Facebook's use of Wiwynn and other ODMs, such as Quanta, represents another Open Compute approach that could be a boon for IT buyers.

By using an ODM, businesses can customise an Open Compute server or storage design according to their needs, then go directly to a manufacturer for the hardware to be built. This completely sidesteps the typically hefty mark-ups added by both channel companies and enterprise vendors.

Wiwynn already has customisable Open Compute storage and server designs available on its website.

Besides giving customers options beyond the channel and enterprise vendors, the scheme has also improved the fortunes of the ODMs themselves.

To meet expected demand for such equipment, Quanta said in May that it will launch a US-based subsidiary to sell Open Compute hardware to US companies.

Some businesses will still go the OEM route, Michael said, but this is more due to the associated integration, delivery and support services, combined with an aggressive salesforce, than technical need. Ultimately, Michael thinks OEMs such as Dell, HP or IBM are primarily geared to deal with customers with a few servers, rather than the thousands that Facebook, Google or Microsoft operate.

The long road to an open-source datacentre

So far, HP, Dell, AMD, Intel and a few other vendors have stated that they are working on Open Compute-based kit.

In the future, Facebook hopes to see component vendors publish some of the designs of their equipment in an open-source format.

"It's starting to get ticked off around the edges," Michael said, noting that Mellanox is expected to publish as open source an Open Compute server network-interface card (NIC). It does not threaten the company's business model to do this, as "what they're trying to sell is the silicon", he argues.

Along with this, he hopes Intel could openly publish some of its motherboard designs. "Intel doesn't really need to keep [them] secret," he said.

And, one day, he thinks it could be possible for this component openness to "get into the level of the chip as well," though that is probably a long way off.

All in all, "we're seeing really impressive design work from members of the Open Compute consortium", Corddry said. "We really have what you would call an open-source movement."

If the scheme continues, then Corddry thinks Open Compute gear could fit 90 percent or more of basic datacentre infrastructure needs.

Barriers to entry

The Open Compute initiative could succeed because it has the potential to lower the barriers to entry for datacentre-intensive technologies such as web search, according to Simon Wardley, a researcher at the Leading Edge Forum.

"If we look at cloud computing in terms of infrastructure, this is all about commodity provision, it's all about operational efficiency," Wardley said. "Anything [that lowers the barrier of entry] in that space will enable competitors to set up and compete."

"There's been all sorts of open-source collaborative efforts around hardware before, but I can't think of one at this scale," he added.

If more vendors participate in the effort and more components get an open-source variant, then Michael thinks it could spur a major change in the datacentre technology industry.

"A lot of the [datacentre] infrastructure has the potential to come down in cost," Michael says. "From the end-user level, the stuff that's already free, you'll get more of it. The things that you pay for will just benefit."