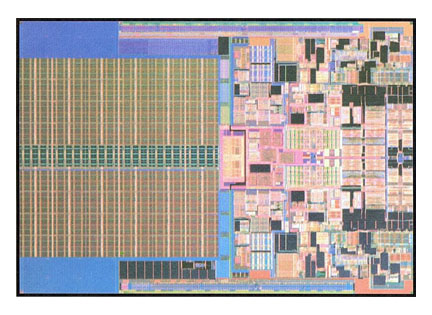

Inside Intel's Penryn

Like all new processors, Intel's Penryn is a mixture of novel ideas and older, established designs. In Penryn's case, the mix leans heavily towards the latter; the processor is, at heart, the same as the previous Core 2 chip — just shrunk to fit 45nm instead of 65nm design rules.

A tick, not a tock

This follows Intel's 'tick-tock' design methodology, adopted after the company went through an embarrassing period of dropped projects and missed deadlines. There are two major thrusts to innovation in silicon: process and architecture. Process sets the ground-rules for chip design — what the electronic, thermal and physical parameters are for circuits and how to make them economically; architecture defines what can be done with the results. As process and design are interdependent, trying to make major advances on both fronts simultaneously multiplies the risk without necessarily multiplying the advantage. Intel has therefore put both process and architecture on two-year development cycles — which roughly corresponds to the periodicity of Moore's Law — but one year out of sync with each other.

Penryn uses an existing architecture, but shrinks the process technology down from 65nm to 45nm.

Penryn is a 'tick': an old architecture on the new 45nm process. Next year will see the 'tock', Nehalem, a brand-new architecture built upon what will then be the old process; the year after that will see another tick, Westmere, which is a shrunk-down Nehalem on the new 32nm process. This makes for much more manageable and predictable development, but runs the risk that advances in performance year on year may not be as spectacular as once expected.

Performance

At first sight, Penryn would seem to confirm that analysis. Benchmarks versus the previous generation of processor show modest gains, if any, on most software. Areas with significant performance improvements are highly specialised, and likely to stay that way. But that's to be expected: the processor runs at roughly the same clock speed as its antecedents and has roughly the same architecture.

Penryn's advantages are more subtle. The most significant for the future are in process and, in particular, the transistor design. The metal gate, high-k transistor in the chip, first announced by Intel in 2003, is one of the most important innovations in Penryn and will be the basis for at least the next three generations of chip, until the end of 32nm development. Intel isn't saying what happens after that, but there's a good chance that for 22nm and below manufacturers will have to use a radically different sort of transistor with multiple gates surrounding a very thin sliver of silicon. Intel's version of this is known as a 'trigate' transistor; similar configurations are called FinFETs by others.

Intel's new 'high-k metal gate' transistor, introduced in the Penryn family, uses new (and currently secret) materials in the electrode and gate insulator.

However, the high-k metal gate transistor is here today. It differs from existing transistors in two major ways: the gate (the electrode that carries the switching voltage) is made from as-yet-undisclosed metal alloys, while the gate insulator, which isolates the switching voltage from the switched current, has an also-secret exotic blend of silicon and other materials, most notably hafnium. This high-k mixture can be made much thicker than the pre-45nm design's silicon oxide insulating layer, while having very similar electrical characteristics. That's just as well, as the 65nm layer was just a few atoms thick and couldn't be made any thinner.

Intel claims many advantages for this new transistor, most notably a 20 percent increase in performance, 10 times less gate leakage and 5 times less leakage across the transistor when it's turned off — all with respect to the 65nm design. The company has also developed ways of fine tuning transistor performance, allowing higher performance to be traded off against lower power consumption and vice-versa. These trade-offs can be mixed in the same design, to save power in parts of the circuit where performance isn't needed without compromising speed elsewhere, or more generally across different parts. This means that low-power portable and mobile chips can be made to the same basic design as high-power server parts, but with their transistor parameters set appropriately.

Power consumption

As may be expected, the modest (but useful) performance increase of the underlying transistor technology is overshadowed by its much superior use of power. To make the most of this, Intel has added a new Deep Power Down (DPD) mode to the previous four low-power states in the 65nm designs. In DPD mode, the processor dumps almost its entire state into a special 8KB memory powered by the I/O voltage supply, and then drops the voltage in the rest of the core to a very low setting. Everything stops, all data outside the special memory is lost and the power consumption drops to under 200 milliwatts.

Intel doesn't just turn off the power to the core completely because, if it did, it would take too long to recharge the chip when it's time to turn back on. When that time comes, the chip follows exactly the same procedure as it does when turning on from cold or after a hard reset; however, it also checks the state of the internal memory and, if it realises it's coming back from deep sleep, restores everything to where it was immediately beforehand.

Deep Power Down (DPD) is a new low-power state — the lowest of five in the Penryn architecture. Only mobile variants currently use it, though.

The decision to keep a few hundred millivolts on the chip during deep sleep means the chip can still save power even if it goes into Deep Power Down mode as often as every three milliseconds or so. Any more often than that, and the energy needed to recharge it means the journey there and back actually costs more power than is saved. Intel claims that with Deep Power Down enabled and used, the processor can save up to 44 percent during normal office tasks, and with an exit latency of around 150 to 200 microseconds it has little impact on perceived responsiveness. So far, although the circuitry is present on all Penryn variants, it's only enabled for mobile parts, primarily because only the mobile chipset, Santa Rosa, supports it.
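The break-even reasoning above is simple arithmetic, and can be sketched roughly as follows. The function names, the 1.0 W shallow-idle figure and the transition energy are illustrative assumptions, not Intel's numbers; only the 200 milliwatt floor and the three-millisecond break-even come from the figures quoted here.

```python
def net_energy_saved(idle_power_w, dpd_power_w, transition_energy_j, sleep_time_s):
    """Joules saved by one deep-sleep trip compared with staying in a shallower idle state.

    A negative result means the trip there and back cost more than it saved.
    """
    saved_while_asleep = (idle_power_w - dpd_power_w) * sleep_time_s
    return saved_while_asleep - transition_energy_j


def break_even_time(idle_power_w, dpd_power_w, transition_energy_j):
    """Shortest sleep duration (seconds) for which entering deep sleep pays off."""
    return transition_energy_j / (idle_power_w - dpd_power_w)


# Hypothetical figures: 1.0 W in a shallow idle state, 0.2 W in deep sleep,
# and a transition cost chosen so that break-even lands at three milliseconds.
IDLE_W, DPD_W = 1.0, 0.2
TRANSITION_J = (IDLE_W - DPD_W) * 0.003

print(break_even_time(IDLE_W, DPD_W, TRANSITION_J))
print(net_energy_saved(IDLE_W, DPD_W, TRANSITION_J, 0.001) < 0)  # a 1 ms nap loses energy
```

With these made-up inputs, a one-millisecond sleep is a net loss and a ten-millisecond sleep is a net gain, which is the shape of the trade-off the designers had to tune.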

Anywhere the designers could save power, they did. For example, although Penryn is a 64-bit chip it spends a lot of its time doing 32-bit operations because they make up the vast majority of existing software. Whenever possible, therefore, the chip turns off the upper 32 bits of the 64-bit data path, isolating them from power — or the upper 48 bits if only 16 bits are in use; the same idea works at all levels, with entire areas of the chip being disabled through power-switching transistors when not in use, even if only very temporarily.

Architecture

The basic architecture of Penryn is familiar to anyone who's followed processor design over the past ten years. Instructions are fetched in from memory (hopefully cache) in as big a chunk as possible, to minimise slow bus transactions. The first step once they're on-chip is pre-decode, where the chunk is broken down into the individual instructions and their length determined. The instructions are then pushed into a 64-byte deep queue, which acts as a buffer that absorbs temporary delays between fetching and decoding; in general, this proceeds at four instructions per clock cycle.

Penryn's basic architecture is unchanged from the previous 65nm processor generation.

The processor then works out how to allocate its internal resources to the instructions. It has a big pool of registers — many more than the standard register set that the programmer knows about — and allocates these so that, as far as possible, data doesn't need to be moved between them. It's often possible, for example, to spot a case where the program takes the contents of the EAX register and moves them to ECX, then overwrites EAX with other data. It's quicker to internally rename the EAX register to ECX while loading a third unnamed register with the new data, and then rename that register to EAX: that saves one complete data move.
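The EAX/ECX example can be modelled with a small rename table. This is only a sketch of the idea: the class, its method names and the pool size are hypothetical, and a real processor also reclaims physical registers once instructions retire, which is left out here.

```python
class RenameTable:
    """Maps the programmer-visible register names onto a larger physical pool."""

    def __init__(self, arch_regs, num_physical):
        self.physical = [0] * num_physical
        self.mapping = {name: i for i, name in enumerate(arch_regs)}
        self.free = list(range(len(arch_regs), num_physical))
        self.physical_writes = 0

    def read(self, reg):
        return self.physical[self.mapping[reg]]

    def load(self, dst, value):
        # New data goes into a fresh, unnamed physical register, which then
        # takes over the architectural name. The old register is untouched,
        # so any other name still pointing at it keeps its value.
        new = self.free.pop(0)
        self.physical[new] = value
        self.mapping[dst] = new
        self.physical_writes += 1

    def move(self, dst, src):
        # "mov dst, src" is just a table update: no data is copied.
        self.mapping[dst] = self.mapping[src]


table = RenameTable(["EAX", "ECX"], num_physical=8)
table.load("EAX", 111)     # EAX holds some data
table.move("ECX", "EAX")   # mov ecx, eax: rename only
table.load("EAX", 222)     # overwrite EAX with other data
print(table.read("ECX"), table.read("EAX"), table.physical_writes)  # 111 222 2
```

Only the two loads touch a physical register; the move between EAX and ECX costs nothing but a table entry.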

That example also demonstrates out-of-order execution, where the processor spots instructions that aren't dependent on previous events and can be run using otherwise unused internal resources. This is efficient, but produces results in a different order to that expected by the program: these results are held in a reorder buffer, which holds the results until they're ready to be returned. Then, the retirement unit sorts out which results are due out in what order, and unloads them appropriately.
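A reorder buffer and its in-order retirement can be sketched in a few lines. The class and method names here are hypothetical, and the model ignores exceptions and branch mispredictions; it only shows results finishing in any order but leaving in program order.

```python
from collections import OrderedDict


class ReorderBuffer:
    """Holds results until every older instruction has also finished."""

    def __init__(self):
        self.entries = OrderedDict()  # sequence number -> (done, result)

    def issue(self, seq):
        # Entries are allocated in program order.
        self.entries[seq] = (False, None)

    def complete(self, seq, result):
        # Execution units may finish in any order.
        self.entries[seq] = (True, result)

    def retire(self):
        # Release finished results from the head only, stopping at the first
        # instruction that is still executing.
        retired = []
        while self.entries:
            seq, (done, result) = next(iter(self.entries.items()))
            if not done:
                break
            del self.entries[seq]
            retired.append((seq, result))
        return retired


rob = ReorderBuffer()
for seq in range(3):
    rob.issue(seq)

rob.complete(2, "c")
print(rob.retire())  # []  (instruction 2 is done, but 0 and 1 are not)
rob.complete(0, "a")
print(rob.retire())  # [(0, 'a')]
rob.complete(1, "b")
print(rob.retire())  # [(1, 'b'), (2, 'c')]
```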

Another unit, the memory order buffer, holds data loads that may have relations to other loads or stores, and checks what dependencies exist. If the data is going to memory-mapped I/O and depends on information being stored, it doesn't dispatch the load before the store completes. If the load references the store address, it forwards the result from the store. If there are no dependencies, the load can be dispatched ahead of the store. That gives some large advantages, as it avoids the delays of going off-chip, a process chip designers regard as expensive.
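The three cases can be written out as a small decision function. This is a simplified sketch rather than Intel's logic: the names are hypothetical, addresses are compared exactly (real hardware must also handle partial overlaps), and any pending store is assumed to block a memory-mapped I/O load.

```python
from dataclasses import dataclass


@dataclass
class PendingStore:
    address: int
    value: int


def resolve_load(load_address, pending_stores, is_mmio=False):
    """Decide what to do with a load while older stores are still in flight.

    Returns an (action, value) pair; value is only set when forwarding.
    """
    # Case 1: memory-mapped I/O must not overtake stores it may depend on.
    if is_mmio and pending_stores:
        return ("wait", None)
    # Case 2: the load reads an address an older store is about to write,
    # so take the data straight from the youngest matching store.
    for store in reversed(pending_stores):
        if store.address == load_address:
            return ("forward", store.value)
    # Case 3: no dependency, so the load can go ahead of the stores.
    return ("dispatch_early", None)


stores = [PendingStore(0x1000, 7), PendingStore(0x2000, 9)]
print(resolve_load(0x2000, stores))                # ('forward', 9)
print(resolve_load(0x3000, stores))                # ('dispatch_early', None)
print(resolve_load(0x3000, stores, is_mmio=True))  # ('wait', None)
```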

Penryn also keeps a history table of how previous data loads behaved and whether they had a dependency the last time they were executed. This is part of the disambiguation unit, which decides ahead of time whether a particular action is likely to need an expensive memory access or not. This doesn't always work, and when it doesn't, the effects can be quite dramatic: the entire internal pipeline has to be reloaded to a previous state, which Intel engineers call 'nuking the pipeline'. Even so, it does compensate to some extent for the chip's reliance on a frontside bus and the lack of an on-board memory controller.
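A last-outcome history table is one way to picture this prediction. The sketch below is an assumption-laden toy: the names are hypothetical, the table remembers only the most recent outcome per load, and unknown loads are optimistically treated as safe, none of which is confirmed as Penryn's actual policy.

```python
class LoadHistoryTable:
    """Remembers, per load instruction, whether it had a dependency last time."""

    def __init__(self):
        self.had_dependency = {}  # address of the load instruction -> bool

    def predict_safe(self, load_pc):
        # Loads never seen before are assumed safe to run early (an assumption).
        return not self.had_dependency.get(load_pc, False)

    def record(self, load_pc, depended):
        self.had_dependency[load_pc] = depended


def run_load(table, load_pc, actually_depends):
    """Return 'early', 'wait', or 'flush' for one execution of a load."""
    speculated = table.predict_safe(load_pc)
    table.record(load_pc, actually_depends)
    if speculated and actually_depends:
        return "flush"  # wrong guess: the pipeline is 'nuked' and replayed
    return "early" if speculated else "wait"


table = LoadHistoryTable()
outcomes = [run_load(table, 0x40, depends) for depends in (True, True, False, False)]
print(outcomes)  # ['flush', 'wait', 'wait', 'early']
```

The first misprediction is expensive, but the table learns from it; once the dependency disappears, one cautious pass later the load is allowed to run early again.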

Architectural tweaks

During the design of Penryn, Intel tweaked this predictive behaviour primarily for power consumption, again resulting in a far more relaxed thermal envelope when going for speed increases compared to previous designs. This also allows a trick that Intel calls Enhanced Dynamic Acceleration Technology, known to the rest of us as overclocking. If one core in a multicore design is quiescent, there's enough slack in the power budget for the entire chip for the other core to be accelerated past its normal limits. That's particularly useful for single-threaded applications, which still make up the bulk of software.

Enhanced Dynamic Acceleration Technology allows an idle core to be powered down and an active core to be overclocked.

Power savings come with performance enhancements in other ways too. The chip can spot when loops habitually generate cache misses, for example, and does a speculative pre-fetch ahead of time to populate the cache with the data in case it happens again. That's tied in with a dynamically resized window that trades off memory bandwidth against latency. If the memory bus is quiet, the window increases to soak up the spare bandwidth just in case; if the bus is very busy, the chip fetches less to reduce the load, at the risk of having to go back if it misses data that's needed later.
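The window resizing might look something like the sketch below. Everything specific in it is an assumption made for illustration: the function name, the utilisation thresholds, the doubling and halving policy and the window limits are not published Penryn parameters.

```python
def next_window(window, bus_utilisation, low=0.3, high=0.8,
                min_window=1, max_window=16):
    """Pick the next prefetch window size (in cache lines) from bus load.

    bus_utilisation is the fraction of memory bus bandwidth in use, 0.0 to 1.0.
    """
    if bus_utilisation < low:
        # Quiet bus: fetch further ahead to soak up spare bandwidth.
        return min(window * 2, max_window)
    if bus_utilisation > high:
        # Busy bus: fetch less, accepting a higher risk of a later miss.
        return max(window // 2, min_window)
    return window


window = 4
for load in (0.1, 0.1, 0.9, 0.5):
    window = next_window(window, load)
    print(window)  # prints 8, 16, 8, 8 on successive lines
```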

Lots of this behaviour is fine tuned in the microcode, the internal program that defines how the different parts of the processor work together. This can be configured differently for different parts, with different trade-offs made for mobile and server parts. This is entirely invisible to the operating system and applications that run on the chip, but makes a difference to performance and power consumption.

There are plenty of straightforward design tweaks too. For example, Intel's basic mathematical divider circuit has remained unchanged since the original Pentium: it works by long division, and acts on two bits of the quotient at a time. That's been upped to four bits for the Penryn divider, which effectively doubles the speed. Also, processors are notoriously bad at handling data that's not nicely stored in memory, that is, data that doesn't start and stop on convenient memory boundaries. This misalignment needs a lot of juggling to be efficiently handled, and Penryn's designers spent a lot of time tuning the chip to work well with what they call 'junk code'.
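The effect of handling more quotient bits per step can be seen in a plain long-division sketch. This is not Intel's circuit: the function is hypothetical, it works on unsigned integers only, and it finds each quotient digit by repeated subtraction where hardware would select it in a single step.

```python
def long_divide(dividend, divisor, bits_per_step, width=32):
    """Unsigned long division producing bits_per_step quotient bits per iteration.

    Returns (quotient, remainder, iterations).
    """
    if divisor == 0:
        raise ZeroDivisionError("division by zero")
    if width % bits_per_step != 0:
        raise ValueError("width must be a multiple of bits_per_step")

    mask = (1 << bits_per_step) - 1
    quotient = remainder = iterations = 0
    for shift in range(width - bits_per_step, -1, -bits_per_step):
        # Bring down the next group of dividend bits.
        remainder = (remainder << bits_per_step) | ((dividend >> shift) & mask)
        # Work out this step's quotient digit.
        digit = 0
        while remainder >= divisor:
            remainder -= divisor
            digit += 1
        quotient = (quotient << bits_per_step) | digit
        iterations += 1
    return quotient, remainder, iterations


print(long_divide(1000, 7, bits_per_step=2))  # (142, 6, 16)
print(long_divide(1000, 7, bits_per_step=4))  # (142, 6, 8)
```

Both calls give the same answer, but the four-bit version needs half as many iterations for a 32-bit operand, which is where the doubling in speed comes from.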

Virtualisation performance is also improved, by the chip maintaining a better internal track of the states of the virtual machines and maintaining a more precise control of just those parts of the circuit that need to change when virtual states are entered and exited. Intel says that performance is better by between 25 and 75 percent for some instructions, although the company is still working out exactly how to benchmark virtualisation.

SSE4

Other apparently major improvements are so specialised that they're only of interest to a particular segment of the market. Penryn has 47 new multimedia instructions as part of its SSE4 extensions; these are designed to treat large arrays of data in a single go, and bring many new ways to compare, shuffle, compute, merge and store the sort of information found in video and imaging applications.

Penryn's 47 new SSE4 instructions will mostly benefit video and imaging applications.

In particular, Intel says, this brings big benefits for video compression, where the chip is exceptionally good at analysing streams for motion estimation. This extracts features from the stream and tracks the way they move from frame to frame, and is a very significant factor in making low-bandwidth, low-latency, high-quality video. The additions in Penryn will have the most impact on high definition video; outside that area, though, they will have few applications.
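Motion estimation is commonly done by block matching: take a block from the current frame and search the previous frame for the position where it fits best. The sketch below uses the sum of absolute differences as the matching cost, which is a standard choice and one that SSE4 accelerates with a dedicated instruction, although nothing here says which method Intel's own figures rely on. The function names and the tiny test frames are made up for illustration.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized pixel blocks."""
    return sum(abs(a - b)
               for row_a, row_b in zip(block_a, block_b)
               for a, b in zip(row_a, row_b))


def crop(frame, top, left, size):
    return [row[left:left + size] for row in frame[top:top + size]]


def best_motion_vector(prev_frame, cur_frame, top, left, size, search):
    """Find where the block at (top, left) in cur_frame came from in prev_frame.

    Returns (cost, dy, dx) for the best match within +/- search pixels.
    """
    target = crop(cur_frame, top, left, size)
    height, width = len(prev_frame), len(prev_frame[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + size > height or x + size > width:
                continue  # candidate block falls outside the frame
            cost = sad(target, crop(prev_frame, y, x, size))
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best


def frame_with_block(top, left):
    """A 6x6 black frame with a bright 2x2 block at (top, left)."""
    frame = [[0] * 6 for _ in range(6)]
    for y in range(top, top + 2):
        for x in range(left, left + 2):
            frame[y][x] = 9
    return frame


prev_frame = frame_with_block(1, 1)
cur_frame = frame_with_block(2, 3)
print(best_motion_vector(prev_frame, cur_frame, top=2, left=3, size=2, search=2))
# (0, -1, -2): the block moved one pixel down and two to the right
```

The inner loop is the same small calculation repeated over a large array of pixels, which is exactly the sort of work these instructions are designed to handle in a single go.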

Conclusion

Penryn is faster. It's also better at power consumption — a lot better. But perhaps the most important aspect of the design is that it demonstrates continual improvement compatible with the existing code base, with plenty of opportunities for the future. It has also been delivered to spec, on time and in quantity.

Intel's tick-tock design rule may yet have problems of its own in the future, due to its very rigid nature and far-reaching predictive power. But for now it seems to be performing so well that the problems it brings are entirely for the competition.