Meet Mycroft, the open source AI who wants to rival Siri, Cortana, and Alexa

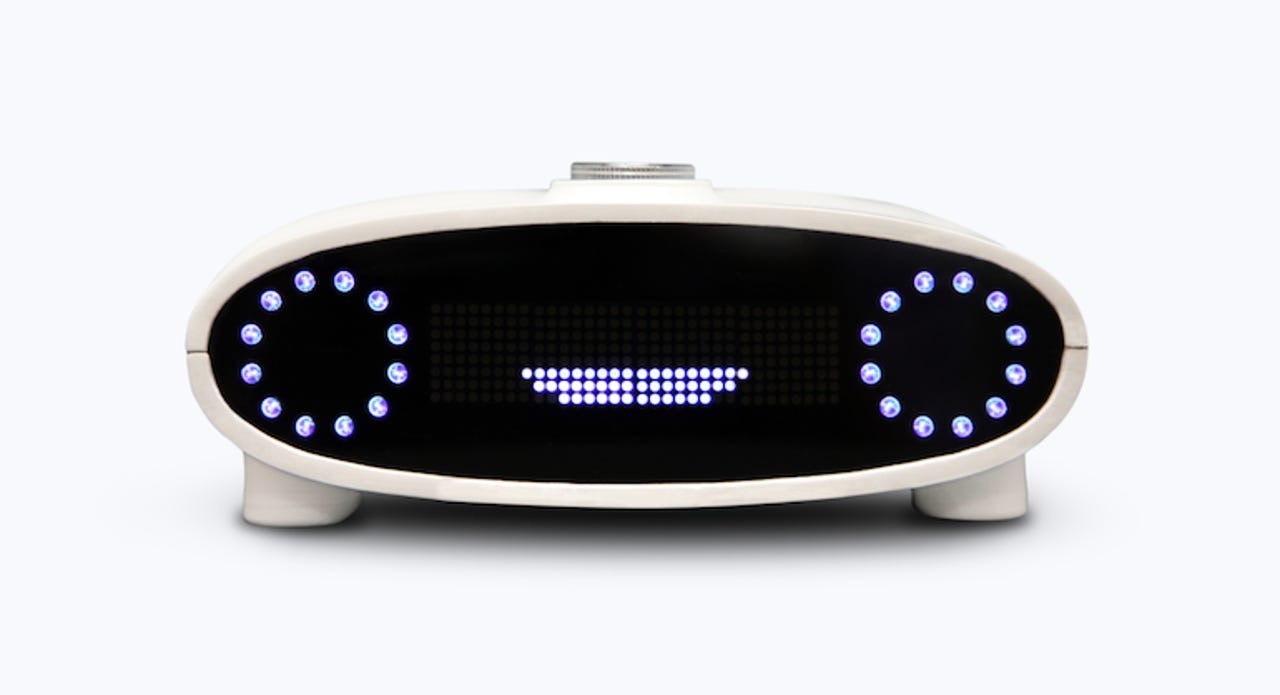

The open source reference device that's the face of the Mycroft AI platform.

It might bear a passing resemblance to ET, but Mycroft's smart home system is more than a pretty face: it's an attempt to define what it means to be human through technology.

The first seeds of the open source home hardware AI platform that was to become Mycroft came to Joshua Montgomery during a refit of a Kansas maker-and-entrepreneurship space he was setting up. Montgomery wanted the building to have the same abilities as the systems seen in classic sci-fi films and series.

"It was inspired by the Star Trek computers, by Jarvis in Iron Man," Montgomery told ZDNet. He wanted to create the type of artificial intelligence platform that "if you spoke to it when you walked in the room, it could control the music, control the lights, the doors" and more.

After investigating the state of open-source voice control systems on which he could build the platform, Montgomery found there was work to be done. "It turned out there was a complete void. Open source software doesn't have an equivalent to Siri, Alexa, or Cortana," Montgomery said.

Last year, after Montgomery had built the AI system and set up a company to commercialise it, the team decided to seek crowdfunding. But, as "software projects seldom get funded on Kickstarter," said Montgomery, the company decided to build a reference device to go with its software platform: a hub device based on Raspberry Pi 3 and Arduino.

If the industrial design looks a bit familiar, there's a reason for that: the device was inspired by friendly robots and other science-fiction staples.

"We wanted it to give realistic cues when you're relating to it... We wanted it to be friendly, to be different to the very vanilla, very plain systems" already out there, Montgomery, who is Mycroft's CEO, said.

As well as being rather sweet, the reference device was intended to attract developers by giving them a stable hardware platform to work on. "We made the reference device so developers would know they had a good speaker and a good microphone. It helps [developers] to have apples-to-apples comparisons, so they can focus on solving software problems, not on finding what microphone works," Montgomery said.

The company's Kickstarter campaign took off: it raised over $127,000, and a subsequent Indiegogo campaign generated another $160,000. The reference devices began shipping to the first buyers at the start of April, and all backers should get their devices by July.

The system is powered by Snappy Core Ubuntu, and is now composed of four software parts: the Adapt Intent Parser, which converts the natural language commands of users into data the system can act on; the Mimic Text to Speech engine; Open Speech to Text; and the Mycroft Core to integrate the parts.
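To make the division of labour more concrete, the sketch below shows, in miniature, the kind of job an intent parser does: it takes text (as if already transcribed by a speech-to-text engine), turns it into structured data naming an intent, and hands that data to a "skill" that produces a reply for a text-to-speech engine to speak. This is a hypothetical illustration that assumes simple keyword matching. The class and function names (`Intent`, `parse_intent`, `respond`) and the example intents are invented for this article; they are not Mycroft's or Adapt's actual API or algorithm.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Hypothetical illustration only: these names are NOT Mycroft's or Adapt's API.


@dataclass
class Intent:
    name: str
    required: set[str]  # keywords that must all appear in the utterance
    optional: set[str] = field(default_factory=set)  # extra keywords raise the score


def parse_intent(utterance: str, intents: list[Intent]) -> dict | None:
    """Turn transcribed text into structured data for the best-matching intent."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best = None
    for intent in intents:
        if intent.required <= words:  # all required keywords are present
            score = len(intent.required) + len(intent.optional & words)
            if best is None or score > best[0]:
                best = (score, intent)
    if best is None:
        return None
    intent = best[1]
    matched = (intent.required | intent.optional) & words
    return {"intent": intent.name, "keywords": sorted(matched)}


# Hypothetical "skills": each handler receives the parsed data and returns
# text that a text-to-speech engine would then speak aloud.
def handle_weather(data: dict) -> str:
    day = "tomorrow" if "tomorrow" in data["keywords"] else "today"
    return f"Here is the weather forecast for {day}."


def handle_coffee(data: dict) -> str:
    return "Switching on the coffee machine."


INTENTS = [
    Intent("WeatherIntent", {"weather"}, {"today", "tomorrow"}),
    Intent("CoffeeIntent", {"coffee"}, {"switch", "on", "start"}),
]
HANDLERS = {"WeatherIntent": handle_weather, "CoffeeIntent": handle_coffee}


def respond(utterance: str) -> str:
    """Route one utterance to a skill, or fall back if no intent matches."""
    data = parse_intent(utterance, INTENTS)
    if data is None:
        return "Sorry, I didn't understand that."
    return HANDLERS[data["intent"]](data)


print(respond("Switch on the coffee machine"))  # Switching on the coffee machine.
```

Keeping the parser separate from the skills is what lets outside developers add new abilities: in this sketch, a new skill is just one more `Intent` entry and one more handler, with no changes to the speech components.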

The system's cheeky face can speak to you to deliver updates on the weather and news, and can take voice commands to control other internet-enabled devices around the home or office -- switch on the coffee machine, for example, or stream a particular music playlist.

The Mycroft platform started off life intended to help people voice-control their thermostat, manage their calendar, and lock the door. But, due to its open source nature, developers have taken the system and run with it, adding all sorts of skills to the system.

One developer, who was going to visit his young Pokémon-fanatic cousin, was able to plug a Pokémon wiki into the system. "It became an expert on all things Pikachu," Montgomery said. Mycroft will also tell a raft of Chuck Norris jokes, thanks to the work of one developer and fan of the martial arts star.

So far, so cute. But Mycroft has a bigger aim in mind: to make a strong AI system capable of replicating human skills. The company wants to create an AI that makes it "difficult, if not impossible, to tell if you're speaking to a computer or not... we're making an AI that's as real as a person".

In order to do that, Mycroft is breaking down what it means to be human into various skill sets, and then training the AI platform to learn them, much in the same way a baby learns to understand language, and is then able to speak a few words, put sentences together, and comprehend object permanence as it grows up.

"The idea is that we break up what a person is into discrete skills and develop software that simulates them. It's a hugely resource intensive process," says Montgomery. That's one of the reasons why the company pursued an open source ethos -- to build a community of committed developers who can work on expanding the AI's skills. Currently, Mycroft has dozens of developers involved (the Mycroft Core is available on GitHub) and hopes to raise that number to a thousand before too long.

Mycroft is also supporting open source with the hope that AI, likely to be one of the most significant technologies of the coming decades, isn't the preserve of the usual tech behemoths.

"You point at things and grunt -- that's where we've been stuck since the 1970s [with UIs]. Now we're launching into a new era where computers talk in natural language. Companies need to not be held hostage to giant tech companies when they deploy these technologies," Montgomery said.

While the likes of Facebook, Google, and Apple have been investing in 'weak' AIs -- systems that use their intelligence for a particular task or set of tasks -- Mycroft is one of the smaller number of companies looking to build strong AI with human-level capabilities and intelligence. A true strong AI has yet to be built, and some believe it never will be.

For Montgomery, however, it's a matter of time and technology. AIs are already able to replicate humans if you're talking about two-month-old babies, he points out, yet they're a long way off being able to rival a fully-grown college graduate.

The CEO believes we may be another 15 years away from a true strong AI, and aims to have Mycroft "demonstrate something approximate to a human" on the TED stage of the AI XPRIZE in 2020.

On the way to the 2020 goal, the company is working on incremental upgrades to the Mycroft platform: improving the cadence of the system's voice to make it sound less robotic, and working on other skills around speech synthesis to make it sound more like a human and less like a computer. It also hopes to expand the range of languages the system can process beyond English to the likes of Spanish, Arabic, and the Indic languages.

For now, however, there are no plans to bring a Mycroft hub Mark II to the market. "The reference device is the only hardware we're planning at the moment," Montgomery said, but added that the company would be happy to work with a hardware partner on a Mycroft-based Amazon Echo style device, or in-car system. "Automobile [AI] systems are the most demanded features, the most complained about, and the least used," he said. "We'd love to do something there."