Microsoft shows off Brainwave 'real-time AI' platform on FPGAs

On August 22, Microsoft unveiled (again) its Project Brainwave deep-learning acceleration platform for real-time artificial intelligence (AI).

(I say again, because Microsoft has talked about Brainwave before -- at least a couple times in 2016. This time the Brainwave unveiling happened at Hot Chips 2017 this week.)

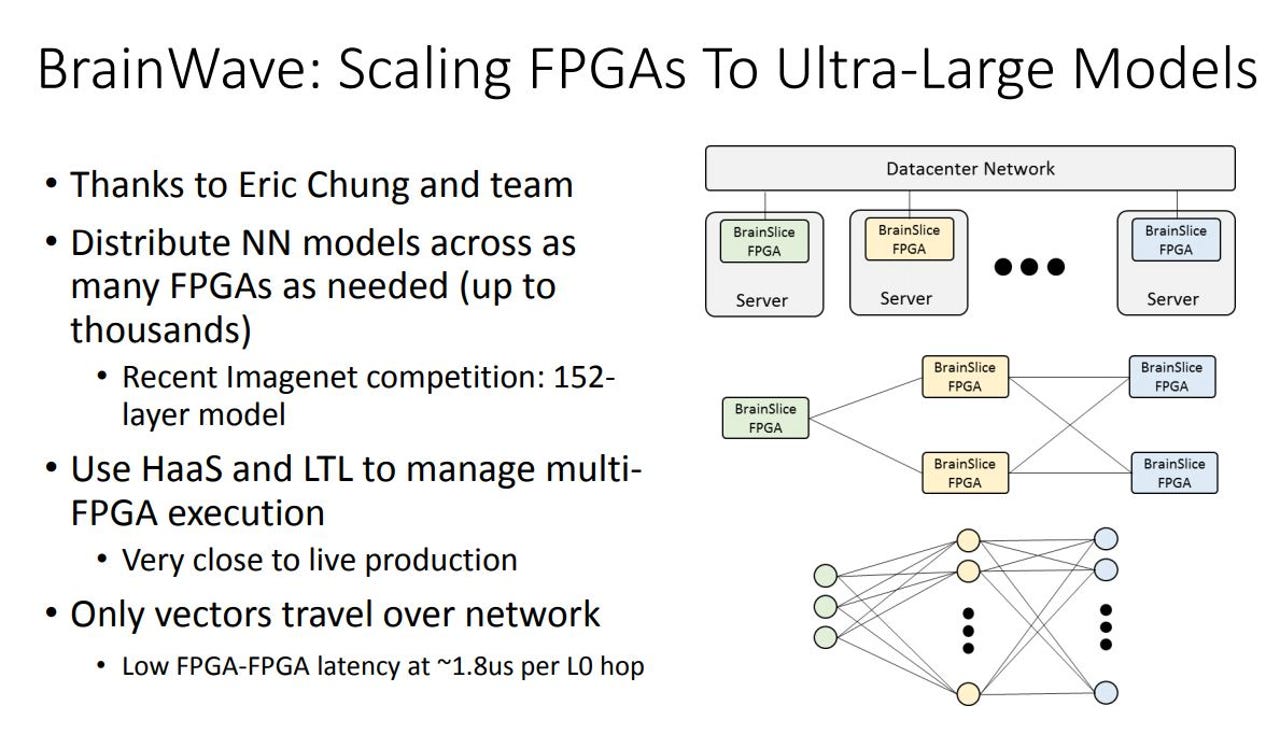

Brainwave consists of a high-performance distributed system architecture; a hardware deep-neural-network engine running on customizable chips known as field-programmable gate arrays (FPGAs); and a compiler and runtime for deployment of trained models, according to today's Microsoft Research blog post.

If you want a slightly less buzzword- and acronym-laden picture of what this looks like, this might help:

As I blogged last month, Brainwave is a deep-learning platform running on FPGA-based Hardware Microservices, according to a Microsoft presentation on its configurable-cloud plans from 2016. That presentation mentions "Hardware Acceleration as a Service" across datacenters or the Internet. Brainwave distributes neural-network models across as many FPGAs as needed.

As I noted late last month, Microsoft officials were planning to discuss Brainwave at the company's recent Faculty Research Summit in Redmond in July, but changed their minds.

At Hot Chips 2017, Microsoft officials said that using Intel's new Stratix-10 chip, Brainwave achieved sustained performance of 39.5 teraflops without batching. Microsoft's point: Brainwave will enable Azure users to run complex deep-learning models at this level of performance.
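
For readers wondering why "without batching" matters for real-time AI, here is a rough back-of-the-envelope sketch in Python. Only the 39.5-teraflop figure comes from Microsoft's presentation; the model size, the request rate, the evenly spaced arrivals and the function names are all hypothetical assumptions of mine, and the arithmetic is idealized rather than a description of how Brainwave actually schedules work.

```python
# Illustrative arithmetic only. Everything except the 39.5 TFLOPS figure
# is a made-up assumption, not a Microsoft number.

SUSTAINED_FLOPS = 39.5e12  # sustained figure quoted at Hot Chips 2017


def compute_time_s(flops_per_request: float, batch_size: int = 1) -> float:
    """Idealized time to compute one batch at the sustained rate."""
    return flops_per_request * batch_size / SUSTAINED_FLOPS


def worst_case_latency_s(flops_per_request: float, batch_size: int,
                         arrivals_per_s: float) -> float:
    """Latency seen by the first request placed in a batch.

    Assuming evenly spaced arrivals, it waits for (batch_size - 1) more
    requests to show up, then for the whole batch to be computed.
    With batch_size == 1 the waiting term is zero.
    """
    fill_wait = (batch_size - 1) / arrivals_per_s
    return fill_wait + compute_time_s(flops_per_request, batch_size)


if __name__ == "__main__":
    model_flops = 1e9  # hypothetical: 1 GFLOP of work per request
    rate = 1000.0      # hypothetical: 1,000 requests per second
    for batch in (1, 8, 64):
        ms = worst_case_latency_s(model_flops, batch, rate) * 1e3
        print(f"batch={batch:>2}: worst-case latency ~ {ms:.3f} ms")
```

Under these assumptions, the time spent waiting for a batch to fill dwarfs the compute time, which is why a high sustained rate at a batch size of one is the interesting number for latency-sensitive services.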

Here's a new architectural diagram from Microsoft's Hot Chips presentation showing the components of Brainwave:

Microsoft sees Brainwave, running on hardware microservices, as pushing the boundary of the types of AI-influenced services it is possible to deploy in the cloud, including computer vision, natural-language processing and speech.

Microsoft officials have said they will make FPGAs available to external developers via Azure in calendar 2018.

Microsoft is not the only company looking to FPGAs in its cloud datacenters; both Amazon and Google are using custom-built silicon for AI tasks.
